Implementation of a Software Interface Layer Between Model-Based-Design Tool and Embedded Graphic Frameworks

Total Pages: 16

File Type: pdf, Size: 1020 KB


Recommended publications


Company Brochure

Accord Global Technology Solutions Pvt. Ltd. Accord Global Technology Solutions Private Limited (AGTSPL) is part of the Accord Group of companies with primary focus on Aerospace, Embedded, Automotive and Enterprise products and engineering services. Accord Group was established in 1991 by five technologists who have worked in the field of Avionics and real time embedded systems over several decades. Over the years, Accord Group has charted an impressive record of accomplishment in customer satisfaction, innovation, sales, and profitability. The success of the group has been made possible with continuous enhancement of Competence in Technology, Quality Processes, Project Management and Customer Focus. VISION: To build the best Engineering Brand in the world. MISSION: We shall provide innovative Products and Services to our customers in the field of Aerospace, Automotive, Embedded and other Safety Critical Systems including Commercial Systems. We shall strive to be the most trusted partner to our customers, consistently exceeding the expectations through Innovation, Commitment and Passion for Excellence. VALUES: Nimble, Integrity, Fairness, Social Responsibility, Innovation, Reliability & Safety, Passion, Excellence. QUALITY, Awards & Recognitions: ISO 9001:2015; AS9100:2016 Rev D; CMMI Level 5 V1.3; Defence Technology Award from the Government of India, awarded by the Prime Minister of India. Our range of Products & Services: Autosar/ Aerospace Body Control Products Module (BCM) ISO-26262 ATE/ Staff

Branchenführer Embedded Software Engineering 2019

Branchenführer Embedded Software Engineering 2020. A joint publication. • Software development solutions for Arm • IDEs, compilers, debuggers, debug adapters • Performance analysis for code and graphics • Software development support for Arm IP. Accelerate the software development of embedded applications. Company profile: Arm technology is at the heart of a revolution in computing and connectivity that is changing the way people live and the way businesses operate. Our advanced, energy-efficient processor designs have enabled intelligent computing in more than 130 billion chips, and our technologies power products from the sensor to the smartphone to the supercomputer. In combination with our IoT platform for devices, connectivity and data management, we give our customers powerful and actionable business insights that create new value from their connected devices and data. Together with more than 1,000 technology partners, we lead in the design, security and management of all areas of compute from the chip to the cloud. ... Studio supports development for every Arm architecture, and Keil MDK was developed specifically for building and testing embedded applications on Arm-based microcontrollers. Current topics 2020: The Internet of Things demands a high level of security in the end nodes, which poses a new challenge for software developers. PSA is a step-by-step guide to reaching the right level of security, reducing the risk to data reliability, and giving companies the opportunity to innovate with new ideas. The open-source Trusted Firmware for Cortex-M (TF-M) implements the software services described in PSA. It offers the software developer a trusted execution environment

Composition and Construction of Embedded Software Families

Composition and Construction of Embedded Software Families. Dissertation for the academic degree of Doktoringenieur (Dr. Ing.), accepted by the Fakultät für Informatik of the Otto-von-Guericke-Universität Magdeburg, by Dipl.-Inform. Danilo Beuche, born 2 December 1968 in Nauen. Reviewers: Prof. Dr. Wolfgang Schröder-Preikschat, Prof. Dr. Reiner Dumke, Prof. Dr. Jörg Nolte. Doctoral colloquium: Magdeburg, 19 December 2003. Acknowledgements: Finishing this dissertation would have been impossible without the help of many persons. I would like to express my gratitude to all of them. However, it is impossible to name all of my voluntary and sometimes involuntary helpers. My special thanks go to Wolfgang Schröder-Preikschat, who gave me opportunity and room to develop my own ideas. His research group at Otto-von-Guericke-Universität Magdeburg was the perfect place for me and my research project. Besides my advisor, I have to thank my colleagues Guido Domnick, Andreas Gal, Jens Lauterbach, André Maaß, Daniel Mahrenholz, and Michael Schulze from this group. They frequently had to use the Consul program, which is a substantial result of this thesis. I am grateful that they kept sending bug reports instead of deleting it from the disk right away when it still did not work as expected after the “final” bug fix. Olaf and Ute Spinczyk, who were my first colleagues in the group, not only had to live with my chaos for about five years, they were also first-time readers of my drafts. Their comments and also their belief in this work were invaluable. Frank Behrens, who always tried to motivate me, is hopefully pleased with the result of his efforts.

Release Notes - Please Read Carefully

TARA Systems Release Notes - please read carefully - Version 8.00 26 September 2016 Authors: Dipl. Ing. Paul Banach, Dipl. Ing. Manfred Schweyer Copyright © TARA Systems GmbH www.embedded-wizard.de Release Notes: The following chapters contain a complete version history of Embedded Wizard and describe all news and changes. Please read carefully. The following versions of Embedded Wizard have been released: V8.00 (preview release), V7.10, V7.02 (preview release), V7.00 (preview release), V6.60, V6.51, V6.50, V6.41, V6.40, V6.30, V6.20, V6.10, V6.00, V5.40, V5.30, V5.20, V5.10, V5.00, V4.40, V4.30, V4.20, V4.11, V4.10, V4.01, V4.00, V3.30, V3.20, V3.00, V2.30, V2.20, V2.10, V2.00, V1.01, V1.00, V0.92, V0.91. Embedded Wizard V8.00 (preview release): This version is a preview release. This means that all new features and improvements are completed and well tested – just the documentation is not completed.

Magazine January/February 2006 • an Idbma, Inc

INSIDE! CELEBRATING 25 YEARS - A LOOK BACK AT SPECTRUM'S MOST FAMOUS SPEAKERS. $7.00 U.S. INTERNATIONAL SPECTRUM®, THE BUSINESS COMPUTER MAGAZINE, JANUARY/FEBRUARY 2006 • AN IDBMA, INC. PUBLICATION. Ajax: Making the Web More Interactive. What Ajax Is, What It Isn’t, and Why It Matters. PLUS! Improving the Bottom Line With Business Intelligence. MAKING THE WEB MORE INTERACTIVE: Understanding the Real Benefits of the Ajax Technology. A clear picture of what Ajax is, what it isn't and why it matters. BY MELVIN SORIANO. MULTIVALUE REPORTING AND BUSINESS INTELLIGENCE: One of the strengths of MultiValue systems is the rich, expressive query environment that allows users to extract the detail and summary transaction information needed to effectively run the business. But what happens when we want simpler access to operational data and quick export to spreadsheets, or trending and drill-down analysis to improve the business? BY ROSS MORRISSEY. WHY VISUAL STUDIO .NET? As an IT professional in charge of a MultiValue system, you are probably wondering about the impact, if any, that the latest release of .NET will have on the work that you do and the decisions that you make. This article demystifies the new features and tells why some of them are important to you. BY ROBERT HOUBEN. IS YOUR DATA SECURITY ADEQUATE? Did you know that the weakest part of a security system is the user? All MultiValue databases provide tools to protect your data, but many of them aren’t being used or applied to their full advantage.

IDOL Connector Framework Server 12.2 Administration Guide

Connector Framework Server Software Version 12.2 Administration Guide. Document Release Date: February 2019. Software Release Date: February 2019. Legal notices. Copyright notice: © Copyright 2019 Micro Focus or one of its affiliates. The only warranties for products and services of Micro Focus and its affiliates and licensors (“Micro Focus”) are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. Micro Focus shall not be liable for technical or editorial errors or omissions contained herein. The information contained herein is subject to change without notice. Documentation updates: The title page of this document contains the following identifying information: Software Version number, which indicates the software version; Document Release Date, which changes each time the document is updated; Software Release Date, which indicates the release date of this version of the software. You can check for more recent versions of a document through the MySupport portal. Many areas of the portal, including the one for documentation, require you to sign in with a Software Passport. If you need a Passport, you can create one when prompted to sign in. Additionally, if you subscribe to the appropriate product support service, you will receive new or updated editions of documentation. Contact your Micro Focus sales representative for details. Support: Visit the MySupport portal to access contact information and details about the products, services, and support that Micro Focus offers. This portal also provides customer self-solve capabilities. It gives you a fast and efficient way to access interactive technical support tools needed to manage your business.

Introduction to Software Engineering

Introduction to Software Engineering, edited by R.P. Lano (Version 0.1). Table of Contents: Introduction to Software Engineering; Introduction; Preface; Introduction; History; References; Software Engineer; Overview; Education; Profession; Debates within

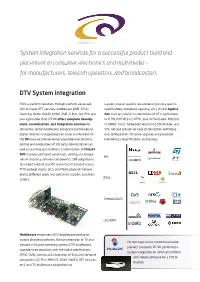

System Integration Services for a Successful Product Build

System integration services for a successful product build and placement in consumer electronics and multimedia - for manufacturers, telecom operators, and broadcasters. DTV System integration: From a platform selection, through SDK of major OTT services, middleware (DVB, ATSC) featuring: DLNA, HbbTV, MHEG, PVR, CI Plus, to HTML and Java application level, RT-RK offers complete development, customization, and integration services for connected, content protected, broadcast and broadband digital receivers and gateways on Linux and Android. On the OS level we provide kernel adaptations/extensions, porting and integrations of 3rd party devices/drivers as well as real time optimizations. Customization of Chipset SDK includes porting of peripherals, porting and integration of 3rd party software components, SDK adaptations to support product specific requirements (second screen, IPTV protocol stacks, etc.), and Media playback improvements (different codec and containers support, synchronization). Middleware encounters: DTV middleware porting on various chipsets/platforms, Native integration of TV middleware in Android operating system, DTV middleware upgrade to be compliant with the latest specifications (ATSC, DVB), porting and integration of 3rd party software components (CI Plus, MHEG-5, DLNA, HbbTV, OTT services, platform depended support product specific requirements (country specific specifications, broadcast signaling, etc.). On the Application level we provide customization of DTV applications in: HTML4/HTML5/CE-HTML, Java UI framework, Maestro UI toolkit, Tara’s Embedded Wizard and OSD Builder, and SVG. We also provide services of connection with head-end: configuration, firmware upgrade, and parameter monitoring; precertification, and testing. For the major Swiss telecommunication provider Swisscom, RT-RK performed a system integration on a Marvell platform with iWedia software for a STB on Android.

Revvis-VV-Methods-And-Tools V20

INPE-15648-NTC/377 SOFTWARE VERIFICATION AND VALIDATION METHODS AND TOOLS. Ana Maria Ambrosio, Nuno Silva* (*Critical Software S.A.). INPE, São José dos Campos, 2008. Published by: Instituto Nacional de Pesquisas Espaciais (INPE), Gabinete do Diretor (GB), Serviço de Informação e Documentação (SID), Caixa Postal 515, CEP 12.245-970, São José dos Campos, SP, Brasil. Tel.: (012) 3945-6911 Fax: (012) 3945-6919. This page is the responsibility of SID. We ask for exchange. External publication: reproduction is permitted for interested parties. REVVIS PROJECT. REVVIS – Reunião de Especialistas em Verificação e Validação de Software. Working Group: Benchmarking of software V&V methods and tools. Authors: Ana Maria Ambrosio, Instituto Nacional de Pesquisas Espaciais (INPE), Brasil; Nuno Silva, Critical Software S.A., Portugal. Contributors: Paulo C. Veras, Instituto de Tecnologia Aeronáutica (ITA), Brasil; Enrique Larios Vargas, Pontificia Universidad Catolica Del Peru, Peru. REVVIS Coordinator: Maximiliano Cristiá. SUMMARY: LIST OF TABLES; 1. Introduction; 1.1 Document Scope

Purebasic Reference Manual 4.50 (Windows)

PureBasic Reference Manual 4.50 (Windows) http://www.purebasic.com/ June 8, 2010. Contents. I General: 1 Introduction; 2 Terms And Conditions; 3 System requirements; 4 Installation; 5 Order; 6 Contact; 7 Acknowledgements. II The PureBasic Editor: 8 Getting Started; 9 Working with source files; 10 Editing features; 11 Managing projects; 12 Compiling your programs; 13 Using the debugger; 14 Included debugging tools; 15 Using the buildin Tools; 16 Using external tools; 17 Getting Help; 18 Customizing the IDE; 19 Commandline options for the IDE. III Language Reference: 20 Working with different number bases; 21 Break : Continue; 22 Using the command line compiler; 23 Compiler Directives; 24 Compiler Functions; 25 Data; 26 Debugger keywords in PureBasic; 27 Define; 28 Dim; 29 Building a DLL; 30 Enumerations; 31 For : Next; 32 ForEach : Next; 33 General Rules; 34 Global; 35 Gosub : Return; 36 Handles and Numbers; 37 If : Else : EndIf; 38 Import : EndImport; 39 Includes Functions; 40 Inline x86 ASM; 41 Interfaces; 42 Macros; 43 Pointers and memory access; 44 NewList; 45 NewMap; 46 Others Commands; 47 Procedures; 48 Protected; 49 Prototypes; 50 Pseudotypes; 51 PureBasic objects; 52 Repeat : Until; 53 Select : EndSelect; 54 Using several PureBasic versions on Windows; 55 Shared; 56 Static; 57 Structures; 58 Threaded; 59 Unicode; 60 Variables and Types; 61 We start programming..

Advanced Commands Reference Guide

Kryon Studio VERSION 20.9 Advanced Commands Reference Guide. This document contains proprietary and confidential information of Kryon Systems, and can be distributed only with the prior written consent of Kryon Systems Ltd. © 2008-2020 Kryon Systems Ltd. All rights reserved. Document revision: 21-Sep.-2020. Contents. CHAPTER 1: Variable Commands. Set Value; Using the SET VALUE command; Find; Using the FIND command; Replace; Using the REPLACE command; Mathematics; Using the MATHEMATICS command; Evaluate Expression; Using the EVALUATE EXPRESSION command; Split; Using the SPLIT command; Get Array Data; Using the GET ARRAY DATA command; Get Table Data; Using the GET TABLE DATA command; Table Lookup; Using the TABLE LOOKUP command; Get ASCII Character; Using the GET ASCII CHARACTER command; Remove Blank Spaces; Using the REMOVE BLANK SPACES command; Change Case; Using the CHANGE CASE command; Check Type; Using the CHECK TYPE command; Get Random Number; Using the GET RANDOM NUMBER command; Reverse; Using the REVERSE command; Get Length; Using the GET LENGTH command; Extract Numeric Values; Using the EXTRACT NUMERIC VALUES command. CHAPTER 2: Flow Commands. If Else; Using the IF ELSE command; Complex If Else; Using the COMPLEX IF ELSE command; Multi-Value If Else; Using the MULTI-VALUE IF ELSE command; Loop; Using the LOOP command; Loop: Break; Loop: Restart; Loop Table; Using the LOOP TABLE command; Loop Items; Using the LOOP ITEMS command; Pause

Appeon Developer User Guide

Appeon Developer User Guide. Appeon® for PowerBuilder® 2015 FOR WINDOWS & UNIX & LINUX. DOCUMENT ID: ADC20231-01-0700-01. LAST REVISED: October 15, 2014. Copyright © 2000-2014 by Appeon Corporation. All rights reserved. This publication pertains to Appeon software and to any subsequent release until otherwise indicated in new editions or technical notes. Information in this document is subject to change without notice. The software described herein is furnished under a license agreement, and it may be used or copied only in accordance with the terms of that agreement. No part of this publication may be reproduced, transmitted, or translated in any form or by any means, electronic, mechanical, manual, optical, or otherwise, without the prior written permission of Appeon Corporation. Appeon, the Appeon logo, Appeon Developer, Appeon Enterprise Manager, AEM, Appeon Server and Appeon Server Web Component are registered trademarks of Appeon Corporation. Sybase, Adaptive Server Anywhere, Adaptive Server Enterprise, iAnywhere, PowerBuilder, Sybase Central, and Sybase jConnect for JDBC are trademarks or registered trademarks of Sybase, Inc. Java and JDBC are trademarks or registered trademarks of Sun Microsystems, Inc. All other company and product names used herein may be trademarks or registered trademarks of their respective companies. Use, duplication, or disclosure by the government is subject to the restrictions set forth in subparagraph (c)(1)(ii) of DFARS 52.227-7013 for the DOD and as set forth in FAR 52.227-19(a)-(d) for civilian agencies. Appeon Corporation, 1/F, Shell Industrial Building, 12 Lee Chung Street, Chai Wan District, Hong Kong. Contents: 1 About This Book; 1.1 Audience