On Improving Integer Factorization and Discrete Logarithm Computation Using Partial Triangulation

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Primality Testing and Integer Factorisation

Primality Testing and Integer Factorisation Richard P. Brent, FAA Computer Sciences Laboratory Australian National University Canberra, ACT 2601 Abstract The problem of finding the prime factors of large composite numbers has always been of mathematical interest. With the advent of public key cryptosystems it is also of practical importance, because the security of some of these cryptosystems, such as the Rivest-Shamir-Adelman (RSA) system, depends on the difficulty of factoring the public keys. In recent years the best known integer factorisation algorithms have improved greatly, to the point where it is now easy to factor a 60-decimal digit number, and possible to factor numbers larger than 120 decimal digits, given the availability of enough computing power. We describe several recent algorithms for primality testing and factorisation, give examples of their use and outline some applications. 1. Introduction It has been known since Euclid’s time (though first clearly stated and proved by Gauss in 1801) that any natural number N has a unique prime power decomposition α1 α2 αk N = p1 p2 ··· pk (1.1) αj (p1 < p2 < ··· < pk rational primes, αj > 0). The prime powers pj are called αj components of N, and we write pj kN. To compute the prime power decomposition we need – 1. An algorithm to test if an integer N is prime. 2. An algorithm to find a nontrivial factor f of a composite integer N. Given these there is a simple recursive algorithm to compute (1.1): if N is prime then stop, otherwise 1. find a nontrivial factor f of N; 2. -

Computing Prime Divisors in an Interval

MATHEMATICS OF COMPUTATION Volume 84, Number 291, January 2015, Pages 339–354 S 0025-5718(2014)02840-8 Article electronically published on May 28, 2014 COMPUTING PRIME DIVISORS IN AN INTERVAL MINKYU KIM AND JUNG HEE CHEON Abstract. We address the problem of finding a nontrivial divisor of a com- posite integer when it has a prime divisor in an interval. We show that this problem can be solved in time of the square root of the interval length with a similar amount of storage, by presenting two algorithms; one is probabilistic and the other is its derandomized version. 1. Introduction Integer factorization is one of the most challenging problems in computational number theory. It has been studied for centuries, and it has been intensively in- vestigated after introducing the RSA cryptosystem [18]. The difficulty of integer factorization has been examined not only in pure factoring situations but also in various modified situations. One such approach is to find a nontrivial divisor of a composite integer when it has prime divisors of special form. These include Pol- lard’s p − 1 algorithm [15], Williams’ p + 1 algorithm [20], and others. On the other hand, owing to the importance and the usage of integer factorization in cryptog- raphy, one needs to examine this problem when some partial information about divisors is revealed. This side information might be exposed through the proce- dures of generating prime numbers or through some attacks against crypto devices or protocols. This paper deals with integer factorization given the approximation of divisors, and it is motivated by the above mentioned research. -

Abstract in This Paper, D-Strong and Almost D-Strong Near-Rings Ha

Periodica Mathematica Hungarlca Vol. 17 (1), (1986), pp. 13--20 D-STRONG AND ALMOST D-STRONG NEAR-RINGS A. K. GOYAL (Udaipur) Abstract In this paper, D-strong and almost D-strong near-rings have been defined. It has been proved that if R is a D-strong S-near ring, then prime ideals, strictly prime ideals and completely prime ideals coincide. Also if R is a D-strong near-ring with iden- tity, then every maximal right ideal becomes a maximal ideal and moreover every 2- primitive near-ring becomes a near-field. Several properties, chain conditions and structure theorems have also been discussed. Introduction In this paper, we have generalized some of the results obtained for rings by Wong [12]. Corresponding to the prime and strictly prime ideals in near-rings, we have defined D-strong and almost D-strong near-rings. It has been shown that a regular near-ring having all idempotents central in R is a D-strong and hence almost D-strong near-ring. If R is a D-strong S-near ring, then it has been shown that prime ideals, strictly prime ideals and com- pletely prime ideals coincide and g(R) = H(R) ~ ~(R), where H(R) is the intersection of all strictly prime ideals of R. Also if R is a D-strong near-ring with identity, then every maximal right ideal becomes a maximal ideal and moreover every 2-primitive near-ring becomes a near-field. Some structure theorems have also been discussed. Preliminaries Throughout R will denote a zero-symmetric left near-ring, i.e., R -- Ro in the sense of Pilz [10]. -

A Public-Key Cryptosystem Based on Discrete Logarithm Problem Over Finite Fields 퐅퐩퐧

IOSR Journal of Mathematics (IOSR-JM) e-ISSN: 2278-5728, p-ISSN: 2319-765X. Volume 11, Issue 1 Ver. III (Jan - Feb. 2015), PP 01-03 www.iosrjournals.org A Public-Key Cryptosystem Based On Discrete Logarithm Problem over Finite Fields 퐅퐩퐧 Saju M I1, Lilly P L2 1Assistant Professor, Department of Mathematics, St. Thomas’ College, Thrissur, India 2Associate professor, Department of Mathematics, St. Joseph’s College, Irinjalakuda, India Abstract: One of the classical problems in mathematics is the Discrete Logarithm Problem (DLP).The difficulty and complexity for solving DLP is used in most of the cryptosystems. In this paper we design a public key system based on the ring of polynomials over the field 퐹푝 is developed. The security of the system is based on the difficulty of finding discrete logarithms over the function field 퐹푝푛 with suitable prime p and sufficiently large n. The presented system has all features of ordinary public key cryptosystem. Keywords: Discrete logarithm problem, Function Field, Polynomials over finite fields, Primitive polynomial, Public key cryptosystem. I. Introduction For the construction of a public key cryptosystem, we need a finite extension field Fpn overFp. In our paper [1] we design a public key cryptosystem based on discrete logarithm problem over the field F2. Here, for increasing the complexity and difficulty for solving DLP, we made a proper additional modification in the system. A cryptosystem for message transmission means a map from units of ordinary text called plaintext message units to units of coded text called cipher text message units. The face of cryptography was radically altered when Diffie and Hellman invented an entirely new type of cryptography, called public key [Diffie and Hellman 1976][2]. -

Neural Networks and the Search for a Quadratic Residue Detector

Neural Networks and the Search for a Quadratic Residue Detector Michael Potter∗ Leon Reznik∗ Stanisław Radziszowski∗ [email protected] [email protected] [email protected] ∗ Department of Computer Science Rochester Institute of Technology Rochester, NY 14623 Abstract—This paper investigates the feasibility of employing This paper investigates the possibility of employing ANNs artificial neural network techniques for solving fundamental in the search for a solution to the quadratic residues (QR) cryptography problems, taking quadratic residue detection problem, which is defined as follows: as an example. The problem of quadratic residue detection Suppose that you have two integers a and b. Then a is is one which is well known in both number theory and a quadratic residue of b if and only if the following two cryptography. While it garners less attention than problems conditions hold: [1] such as factoring or discrete logarithms, it is similar in both 1) The greatest common divisor of a and b is 1, and difficulty and importance. No polynomial–time algorithm is 2) There exists an integer c such that c2 ≡ a (mod b). currently known to the public by which the quadratic residue status of one number modulo another may be determined. This The first condition is satisfied automatically so long as ∗ work leverages machine learning algorithms in an attempt to a 2 Zb . There is, however, no known polynomial–time (in create a detector capable of solving instances of the problem the number of bits of a and b) algorithm for determining more efficiently. A variety of neural networks, currently at the whether the second condition is satisfied. -

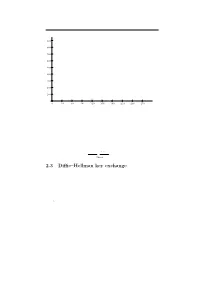

2.3 Diffie–Hellman Key Exchange

2.3. Di±e{Hellman key exchange 65 q q q q q q 6 q qq q q q q q q 900 q q q q q q q qq q q q q q q q q q q q q q q q q 800 q q q qq q q q q q q q q q qq q q q q q q q q q q q 700 q q q q q q q q q q q q q q q q q q q q q q q q q q qq q 600 q q q q q q q q q q q q qq q q q q q q q q q q q q q q q q q qq q q q q q q q q 500 q qq q q q q q qq q q q q q qqq q q q q q q q q q q q q q qq q q q 400 q q q q q q q q q q q q q q q q q q q q q q q q q 300 q q q q q q q q q q q q q q q q q q qqqq qqq q q q q q q q q q q q 200 q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q q qq q q qq q q 100 q q q q q q q q q q q q q q q q q q q q q q q q q 0 q - 0 30 60 90 120 150 180 210 240 270 Figure 2.2: Powers 627i mod 941 for i = 1; 2; 3;::: any group and use the group law instead of multiplication. -

A Scalable System-On-A-Chip Architecture for Prime Number Validation

A SCALABLE SYSTEM-ON-A-CHIP ARCHITECTURE FOR PRIME NUMBER VALIDATION Ray C.C. Cheung and Ashley Brown Department of Computing, Imperial College London, United Kingdom Abstract This paper presents a scalable SoC architecture for prime number validation which targets reconfigurable hardware This paper presents a scalable SoC architecture for prime such as FPGAs. In particular, users are allowed to se- number validation which targets reconfigurable hardware. lect predefined scalable or non-scalable modular opera- The primality test is crucial for security systems, especially tors for their designs [4]. Our main contributions in- for most public-key schemes. The Rabin-Miller Strong clude: (1) Parallel designs for Montgomery modular arith- Pseudoprime Test has been mapped into hardware, which metic operations (Section 3). (2) A scalable design method makes use of a circuit for computing Montgomery modu- for mapping the Rabin-Miller Strong Pseudoprime Test lar exponentiation to further speed up the validation and to into hardware (Section 4). (3) An architecture of RAM- reduce the hardware cost. A design generator has been de- based Radix-2 Scalable Montgomery multiplier (Section veloped to generate a variety of scalable and non-scalable 4). (4) A design generator for producing hardware prime Montgomery multipliers based on user-defined parameters. number validators based on user-specified parameters (Sec- The performance and resource usage of our designs, im- tion 5). (5) Implementation of the proposed hardware ar- plemented in Xilinx reconfigurable devices, have been ex- chitectures in FPGAs, with an evaluation of its effective- plored using the embedded PowerPC processor and the soft ness compared with different size and speed tradeoffs (Sec- MicroBlaze processor. -

Making NTRU As Secure As Worst-Case Problems Over Ideal Lattices

Making NTRU as Secure as Worst-Case Problems over Ideal Lattices Damien Stehlé1 and Ron Steinfeld2 1 CNRS, Laboratoire LIP (U. Lyon, CNRS, ENS Lyon, INRIA, UCBL), 46 Allée d’Italie, 69364 Lyon Cedex 07, France. [email protected] – http://perso.ens-lyon.fr/damien.stehle 2 Centre for Advanced Computing - Algorithms and Cryptography, Department of Computing, Macquarie University, NSW 2109, Australia [email protected] – http://web.science.mq.edu.au/~rons Abstract. NTRUEncrypt, proposed in 1996 by Hoffstein, Pipher and Sil- verman, is the fastest known lattice-based encryption scheme. Its mod- erate key-sizes, excellent asymptotic performance and conjectured resis- tance to quantum computers could make it a desirable alternative to fac- torisation and discrete-log based encryption schemes. However, since its introduction, doubts have regularly arisen on its security. In the present work, we show how to modify NTRUEncrypt to make it provably secure in the standard model, under the assumed quantum hardness of standard worst-case lattice problems, restricted to a family of lattices related to some cyclotomic fields. Our main contribution is to show that if the se- cret key polynomials are selected by rejection from discrete Gaussians, then the public key, which is their ratio, is statistically indistinguishable from uniform over its domain. The security then follows from the already proven hardness of the R-LWE problem. Keywords. Lattice-based cryptography, NTRU, provable security. 1 Introduction NTRUEncrypt, devised by Hoffstein, Pipher and Silverman, was first presented at the Crypto’96 rump session [14]. Although its description relies on arithmetic n over the polynomial ring Zq[x]=(x − 1) for n prime and q a small integer, it was quickly observed that breaking it could be expressed as a problem over Euclidean lattices [6]. -

The Discrete Log Problem and Elliptic Curve Cryptography

THE DISCRETE LOG PROBLEM AND ELLIPTIC CURVE CRYPTOGRAPHY NOLAN WINKLER Abstract. In this paper, discrete log-based public-key cryptography is ex- plored. Specifically, we first examine the Discrete Log Problem over a general cyclic group and algorithms that attempt to solve it. This leads us to an in- vestigation of the security of cryptosystems based over certain specific cyclic × groups: Fp, Fp , and the cyclic subgroup generated by a point on an elliptic curve; we ultimately see the highest security comes from using E(Fp) as our group. This necessitates an introduction of elliptic curves, which is provided. Finally, we conclude with cryptographic implementation considerations. Contents 1. Introduction 2 2. Public-Key Systems Over a Cyclic Group G 3 2.1. ElGamal Messaging 3 2.2. Diffie-Hellman Key Exchanges 4 3. Security & Hardness of the Discrete Log Problem 4 4. Algorithms for Solving the Discrete Log Problem 6 4.1. General Cyclic Groups 6 × 4.2. The cyclic group Fp 9 4.3. Subgroup Generated by a Point on an Elliptic Curve 10 5. Elliptic Curves: A Better Group for Cryptography 11 5.1. Basic Theory and Group Law 11 5.2. Finding the Order 12 6. Ensuring Security 15 6.1. Special Cases and Notes 15 7. Further Reading 15 8. Acknowledgments 15 References 16 1 2 NOLAN WINKLER 1. Introduction In this paper, basic knowledge of number theory and abstract algebra is assumed. Additionally, rather than beginning from classical symmetric systems of cryptog- raphy, such as the famous Caesar or Vigni`ere ciphers, we assume a familiarity with these systems and why they have largely become obsolete on their own. -

Sieve Algorithms for the Discrete Logarithm in Medium Characteristic Finite Fields Laurent Grémy

Sieve algorithms for the discrete logarithm in medium characteristic finite fields Laurent Grémy To cite this version: Laurent Grémy. Sieve algorithms for the discrete logarithm in medium characteristic finite fields. Cryptography and Security [cs.CR]. Université de Lorraine, 2017. English. NNT : 2017LORR0141. tel-01647623 HAL Id: tel-01647623 https://tel.archives-ouvertes.fr/tel-01647623 Submitted on 24 Nov 2017 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. AVERTISSEMENT Ce document est le fruit d'un long travail approuvé par le jury de soutenance et mis à disposition de l'ensemble de la communauté universitaire élargie. Il est soumis à la propriété intellectuelle de l'auteur. Ceci implique une obligation de citation et de référencement lors de l’utilisation de ce document. D'autre part, toute contrefaçon, plagiat, reproduction illicite encourt une poursuite pénale. Contact : [email protected] LIENS Code de la Propriété Intellectuelle. articles L 122. 4 Code de la Propriété Intellectuelle. articles L 335.2- L 335.10 http://www.cfcopies.com/V2/leg/leg_droi.php -

Integer Factorization and Computing Discrete Logarithms in Maple

Integer Factorization and Computing Discrete Logarithms in Maple Aaron Bradford∗, Michael Monagan∗, Colin Percival∗ [email protected], [email protected], [email protected] Department of Mathematics, Simon Fraser University, Burnaby, B.C., V5A 1S6, Canada. 1 Introduction As part of our MITACS research project at Simon Fraser University, we have investigated algorithms for integer factorization and computing discrete logarithms. We have implemented a quadratic sieve algorithm for integer factorization in Maple to replace Maple's implementation of the Morrison- Brillhart continued fraction algorithm which was done by Gaston Gonnet in the early 1980's. We have also implemented an indexed calculus algorithm for discrete logarithms in GF(q) to replace Maple's implementation of Shanks' baby-step giant-step algorithm, also done by Gaston Gonnet in the early 1980's. In this paper we describe the algorithms and our optimizations made to them. We give some details of our Maple implementations and present some initial timings. Since Maple is an interpreted language, see [7], there is room for improvement of both implementations by coding critical parts of the algorithms in C. For example, one of the bottle-necks of the indexed calculus algorithm is finding and integers which are B-smooth. Let B be a set of primes. A positive integer y is said to be B-smooth if its prime divisors are all in B. Typically B might be the first 200 primes and y might be a 50 bit integer. ∗This work was supported by the MITACS NCE of Canada. 1 2 Integer Factorization Starting from some very simple instructions | \make integer factorization faster in Maple" | we have implemented the Quadratic Sieve factoring al- gorithm in a combination of Maple and C (which is accessed via Maple's capabilities for external linking). -

Comparing the Difficulty of Factorization and Discrete Logarithm: a 240-Digit Experiment⋆

Comparing the Difficulty of Factorization and Discrete Logarithm: a 240-digit Experiment? Fabrice Boudot1, Pierrick Gaudry2, Aurore Guillevic2[0000−0002−0824−7273], Nadia Heninger3, Emmanuel Thomé2, and Paul Zimmermann2[0000−0003−0718−4458] 1 Université de Limoges, XLIM, UMR 7252, F-87000 Limoges, France 2 Université de Lorraine, CNRS, Inria, LORIA, F-54000 Nancy, France 3 University of California, San Diego, USA In memory of Peter L. Montgomery Abstract. We report on two new records: the factorization of RSA-240, a 795-bit number, and a discrete logarithm computation over a 795-bit prime field. Previous records were the factorization of RSA-768 in 2009 and a 768-bit discrete logarithm computation in 2016. Our two computations at the 795-bit level were done using the same hardware and software, and show that computing a discrete logarithm is not much harder than a factorization of the same size. Moreover, thanks to algorithmic variants and well-chosen parameters, our computations were significantly less expensive than anticipated based on previous records. The last page of this paper also reports on the factorization of RSA-250. 1 Introduction The Diffie-Hellman protocol over finite fields and the RSA cryptosystem were the first practical building blocks of public-key cryptography. Since then, several other cryptographic primitives have entered the landscape, and a significant amount of research has been put into the development, standardization, cryptanalysis, and optimization of implementations for a large number of cryptographic primitives. Yet the prevalence of RSA and finite field Diffie-Hellman is still a fact: between November 11, 2019 and December 11, 2019, the ICSI Certificate Notary [21] observed that 90% of the TLS certificates used RSA signatures, and 7% of the TLS connections used RSA for key exchange.