Making NTRU As Secure As Worst-Case Problems Over Ideal Lattices


Recommended publications


Lectures on the NTRU Encryption Algorithm and Digital Signature Scheme: Grenoble, June 2002

Lectures on the NTRU encryption algorithm and digital signature scheme: Grenoble, June 2002. J. Pipher, Brown University, Providence RI 02912. 1 Lecture 1. 1.1 Integer lattices. Lattices have been studied by cryptographers for quite some time, in both the field of cryptanalysis (see for example [16–18]) and as a source of hard problems on which to build encryption schemes (see [1, 8, 9]). In this lecture, we describe the NTRU encryption algorithm, and the lattice problems on which this is based. We begin with some definitions and a brief overview of lattices. If $a_1, a_2, \ldots, a_n$ are $n$ independent vectors in $\mathbb{R}^m$, $n \le m$, then the integer lattice with these vectors as basis is the set $\mathcal{L} = \{ \sum_{i=1}^{n} x_i a_i : x_i \in \mathbb{Z} \}$. A lattice is often represented as a matrix $A$ whose rows are the basis vectors $a_1, \ldots, a_n$. The elements of the lattice are simply the vectors of the form $v^T A$, which denotes the usual matrix multiplication. We will specialize for now to the situation when the rank of the lattice and the dimension are the same ($n = m$). The determinant of a lattice $\det(\mathcal{L})$ is the volume of the fundamental parallelepiped spanned by the basis vectors. By the Gram-Schmidt process, one can obtain an orthogonal basis for the vector space generated by $\mathcal{L}$, and $\det(\mathcal{L})$ will just be the product of the lengths of these orthogonal vectors. Note that these vectors are not a basis for $\mathcal{L}$ as a lattice, since $\mathcal{L}$ will not usually possess an orthogonal basis.
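The definitions in this excerpt can be illustrated with a minimal Python sketch. The function names (`lattice_point`, `gram_schmidt`, `lattice_det`) and the example basis are hypothetical and chosen only for illustration; the sketch assumes a full-rank basis given as the rows of a matrix.

```python
import math

def lattice_point(coeffs, basis):
    """Return v^T A: the integer combination of the basis rows."""
    dim = len(basis[0])
    return [sum(c * row[k] for c, row in zip(coeffs, basis)) for k in range(dim)]

def gram_schmidt(basis):
    """Orthogonalize the basis rows (no normalization)."""
    ortho = []
    for b in basis:
        v = [float(x) for x in b]
        for u in ortho:
            # Subtract the projection of b onto the earlier orthogonal vector u.
            mu = sum(x * y for x, y in zip(b, u)) / sum(x * x for x in u)
            v = [vi - mu * ui for vi, ui in zip(v, u)]
        ortho.append(v)
    return ortho

def lattice_det(basis):
    """Determinant as the product of the Gram-Schmidt vector lengths."""
    return math.prod(math.sqrt(sum(x * x for x in v)) for v in gram_schmidt(basis))

# Illustrative basis (an assumption, not taken from the lectures).
A = [[2, 0], [1, 3]]
point = lattice_point([1, 2], A)   # 1*(2,0) + 2*(1,3)
volume = lattice_det(A)
```

The Gram-Schmidt vectors here are (2, 0) and (0, 3); the second is not a lattice vector, which matches the remark that the orthogonalized vectors generally do not form a basis of the lattice itself.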

Solving Hard Lattice Problems and the Security of Lattice-Based Cryptosystems

Solving Hard Lattice Problems and the Security of Lattice-Based Cryptosystems. Thijs Laarhoven∗ Joop van de Pol† Benne de Weger∗ September 10, 2012. Abstract This paper is a tutorial introduction to the present state-of-the-art in the field of security of lattice-based cryptosystems. After a short introduction to lattices, we describe the main hard problems in lattice theory that cryptosystems base their security on, and we present the main methods of attacking these hard problems, based on lattice basis reduction. We show how to find shortest vectors in lattices, which can be used to improve basis reduction algorithms. Finally we give a framework for assessing the security of cryptosystems based on these hard problems. 1 Introduction Lattice-based cryptography is a quickly expanding field. The last two decades have seen exciting developments, both in using lattices in cryptanalysis and in building public key cryptosystems on hard lattice problems. In the latter category we could mention the systems of Ajtai and Dwork [AD], Goldreich, Goldwasser and Halevi [GGH2], the NTRU cryptosystem [HPS], and systems built on Small Integer Solutions [MR1] problems and Learning With Errors [R1] problems. In the last few years these developments culminated in the advent of the first fully homomorphic encryption scheme by Gentry [Ge]. A major issue in this field is to base key length recommendations on a firm understanding of the hardness of the underlying lattice problems. The general feeling among the experts is that the present understanding is not yet on the same level as the understanding of, e.g., factoring or discrete logarithms.

NTRU Cryptosystem: Recent Developments and Emerging Mathematical Problems in Finite Polynomial Rings

NTRU Cryptosystem: Recent Developments and Emerging Mathematical Problems in Finite Polynomial Rings. Ron Steinfeld. Abstract. The NTRU public-key cryptosystem, proposed in 1996 by Hoffstein, Pipher and Silverman, is a fast and practical alternative to classical schemes based on factorization or discrete logarithms. In contrast to the latter schemes, it offers quasi-optimal asymptotic efficiency and conjectured security against quantum computing attacks. The scheme is defined over finite polynomial rings, and its security analysis involves the study of natural statistical and computational problems defined over these rings. We survey several recent developments in both the security analysis and in the applications of NTRU and its variants, within the broader field of lattice-based cryptography. These developments include a provable relation between the security of NTRU and the computational hardness of worst-case instances of certain lattice problems, and the construction of fully homomorphic and multilinear cryptographic algorithms. In the process, we identify the underlying statistical and computational problems in finite rings. Keywords. NTRU Cryptosystem, lattice-based cryptography, fully homomorphic encryption, multilinear maps. AMS classification. 68Q17, 68Q87, 68Q12, 11T55, 11T71, 11T22. 1 Introduction The NTRU public-key cryptosystem has attracted much attention by the cryptographic community since its introduction in 1996 by Hoffstein, Pipher and Silverman [32, 33]. Unlike more classical public-key cryptosystems based on the hardness of integer factorisation or the discrete logarithm over finite fields and elliptic curves, NTRU is based on the hardness of finding ‘small’ solutions to systems of linear equations over polynomial rings, and as a consequence is closely related to geometric problems on certain classes of high-dimensional Euclidean lattices.

On Ideal Lattices and Learning with Errors Over Rings∗

On Ideal Lattices and Learning with Errors Over Rings∗ Vadim Lyubashevsky† Chris Peikert‡ Oded Regev§ June 25, 2013. Abstract The “learning with errors” (LWE) problem is to distinguish random linear equations, which have been perturbed by a small amount of noise, from truly uniform ones. The problem has been shown to be as hard as worst-case lattice problems, and in recent years it has served as the foundation for a plethora of cryptographic applications. Unfortunately, these applications are rather inefficient due to an inherent quadratic overhead in the use of LWE. A main open question was whether LWE and its applications could be made truly efficient by exploiting extra algebraic structure, as was done for lattice-based hash functions (and related primitives). We resolve this question in the affirmative by introducing an algebraic variant of LWE called ring-LWE, and proving that it too enjoys very strong hardness guarantees. Specifically, we show that the ring-LWE distribution is pseudorandom, assuming that worst-case problems on ideal lattices are hard for polynomial-time quantum algorithms. Applications include the first truly practical lattice-based public-key cryptosystem with an efficient security reduction; moreover, many of the other applications of LWE can be made much more efficient through the use of ring-LWE. 1 Introduction Over the last decade, lattices have emerged as a very attractive foundation for cryptography. The appeal of lattice-based primitives stems from the fact that their security can often be based on worst-case hardness assumptions, and that they appear to remain secure even against quantum computers.
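To make the idea of "random linear equations perturbed by a small amount of noise" over a ring concrete, here is a toy Python sketch of a ring-LWE-style sample $b = a \cdot s + e$. Everything specific in it is an assumption made for illustration and is not taken from the paper: the ring $\mathbb{Z}_q[x]/(x^n + 1)$, the tiny parameters, the uniform bounded noise (in place of the error distributions the paper analyzes), and the helper names `negacyclic_mul` and `ring_lwe_sample`.

```python
import random

def negacyclic_mul(a, b, q):
    """Multiply two polynomials modulo x^n + 1 and modulo q.

    Polynomials are coefficient lists of equal length n, lowest degree first.
    """
    n = len(a)
    res = [0] * n
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            k = i + j
            if k < n:
                res[k] = (res[k] + ai * bj) % q
            else:
                # x^n = -1 in this ring, so the term wraps around with a sign flip.
                res[k - n] = (res[k - n] - ai * bj) % q
    return res

def ring_lwe_sample(s, q, noise_bound, rng):
    """Return (a, b, e) with b = a*s + e; noise is uniform in [-noise_bound, noise_bound]."""
    n = len(s)
    a = [rng.randrange(q) for _ in range(n)]
    e = [rng.randint(-noise_bound, noise_bound) for _ in range(n)]
    product = negacyclic_mul(a, s, q)
    b = [(x + y) % q for x, y in zip(product, e)]
    return a, b, e

# Toy parameters (hypothetical, far too small for any security).
rng = random.Random(0)
q = 97
secret = [1, 0, -1, 1, 0, 0, 1, -1]
a, b, e = ring_lwe_sample(secret, q, noise_bound=2, rng=rng)
```

One ring sample packs $n$ noisy inner products into a single pair $(a, b)$, which is the kind of saving the excerpt alludes to when it contrasts ring-LWE with the quadratic overhead of plain LWE.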

Performance Evaluation of RSA and NTRU Over GPU with Maxwell and Pascal Architecture

Performance Evaluation of RSA and NTRU over GPU with Maxwell and Pascal Architecture. Xian-Fu Wong1, Bok-Min Goi1, Wai-Kong Lee2, and Raphael C.-W. Phan3. 1Lee Kong Chian Faculty of Engineering and Science, Universiti Tunku Abdul Rahman, Sungai Long, Malaysia 2Faculty of Information and Communication Technology, Universiti Tunku Abdul Rahman, Kampar, Malaysia 3Faculty of Engineering, Multimedia University, Cyberjaya, Malaysia E-mail: [email protected]; {goibm; wklee}@utar.edu.my; [email protected] Received 2 September 2017; Accepted 22 October 2017; Publication 20 November 2017. Abstract Public key cryptography is important in protecting the key exchange between two parties for secure mobile and wireless communication. RSA is one of the most widely used public key cryptographic algorithms, but the modular exponentiation involved in RSA is very time-consuming when the bit-size is large, usually in the range of 1024-bit to 4096-bit. The speed performance of RSA becomes a concern when thousands or millions of authentication requests must be handled by the server at a time, through a massive number of connected mobile and wireless devices. On the other hand, NTRU is another public key cryptographic algorithm that has become popular recently due to its ability to resist attacks from quantum computers. In this paper, we exploit the massively parallel architecture in GPU to perform RSA and NTRU computations. Various optimization techniques were proposed in this paper to achieve higher throughput in RSA and NTRU computation in two GPU platforms. To allow a fair comparison with existing RSA implementation techniques, we proposed to evaluate the speed performance in the best case [...] (Journal of Software Networking, 201–220.)

A Public-Key Cryptosystem Based on Discrete Logarithm Problem Over Finite Fields $\mathbb{F}_{p^n}$

IOSR Journal of Mathematics (IOSR-JM) e-ISSN: 2278-5728, p-ISSN: 2319-765X. Volume 11, Issue 1 Ver. III (Jan - Feb. 2015), PP 01-03 www.iosrjournals.org A Public-Key Cryptosystem Based On Discrete Logarithm Problem over Finite Fields $\mathbb{F}_{p^n}$. Saju M I1, Lilly P L2. 1Assistant Professor, Department of Mathematics, St. Thomas’ College, Thrissur, India 2Associate Professor, Department of Mathematics, St. Joseph’s College, Irinjalakuda, India. Abstract: One of the classical problems in mathematics is the Discrete Logarithm Problem (DLP). The difficulty and complexity of solving the DLP is used in most cryptosystems. In this paper, a public key system based on the ring of polynomials over the field $\mathbb{F}_p$ is developed. The security of the system is based on the difficulty of finding discrete logarithms over the function field $\mathbb{F}_{p^n}$ with suitable prime $p$ and sufficiently large $n$. The presented system has all the features of an ordinary public key cryptosystem. Keywords: Discrete logarithm problem, Function Field, Polynomials over finite fields, Primitive polynomial, Public key cryptosystem. I. Introduction For the construction of a public key cryptosystem, we need a finite extension field $\mathbb{F}_{p^n}$ over $\mathbb{F}_p$. In our paper [1] we design a public key cryptosystem based on the discrete logarithm problem over the field $\mathbb{F}_2$. Here, to increase the complexity and difficulty of solving the DLP, we made a proper additional modification to the system. A cryptosystem for message transmission means a map from units of ordinary text called plaintext message units to units of coded text called ciphertext message units. The face of cryptography was radically altered when Diffie and Hellman invented an entirely new type of cryptography, called public key [Diffie and Hellman 1976][2].

Presentation

Side-Channel Analysis of Lattice-based PQC Candidates. Prasanna Ravi and Sujoy Sinha Roy. [email protected], [email protected]

Notice
• Talk includes published works from journals, conferences, and IACR ePrint Archive.
• Talk includes works of other researchers (cited appropriately).
• For easier explanation, we ‘simplify’ concepts.
• Due to time limit, we do not exhaustively cover all relevant works.
• Main focus on LWE/LWR-based PKE/KEM schemes.
• Timing, Power, and EM side-channels.

Classification of PQC finalists and alternative candidates (Lattice-based Cryptography)
• Public Key Encryption (PKE) / Key Encapsulation Mechanisms (KEM): LWE/LWR-based (Kyber, SABER, Frodo); NTRU-based (NTRU, NTRUPrime).
• Digital Signature Schemes (DSS): LWE, Fiat-Shamir with Aborts (Dilithium); NTRU, Hash and Sign (FALCON).
• This talk: LWE/LWR-based PKE/KEM schemes.

Outline
• Background: Learning With Errors (LWE) Problem; LWE/LWR-based PKE framework.
• Overview of side-channel attacks: algorithmic-level; implementation-level.
• Overview of masking countermeasures.
• Conclusions and future works.

Given two linear equations with unknown $x$ and $y$: $3x + 4y = 26$ and $2x + 3y = 19$, or in matrix form $\begin{pmatrix} 3 & 4 \\ 2 & 3 \end{pmatrix} \begin{pmatrix} x \\ y \end{pmatrix} = \begin{pmatrix} 26 \\ 19 \end{pmatrix}$. Find $x$ and $y$.

Solving a system of linear equations: system of linear equations with unknown $s$. Gaussian elimination solves $s$ when the number of equations $m \ge n$.

Solving a system of linear equations with errors: Matrix $A$, Vector $b \bmod q$.
• Search Learning With Errors (LWE) problem: Given $(A, b)$ → computationally infeasible to solve $(s, e)$.
• Decisional Learning With Errors (LWE) problem: Given $(A, b)$ →
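The contrast drawn on these slides, an exact linear system versus one with errors, can be sketched in a few lines of Python. This is an illustration under stated assumptions: `gaussian_solve` and `lwe_samples` are hypothetical helper names, the LWE parameters are toy values, and the noise is uniform in a small range rather than any distribution used by real schemes.

```python
from fractions import Fraction
import random

def gaussian_solve(A, b):
    """Solve a square, invertible system A x = b exactly by Gauss-Jordan elimination."""
    n = len(A)
    M = [[Fraction(x) for x in row] + [Fraction(v)] for row, v in zip(A, b)]
    for col in range(n):
        # Find a row with a non-zero pivot and move it into place.
        pivot = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[pivot] = M[pivot], M[col]
        pivot_value = M[col][col]
        M[col] = [x / pivot_value for x in M[col]]
        for r in range(n):
            if r != col:
                factor = M[r][col]
                M[r] = [x - factor * y for x, y in zip(M[r], M[col])]
    return [row[-1] for row in M]

def lwe_samples(s, m, q, noise_bound, rng):
    """Return (A, b, e) with b = A s + e (mod q) and small bounded noise e."""
    n = len(s)
    A = [[rng.randrange(q) for _ in range(n)] for _ in range(m)]
    e = [rng.randint(-noise_bound, noise_bound) for _ in range(m)]
    b = [(sum(x * y for x, y in zip(row, s)) + err) % q for row, err in zip(A, e)]
    return A, b, e

# The error-free system from the slide.
x, y = gaussian_solve([[3, 4], [2, 3]], [26, 19])

# A toy noisy system (parameters are hypothetical).
rng = random.Random(0)
A, b, e = lwe_samples(s=[3, 1, 4, 1], m=8, q=97, noise_bound=2, rng=rng)
```

Without the error vector, elimination recovers the unknowns directly; once each equation carries its own unknown error term, elimination mixes and amplifies those errors, which is the intuition behind treating $(A, b)$ as hard to invert.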

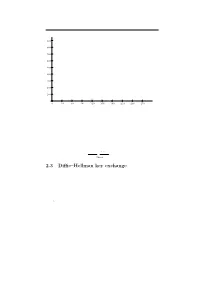

2.3 Diffie–Hellman Key Exchange

2.3. Diffie–Hellman key exchange. [Figure 2.2: Powers $627^i \bmod 941$ for $i = 1, 2, 3, \ldots$] [...] any group and use the group law instead of multiplication.
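As a small illustration of the exchange this section is about, the following Python sketch uses the modulus 941 and base 627 from the figure caption. The secret exponents are hypothetical values picked for the example, and the helper names `public_value` and `shared_secret` are assumptions, not notation from the book.

```python
# Public parameters taken from the caption of Figure 2.2.
P = 941
G = 627

def public_value(secret):
    """Value a party publishes: G^secret mod P."""
    return pow(G, secret, P)

def shared_secret(their_public, my_secret):
    """Value a party derives from the other side's public value."""
    return pow(their_public, my_secret, P)

# The first few points plotted in the figure: 627^i mod 941 for i = 1, 2, 3, ...
powers = [pow(G, i, P) for i in range(1, 11)]

# Hypothetical secret exponents, chosen only for illustration.
alice_secret, bob_secret = 347, 781
alice_public = public_value(alice_secret)
bob_public = public_value(bob_secret)

# Both sides arrive at the same value, G^(alice_secret * bob_secret) mod P.
alice_key = shared_secret(bob_public, alice_secret)
bob_key = shared_secret(alice_public, bob_secret)
```

The scattered look of the plotted powers is the point of the figure: the sequence gives no obvious way to read an exponent back from a value. Only modular exponentiation is used here, so the same two functions would carry over to another group by swapping `pow` for that group's repeated group law.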

On Lattices, Learning with Errors, Random Linear Codes, and Cryptography

On Lattices, Learning with Errors, Random Linear Codes, and Cryptography. Oded Regev∗ May 2, 2009. Abstract Our main result is a reduction from worst-case lattice problems such as GAPSVP and SIVP to a certain learning problem. This learning problem is a natural extension of the ‘learning from parity with error’ problem to higher moduli. It can also be viewed as the problem of decoding from a random linear code. This, we believe, gives a strong indication that these problems are hard. Our reduction, however, is quantum. Hence, an efficient solution to the learning problem implies a quantum algorithm for GAPSVP and SIVP. A main open question is whether this reduction can be made classical (i.e., non-quantum). We also present a (classical) public-key cryptosystem whose security is based on the hardness of the learning problem. By the main result, its security is also based on the worst-case quantum hardness of GAPSVP and SIVP. The new cryptosystem is much more efficient than previous lattice-based cryptosystems: the public key is of size $\tilde{O}(n^2)$ and encrypting a message increases its size by a factor of $\tilde{O}(n)$ (in previous cryptosystems these values are $\tilde{O}(n^4)$ and $\tilde{O}(n^2)$, respectively). In fact, under the assumption that all parties share a random bit string of length $\tilde{O}(n^2)$, the size of the public key can be reduced to $\tilde{O}(n)$. 1 Introduction Main theorem. For an integer $n \ge 1$ and a real number $\varepsilon \ge 0$, consider the ‘learning from parity with error’ problem, defined as follows: the goal is to find an unknown $s \in \mathbb{Z}_2^n$ given a list of ‘equations with errors’ $\langle s, a_1 \rangle \approx_\varepsilon b_1 \pmod 2$, $\langle s, a_2 \rangle \approx_\varepsilon b_2 \pmod 2$, $\ldots$
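The ‘equations with errors’ in the main theorem can be generated with a short Python sketch. It assumes the usual reading of $\approx_\varepsilon$, namely that each equation is correct independently with probability $1 - \varepsilon$, and that the $a_i$ are uniform in $\mathbb{Z}_2^n$; the function name `parity_samples` and the parameters are hypothetical.

```python
import random

def parity_samples(s, m, eps, rng):
    """Return m pairs (a_i, b_i) with b_i = <s, a_i> mod 2, flipped with probability eps."""
    n = len(s)
    samples = []
    for _ in range(m):
        a = [rng.randrange(2) for _ in range(n)]
        b = sum(x * y for x, y in zip(s, a)) % 2
        if rng.random() < eps:
            b ^= 1  # this equation is one of the erroneous ones
        samples.append((a, b))
    return samples

# Toy instance (illustrative values only).
rng = random.Random(0)
secret = [1, 0, 1, 1, 0, 1]
noisy = parity_samples(secret, m=20, eps=0.1, rng=rng)
```

With `eps=0` the samples form an ordinary linear system over $\mathbb{Z}_2$ that elimination solves; the learning problem in the abstract replaces the modulus 2 by a larger one.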

Overview of Post-Quantum Public-Key Cryptosystems for Key Exchange

Overview of post-quantum public-key cryptosystems for key exchange. Annabell Kuldmaa. Supervised by Ahto Truu. December 15, 2015. Abstract In this report we review four post-quantum cryptosystems: the ring learning with errors key exchange, the supersingular isogeny key exchange, the NTRU and the McEliece cryptosystem. For each protocol, we introduce the underlying mathematical assumption, give an overview of the protocol and present some implementation results. We compare the implementation results on 128-bit security level with elliptic curve Diffie-Hellman and RSA. 1 Introduction The aim of post-quantum cryptography is to introduce cryptosystems which are not known to be broken using quantum computers. Most of today’s public-key cryptosystems, including the Diffie-Hellman key exchange protocol, rely on mathematical problems that are hard for classical computers, but can be solved on quantum computers using Shor’s algorithm. In this report we consider replacements for the Diffie-Hellman key exchange and introduce several quantum-resistant public-key cryptosystems. In Section 2 the ring learning with errors key exchange is presented which was introduced by Peikert in 2014 [1]. We continue in Section 3 with the supersingular isogeny Diffie–Hellman key exchange presented by De Feo, Jao, and Plut in 2011 [2]. In Section 5 we consider the NTRU encryption scheme first described by Hoffstein, Pipher and Silverman in 1996 [3]. We conclude in Section 6 with the McEliece cryptosystem introduced by McEliece in 1978 [4]. As NTRU and the McEliece cryptosystem are not originally designed for key exchange, we also briefly explain in Section 4 how we can construct key exchange from any asymmetric encryption scheme.

The Discrete Log Problem and Elliptic Curve Cryptography

THE DISCRETE LOG PROBLEM AND ELLIPTIC CURVE CRYPTOGRAPHY. NOLAN WINKLER. Abstract. In this paper, discrete log-based public-key cryptography is explored. Specifically, we first examine the Discrete Log Problem over a general cyclic group and algorithms that attempt to solve it. This leads us to an investigation of the security of cryptosystems based over certain specific cyclic groups: $\mathbb{F}_p$, $\mathbb{F}_p^\times$, and the cyclic subgroup generated by a point on an elliptic curve; we ultimately see the highest security comes from using $E(\mathbb{F}_p)$ as our group. This necessitates an introduction of elliptic curves, which is provided. Finally, we conclude with cryptographic implementation considerations. Contents: 1. Introduction; 2. Public-Key Systems Over a Cyclic Group G (2.1. ElGamal Messaging; 2.2. Diffie-Hellman Key Exchanges); 3. Security & Hardness of the Discrete Log Problem; 4. Algorithms for Solving the Discrete Log Problem (4.1. General Cyclic Groups; 4.2. The cyclic group $\mathbb{F}_p^\times$; 4.3. Subgroup Generated by a Point on an Elliptic Curve); 5. Elliptic Curves: A Better Group for Cryptography (5.1. Basic Theory and Group Law; 5.2. Finding the Order); 6. Ensuring Security (6.1. Special Cases and Notes); 7. Further Reading; 8. Acknowledgments; References. 1. Introduction In this paper, basic knowledge of number theory and abstract algebra is assumed. Additionally, rather than beginning from classical symmetric systems of cryptography, such as the famous Caesar or Vigenère ciphers, we assume a familiarity with these systems and why they have largely become obsolete on their own.
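The abstract mentions algorithms that attempt to solve the Discrete Log Problem in a general cyclic group. One standard generic method is baby-step giant-step, sketched below in Python for the group $\mathbb{F}_p^\times$. Whether the paper presents this particular algorithm is an assumption; the function name `baby_step_giant_step` and the small prime are illustrative only.

```python
import math

def baby_step_giant_step(g, h, p):
    """Return some x with g^x = h (mod p), or None if h is not a power of g.

    Uses about sqrt(p) time and memory, versus about p steps for brute force.
    """
    m = math.isqrt(p - 1) + 1            # m*m >= p - 1, the group order
    # Baby steps: remember g^j for 0 <= j < m.
    table = {}
    value = 1
    for j in range(m):
        table.setdefault(value, j)
        value = value * g % p
    # Giant steps: look for h * (g^-m)^i in the table.
    factor = pow(g, -m, p)               # modular inverse power (Python 3.8+)
    gamma = h % p
    for i in range(m):
        if gamma in table:
            return i * m + table[gamma]
        gamma = gamma * factor % p
    return None

# Illustrative instance with a small prime (hypothetical parameters).
p, g = 1019, 2
h = pow(g, 777, p)
x = baby_step_giant_step(g, h, p)
```

Because the method only multiplies group elements and compares them, it works in any cyclic group, including the elliptic curve subgroup the abstract mentions. Its square-root cost is why group sizes must be chosen large enough that even $\sqrt{|G|}$ operations are out of reach.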

Lattices That Admit Logarithmic Worst-Case to Average-Case Connection Factors

Lattices that Admit Logarithmic Worst-Case to Average-Case Connection Factors. Chris Peikert∗ Alon Rosen† April 4, 2007. Abstract We demonstrate an average-case problem that is as hard as finding $\gamma(n)$-approximate shortest vectors in certain $n$-dimensional lattices in the worst case, where $\gamma(n) = O(\sqrt{\log n})$. The previously best known factor for any non-trivial class of lattices was $\gamma(n) = \tilde{O}(n)$. Our results apply to families of lattices having special algebraic structure. Specifically, we consider lattices that correspond to ideals in the ring of integers of an algebraic number field. The worst-case problem we rely on is to find approximate shortest vectors in these lattices, under an appropriate form of preprocessing of the number field. For the connection factors $\gamma(n)$ we achieve, the corresponding decision problems on ideal lattices are not known to be NP-hard; in fact, they are in P. However, the search approximation problems still appear to be very hard. Indeed, ideal lattices are well-studied objects in computational number theory, and the best known algorithms for them seem to perform no better than the best known algorithms for general lattices. To obtain the best possible connection factor, we instantiate our constructions with infinite families of number fields having constant root discriminant. Such families are known to exist and are computable, though no efficient construction is yet known. Our work motivates the search for such constructions. Even constructions of number fields having root discriminant up to $O(n^{2/3-\epsilon})$ would yield connection factors better than $\tilde{O}(n)$.