TUGBOAT Volume 40, Number 2 / 2019 TUG 2019 Conference

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

A Method to Accommodate Backward Compatibility on the Learning Application-Based Transliteration to the Balinese Script

(IJACSA) International Journal of Advanced Computer Science and Applications, Vol. 12, No. 6, 2021. A Method to Accommodate Backward Compatibility on the Learning Application-based Transliteration to the Balinese Script. Gede Indrawan, I Ketut Paramarta, I Gede Nurhayata, Sariyasa. Department of Computer Science and Department of Balinese Language Education, Universitas Pendidikan Ganesha (Undiksha), Singaraja, Indonesia. Abstract: This research proposed a method to accommodate backward compatibility on the learning application-based transliteration to the Balinese Script. The objective is to accommodate the standard transliteration rules from the Balinese Language, Script, and Literature Advisory Agency. It is considered as the main contribution since there has not been a workaround in this research area. This multi-discipline collaboration work is one of the efforts to preserve digitally the endangered Balinese local language knowledge in Indonesia. The proposed method covered two aspects, i.e. (1) Its backward compatibility allows for interoperability at a certain level with the older transliteration rules; and (2) Breaking backward compatibility at a certain level is unavoidable since, for the same aspect, there is a contradictory treatment between the standard […] transliteration rules (for short, the older rules) from The Balinese Alphabet document (footnote 1). It exposes the backward compatibility method to accommodate the standard transliteration rules (for short, the standard rules) from the Balinese Language, Script, and Literature Advisory Agency [7]. This Bali Province government agency [4] carries out guidance and formulates programs for the maintenance, study, development, and preservation of the Balinese Language, Script, and Literature. This study was conducted on the developed web-based transliteration learning application, BaliScript, for further ubiquitous Balinese Language learning since the proposed method is reusable for the mobile application [8], [9].

Free and Open Source Software Codes for Antenna Design: Preliminary Numerical Experiments

ISSN 2255-9159 (online), ISSN 2255-9140 (print). Electrical, Control and Communication Engineering 2019, vol. 15, no. 2, pp. 88–95. doi: 10.2478/ecce-2019-0012, https://content.sciendo.com. Free and Open Source Software Codes for Antenna Design: Preliminary Numerical Experiments. Alessandro Fedeli* (Assistant Professor, University of Genoa, Genoa, Italy), Claudio Montecucco (Consultant, Atel Antennas s.r.l., Arquata Scrivia, Italy), Gian Luigi Gragnani (Associate Professor, University of Genoa, Genoa, Italy). Abstract: In both industrial and scientific frameworks, free and open source software codes create novel and interesting opportunities in computational electromagnetics. One of the possible applications, which usually requires a large set of numerical tests, is related to antenna design. Despite the well-known advantages offered by open source software, there are several critical points that restrict its practical application. First, the knowledge of the open source programs is often limited. Second, by using open source packages it is sometimes not easy to obtain results with a high level of confidence, and to integrate open source modules in the production workflow. In the paper, a discussion about open source programs for antenna design is carried out. […] the natural choice when ethical concerns about science diffusion and reproducibility [2] are faced. For these reasons, work has started, aiming to identify and evaluate possible open source programs that can be usefully employed for the design of antennas and possibly other passive electromagnetic devices such as, for example, filters, couplers, impedance adapters. The research also aims at finding software that allows for the pre- and post-processing of data. Furthermore, it has been decided to consider the possibility of easily integrating the design into the complete production and […]

Open Source License Report on the Product

OPEN SOURCE LICENSE REPORT ON THE PRODUCT. The software included in this product contains copyrighted software that is licensed under the GPLv2, GPLv3, gSOAP Public License, jQuery, PHP License 3.01, FTL, BSD 3-Clause License, Public Domain, MIT License, OpenSSL Combined License, Apache 2.0 License, zlib/libpng License. You may obtain the complete corresponding source code from us for a period of three years after our last shipment of this product by sending email to: [email protected] If you want to obtain the complete corresponding source code with a physical medium such as CD-ROM, the cost of physically performing source distribution might be charged. For more details about Open Source Software, refer to eneo website at www.eneo-security.com, the product CD and manuals. GPLv2: u-Boot 2013.07, Linux Kernel 3.10.55, busybox 1.20.2, ethtool 3.10, e2fsprogs 1.41.14, mtd-utils 1.5.2, lzo 2.05, nfs-utils 1.2.7, cryptsetup 1.6.1, udhcpd 0.9.9. GPLv3: pwstrength 2.0.4. gSOAP Public License: gSOAP 2.8.10. jQuery License: JQuery 2.1.1, JQuery UI 1.10.4. PHP: PHP 5.4.4. FTL (FreeType License): freetype 2.4.10. BSD: libtirpc 0.2.3, rpcbind 0.2.0, lighttpd 1.4.32, hdparm 9,45, hostpad 2, wpa_supplicant 2, jsbn 1.4. Public Domain: sqlite 3.7.17. zlib: zlib 1.2.5. MIT: pwstrength 2.0.4, ezxml 0.8.6, bootstrap 3.3.4, jquery-fullscreen 1.1.5, jeditable 1.7.1, jQuery jqGrid 4.6.0, fullcalendar 2.2.0, datetimepicker 4.17.42, clockpicker 0.0.7, dataTables 1.0.2, dropzone 3.8.7, iCheck 1.0.2, ionRangeSlider 2.0.13, metisMenu 2.0.2, slimscroll 1.3.6, sweetalert 2015.11, Transitionize 0.0.2, switchery 0.0.2, toastr 2.1.0, animate 3.5.0, font-awesome 4.3.0, Modernizr 2.7.1, pace 1.0.0. OpenSSL Combined: openssl 1.0.1h. Apache license 2.0: datepicker 1.4.0, mDNSResponder 379.32.1. GNU GENERAL PUBLIC […] wish), that you receive source code or can get it if you want it, […] reflect on the original authors' reputations.

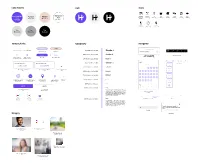

Color Palette Typography Header 1 Navigation Imagery Logo

Color Palette Logo Icons Background Brand/Highlight Highlight Highlight Dates Page/ Restaurant Bar Coffee Movies The Arts Music Sports /Text Date on date (location tag) (location tag) (location tag) (location tag) (location tag) (location tag) (location tag) /Text #EDEDED #F1D7CA plan page #6A42ED #FFFFFF Outdoors Time Location (location tag) (Date plan page) (Date plan page) Text Text Text #DDDDDD #AAAAAA #000000 Buttons/Links Typography Navigation Button Button Ready to go on a date? Open drop down menu Close drop down menu Source Serif Pro, s: 36, Bold Header 1 4 1 Inactive primary CTA Secondary CTA Enable “Set Up a Date” Feature Private until you both have enabled Button Button IBM Plex Sans, s: 30, Semi-Bold Header 2 Popup with the Enable a date Bottom navigation menu feature on the match chat page Inactive toggle state Active toggle state Primary CTA Tertiary CTA and Filters (enable setup feature) (enable setup feature) IBM Plex Sans, s: 21, Semi-Bold Header 3 Activity Price Rating April Source Serif Pro, s: 24, Bold Subheader 1 Who do you want to date? Who do you want to date? 
Recommended Filters (expanded) S M T W T F S Select a Match Erin Matthews New Spots IBM Plex Sans, s: 18, Semi-Bold Subheader 2 1 2 3 4 Upcoming Events Setup a Date Buttons (nothing Setup a Date Buttons (selection Close button (used for popout selected made) screens) Get a Drink IBM Plex Sans, s: 15, Semi-Bold Subheader 3 5 6 7 8 9 10 11 Get some Food 12 13 14 15 16 17 18 IBM Plex Sans, s: 24, Semi-Bold Button 1 See a Movie Add Add 19 20 21 22 23 24 25 Get Outside Add button for set up a date Add button for set up a date Location selector on Location selector on IBM Plex Sans, s: 13, Semi-Bold Button 2 Back button 26 27 28 29 30 Sports options (inactive) options (active) map view (current) map view (not-current) Listen to Music IBM Plex Sans, s: 9, Medium Button 3 May Add 6:00 PM 6:00 PM S M T W T F S IBM Plex Sans, s: 14, Medium Body 1 Setup a date button (selected Setup a date button This is an example of a body text paragraph. -

XeTeX Live

XeTeX Live. Jonathan Kew, SIL International, Horsleys Green, High Wycombe, Bucks HP14 3XL, England. jonathan_kew (at) sil dot org. 1 XeTeX in TeX Live. The release of TeX Live 2007 marked a milestone for the XeTeX project, as the first major TeX distribution to include XeTeX (version 0.996) as an integral part. Prior to this, XeTeX was a tool that could be added to a TeX setup, but version and configuration differences meant that it was difficult to ensure smooth integration in all cases, and it was only available for users who specifically chose to seek it out and install it. (One exception to this is the MacTeX package, which has included XeTeX for the past year or so, but this was just one distribution on one platform.) Integration […] in the preamble are sufficient to set the typefaces throughout the document. These fonts were installed by simply dropping the .otf or .ttf files in the computer’s Fonts folder; no .tfm, .fd, .sty, .map, or other TeX-related files had to be created or installed. Release 0.996 of XeTeX also provides some enhancements over earlier, pre-TeX Live versions. In particular, there are new primitives for low-level access to glyph information (useful during font development and testing); some preliminary support for the use of OpenType math fonts (such as the Cambria Math font shipped with MS Office 2007); and a variety of bug fixes.

Variable First

Variable first Baptiste Guesnon Designer & Design Technologist at Söderhavet A small text Text with hyphen- ation Gulliver, Gerard Unger, 1993 Screens are variable first Static units Relative units Pica, type points, %, viewport width, pixels, cm… viewport height Why should type be static… …while writing is organic? Century Expanded plomb from 72 pt to 4 pt and putted at a same scale Image by Nick Sherman Through our experience with traditional typesetting methods, we have come to expect that the individual letterforms of a particular typeface should always look the same. This notion is the result of a technical process, not the other way round. However, there is no technical reason for making a digital letter the same every time it is printed. Is Best Really Better, Erik van Blokland & Just van Rossum First published in Emigre magazine issue 18, 1990 Lettering ? Typography Potential of variations in shape a same letter [————— 500 years of type technology evolution —————] Metal / Ink / Paper / Punch & Counterpunch / Pantograph / Linotype & Monotype / etc. Opentype Gutenberg Postscript Photocompo. Variable Fonts Change is the rule in the computer, stability the exception. Jay David Bolter, Writing Space, 1991 Fedra outlines, Typotheque Metafont Postscript Truetype GX Opentype 1.8 (Variable Fonts) Multiple Master Ikarus Fonts HZ Program Webfont Opentype (.woff) Now 1970 1975 1980 1985 1990 1995 2000 2005 2010 Punk/Metafont Beowolf Digital Lettering Donald Knuth Letteror Letteror 1988 1995 2015 HZ-program Indesign glyph scaling glyph scaling Skia, Matthew Carter for Mac OS, 1993 How to make it work? The Stroke, Gerrit Noordzij, 1985 Metafont book, Donald Knuth Kalliculator, 2007, Frederik Berlaen Beowolf, Letteror, 1995 Parametric fonts ≠ Variable fonts Designing instances vs. -

The Unicode Cookbook for Linguists: Managing Writing Systems Using Orthography Profiles

Zurich Open Repository and Archive, University of Zurich, Main Library, Strickhofstrasse 39, CH-8057 Zurich, www.zora.uzh.ch. Year: 2017. The Unicode Cookbook for Linguists: Managing writing systems using orthography profiles. Moran, Steven; Cysouw, Michael. DOI: https://doi.org/10.5281/zenodo.290662. Posted at the Zurich Open Repository and Archive, University of Zurich. ZORA URL: https://doi.org/10.5167/uzh-135400. Monograph. The following work is licensed under a Creative Commons: Attribution 4.0 International (CC BY 4.0) License. Originally published at: Moran, Steven; Cysouw, Michael (2017). The Unicode Cookbook for Linguists: Managing writing systems using orthography profiles. CERN Data Centre: Zenodo. Preface: This text is meant as a practical guide for linguists and programmers who work with data in multilingual computational environments. We introduce the basic concepts needed to understand how writing systems and character encodings function, and how they work together. The intersection of the Unicode Standard and the International Phonetic Alphabet is often not met without frustration by users. Nevertheless, the two standards have provided language researchers with a consistent computational architecture needed to process, publish and analyze data from many different languages. We bring to light common, but not always transparent, pitfalls that researchers face when working with Unicode and IPA. Our research uses quantitative methods to compare languages and uncover and clarify their phylogenetic relations. However, the majority of lexical data available from the world’s languages is in author- or document-specific orthographies.

OpenType PostScript Fonts with Unusual Units-Per-Em Values

Luigi Scarso, VOORJAAR 2010, 73. OpenType PostScript fonts with unusual units-per-em values. Abstract: OpenType fonts with PostScript outlines are usually defined in a dimensionless workspace of 1000×1000 units per em (upm). Adobe Reader exhibits a strange behaviour with pdf documents that embed an OpenType PostScript font with unusual upm: this paper describes a solution implemented by LuaTeX that resolves this problem. Keywords: LuaTeX, ConTeXt Mark IV, OpenType, FontMatrix. Introduction: OpenType is a font format that encompasses three kinds of widely used fonts: 1. outline fonts with cubic Bézier curves, sometimes referred to as CFF fonts or PostScript fonts; 2. outline fonts with quadratic Bézier curves, sometimes referred to as TrueType fonts; 3. bitmap fonts. Nowadays in digital typography an outline font is almost the only choice and there is no longer a relevant differ[…] Symbola is an example of an OpenType font with TrueType outlines which has been designed to match the style of the Computer Modern font. A brief note about bitmap fonts: among others, Adobe has published a “Glyph Bitmap Distribution Format (BDF)” [2] and with fontforge it’s easy to convert a bdf font into an OpenType one without outlines. A fairly complete bdf font is http://unifoundry.com/unifont-5.1.20080820.bdf.gz: this file can be converted to an OpenType format unifontmedium.otf with fontforge and it can be inspected with showttf, a C program from [3]. Here is an example of glyph U+26A5 MALE AND FEMALE SIGN: Glyph 9887 ( uni26A5) starts at 492 length=17 height=12 width=8 sbX=4 sbY=10 advance=16 Bit aligned .....*** ......** .....*.* ..***... .*...*.

Travels in TeX Land: Choosing a TeX Environment for Windows

The PracTeX Journal, TPJ 2005 No 02, 2005-04-15, Rev. 2005-04-17. Travels in TeX Land: Choosing a TeX Environment for Windows. David Walden. © 2005 David C. Walden. The author of this column wanders through the world of TeX, as a non-expert, reporting what he observes and learns, which hopefully will be interesting to other non-expert users of TeX. 1 Introduction. This column recounts my experiences looking at and thinking about different ways TeX is set up for users to go through the document-composition to typesetting cycle (input and edit, compile, and view or print). First, I’ll describe my own experience randomly trying various TeX environments. I suspect that some other users have had a similar introduction to TeX; and perhaps other users have just used the environment that was available at their workplace or school. Then I’ll consider some categories for thinking about options in TeX setups. Last, I’ll suggest some follow-on steps. Since I use Microsoft Windows as my computer operating system, this note focuses on environments that are available for Windows. (Footnote 1: But see my offer in Section 4.) 2 My random path to choosing a TeX environment. (Footnote 2: While I started using TeX, I switched from TeX to using LaTeX as soon as I discovered LaTeX existed. Since both TeX and LaTeX are operated in the same way, I’ll mostly refer to TeX in this note, since that is the more basic system.) I started using TeX in the late 1990s. I don’t quite remember my first setup for trying TeX.

Master Thesis

Master thesis to obtain a Master of Science Degree in Informatics and Communication Systems from the Merseburg University of Applied Sciences. Subject: Tunisian truck license plate recognition using an Android Application based on Machine Learning as a detection tool. Author: Achraf Boussaada (Matr.-Nr.: 23542). Supervisors: Prof. Dr.-Ing. Rüdiger Klein, Prof. Dr. Uwe Schröter. Table of contents: Chapter 1: Introduction (1.1 General Introduction; 1.2 Problem formulation; 1.3 Objective of Study). Chapter 2: Analysis (2.1 Methodological approaches; 2.1.1 Actual approach; 2.1.2 Image Processing with OCR; […])

An Accuracy Examination of OCR Tools

International Journal of Innovative Technology and Exploring Engineering (IJITEE), ISSN: 2278-3075, Volume-8, Issue-9S4, July 2019. An Accuracy Examination of OCR Tools. Jayesh Majumdar, Richa Gupta. Abstract: In this research paper, the authors have aimed to do a comparative study of optical character recognition using different open source OCR tools. The optical character recognition (OCR) method has been used in extracting the text from images. OCR has various applications, which include extracting text from any document or image, or simply reading and processing the text available in digital form. The accuracy of OCR can depend on text segmentation and pre-processing algorithms. Sometimes it is difficult to retrieve text from the image because of differing size, style, and orientation, a complex image background, etc. The authors tried to extract vehicle numbers from vehicle number plates using various OCR tools such as Tesseract, GOCR, Ocrad and TensorFlow. The authors have tried to identify the best possible method for optical character recognition and have provided a comparative analysis of the tools' accuracy. Keywords: OCR tools; Ocrad; GOCR; TensorFlow; Tesseract. I. Introduction. Optical character recognition is a method with which text in images of handwritten documents, scripts, passport documents, invoices, vehicle number plates, bank statements, computerized receipts, business cards, mail, printouts of static data, any appropriate documentation or any picture with text in it gets processed and the text in the picture is […] texts, pen computing, developing technologies for assisting the visually impaired, making electronic images of hard copies searchable, defeating or evaluating the robustness of CAPTCHA. Fig. 1: Functioning of OCR [2]. II. OCR Procedure and Processing. To improve the probability of successful processing of an image, the input image is often ‘pre-processed’; it may be de-skewed or despeckled.

Government of India Atomic Energy Commission Annual

B.A.R.C.-1263. Government of India, Atomic Energy Commission. Annual Progress Report for 1984 of Theoretical Physics Division, by B. P. Rastogi, S. V. G. Menon and R. P. Jain. Bhabha Atomic Research Centre, Bombay, India, 1985. INIS Subject Category: E2100. Descriptors: BARC; RESEARCH PROGRAMS; REACTOR KINETICS; T CODES; C CODES; MULTIGROUP THEORY; NEUTRON DIFFUSION EQUATION; REACTOR SAFETY; THERMONUCLEAR REACTIONS; FEYNMAN PATH INTEGRALS; TRANSPORT THEORY; FOKKER-PLANCK EQUATION. Abstract: This report presents a resume of the work done in the Theoretical Physics Division during the calendar year 1984. The report is divided into two parts, namely, Nuclear Technology and Mathematical Physics. The topics covered are described by brief summaries. A list of research publications and papers presented in symposia/workshops is also included. Table of contents: Nuclear Technology: 1.1 Research and Development for Reactor Physics Analysis of Large PHWRs; 1.2 Development of a Computer Code CLUBPU for Calculating Hyperfine Power Distribution in Fuel Pins of PHWR Fuel Cluster; 1.3 Burnup Analysis of 28- and 37-Rod Fuel Cluster for 500 MWe PHWR; 1.4 Computer Code Development for Fuel Management of PHWRs; 1.5 Adaptation of TRIVENI and TUMULT on ND-Computer; 1.6 Adequacy of One Bundle as Mesh in TAQUIL Code