Gifnets: Differentiable GIF Encoding Framework

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Indexed Color

Indexed Color. A browser may support only a certain number of specific colors, creating a palette from which to choose. Figure 3.11: The Netscape color palette. QUIZ: How many bits are needed to represent this palette? Show your work. How to digitize a picture: • Sample it → Represent it as a collection of individual dots called pixels • Quantize it → Represent each pixel as one of 2^24 possible colors (TrueColor). Resolution = the number of pixels used to represent a picture. Digitized Images and Graphics: Whole picture. Figure 3.12: A digitized picture composed of many individual pixels. Digitized Images and Graphics: Magnified portion of the picture. See the pixels? Hands-on: paste the high-res image from the previous slide in Paint, then choose ZOOM = 800. QUIZ: Images. A low-res image has 200 rows and 300 columns of pixels. • What is the resolution? • If the pixels are represented in True-Color, what is the size of the file? • Same question in High-Color. Two types of image formats: • Raster Graphics = Storage on a pixel-by-pixel basis • Vector Graphics = Storage in vector (i.e. mathematical) form. Raster Graphics, GIF format: • Each image is made up of only 256 colors (indexed color, similar to a palette!) • But they can be a different 256 for each image! • Supports animation! • Optimal for line art. PNG format (“ping” = Portable Network Graphics): Like GIF but achieves greater compression with a wider range of color depth. No animations. Bitmap format: Contains the pixel color -
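The quiz arithmetic in the slides above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper names `bits_for_palette` and `raw_image_bytes` are hypothetical, True-Color is taken as 24 bits per pixel and High-Color as 16 bits per pixel, and the size counts raw pixel data only (no file header, no compression).

```python
def bits_for_palette(num_colors: int) -> int:
    """Smallest number of bits that can index num_colors palette entries."""
    if num_colors < 1:
        raise ValueError("a palette needs at least one color")
    return max(1, (num_colors - 1).bit_length())


def raw_image_bytes(rows: int, cols: int, bits_per_pixel: int) -> int:
    """Bytes of uncompressed pixel data, ignoring headers and row padding."""
    total_bits = rows * cols * bits_per_pixel
    return (total_bits + 7) // 8  # round up to whole bytes


if __name__ == "__main__":
    rows, cols = 200, 300                      # the low-res image from the quiz
    print("pixels:", rows * cols)
    print("True-Color bytes:", raw_image_bytes(rows, cols, 24))
    print("High-Color bytes:", raw_image_bytes(rows, cols, 16))
    print("bits for a 256-color palette:", bits_for_palette(256))
```

A real image file on disk would normally differ from these figures, since formats such as GIF and PNG add headers and compress the pixel data.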

The Effects of Learning Rate Schedules on Color Quantization

THE EFFECTS OF LEARNING RATE SCHEDULES ON COLOR QUANTIZATION by Garrett Brown A thesis presented to the Department of Computer Science and the Graduate School of the University of Central Arkansas in partial fulfillment of the requirements for the degree of Master of Science in Computer Science Conway, Arkansas May 2020 ProQuest Number:27834147 All rights reserved INFORMATION TO ALL USERS The quality of this reproduction is dependent on the quality of the copy submitted. In the unlikely event that the author did not send a complete manuscript and there are missing pages, these will be noted. Also, if material had to be removed, a note will indicate the deletion. ProQuest 27834147 Published by ProQuest LLC (2020). Copyright of the Dissertation is held by the Author. All Rights Reserved. This work is protected against unauthorized copying under Title 17, United States Code Microform Edition © ProQuest LLC. ProQuest LLC 789 East Eisenhower Parkway P.O. Box 1346 Ann Arbor, MI 48106 - 1346 TO THE OFFICE OF GRADUATE STUDIES: The members of the Committee approve the thesis of Garrett Brown presented on March 31, 2020. M. Emre Celebi, Ph.D., Committee Chairperson Ahmad Patooghy, Ph.D. Mahmut Karakaya, Ph.D. PERMISSION Title The Effects of Learning Rate Schedules on Color Quantization Department Computer Science Degree Master of Science In presenting this thesis/dissertation in partial fulfillment of the requirements for a graduate degree from the University of Central Arkansas, I agree that the Library of this University shall make it freely available for inspections. I further agree that permission for extensive copying for scholarly purposes may be granted by the professor who supervised my thesis/dissertation work, or, in the professor’s absence, by the Chair of the Department or the Dean of the Graduate School. -

A Comprehensive Benchmark for Single Image Compression Artifact Reduction

IEEE TRANSACTIONS ON IMAGE PROCESSING, VOL. 29, 2020 7845 A Comprehensive Benchmark for Single Image Compression Artifact Reduction Jiaying Liu, Senior Member, IEEE, Dong Liu, Senior Member, IEEE, Wenhan Yang, Member, IEEE, Sifeng Xia, Student Member, IEEE, Xiaoshuai Zhang, and Yuanying Dai Abstract— We present a comprehensive study and evaluation of existing single image compression artifact removal algorithms using a new 4K resolution benchmark. This benchmark is called the Large-Scale Ideal Ultra high-definition 4K (LIU4K), and it includes diversified foreground objects and background scenes with rich structures. Compression artifact removal, as a common post-processing technique, aims at alleviating undesirable artifacts, such as blockiness, ringing, and banding caused by quantization and approximation in the compression process. In this work, a systematic listing of the reviewed methods is presented based on their basic models (handcrafted models and deep networks). The main contributions and novelties of these methods are highlighted, and the main development directions are summarized, including architectures, multi-domain sources, [...] storage processes to save bandwidth and resources. Based on human visual properties, the codecs make use of redundancies in spatial, temporal, and transform domains to provide compact approximations of encoded content. They effectively reduce the bit-rate cost but inevitably lead to unsatisfactory visual artifacts, e.g. blockiness, ringing, and banding. These artifacts are derived from the loss of high-frequency details during the quantization process and the discontinuities caused by block-wise batch processing. These artifacts not only degrade user visual experience, but they are also detrimental for successive image processing and computer vision tasks. In our work, we focus on the degradation of compressed images. -

A New Steganographic Method for Palette-Based Images

IS&T's 1999 PICS Conference A New Steganographic Method for Palette-Based Images Jiri Fridrich Center for Intelligent Systems, SUNY Binghamton, Binghamton, New York Abstract In this paper, we present a new steganographic technique for embedding messages in palette-based images, such as GIF files. The new technique embeds one message bit into one pixel (its pointer to the palette). The pixels for message embedding are chosen randomly using a pseudo-random number generator seeded with a secret key. For each pixel at which one message bit is to be embedded, the palette is searched for closest colors. The closest color with the same parity as the message bit is then used instead of the original color. This has the advantage that both the overall change due to message embedding and the maximal change in colors of pixels is smaller than in methods that perturb the least significant bit of indices to a luminance-sorted palette, such as EZ Stego [1]. Indeed, numerical experiments indicate that the new technique introduces approximately four times [...] messages. The gaps in human visual and audio systems can be used for information hiding. In the case of images, the human eye is relatively insensitive to high frequencies. This fact has been utilized in many steganographic algorithms, which modify the least significant bits of gray levels in digital images or digital sound tracks. Additional bits of information can also be inserted into coefficients of image transforms, such as discrete cosine transform, Fourier transform, etc. Transform techniques are typically more robust with respect to common image processing operations and lossy compression. The steganographer’s job is to make the secretly hidden information difficult to detect given the complete knowledge of the algorithm used to embed the information except the secret embedding key.* This so called Kerckhoff’s principle is the golden rule of cryptography and is often accepted for steganography as well. -
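The embedding rule described in the abstract above (pick pixels with a keyed pseudo-random generator, then swap each chosen pixel to the closest palette color whose parity equals the message bit) can be sketched as follows. This is a minimal sketch, not the paper's implementation. Several details are assumptions because the excerpt does not give them: parity is taken as (R + G + B) mod 2, closeness is squared Euclidean distance in RGB, and Python's `random.Random` stands in for the keyed generator (it is not cryptographically secure). All function names are hypothetical.

```python
import random


def parity(color):
    """Assumed parity of a palette color: (R + G + B) mod 2."""
    return sum(color) % 2


def dist2(a, b):
    """Squared Euclidean distance between two RGB triples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def embed_bit(palette, index, bit):
    """Return the index of the palette color closest to palette[index]
    whose parity equals the message bit."""
    original = palette[index]
    candidates = [i for i, c in enumerate(palette) if parity(c) == bit]
    if not candidates:
        raise ValueError("palette has no color with the required parity")
    return min(candidates, key=lambda i: dist2(palette[i], original))


def embed(palette, indices, bits, key):
    """Embed one bit per pseudo-randomly chosen pixel; returns new indices."""
    rng = random.Random(key)  # assumption: stand-in for the keyed PRNG
    positions = rng.sample(range(len(indices)), len(bits))
    stego = list(indices)
    for pos, bit in zip(positions, bits):
        stego[pos] = embed_bit(palette, stego[pos], bit)
    return stego


def extract(palette, indices, n_bits, key):
    """Recover the bits by re-deriving the pixel positions from the key."""
    rng = random.Random(key)
    positions = rng.sample(range(len(indices)), n_bits)
    return [parity(palette[indices[p]]) for p in positions]
```

Because a pixel whose color already has the right parity is at distance zero from itself, it is left visually unchanged; only pixels with the wrong parity move to a neighboring palette color.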

Understanding Image Formats and When to Use Them

Understanding Image Formats And When to Use Them Are you familiar with the extensions after your images? There are so many image formats that it’s easy to get confused! File extensions like .jpeg, .bmp, .gif, and more can be seen after an image’s file name. Most of us disregard them, thinking these image formats have no significance. But they are all different and not cross-compatible. These image formats have their own pros and cons. They were created for specific, yet different purposes. What’s the difference, and when is each format appropriate to use? Every graphic you see online is an image file. Most everything you see printed on paper, plastic or a t-shirt came from an image file. These files come in a variety of formats, and each is optimized for a specific use. Using the right type for the right job means your design will come out picture perfect and just how you intended. The wrong format could mean a bad print or a poor web image, a giant download or a missing graphic in an email. Most image files fit into one of two general categories—raster files and vector files—and each category has its own specific uses. This breakdown isn’t perfect. For example, certain formats can actually contain elements of both types. But this is a good place to start when thinking about which format to use for your projects. Raster Images Raster images are made up of a set grid of dots called pixels where each pixel is assigned a color. -

Amiga Graphics Reference Card 2Nd Edition

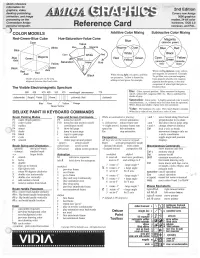

Amiga Graphics Reference Card, 2nd Edition. Quick reference information for graphics, video, desktop publishing, animation, and image processing on the Commodore Amiga personal computer. Covers new Amiga 3000 graphics modes, 24-bit color hardware, DOS 2.0 overscan, and PAL. COLOR MODELS [Figure panels: Additive Color Mixing; Subtractive Color Mixing; Red-Green-Blue Cube; Hue-Saturation-Value Cone] Additive Color Mixing: When mixing light, red, green, and blue are primaries. Yellow is formed by adding red and green, for example. Subtractive Color Mixing: When mixing pigments, cyan, yellow, and magenta are primaries. Example: To get blue, mix cyan and magenta. Cyan pigment absorbs red, magenta pigment absorbs green, so the only component of white light that gets reflected is blue. Red-Green-Blue Cube: Shades of gray are on the long diagonal between black and white. [Figure: The Visible Electromagnetic Spectrum; axis: wavelength (nanometers), 380 to 770, from ultraviolet to infrared] Hue: Color; spectral position. Often measured in degrees; red=0°, yellow=60°, magenta=300°, etc. Hue is undefined for shades of gray. Saturation: Color purity. A highly-saturated color is nearly monochromatic, i.e., contains only one color from the spectrum. White, black, and shades of gray have zero saturation. Value: The darkness of a color. How much black it contains. White has a value of one, black has a value of zero. DELUXE PAINT III KEYBOARD COMMANDS: Brush Painting Modes; Page and Screen Commands; While an animation is playing. [...] move brush along fixed axis -

Learning a Virtual Codec Based on Deep Convolutional Neural

JOURNAL OF LATEX CLASS FILES 1 Learning a Virtual Codec Based on Deep Convolutional Neural Network to Compress Image Lijun Zhao, Huihui Bai, Member, IEEE, Anhong Wang, Member, IEEE, and Yao Zhao, Senior Member, IEEE Abstract—Although deep convolutional neural network has been proved to efficiently eliminate coding artifacts caused by the coarse quantization of traditional codec, it’s difficult to train any neural network in front of the encoder for gradient’s back-propagation. In this paper, we propose an end-to-end image compression framework based on convolutional neural network to resolve the problem of non-differentiability of the quantization function in the standard codec. First, the feature description neural network is used to get a valid description in the low-dimension space with respect to the ground-truth image so that the amount of image data is greatly reduced for storage or transmission. After image’s valid description, standard image codec such as JPEG is leveraged to further compress image, which leads to image’s great distortion and compression artifacts, especially blocking artifacts, detail missing, blurring, and ringing artifacts. [...] parameters are always assigned to the codec in order to achieve low bit-rate coding leading to serious blurring and ringing artifacts [4–6], when the transmission band-width is very limited. In order to alleviate the problem of Internet transmission congestion [7], advanced coding techniques, such as de-blocking, and post-processing [8], are still the hot and open issues to be researched. The post-processing technique for compressed image can be explicitly embedded into the codec to improve the coding efficiency and reduce the artifacts caused by the coarse quantization. For instance, adaptive de-blocking filtering is designed as a loop filter and integrated into H.264/MPEG-4 AVC video coding standard [9], which does not require an -

Certified Digital Designer Professional Certification Examination Review

Digital Imaging & Editing and Digital & General Photography Certified Digital Designer Professional Certification Examination Review Within this presentation – We will use specific names and terminologies. These will be related to specific products, software, brands and trade names. ADDA does not endorse any specific software or manufacturer. It is the sole decision of the individual to choose and purchase based on their personal preference and financial capabilities. The Examination: The examination contains a total of 325 questions. • 200 questions in Digital Image Creation and Editing (image editing is applicable to all areas related to digital graphics) • 125 questions in Photography Knowledge and History (photography is applicable to general principles of photography; does not cover photography as a general arts program) • The examination is based on entry-level, intermediate employment knowledge • Certain processes may be omitted that are required to achieve an end result. ADDA Professional Certification Series – Digital Imaging & Editing. The Examination: • Knowledge of graphic and photography acronyms • Knowledge of graphic program tool symbols • Some knowledge of photography lighting • Ability to do some basic geometric calculations • Basic knowledge of graphic history and theory • Basic knowledge of digital and standard film cameras • Basic knowledge of camera lens and operation • General knowledge of computer operation • Some common sense. This is the Comprehensive Digital Imaging & Editing Certified Digital Designer Professional Certification Examination Review. -

A Framework for Detection of Linear Gradient Filled Regions and Their

21st International Conference on Computer Graphics, Visualization and Computer Vision 2013 A framework for detection of linear gradient filled regions and their reconstruction for vector graphics Ruchin Kansal, Department of computer science, IIT Delhi, India, New Delhi; Prof. Subodh Kumar, Department of computer science, IIT Delhi, India, New Delhi ABSTRACT Linear gradients are commonly applied in non-photographic art-work for shading and other artistic effects. It is sometimes necessary to generate a vector graphics form of raster images comprising such art-work with the expectation to obtain a simple output that is further editable, say, using any popular vector editing software. Further, this vectorization process should be automatic with minimal user intervention. We present a simple image vectorization algorithm that meets these requirements by detecting linear gradient filled regions and reconstructing the vector definition using that information. It uses a novel interval gradient optimization scheme to derive large regions of uniform gradient. We also demonstrate the technique on noisy images. Keywords: Image vectorization, Gradient reconstruction, Region detection. With the increasing demand for vector content, the need for converting raster images into vectors has also increased. This process is called Vectorization. We set out to obtain automatic vectorization with minimal human intervention even on potentially noisy images. Later re-rasterization of our vector representation should produce the appearance of the original image at various scales. Further, for wide-spread usage, this vector representation should be in a standard form and be editable. [Figure panels: (a) Original Image; (b) Adobe Livetrace output] -

New Image Compression Artifact Measure Using Wavelets

New image compression artifact measure using wavelets Yung-Kai Lai and C.-C. Jay Kuo, Integrated Media Systems Center, Department of Electrical Engineering-Systems, University of Southern California, Los Angeles, CA 90089-2564; Jin Li, Sharp Labs. of America, 5750 NW Pacific Rim Blvd., Camas, WA 98607 ABSTRACT Traditional objective metrics for the quality measure of coded images such as the mean squared error (MSE) and the peak signal-to-noise ratio (PSNR) do not correlate with the subjective human visual experience well, since they do not take human perception into account. Quantification of artifacts resulting from lossy image compression techniques is studied based on a human visual system (HVS) model, and the time-space localization property of the wavelet transform is exploited to simulate HVS in this research. As a result of our research, a new image quality measure by using the wavelet basis function is proposed. This new metric works for a wide variety of compression artifacts. Experimental results are given to demonstrate that it is more consistent with human subjective ranking. KEYWORDS: image quality assessment, compression artifact measure, visual fidelity criterion, human visual system (HVS), wavelets. 1 INTRODUCTION The objective of lossy image compression is to store image data efficiently by reducing the redundancy of image content and discarding unimportant information while keeping the quality of the image acceptable. Thus, the tradeoff of lossy image compression is the number of bits required to represent an image and the quality of the compressed image. -
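For reference, the two traditional metrics named in the abstract above, MSE and PSNR, can be computed as in the sketch below. It uses the standard textbook definitions rather than anything specific to this paper, and it does not attempt the wavelet-based measure the authors propose. The function names and the flat-list image representation are assumptions for illustration; `peak` defaults to 255 on the assumption of 8-bit samples.

```python
import math


def mse(reference, distorted):
    """Mean squared error between two equal-length sequences of samples."""
    if len(reference) != len(distorted) or not reference:
        raise ValueError("inputs must be non-empty and the same length")
    total = sum((r - d) ** 2 for r, d in zip(reference, distorted))
    return total / len(reference)


def psnr(reference, distorted, peak=255):
    """Peak signal-to-noise ratio in decibels; infinite for identical inputs."""
    err = mse(reference, distorted)
    if err == 0:
        return math.inf
    return 10 * math.log10(peak ** 2 / err)


if __name__ == "__main__":
    original = [52, 55, 61, 66, 70, 61, 64, 73]   # made-up sample values
    decoded = [50, 56, 60, 68, 70, 60, 66, 72]
    print("MSE:", mse(original, decoded))
    print("PSNR (dB):", psnr(original, decoded))
```

Both metrics compare samples one by one and ignore where an error occurs or how visible it is, which is the shortcoming the abstract points to.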

The Rendering Pipeline

The Rendering Pipeline. Framebuffers: • Framebuffer is the interface between the device and the computer’s notion of an image • A memory array in which the computer stores an image – On most computers, separate memory bank from main memory – Many different variations, motivated by cost of memory. Framebuffers: True-Color • A true-color (aka 24-bit or 32-bit) framebuffer stores one byte each for red, green, and blue • Each pixel can thus be one of 2^24 colors • Pay attention to Endian-ness • How can 24-bit and 32-bit mean the same thing here? Framebuffers: Indexed-Color • An indexed-color (8-bit or PseudoColor) framebuffer stores one byte per pixel (also: GIF image format) • This byte indexes into a color map • How many colors can a pixel be? • Common on low-end displays (cell phones, PDAs, GameBoys). Framebuffers: Indexed Color. Illustration of how an indexed palette works: a 2-bit indexed-color image. The color of each pixel is represented by a number; each number corresponds to a color in the palette. Image credits: Wikipedia. Framebuffers: Hi-Color • Hi-Color is (was?) a popular PC SVGA standard • Packs pixels into 16 bits: – 5 Red, 6 Green, 5 Blue (why would green get more?) – Sometimes just 5,5,5 • Each pixel can be one of 2^16 colors • Hi-color images can exhibit worse quantization artifacts than a well-mapped 8-bit image. Color Quantization: A process that reduces the number of distinct colors used in an image, with the intention that the new image should be as visually similar as possible to the original image. Image credits: Wikipedia. The Rendering
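The indexed-color and Hi-Color layouts described in the slides above can be illustrated with a short Python sketch. The function names are hypothetical, and the bit order (red in the top 5 bits, green in the middle 6, blue in the low 5) is the common RGB565 convention, assumed here because the slides only give the 5-6-5 split.

```python
def lookup_indexed(palette, indices):
    """Expand an indexed-color image: each stored number selects a palette entry."""
    return [palette[i] for i in indices]


def pack_rgb565(r, g, b):
    """Pack 8-bit R, G, B into 16 bits (5 red, 6 green, 5 blue)."""
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)


def unpack_rgb565(value):
    """Expand a 16-bit pixel back to 8-bit channels by replicating the high bits."""
    r5 = (value >> 11) & 0x1F
    g6 = (value >> 5) & 0x3F
    b5 = value & 0x1F
    return ((r5 << 3) | (r5 >> 2), (g6 << 2) | (g6 >> 4), (b5 << 3) | (b5 >> 2))


if __name__ == "__main__":
    # A 2-bit indexed image: four palette entries, one small number per pixel.
    palette = [(0, 0, 0), (255, 0, 0), (0, 255, 0), (255, 255, 255)]
    print(lookup_indexed(palette, [0, 1, 1, 3, 2]))

    packed = pack_rgb565(200, 100, 50)
    print(hex(packed), unpack_rgb565(packed))
```

Packing drops the low 3 bits of red and blue and the low 2 bits of green, so a round trip through 16 bits generally does not return the exact original color. That loss is the kind of quantization artifact the Hi-Color slide mentions.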

AN5072: Introduction to Embedded Graphics – Application Note

Freescale Semiconductor Document Number: AN5072 Application Note Rev 0, 02/2015 Introduction to Embedded Graphics with Freescale Devices by: Luis Olea and Ioseph Martinez. 1 Introduction: The purpose of this application note is to explain the basic concepts and requirements of embedded graphics applications, helping software and hardware engineers easily understand the fundamental concepts behind color display technology, which is being expected by consumers more and more in varied applications from automotive to industrial markets. This section helps the reader to become familiar with some of the most used concepts when working with graphics. Knowing these definitions is basic for any developer that wants to get started with embedded graphics systems, and these concepts apply to any system that handles graphics irrespective of the hardware used. Contents: 1 Introduction; 1.1 Graphics basic concepts; 2 Freescale graphics devices; 2.1 MPC5606S; 2.2 MPC5645S; 2.3 Vybrid; 2.4 MAC57Dxx; 2.5 Kinetis K70; 2.6 QorIQ LS1021A; 2.7 i.MX family; 2.8 Quick