Characterizing the Function Space for Bayesian Kernel Models

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

-

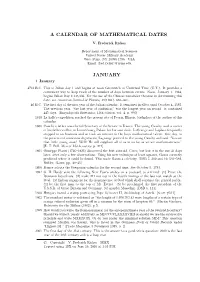

A Calendar of Mathematical Dates January

A CALENDAR OF MATHEMATICAL DATES

V. Frederick Rickey, Department of Mathematical Sciences, United States Military Academy, West Point, NY 10996-1786 USA. Email: fred-rickey@usma.edu

JANUARY

1 January

4713 B.C. This is Julian day 1 and begins at noon Greenwich or Universal Time (U.T.). It provides a convenient way to keep track of the number of days between events. Noon, January 1, 1984, begins Julian Day 2,445,336. For the use of the Chinese remainder theorem in determining this date, see American Journal of Physics, 49 (1981), 658–661.

46 B.C. The first day of the first year of the Julian calendar. It remained in effect until October 4, 1582. The previous year, "the last year of confusion," was the longest year on record: it contained 445 days. [Encyclopedia Britannica, 13th edition, vol. 4, p. 990]

1618 La Salle's expedition reached the present site of Peoria, Illinois, birthplace of the author of this calendar.

1800 Cauchy's father was elected Secretary of the Senate in France. The young Cauchy used a corner of his father's office in Luxembourg Palace for his own desk. Lagrange and Laplace frequently stopped in on business and so took an interest in the boy's mathematical talent. One day, in the presence of numerous dignitaries, Lagrange pointed to the young Cauchy and said "You see that little young man? Well! He will supplant all of us in so far as we are mathematicians." [E. T. Bell, Men of Mathematics, p. 274]

1801 Giuseppe Piazzi (1746–1826) discovered the first asteroid, Ceres, but lost it in the sun 41 days later, after only a few observations.

Front Matter

Cambridge University Press, 978-1-107-19850-0, Toeplitz Matrices and Operators, Nikolaï Nikolski, translated by Danièle Gibbons and Greg Gibbons. Frontmatter.

CAMBRIDGE STUDIES IN ADVANCED MATHEMATICS 182

Editorial Board: B. BOLLOBÁS, W. FULTON, F. KIRWAN, P. SARNAK, B. SIMON, B. TOTARO

TOEPLITZ MATRICES AND OPERATORS

The theory of Toeplitz matrices and operators is a vital part of modern analysis, with applications to moment problems, orthogonal polynomials, approximation theory, integral equations, bounded- and vanishing-mean oscillations, and asymptotic methods for large structured determinants, among others. This friendly introduction to Toeplitz theory covers the classical spectral theory of Toeplitz forms and Wiener–Hopf integral operators and their manifestations throughout modern functional analysis. Numerous solved exercises illustrate the results of the main text and introduce subsidiary topics, including recent developments. Each chapter ends with a survey of the present state of the theory, making this a valuable work for the beginning graduate student and established researcher alike. With biographies of the principal creators of the theory and historical context also woven into the text, this book is a complete source on Toeplitz theory.

Nikolaï Nikolski is Professor Emeritus at the Université de Bordeaux, working primarily in analysis and operator theory. He has been co-editor of four international journals, editor of more than 15 books, and has published numerous articles and research monographs. He has also supervised 26 Ph.D. students, including three Salem Prize winners. Professor Nikolski was elected Fellow of the American Mathematical Society (AMS) in 2013 and received the Prix Ampère of the French Academy of Sciences in 2010.

Heritage and Early History of the Boundary Element Method

Engineering Analysis with Boundary Elements 29 (2005) 268–302, www.elsevier.com/locate/enganabound

Heritage and early history of the boundary element method

Alexander H.-D. Cheng (a, *), Daisy T. Cheng (b)

(a) Department of Civil Engineering, University of Mississippi, University, MS 38677, USA
(b) John D. Williams Library, University of Mississippi, University, MS 38677, USA

Received 10 December 2003; revised 7 December 2004; accepted 8 December 2004. Available online 12 February 2005.

Abstract. This article explores the rich heritage of the boundary element method (BEM) by examining its mathematical foundation from the potential theory, boundary value problems, Green's functions, Green's identities, to Fredholm integral equations. The 18th to 20th century mathematicians, whose contributions were key to the theoretical development, are honored with short biographies. The origin of the numerical implementation of boundary integral equations can be traced to the 1960s, when the electronic computers had become available. The full emergence of the numerical technique known as the boundary element method occurred in the late 1970s. This article reviews the early history of the boundary element method up to the late 1970s. © 2005 Elsevier Ltd. All rights reserved.

Keywords: Boundary element method; Green's functions; Green's identities; Boundary integral equation method; Integral equation; History

1. Introduction

After three decades of development, the boundary element method (BEM) has found a firm footing in the arena of numerical methods for partial differential …

… industrial settings, mesh preparation is the most labor intensive and the most costly portion in numerical modeling, particularly for the FEM [9]. Without the need of dealing with the interior mesh, the BEM is more cost effective in mesh preparation.

Creators of Mathematical and Computational Sciences

Ravi P. Agarwal · Syamal K. Sen

Creators of Mathematical and Computational Sciences

Ravi P. Agarwal, Department of Mathematics, Texas A&M University-Kingsville, Kingsville, TX, USA
Syamal K. Sen, GVP-Prof. V. Lakshmikantham Institute for Advanced Studies, GVP College of Engineering Campus, Visakhapatnam, India

ISBN 978-3-319-10869-8, ISBN 978-3-319-10870-4 (eBook), DOI 10.1007/978-3-319-10870-4
Springer Cham Heidelberg New York Dordrecht London
Library of Congress Control Number: 2014949778
Mathematics Subject Classification (2010): 01A05, 01A32, 01A61, 01A85
© Springer International Publishing Switzerland 2014

This work is subject to copyright. All rights are reserved by the Publisher, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, reuse of illustrations, recitation, broadcasting, reproduction on microfilms or in any other physical way, and transmission or information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed. Exempted from this legal reservation are brief excerpts in connection with reviews or scholarly analysis or material supplied specifically for the purpose of being entered and executed on a computer system, for exclusive use by the purchaser of the work. Duplication of this publication or parts thereof is permitted only under the provisions of the Copyright Law of the Publisher's location, in its current version, and permission for use must always be obtained from Springer. Permissions for use may be obtained through RightsLink at the Copyright Clearance Center.

Fredholm Operators

Fredholm Operators. Alonso Delfín, University of Oregon. October 25, 2018

Abstract. The Fredholm index is an indispensable item in the operator theorist's toolkit and a very simple prototype of the application of algebraic topological methods to analysis. This document contains all the basics one needs to know to work with Fredholm theory, as well as many examples. The actual talk that I gave only dealt with the essentials and I omitted most of the proofs presented here. At the end, I present my solutions to some of the exercises in Murphy's C*-algebras and operator theory that deal with compact operators and Fredholm theory.

A (not so brief) review of compact operators.

Let $X$ be a topological space. Recall that a subset $Y \subseteq X$ is said to be relatively compact if $\overline{Y}$ is compact in $X$. Recall also that a subset $Y \subseteq X$ is said to be totally bounded if $\forall\, \varepsilon > 0$, $\exists\, n \in \mathbb{N}$ and $x_1, \ldots, x_n \in X$ such that

$$Y \subseteq \bigcup_{k=1}^{n} B_\varepsilon(x_k).$$

Definition. A linear map $u : X \to Y$ between Banach spaces $X$ and $Y$ is said to be compact if $u(S)$ is relatively compact in $Y$, where $S := \{x \in X : \|x\| \le 1\} = \overline{B_1(0)}$.

Proposition. Let $(X, d)$ be a complete metric space. Then $Y \subseteq X$ is relatively compact if and only if $Y$ is totally bounded.

Proof. Assume first that $Y$ is relatively compact. Let $\varepsilon > 0$. Then $\{B_\varepsilon(x)\}_{x \in Y}$ is an open cover for $\overline{Y}$. Since $\overline{Y}$ is compact, it follows that there are $n \in \mathbb{N}$, $x_1, \ldots, x_n \in Y$ such that $\{B_\varepsilon(x_k)\}_{k=1}^{n}$ is an open cover for $\overline{Y}$.