Toward Automated Biodiversity Research on the Tropical

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Neuroprosthetic Interfaces – the Reality Behind Bionics and Cyborgs

Gerald E. Loeb NEUROPROSTHETIC INTERFACES – THE REALITY BEHIND BIONICS AND CYBORGS History and Definitions The invisible and seemingly magical powers of electricity were employed as early as the ancient Greeks and Romans, who used shocks from electric fish to treat pain. More technological forms of electricity became a favorite of quack medical practitioners in the Victorian era (McNeal, 1977), an unfortunate tradition that persists to this day. In the 20th century the elucidation of neural signaling and the orderly design of electrophysiological instrumentation provided a theoretical foundation for the real therapeutic advances discussed herein (Hambrecht, 1992). Nevertheless, neural prosthetics continues to be haunted by projects that fall along the spectrum from blind empiricism to outright fraud. That problem has been exacerbated by two historical circumstances. The first was the rejection by »rigorous scientists« of early attempts at clinical treatment that then turned out much better than they had predicted (Sterling et al., 1971). This tended to encourage the empiricists to ignore the science even when it was helpful or necessary. The second was the inevitable fictionalization by the entertainment industry, which invented and adopted terminology and projects in ways that the lay public often did not distinguish from reality. The terms defined below are increasingly encountered in both scientific and lay discussions. I have selected the usages most relevant to this discussion; other usages occur: – Neural Control – The use of electronic interfaces with the nervous system both to study normal function and to repair dysfunction (as used by Dr. -

Application of Artificial Intelligence (AI) in Prosthetic and Orthotic Rehabilitation Smita Nayak and Rajesh Kumar Das

Chapter Application of Artificial Intelligence (AI) in Prosthetic and Orthotic Rehabilitation Smita Nayak and Rajesh Kumar Das Abstract Technological integration of Artificial Intelligence (AI) and machine learning in the Prosthetic and Orthotic industry and in the field of assistive technology has become boon for the Persons with Disabilities. The concept of neural network has been used by the leading manufacturers of rehabilitation aids for simulating various anatomical and biomechanical functions of the lost parts of the human body. The involvement of human interaction with various agents’ i.e. electronic circuitry, software, robotics, etc. has made a revolutionary impact in the rehabilitation field to develop devices like Bionic leg, mind or thought control prosthesis and exoskeletons. Application of Artificial Intelligence and robotics technology has a huge impact in achieving independent mobility and enhances the quality of life in Persons with Disabilities (PwDs). Keywords: artificial neural network, deep learning, brain computer Interface (BCI), electromyography (EMG), electroencephalogram (EEG) 1. Introduction Human is the most intelligent creature in the planet for their brain power and neural network. The human brain is extremely complex with more than 80 billion neurons and trillion of connections [1]. Simulation scales can array from molecular and genetic expressions to compartment models of subcellular volumes and individual neurons to local networks and system models [2]. Deep Neural Network nodes are an over simplification of how brain synapses work. Signal transmission in the brain is dominated by chemical synapses, which release chemical substances and neurotransmitters to convert electrical signals via voltage-gated ion channels at the presynaptic cleft into post-synaptic activity. -

Ecology: Biodiversity and Natural Resources Part 1

CK-12 FOUNDATION Ecology: Biodiversity and Natural Resources Part 1 Akre CK-12 Foundation is a non-profit organization with a mission to reduce the cost of textbook materials for the K-12 market both in the U.S. and worldwide. Using an open-content, web-based collaborative model termed the “FlexBook,” CK-12 intends to pioneer the generation and distribution of high-quality educational content that will serve both as core text as well as provide an adaptive environment for learning. Copyright © 2010 CK-12 Foundation, www.ck12.org Except as otherwise noted, all CK-12 Content (including CK-12 Curriculum Material) is made available to Users in accordance with the Creative Commons Attribution/Non-Commercial/Share Alike 3.0 Unported (CC-by-NC-SA) License (http://creativecommons.org/licenses/by-nc-sa/3.0/), as amended and updated by Creative Commons from time to time (the “CC License”), which is incorporated herein by this reference. Specific details can be found at http://about.ck12.org/terms. Printed: October 11, 2010 Author Barbara Akre Contributor Jean Battinieri Contents 1 Ecology: Biodiversity and Natural Resources Part 1: 1.1 Lesson 18.1: The Biodiversity Crisis; 1.2 Lesson 18.2: Natural Resources. 2 Ecology: Biodiversity and Natural Resources Part I: 2.1 Chapter 18: Ecology and Human Actions; 2.2 Lesson 18.1: The Biodiversity Crisis; 2.3 Lesson 18.2: Natural Resources. Chapter 1 Ecology: Biodiversity and Natural Resources Part 1 1.1 Lesson 18.1: The Biodiversity Crisis Lesson Objectives • Compare humans to other species in terms of resource needs and use, and ecosystem service benefits and effects. -

Defining Ultra Artificial Intelligence (UAI) Implementation Using Bionic (Biological-Like- Electronics) Brain Engineering Insight

MOJ Applied Bionics and Biomechanics Short Communication Open Access Defining ultra artificial intelligence (UAI) implementation using bionic (biological-like- electronics) brain engineering insight Abstract Volume 2 Issue 2 - 2018 BIONICS is a common term for bio-inspired information technology, typically including three types of systems, namely: Sadique Shaikh a. bio-morphic (eg: neuromorphic) and bio-inspired electronic/optical devices, Institute of Management & Sciences (IMS), India b. autonomous artificial sensor-processor-activator prostheses and various devices built Correspondence: Sadique Shaikh, Institute of Management & into the human body, and Sciences (IMS), M.S, India, Email [email protected] c. living-artificial interactive symbioses, e.g. brain-controlled devices or robots. Received: March 12, 2018 | Published: March 26, 2018 In spite of some restrictive use of the term ‘bionics’ in popular culture, as well as the unfulfilled promises in the fields of neural networks, artificial intelligence, soft computing and other ‘oversold’ areas, it was agreed that the name bionics as defined above is the right one for the emergent technology also described as bio-inspired information technology (some people are suggesting info-bionics). There are numerous programs at several funding agencies which are supporting parts of this field under various other names.1 Bionic brain for nanotechnology may go some way to accelerating progress in AI, particularly through the sensor interface.2 For these reasons, the Bionic Brain stand for “Biological\-like-Electronic” Brain with list of main research areas that follows should be regarded as neither Mimic Natural Intelligence, Artificially on Silicon Chip which gives exhaustive nor clear-cut. Indeed, future categorizations will again c similar functioning to Humanoid (Human like Robots) like Biological Brain of human being (Refer my complete paper mentioned in Modelling reference to for depth). -

1 Medical Bionics

1 Medical Bionics The term “bionics” is synonymous with “biomimetics” and in this context refers to the integration of human-engineered devices to take advantage of functional mechanisms/structures resident in Nature. In this book, we refer to the field of bionics, and in particular medical bionics, as that involved with the development of devices that enable the effective integration of biology (Nature) and electronics to achieve a targeted functional outcome. Since the early experiments of Luigi Galvani and Alessandro Volta (see insets), the use of electrical conductors to transmit charge into and out of biological systems to affect biological processes has been the source of great scientific interest. This has inspired many to explore the possible use of electrical stimulation in promoting positive health outcomes. Some of the earliest examples of using electrical stimulation in a controlled manner to achieve specific clinical outcomes were developed by Guillaume-Benjamin-Amand Duchenne (see inset) (Figure 1.1). Duchenne’s interests in physiognomic esthetics of facial expression led to the definition of neural conduction pathways. During this important period in the history of science, Duchenne developed nerve conduction tests using electrical stimulation and performed pioneering studies of the manner in which nerve lesions could be diagnosed and possibly treated. To date, medical bionic devices have been largely targeted toward the primary “excitable cell” systems, muscle, and nerve, whose functions are inherently capable of being modulated by electrical stimulation. There have also been numerous studies of the use of electrical stimulation for bone regrowth and wound healing. The effects of electrical stimulation are thought to be promoted through the induced movement of positive and negative charged ions in opposite directions (polarization) across cells and tissues that activates sensory or motor functions [2]. -

Defining New Goals in Engineering Education at “Politehnica” University of Bucharest

Bionics in Engineering - Defining new Goals in Engineering Education at “Politehnica” University of Bucharest Ralf Neurohr & Cristian Dragomirescu Politehnica University of Bucharest, Splaiul Independentei 313, Bucharest, 060032, ROMANIA, [email protected], [email protected] Abstract - During the past years, bionics, a new discipline, which is dedicated to the transfer of principles of construction, regulation, interaction and organization in biology into innovative technical solutions, is attracting significant interest from various industries. Based on this request for bionic expertise in engineering, the faculty for teaching engineering in foreign languages (FILS) at “Politehnica” University of Bucharest started a course in bionics in SS 2007, which was supported by the expertise of the German “Bionik-Kompetenz-Netz”, one of the leading organizations in bionics. This is the report of the considerations involved in the course concept, the first experiences with the student's acceptance, some conclusions and future perspectives for extending bionics activities at “Politehnica”. In order to avoid any confusion, considering overlapping or mixing up with other bio-disciplines related to technology, the report is starting [...]
[...] and its benefit for modern engineering, but also to avoid some misunderstandings and confusion because of interferences with terms like biotechnology or bioengineering, a short introduction into the fundamentals and principles of bionics, as well as its definition will be presented first. WHAT IS BIONICS? Although the historical roots of bionics can be traced back to the time of Leonardo da Vinci, the Italian middle age genius, or maybe even to earlier times, the definition of bionics as a modern research discipline, was given less than 15 years ago by Neumann and may be translated as: “Bionics is the scientific discipline, which is in charge with the systematic transfer of construction, process and evolution principles of living systems into technical applications”[2]. -

Summary of Bionics Engineering and Its Applications Zhuojuan Yang 1, A

Advances in Computer Science Research (ACSR), volume 76 7th International Conference on Education, Management, Information and Mechanical Engineering (EMIM 2017) Summary of Bionics Engineering and Its Applications Zhuojuan Yang 1, a and Zhanyu Xu 1 Jilin Engineering Normal University, Changchun, China a [email protected] Keywords: Bionics; Bionic engineering; Application Abstract. Expounds the connotation and research method of Bionics Engineering, introduces the animal bionic information, leaf surface wettability, morphology and function of bionic bionic structure in engineering application, and their research progress and application prospect were analyzed and prospects. Introduction In the hundreds of millions of years of evolution and co evolution of life in the process of organisms in the micro and macro morphology, structure and function, energy and material transformation, metabolism and utilization system, movement and behavior, heredity, reproduction, development, regulation, assembly process and mechanism, repair, compensation, immune mechanism, information transmission, processing and behavior control ability and the ability to adapt to the environment and so on, through interaction with the environment itself has been optimized, an important source of the biological world of technological innovation. Bionics and Engineering Bionics Bionics (Bionics, Biomimetics, Biomimicry) is defined in many ways, and there is not yet a fully unified understanding. Steel, an American military surgeon, first proposed the concept of Bionics in 1960 [1-4]. In 1998, the United States published a monograph on the use of bionics bionics Benyus[5] new term (Biomimicry). -

Functional Bionics/Biomimetics

Contents. Forum on the Development of Bionic Engineering: A Research and Innovation Frontier for Bio-inspired Technologies (Shih Chi Liu); Biomimetics – research and innovative development to turn scientific knowledge into beneficial practice (Rainer W. Erb); Biomimetics: Challenges, Development and Opportunities (Ille C. Gebeshuber). Plenaries: Biomimetic superhydrophobic surfaces and their application: Lotus- and Salvinia-Effect (Wilhelm Barthlott); Biomimetics on gecko locomotion: Behaviour, reaction forces and Bio-inspired wall-climbing robots (Zhendong Dai, Zhouyi Wang, Aihong Ji, Xing Qiang, Longbao Han, Jintong Wang, Qiang Wu, Wenhua Gu, Jiurong Sun, Dai -

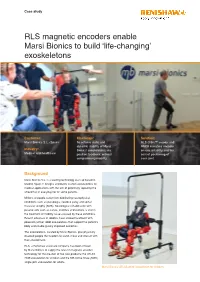

RLS Magnetic Encoders Enable Marsi Bionics to Build ‘Life-Changing’ Exoskeletons

Case study RLS magnetic encoders enable Marsi Bionics to build ‘life-changing’ exoskeletons Customer: Marsi Bionics S.L. (Spain) Industry: Medical and healthcare Challenge: To achieve static and dynamic stability of Marsi Bionics’ exoskeletons, via position feedback, without compromising mobility. Solution: RLS Orbis™ encoder and RM08 miniature encoder ensure reliability and the correct positioning of each joint. Background Marsi Bionics S.L. is a leading technology start-up based in Madrid, Spain. It designs and builds custom exoskeletons for medical applications with the aim of potentially replacing the wheelchair in everyday life for some patients. Millions of people suffer from debilitating neurophysical conditions such as paraplegia, cerebral palsy and spinal muscular atrophy (SMA). Neurological rehabilitation with passive aids such as canes, crutches and walkers is vital in the treatment of mobility issues caused by these conditions. Recent advances in robotics have allowed treatment with powered (active) robot exoskeletons that support the patient’s body and enable greatly improved outcomes. The exoskeletons, created by Marsi Bionics, give physically disabled people the freedom to stand, move and interact with their environment. RLS, a Renishaw associate company, has been chosen by Marsi Bionics to supply the latest in magnetic encoder technology for the creation of two new products: the ATLAS 2030 exoskeleton for children and the MB-Active Knee (MAK) single-joint exoskeleton for adults. Marsi Bionics’ ATLAS 2030 exoskeleton for children The ATLAS 2030 exoskeleton has up to six degrees of freedom per limb. This device enables the user to perform both unaided and self-actuated actions such as walking and sitting. Challenge Alberto Plaza, R&D engineer and manager of the MAK project at Marsi Bionics, describes the stringent encoder requirements of human exoskeletons: -

Cyborgs and Enhancement Technology

philosophies Article Cyborgs and Enhancement Technology Woodrow Barfield 1 and Alexander Williams 2,* 1 Professor Emeritus, University of Washington, Seattle, Washington, DC 98105, USA; [email protected] 2 140 BPW Club Rd., Apt E16, Carrboro, NC 27510, USA * Correspondence: [email protected]; Tel.: +1-919-548-1393 Academic Editor: Jordi Vallverdú Received: 12 October 2016; Accepted: 2 January 2017; Published: 16 January 2017 Abstract: As we move deeper into the twenty-first century there is a major trend to enhance the body with “cyborg technology”. In fact, due to medical necessity, there are currently millions of people worldwide equipped with prosthetic devices to restore lost functions, and there is a growing DIY movement to self-enhance the body to create new senses or to enhance current senses to “beyond normal” levels of performance. From prosthetic limbs, artificial heart pacers and defibrillators, implants creating brain–computer interfaces, cochlear implants, retinal prosthesis, magnets as implants, exoskeletons, and a host of other enhancement technologies, the human body is becoming more mechanical and computational and thus less biological. This trend will continue to accelerate as the body becomes transformed into an information processing technology, which ultimately will challenge one’s sense of identity and what it means to be human. This paper reviews “cyborg enhancement technologies”, with an emphasis placed on technological enhancements to the brain and the creation of new senses—the benefits of which may allow information to be directly implanted into the brain, memories to be edited, wireless brain-to-brain (i.e., thought-to-thought) communication, and a broad range of sensory information to be explored and experienced. -

Glossary of the Key Notions in Bionics and Beyond

Glossary of the key notions in Bionics and beyond Publication date PPKE ITK, Budapest, 2011 Copyright © 2011 Pázmány Péter Catholic University Created by XMLmind XSL-FO Converter. Table of Contents: A. Glossary of the key notions in Bionics and beyond; Preface; 1. Resources; 2. Glossary (1. 1; 2. 2; 3. 6; 4. A; 5. B -

Robots and Biological Systems: Towards a New Bionics?

Robots and Biological Systems: Towards a New Bionics? NATO ASI Series Advanced Science Institutes Series A series presenting the results of activities sponsored by the NATO Science Committee, which aims at the dissemination of advanced scientific and technological knowledge, with a view to strengthening links between scientific communities. The Series is published by an international board of publishers in conjunction with the NATO Scientific Affairs Division. A Life Sciences, B Physics: Plenum Publishing Corporation, London and New York. C Mathematical and Physical Sciences, D Behavioural and Social Sciences, E Applied Sciences: Kluwer Academic Publishers, Dordrecht, Boston and London. F Computer and Systems Sciences, G Ecological Sciences, H Cell Biology, I Global Environmental Change: Springer-Verlag, Berlin Heidelberg New York London Paris Tokyo Hong Kong Barcelona Budapest. NATO-PCO DATABASE The electronic index to the NATO ASI Series provides full bibliographical references (with keywords and/or abstracts) to more than 30000 contributions from international scientists published in all sections of the NATO ASI Series. Access to the NATO-PCO DATABASE compiled by the NATO Publication Coordination Office is possible in two ways: - via online FILE 128 (NATO-PCO DATABASE) hosted by ESRIN, Via Galileo Galilei, I-00044 Frascati, Italy. - via CD-ROM "NATO-PCO DATABASE" with user-friendly retrieval software in English, French and German (© WTV GmbH and DATAWARE Technologies Inc. 1989). Series F: Computer and Systems Sciences Vol. 102 Robots and Biological Systems: Towards a New Bionics? Edited by Paolo Dario ARTS Lab, Scuola Superiore S. Anna 56127 Pisa, Italy Giulio Sandini DIST, Universita degli Studi di Genova 16145 Genova, Italy Patrick Aebischer Division de Recherche chirurgicale, Pav.