Game Scoring: Towards a Broader Theory

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Name Region System Mapper Savestate Powerpak

Name Region System Mapper Savestate Powerpak Savestate '89 Dennou Kyuusei Uranai Japan Famicom 1 YES Supported YES 10-Yard Fight Japan Famicom 0 YES Supported YES 10-Yard Fight USA NES-NTSC 0 YES Supported YES 1942 Japan Famicom 0 YES Supported YES 1942 USA NES-NTSC 0 YES Supported YES 1943: The Battle of Midway USA NES-NTSC 2 YES Supported YES 1943: The Battle of Valhalla Japan Famicom 2 YES Supported YES 2010 Street Fighter Japan Famicom 4 YES Supported YES 3-D Battles of Worldrunner, The USA NES-NTSC 2 YES Supported YES 4-nin Uchi Mahjong Japan Famicom 0 YES Supported YES 6 in 1 USA NES-NTSC 41 NO Supported NO 720° USA NES-NTSC 1 YES Supported YES 8 Eyes Japan Famicom 4 YES Supported YES 8 Eyes USA NES-NTSC 4 YES Supported YES A la poursuite de l'Octobre Rouge France NES-PAL-B 4 YES Supported YES ASO: Armored Scrum Object Japan Famicom 3 YES Supported YES Aa Yakyuu Jinsei Icchokusen Japan Famicom 4 YES Supported YES Abadox: The Deadly Inner War USA NES-NTSC 1 YES Supported YES Action 52 USA NES-NTSC 228 NO Supported NO Action in New York UK NES-PAL-A 1 YES Supported YES Adan y Eva Spain NES-PAL 3 YES Supported YES Addams Family, The USA NES-NTSC 1 YES Supported YES Addams Family, The France NES-PAL-B 1 YES Supported YES Addams Family, The Scandinavia NES-PAL-B 1 YES Supported YES Addams Family, The Spain NES-PAL-B 1 YES Supported YES Addams Family, The: Pugsley's Scavenger Hunt USA NES-NTSC 1 YES Supported YES Addams Family, The: Pugsley's Scavenger Hunt UK NES-PAL-A 1 YES Supported YES Addams Family, The: Pugsley's Scavenger Hunt -

The Resurrection of Permadeath: an Analysis of the Sustainability of Permadeath Use in Video Games

The Resurrection of Permadeath: An analysis of the sustainability of Permadeath use in Video Games. Hugh Ruddy A research paper submitted to the University of Dublin, in partial fulfilment of the requirements for the degree of Master of Science Interactive Digital Media 2014 Declaration I declare that the work described in this research paper is, except where otherwise stated, entirely my own work and has not been submitted as an exercise for a degree at this or any other university. Signed: ___________________ Hugh Ruddy 28th February 2014 Permission to lend and/or copy I agree that Trinity College Library may lend or copy this research Paper upon request. Signed: ___________________ Hugh Ruddy 28th February 2014 Abstract The purpose of this research paper is to study the the past, present and future use of Permadeath in video games. The emergence of Permadeath games in recent months has exposed the mainstream gaming population to the concept of the permanent death of the game avatar, a notion that has been vehemently avoided by game developers in the past. The paper discusses the many incarnations of Permadeath that have been implemented since the dawn of video games, and uses examples to illustrate how gamers are crying out for games to challenge them in a unique way. The aims of this are to highlight the potential that Permadeath has in the gaming world to become a genre by itself, as well as to give insights into the ways in which gamers play Permadeath games at the present. To carry out this research, the paper examines the motivation players have to play games from a theoretical standpoint, and investigates how the possibilty of failure in video games should not be something gamers stay away from. -

UPC Platform Publisher Title Price Available 730865001347

UPC Platform Publisher Title Price Available 730865001347 PlayStation 3 Atlus 3D Dot Game Heroes PS3 $16.00 52 722674110402 PlayStation 3 Namco Bandai Ace Combat: Assault Horizon PS3 $21.00 2 Other 853490002678 PlayStation 3 Air Conflicts: Secret Wars PS3 $14.00 37 Publishers 014633098587 PlayStation 3 Electronic Arts Alice: Madness Returns PS3 $16.50 60 Aliens Colonial Marines 010086690682 PlayStation 3 Sega $47.50 100+ (Portuguese) PS3 Aliens Colonial Marines (Spanish) 010086690675 PlayStation 3 Sega $47.50 100+ PS3 Aliens Colonial Marines Collector's 010086690637 PlayStation 3 Sega $76.00 9 Edition PS3 010086690170 PlayStation 3 Sega Aliens Colonial Marines PS3 $50.00 92 010086690194 PlayStation 3 Sega Alpha Protocol PS3 $14.00 14 047875843479 PlayStation 3 Activision Amazing Spider-Man PS3 $39.00 100+ 010086690545 PlayStation 3 Sega Anarchy Reigns PS3 $24.00 100+ 722674110525 PlayStation 3 Namco Bandai Armored Core V PS3 $23.00 100+ 014633157147 PlayStation 3 Electronic Arts Army of Two: The 40th Day PS3 $16.00 61 008888345343 PlayStation 3 Ubisoft Assassin's Creed II PS3 $15.00 100+ Assassin's Creed III Limited Edition 008888397717 PlayStation 3 Ubisoft $116.00 4 PS3 008888347231 PlayStation 3 Ubisoft Assassin's Creed III PS3 $47.50 100+ 008888343394 PlayStation 3 Ubisoft Assassin's Creed PS3 $14.00 100+ 008888346258 PlayStation 3 Ubisoft Assassin's Creed: Brotherhood PS3 $16.00 100+ 008888356844 PlayStation 3 Ubisoft Assassin's Creed: Revelations PS3 $22.50 100+ 013388340446 PlayStation 3 Capcom Asura's Wrath PS3 $16.00 55 008888345435 -

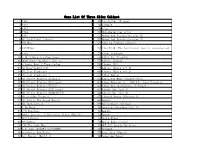

2475 in 1 Game List

Game List Of Three Sides Cabinet 1 1941 47 Air Gallet (Taiwan) 2 1942 48 Airwolf 3 1943 49 Ajax 4 1944 50 Akkanbeder(ver 2.5j) 5 1945 51 Akuma-Jou Dracula(version N) 6 10 Yard Fight (Japan) 52 Akuma-Jou Dracula(version P) 7 1943kai 53 Ales no Tsubasa (Japan) 8 1945Plus 54 Alex Kidd: The Lost Stars (set 2, unprotected) 9 19xx 55 Alien Syndrome 10 2 On 2 Open Ice Challenge 56 Alien vs. Predator 11 2020 Super Baseball (set 1) 57 Aliens (Japan) 12 3 Count Bout / Fire Suplex 58 Aliens (US) 13 3D_Beastorizer(US) 59 Aliens (World set 1) 14 3D_Star Gladiator 60 Aliens (World set 2) 15 3D_Star Gladiator 2 61 Alley Master 16 3D_Street Fighter EX(Asia) 62 Alligator Hunt (unprotected) 17 3D_Street Fighter EX(Japan) 63 Alpha Mission II / ASO II - Last Guardian 18 3D_Street Fighter EX(US) 64 Alpha One (prototype, 3 lives) 19 3D_Street Fighter EX2(Japan) 65 Alpine Ski (set 1) 20 3D_Street Fighter EX2PLUS(US) 66 Alpine Ski (set 2) 21 3D_Strider Hiryu 2 67 Altered Beast (Version 1) 22 3D_Tetris The Grand Master 68 Ambush 23 3D_Toshinden 2 69 Ameisenbaer (German) 24 4 En Raya 70 American Speedway (set 1) 25 4-D Warriors 71 Amidar 26 64th. Street - A Detective Story (World) 72 Amigo 27 800 Fathoms 73 Andro Dunos 28 88 Games! 74 Angel Kids (Japan) 29 '99 The Last War 75 APB-All Points Bulletin 30 A.B. Cop (FD1094 317-0169b) 76 Appoooh 31 Acrobatic Dog_Fight 77 Aqua Jack (World) 32 Act-Fancer (World 1) 78 Aquarium(Japan) 33 Act-Fancer (World 2) 79 Arabian Act-Fancer Cybernetick Hyper Weapon (Japan 34 80 Arabian (Atari) revision 1) 35 Action Fighter 81 Arabian -

Liste Des Jeux Nintendo NES Chase Bubble Bobble Part 2

Liste des jeux Nintendo NES Chase Bubble Bobble Part 2 Cabal International Cricket Color a Dinosaur Wayne's World Bandai Golf : Challenge Pebble Beach Nintendo World Championships 1990 Lode Runner Tecmo Cup : Football Game Teenage Mutant Ninja Turtles : Tournament Fighters Tecmo Bowl The Adventures of Rocky and Bullwinkle and Friends Metal Storm Cowboy Kid Archon - The Light And The Dark The Legend of Kage Championship Pool Remote Control Freedom Force Predator Town & Country Surf Designs : Thrilla's Surfari Kings of the Beach : Professional Beach Volleyball Ghoul School KickMaster Bad Dudes Dragon Ball : Le Secret du Dragon Cyber Stadium Series : Base Wars Urban Champion Dragon Warrior IV Bomberman King's Quest V The Three Stooges Bases Loaded 2: Second Season Overlord Rad Racer II The Bugs Bunny Birthday Blowout Joe & Mac Pro Sport Hockey Kid Niki : Radical Ninja Adventure Island II Soccer NFL Track & Field Star Voyager Teenage Mutant Ninja Turtles II : The Arcade Game Stack-Up Mappy-Land Gauntlet Silver Surfer Cybernoid - The Fighting Machine Wacky Races Circus Caper Code Name : Viper F-117A : Stealth Fighter Flintstones - The Surprise At Dinosaur Peak, The Back To The Future Dick Tracy Magic Johnson's Fast Break Tombs & Treasure Dynablaster Ultima : Quest of the Avatar Renegade Super Cars Videomation Super Spike V'Ball + Nintendo World Cup Dungeon Magic : Sword of the Elements Ultima : Exodus Baseball Stars II The Great Waldo Search Rollerball Dash Galaxy In The Alien Asylum Power Punch II Family Feud Magician Destination Earthstar Captain America and the Avengers Cyberball Karnov Amagon Widget Shooting Range Roger Clemens' MVP Baseball Bill Elliott's NASCAR Challenge Garry Kitchen's BattleTank Al Unser Jr. -

In This Day of 3D Graphics, What Lets a Game Like ADOM Not Only Survive

Ross Hensley STS 145 Case Study 3-18-02 Ancient Domains of Mystery and Rougelike Games The epic quest begins in the city of Terinyo. A Quake 3 deathmatch might begin with a player materializing in a complex, graphically intense 3D environment, grabbing a few powerups and weapons, and fragging another player with a shotgun. Instantly blown up by a rocket launcher, he quickly respawns. Elapsed time: 30 seconds. By contrast, a player’s first foray into the ASCII-illustrated world of Ancient Domains of Mystery (ADOM) would last a bit longer—but probably not by very much. After a complex process of character creation, the intrepid adventurer hesitantly ventures into a dark cave—only to walk into a fireball trap, killing her. But a perished ADOM character, represented by an “@” symbol, does not fare as well as one in Quake: Once killed, past saved games are erased. Instead, she joins what is no doubt a rapidly growing graveyard of failed characters. In a day when most games feature high-quality 3D graphics, intricate storylines, or both, how do games like ADOM not only survive but thrive, supporting a large and active community of fans? How can a game design seemingly premised on frustrating players through continual failure prove so successful—and so addictive? 2 The Development of the Roguelike Sub-Genre ADOM is a recent—and especially popular—example of a sub-genre of Role Playing Games (RPGs). Games of this sort are typically called “Roguelike,” after the founding game of the sub-genre, Rogue. Inspired by text adventure games like Adventure, two students at UC Santa Cruz, Michael Toy and Glenn Whichman, decided to create a graphical dungeon-delving adventure, using ASCII characters to illustrate the dungeon environments. -

Permadeath in Dayz

Fear, Loss and Meaningful Play: Permadeath in DayZ Marcus Carter, Digital Cultures Research Group, The University of Sydney; Fraser Allison, Microsoft Research Centre for Social NUI, The University of Melbourne Abstract This article interrogates player experiences with permadeath in the massively multiplayer online first-person shooter DayZ. Through analysing the differences between ‘good’ and ‘bad’ instances of permadeath, we argue that meaningfulness – in accordance with Salen & Zimmerman’s (2003) concept of meaningful play – is a critical requirement for positive experiences with permadeath. In doing so, this article suggests new ontologies for meaningfulness in play, and demonstrates how meaningfulness can be a useful lens through which to understand player experiences with negatively valanced play. We conclude by relating the appeal of permadeath to the excitation transfer effect (Zillmann 1971), drawing parallels between the appeal of DayZ and fear-inducing horror games such as Silent Hill and gratuitously violent and gory games such as Mortal Kombat. Keywords DayZ, virtual worlds, meaningful play, player experience, excitation transfer, risk play Introduction It's truly frightening, like not game-frightening, but oh my god I'm gonna die-frightening. Your hands starts shaking, your hands gets sweaty, your heart pounds, your mind is racing and you're a wreck when it's all over. There are very few games that – by default – feature permadeath as significantly and totally as DayZ (Bohemia Interactive 2013). A new character in this massively multiplayer online first- person shooter (MMOFPS) begins with almost nothing, and must constantly scavenge from the harsh zombie-infested virtual world to survive. A persistent emotional tension accompanies the requirement to constantly find food and water, and a player will celebrate the discovery of simple items like backpacks, guns and medical supplies. -

Gamer Symphony Orchestra Spring 2010

About the Gamer Symphony Orchestra The University of Maryland’s In the fall of 2005, Michelle Eng decided she wanted to be in Gamer Symphony Orchestra an orchestral group that played video game music. With four others http://umd.gamersymphony.org/ from the University of Maryland Repertoire Orchestra, she founded GSO to achieve that dream. By the time of the ensemble’s first public performance in the spring of 2006, its size had quadrupled. Today, GSO provides a musical and social outlet to over 100 members. It is the world’s first college-level ensemble solely dedicated to video game music as an emerging art form. Aside from its concerts, the orchestra also runs “Deathmatch for Charity,” a video game tournament in the spring. All proceeds benefit Children’s National Medical Center in Washington, D.C., via the “Child’s Play” charity (www.childsplay.org). --------------------------------------------------------------------------------- We love getting feedback from our fans! Please feel free to fill out this form and drop it in the “Question Block” on your way out, or e-mail us at [email protected]. If you need more room, use the space provided on the back of this page. Spring 2010 How did you hear about the Gamer Symphony Orchestra? University of Maryland Memorial Chapel Saturday, Dec. 12, 3 p.m. What arrangements would you like to hear from GSO? Other Conductors comments? Anna Costello Kira Levitzky Peter Fontana (Choral) Please write down your e-mail address if you would like to receive messages about future GSO concerts and events. Level Select Oh-Buta Mask Original Composer: Shogo Sakai Mother 3 (2006) Arranger: Christopher Lee and Description: Oh, Buta-Mask, "Buta" meaning "Pig" in Japanese, is composed of two battle themes from Mother 3. -

![[Japan] SALA GIOCHI ARCADE 1000 Miglia](https://docslib.b-cdn.net/cover/3367/japan-sala-giochi-arcade-1000-miglia-393367.webp)

[Japan] SALA GIOCHI ARCADE 1000 Miglia

SCHEDA NEW PLATINUM PI4 EDITION La seguente lista elenca la maggior parte dei titoli emulati dalla scheda NEW PLATINUM Pi4 (20.000). - I giochi per computer (Amiga, Commodore, Pc, etc) richiedono una tastiera per computer e talvolta un mouse USB da collegare alla console (in quanto tali sistemi funzionavano con mouse e tastiera). - I giochi che richiedono spinner (es. Arkanoid), volanti (giochi di corse), pistole (es. Duck Hunt) potrebbero non essere controllabili con joystick, ma richiedono periferiche ad hoc, al momento non configurabili. - I giochi che richiedono controller analogici (Playstation, Nintendo 64, etc etc) potrebbero non essere controllabili con plance a levetta singola, ma richiedono, appunto, un joypad con analogici (venduto separatamente). - Questo elenco è relativo alla scheda NEW PLATINUM EDITION basata su Raspberry Pi4. - Gli emulatori di sistemi 3D (Playstation, Nintendo64, Dreamcast) e PC (Amiga, Commodore) sono presenti SOLO nella NEW PLATINUM Pi4 e non sulle versioni Pi3 Plus e Gold. - Gli emulatori Atomiswave, Sega Naomi (Virtua Tennis, Virtua Striker, etc.) sono presenti SOLO nelle schede Pi4. - La versione PLUS Pi3B+ emula solo 550 titoli ARCADE, generati casualmente al momento dell'acquisto e non modificabile. Ultimo aggiornamento 2 Settembre 2020 NOME GIOCO EMULATORE 005 SALA GIOCHI ARCADE 1 On 1 Government [Japan] SALA GIOCHI ARCADE 1000 Miglia: Great 1000 Miles Rally SALA GIOCHI ARCADE 10-Yard Fight SALA GIOCHI ARCADE 18 Holes Pro Golf SALA GIOCHI ARCADE 1941: Counter Attack SALA GIOCHI ARCADE 1942 SALA GIOCHI ARCADE 1943 Kai: Midway Kaisen SALA GIOCHI ARCADE 1943: The Battle of Midway [Europe] SALA GIOCHI ARCADE 1944 : The Loop Master [USA] SALA GIOCHI ARCADE 1945k III SALA GIOCHI ARCADE 19XX : The War Against Destiny [USA] SALA GIOCHI ARCADE 2 On 2 Open Ice Challenge SALA GIOCHI ARCADE 4-D Warriors SALA GIOCHI ARCADE 64th. -

Ogre Battle Review

Eric Liao STS 145 February 2 1,2001 Ogre Battle Review This review is of the cult Super Nintendo hit Ogre Battle. In my eyes, it achieved cult hit status because of its appeal to hard-core garners as well as its limited release (only 25,000 US copies!), despite being extraordinarily successful in Japan. Its innovative gameplay has not been emulated by any other games to date (besides sequels). As a monument to Ogre Battle’s amazing replayability, I still play the game today on an SNES emulator. General information: Full name: Ogre Battle Saga, Episode 5: The March of the Black Queen Publisher: Atlus Developer: Quest Copyright Date: 1993 Genre: real time strategy with RPG elements Platform: Originally released for SNES, ported to Sega Saturn and Sony Playstation, with sequels for SNES, N64, NeoGeo Pocket Color, Sony Playstation # Players: 1 player game Storyline: This game hasa tremendously deep storyline for a console game, rivaling most RPGs. When I say deep, it is not in the traditional interactive fiction sense. There are no cutscenes in the game, and characters do not talk to each other. Rather, what makes the game deep is not the plot, but the completely branching structure of the plot. The story itself is not extremely original: The gametakes place thousands of years after a huge battle, called “The Ogre Battle,” was fought. This war was between mankind and demonkind, with the winner to rule the world. Mankind won and sealed the demons in the underworld. However, an evil wizard created an artifact called “The Black Diamond” that could destroy this seal. -

Retro Gaming News

CONTENTS SPACE HARRIER 4 ZERO WING 30 WEWANA:PLAY APP 11 MEET THE TEAM 33 CONTACT SAM CRUISE 17 CHASE HQ 34 JASON MACKENZIE 20 MARBLE MADNESS 37 TRGN AWARD 25 MILLENNIUM 2.2 39 EMULATOR REVIEW 26 FRIDAY THE 13TH 42 MAIL BAG 29 FIST 2 48 The Retro Games News © Shinobisoft Publishing Founder Phil Wheatley Editorial: Welcome to another month of retro gaming goodness. Along with the usual classic game reviews such as Space Harrier, Contact Sam Cruise and Friday 13th, we also have a couple of unique interviews. The first of which is from a guy called Deepak Pathak who is one of the partners behind the WeWana:Play App which allows you to schedule gaming sessions with your friends. A second is with Jason ‘Kenz’ Mackenzie who is well known in the retro gaming community. We also have some new sections such as the Mail Bag and the TRGN Award. Please feel free to email your letters to the email above. Thanks as ever to everybody who contributed such as the writers, our proof reader and Logo artist. Happy Retro Gaming – Phil Wheatley © The Retro Games News Page 2 elisoftware.com © The Retro Games News Page 3 SPACE HARRIER Essentials Welcome to the fantasy zone. Get Ready! The first ever samples I heard on my Atari ST. Yet another fantastic what was the forefront hit from Sega, Space of 3D games. WOW! My friend and I Harrier came to the were in awe. arcades back in 1985. Other systems too Computers can now This game, along with were at the time talk at you as the low Sega's other popular beginning to develop quality distorted titles back then, Super 3D games Sega were sounds came at us. -

Living Faith Lutheran Church Presents

Living Faith Lutheran Church Presents THE WASHINGTON METROPOLITAN GAMER SYMPHONY ORCHESTRA Nigel Horne, Music Director Living Faith Lutheran Church Rockville, Md. April 26, 2014, 2 p.m. WMGSO.org | @MetroGSO | Facebook.com/WMGSO [Classical Music. Game On.] Concert Program Gusty Garden Galaxy Mahito Yokota Super Mario Galaxy (2007) arr. Robert Garner Twilight Princess Toru Minegishi, Asuka Ota, The Legend of Zelda: Koji Kondo Twilight Princess (2006) arr. Robert Garner, Katie Noble Dancing Mad Nobuo Uematsu Final Fantasy VI (1994) arr. Alexander Ryan Objection! Masakazu Sugimori, Phoenix Wright: Ace Naoto Tanaka Attorney (2001) arr. Alexander Ryan Kid Icarus Hirokazu Tanaka Kid Icarus (1987) arr. Alyssa Menes Dämmerung Yasunori Mitsuda Xenosaga Episode I: Der Wille arr. Chris Apple zur Macht (2003) Castles Koji Kondo, David Wise Super Mario World (1990), The arr. Chris Apple Legend of Zelda: Link to the Past (1991), Donkey Kong Country 2 (1995), Super Mario Bros. (1985) Pokémedley Junichi Masuda, Pokémon: The Animated Series John Siegler, Go Ichinose (1998), Pokémon: Red/Blue arr. Chris Lee, Robert Garner, (1998), Pokémon: White/Black Doug Eber (2011) Roster Flute Flugelhorn Tenor Voice Jessie Biele Robert Garner Darin Brown Jessica Robertson Sheldon Zamora-Soon Trombone Oboe Eric Fagan Bass Voice David Shapiro Steve O’Brien Alexander Booth Matthew Harker Clarinet Concert Percussion Alisha Bhore Joshua Rappaport Violin Jessica Elmore Zara Simpson Victoria Chang Lee Stearns Matthew Costales Bass Clarinet Van Stottlemyer Lauren Kologe Yannick