D2.4 Weblog Spider Prototype and Associated Methodology

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

CHAPTER 12 Making Your Web Site Mashable

CHAPTER 12: Making Your Web Site Mashable. This chapter is a guide to content producers who want to make their web sites friendly to mashups. That is, this chapter answers the question, how would you as a content producer make your digital content most effectively remixable and mashable to users and developers? Most of this book is addressed to creators of mashups who are therefore consumers of data and services. Why then should I shift in this chapter to addressing producers of data and services? Well, you have already seen aspects of APIs and web content that make it either easier or harder to remix, and you’ve seen what makes APIs easy and enjoyable to use. Showing content and data producers what would make life easier for consumers of their content provides useful guidance to service providers who might not be fully aware of what it’s like for consumers. The main audience for the book, as consumers (as opposed to producers) of services, should still find this chapter a helpful distillation of best practices for creating mashups. In some ways, this chapter is a review of Chapters 1–11 and a preview of Chapters 13–19. Chapters 1–11 prepared you for how to create mashups in general. I presented a lot of the technologies and showed how to build a reasonably sophisticated mashup with PHP and JavaScript as well as using mashup tools. Some of the discussion in this chapter will be amplified by in-depth discussions in Chapters 13–19. For example, I’ll refer to topics such as geoRSS, iCalendar, and microformats that I discuss in greater detail in those later chapters.

Practical Ontologies: Requirements, Design and Applications to Semantic Integration and Knowledge Discovery

Practical Ontologies: Requirements, Design and Applications to Semantic Integration and Knowledge Discovery, by Daniela Rosu. A thesis submitted in conformity with the requirements for the degree of Doctor of Philosophy, Graduate Department of Computer Science, University of Toronto. © Copyright by Daniela Rosu 2013. Abstract: Due to their role in describing the semantics of information, knowledge representations, from formal ontologies to informal representations such as folksonomies, are becoming increasingly important in facilitating the exchange of information, as well as the semantic integration of information systems and knowledge discovery in a large number of areas, from e-commerce to bioinformatics. In this thesis we present several studies related to the development and application of knowledge representations, in the form of practical ontologies. In Chapter 2, we examine representational challenges and requirements for describing practical domain knowledge. We present representational requirements we collected by surveying current and potential ontology users, discuss current approaches to codifying knowledge and give formal representation solutions to some of the issues we identified. In Chapter 3 we introduce a practical ontology for representing data exchanges, discuss its relationship with existing standards and its role in facilitating the interoperability between information producing and consuming services. We also consider the problem of assessing similarity between ontological concepts and propose three novel measures of similarity, detailed in Chapter 4. Two of our proposals estimate semantic similarity between concepts in the same ontology, while the third measures similarity between concepts belonging to different ontologies.

Copyright 2009 Cengage Learning. All Rights Reserved. May Not Be Copied, Scanned, Or Duplicated, in Whole Or in Part

Index. Note: Page numbers referencing figures are italicized and followed by an “f”. Page numbers referencing tables are italicized and followed by a “t”. A: About.com, 350; AboutUs.org, 186, 190–192; Access application, 349; account managers, 37–38; ActionScript, 353–356; Adobe Flash (application, 340–341; file format, see .flv file format; player, 150, 153, 156); Adobe GoLive, 343; Adobe Photoshop, 339–340; Advanced Research Projects Agency (ARPA), 2; advertising (dating sites, 106; defined, 397; e-commerce, 316; Facebook, 94–96; family and lifestyle sites, 109; media, 373–376; message, 371–372); Ajax, 353; Alexa.com, 42, 78; Alta Vista, 7; Amazon.com, 7f, 14f, 48, 247, 248f–249f, 319–320, 322; anonymity, 16; AOL, 8f, 14f, 77, 79f, 416; Apple iTunes, 13f–14f; Apple site, 11f, 284f; applets, Java, 352, 356; applications, see names of specific applications; ARPA (Advanced Research Projects Agency), 2; artistic fonts, 237; ASCO Power University, 168–170; .asf file format, 154t–155t; AskPatty.com, 206–209; AuctionWeb, 7f; audience, capturing and retaining, 61–62, 166, 263, 405–407, 410–422, 432; bankruptcy, 4, 9f; banner advertising, 7f, 316, 368; Barack Obama’s online store, 328f; BaseballNooz.com, 98–100; BBC News, 3; Bebo, 89t; behavioral changes, 16; benign disinhibition, 16; Best Dates Now blog, 123–125; billboard advertising, 369; bitmaps, 290, 292, 340, 357; BJ’s site, 318; Black Friday, 48; blog communities, 8f; blog editors, 120, 142; blog search engines, 126; Blogger, 344–347; blogging, 7f, 77–78, 86, 122–129, 133–141, 190, 415; blogosphere, 122, 142; blogrolls, 121, 142; Blue Nile site,

Life Sciences and the Web: a New Era for Collaboration

Life Sciences and the web: a new era for collaboration. The Harvard community has made this article openly available. Please share how this access benefits you. Your story matters. Citation: Sagotsky, Jonathan A., Le Zhang, Zhihui Wang, Sean Martin, and Thomas S. Deisboeck. 2008. Life Sciences and the web: a new era for collaboration. Molecular Systems Biology 4: 201. Published Version: doi:10.1038/msb.2008.39. Citable link: http://nrs.harvard.edu/urn-3:HUL.InstRepos:4874800. Terms of Use: This article was downloaded from Harvard University’s DASH repository, and is made available under the terms and conditions applicable to Other Posted Material, as set forth at http://nrs.harvard.edu/urn-3:HUL.InstRepos:dash.current.terms-of-use#LAA. Molecular Systems Biology 4; Article number 201. © 2008 EMBO and Nature Publishing Group. All rights reserved 1744-4292/08. www.molecularsystemsbiology.com. PERSPECTIVE. Authors: Jonathan A Sagotsky [1], Le Zhang [1], Zhihui Wang [1], Sean Martin [2] and Thomas S Deisboeck [1,*]. [1] Complex Biosystems Modeling Laboratory, Harvard-MIT (HST) Athinoula A Martinos Center for Biomedical Imaging, Massachusetts General Hospital, Charlestown, MA, USA; [2] Cambridge Semantics Inc., Cambridge, MA, USA; [*] Corresponding author. Excerpt from the article text: [...] inaccuracies per article compared to Encyclopedia Britannica’s 2.92 errors. Although measures have been taken to improve the editorial process, accuracy and completeness remain valid concerns. Perhaps the issue is one in which it has become difficult to establish exactly what has actually been peer reviewed and what has not, given that the low cost of digital publishing on the web has led to an explosive amount [...]

Liferay Portal 6 Enterprise Intranets

Liferay Portal 6 Enterprise Intranets Build and maintain impressive corporate intranets with Liferay Jonas X. Yuan BIRMINGHAM - MUMBAI Liferay Portal 6 Enterprise Intranets Copyright © 2010 Packt Publishing All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, without the prior written permission of the publisher, except in the case of brief quotations embedded in critical articles or reviews. Every effort has been made in the preparation of this book to ensure the accuracy of the information presented. However, the information contained in this book is sold without warranty, either express or implied. Neither the author, nor Packt Publishing, and its dealers and distributors will be held liable for any damages caused or alleged to be caused directly or indirectly by this book. Packt Publishing has endeavored to provide trademark information about all of the companies and products mentioned in this book by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy of this information. First published: April 2010 Production Reference: 1230410 Published by Packt Publishing Ltd. 32 Lincoln Road Olton Birmingham, B27 6PA, UK. ISBN 978-1-849510-38-7 www.packtpub.com Cover Image by Karl Swedberg ([email protected]) Credits Author Editorial Team Leader Jonas X. Yuan Aanchal Kumar Reviewer Project Team Leader Amine Bousta Lata Basantani Acquisition Editor Project Coordinator Dilip Venkatesh Shubhanjan Chatterjee Development Editor Proofreaders Mehul Shetty Aaron Nash Lesley Harrison Technical Editors Aditya Belpathak Graphics Alfred John Geetanjali Sawant Charumathi Sankaran Nilesh Mohite Copy Editors Production Coordinators Leonard D'Silva Avinish Kumar Sanchari Mukherjee Aparna Bhagat Nilesh Mohite Indexers Hemangini Bari Cover Work Rekha Nair Aparna Bhagat About the Author Dr.

Address Munging: the Practice of Disguising, Or Munging, an E-Mail Address to Prevent It Being Automatically Collected and Used

Address Munging: the practice of disguising, or munging, an e-mail address to prevent it being automatically collected and used as a target for people and organizations that send unsolicited bulk e-mail. Adware: adware, or advertising-supported software, is any software package which automatically plays, displays, or downloads advertising material to a computer after the software is installed on it or while the application is being used. Some types of adware are also spyware and can be classified as privacy-invasive software. Adware is software designed to force pre-chosen ads to display on your system. Some adware is designed to be malicious and will pop up ads with such speed and frequency that they seem to be taking over everything, slowing down your system and tying up all of your system resources. When adware is coupled with spyware, it can be a frustrating ride, to say the least. Backdoor: a backdoor in a computer system (or cryptosystem or algorithm) is a method of bypassing normal authentication, securing remote access to a computer, obtaining access to plaintext, and so on, while attempting to remain undetected. The backdoor may take the form of an installed program (e.g., Back Orifice), or could be a modification to an existing program or hardware device. A back door is a point of entry that circumvents normal security and can be used by a cracker to access a network or computer system. Usually back doors are created by system developers as shortcuts to speed access through security during the development stage and then are overlooked and never properly removed during final implementation.
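The address munging entry above can be illustrated with a small Python sketch. The bracketed " [at] " and " [dot] " substitutions and the function names `munge` and `unmunge` are assumptions chosen for illustration; the glossary does not prescribe any particular disguise scheme.

```python
def munge(address: str) -> str:
    """Disguise an e-mail address so that naive harvesters do not match it."""
    # Hypothetical scheme: spell out the two characters harvesters look for.
    return address.replace("@", " [at] ").replace(".", " [dot] ")


def unmunge(text: str) -> str:
    """Reverse munge() so a person (or a trusted tool) can recover the address."""
    return text.replace(" [at] ", "@").replace(" [dot] ", ".")


if __name__ == "__main__":
    print(munge("user@example.com"))
```

A scheme this simple only deters harvesters that search for a literal "@"; a collector that knows the substitution can undo it just as `unmunge` does.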

Quality Spine Care

Quality Spine Care: Healthcare Systems, Quality Reporting, and Risk Adjustment. Editors: John Ratliff (Department of Neurosurgery, Stanford University, Stanford, CA, USA), Todd J. Albert (Hospital for Special Surgery, New York, NY, USA), Joseph Cheng (University of Cincinnati, Cincinnati, OH, USA), Jack Knightly (Atlantic Neurosurgical Specialists, Morristown, NJ, USA). ISBN 978-3-319-97989-2; ISBN 978-3-319-97990-8 (eBook). https://doi.org/10.1007/978-3-319-97990-8. Library of Congress Control Number: 2018957706. © Springer Nature Switzerland AG 2019. This work is subject to copyright. All rights are reserved by the Publisher, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, reuse of illustrations, recitation, broadcasting, reproduction on microfilms or in any other physical way, and transmission or information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed. The use of general descriptive names, registered names, trademarks, service marks, etc. in this publication does not imply, even in the absence of a specific statement, that such names are exempt from the relevant protective laws and regulations and therefore free for general use. The publisher, the authors, and the editors are safe to assume that the advice and information in this book are believed to be true and accurate at the date of publication. Neither the publisher nor the authors or the editors give a warranty, express or implied, with respect to the material contained herein or for any errors or omissions that may have been made.

Hidden Meaning

FEATURES: Microdata and Microformats. Hidden Meaning: Working with microformats and microdata. By Andreas Möller. (Image credit: Kit Sen Chin, 123RF.com.) Programs aren’t as smart as humans when it comes to interpreting the meaning of web information. If you want to maximize your search rank, you might want to dress up your HTML documents with microformats and microdata. HTML lets you mark up sections of text as headings, body text, hyperlinks, and other web page elements. However, these definitions have nothing to do with the meaning of the data: Does the text refer to a person, an organization, a product, or an event? Microformats [1] and their successor, microdata [2] make the meaning a bit more clear by pointing to business cards, product descriptions, offers, and event data in a machine-readable way. [...] formats and microdata into your own programs. Microformats: HTML was originally designed for humans to read, but with the explosive growth of the web, programs such as search engines also process HTML data. What do programs that read HTML data typically find? Listing 1 shows the HTML source code for the website shown in Figure 1, an HTML5 document with a business card. The Heading text block is marked up with the element h1. The text for the business card is surrounded by the div container element, and <br/> introduces a line break. It is easy for a human reader to see that the data shown in Figure 1 represents a business card, even if the text is [...] (Listing 1: HTML5 Document with Business Card.)

What Is the hProduct Microformat? Why Use the hProduct Microformat

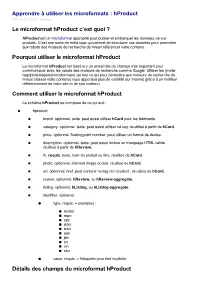

Learning to use microformats: hProduct. 2017-02-21 23:02:01, Nicolaseo. What is the hProduct microformat? hProduct is a microformat suited to publishing and embedding your product data. It is a kind of meta tag that structures your data so that search engine robots can index your content better. Why use the hProduct microformat? The hProduct microformat is based on a set of fields that matter a great deal when communicating with the robots of search engines such as Google. Using meta tags, rich snippets, and microformats (and anything else that helps search engines rank your content better) will bring you more visibility on the internet through better indexing of your site and its content. How to use the hProduct microformat: the hProduct schema consists of the following. hproduct (the root). brand: optional; text; may also use hCard for manufacturers. category: optional; text; may also use rel-tag; reused from hCard. price: optional; floating point number; may use a currency format. description: optional; text; may also include valid HTML markup; reused from hReview. fn: required; text; product name or title; reused from hCard. photo: optional; image element or link; reused from hCard. url: optional; href; may contain rel-tag rel='product'; reused from hCard. review: optional; hReview or hReview-aggregate. listing: optional; hListing or hListing-aggregate. identifier: optional, with two parts: type (required; examples: model, mpn, upc, isbn, issn, ean, jan, sn, vin, sku) and value (required; the label may be implicit). Details of the hProduct microformat fields: the class names category, fn, photo, url are reused from hCard.
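To make the field list above concrete, here is a minimal Python sketch that renders a few of the listed fields as HTML class names. The function name `render_hproduct`, the choice of `div` and `span` elements, and the sample product are assumptions for illustration; only the class names (hproduct, fn, brand, category, price, description) and the rule that fn is required come from the excerpt.

```python
from html import escape


def render_hproduct(fn, brand=None, category=None, price=None, description=None):
    """Return an HTML fragment that uses hProduct class names (illustrative)."""
    if not fn:
        # fn (product name or title) is the only top-level field marked required.
        raise ValueError("hProduct requires a non-empty fn")

    lines = ['<div class="hproduct">']
    lines.append(f'  <span class="fn">{escape(fn)}</span>')

    # Optional fields are emitted only when a value was supplied.
    optional = [
        ("brand", brand),
        ("category", category),
        ("price", price),
        ("description", description),
    ]
    for class_name, value in optional:
        if value is not None:
            lines.append(f'  <span class="{class_name}">{escape(str(value))}</span>')

    lines.append("</div>")
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_hproduct("Espresso Machine", brand="Acme", price=199.0))
```

The sketch leaves out photo, url, review, listing, and identifier, which need link or nested markup rather than plain text, and it escapes all text, so it does not support the HTML markup that the description field is allowed to carry.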

Where Is the Semantic Web? – an Overview of the Use of Embeddable Semantics in Austria

Where Is The Semantic Web? – An Overview of the Use of Embeddable Semantics in Austria. Wilhelm Loibl, Institute for Service Marketing and Tourism, Vienna University of Economics and Business, Austria, [email protected]. Abstract: Improving the results of search engines and enabling new online applications are two of the main aims of the Semantic Web. For a machine to be able to read and interpret semantic information, this content has to be offered online first. With several technologies available the question arises which one to use. Those who want to build the software necessary to interpret the offered data have to know what information is available and in which format. In order to answer these questions, the author analysed the business websites of different Austrian industry sectors as to what semantic information is embedded. Preliminary results show that, although overall usage numbers are still small, certain differences between individual sectors exist. Keywords: semantic web, RDFa, microformats, Austria, industry sectors. 1 Introduction: As tourism is a very information-intense industry (Werthner & Klein, 1999), especially novel users resort to well-known generic search engines like Google to find travel related information (Mitsche, 2005). Often, these machines do not provide satisfactory search results as their algorithms match a user’s query against the (weighted) terms found in online documents (Berry & Browne, 1999). One solution to this problem lies in “Semantic Searches” (Maedche & Staab, 2002). In order for them to work, web resources must first be annotated with additional metadata describing the content (Davies, Studer & Warren, 2006). Therefore, anyone who wants to provide data online must decide on which technology to use.

Adversarial Web Search by Carlos Castillo and Brian D. Davison

Foundations and Trends® in Information Retrieval, Vol. 4, No. 5 (2010) 377–486. © 2011 C. Castillo and B. D. Davison. DOI: 10.1561/1500000021. Adversarial Web Search, by Carlos Castillo and Brian D. Davison. Contents:
1 Introduction: 1.1 Search Engine Spam; 1.2 Activists, Marketers, Optimizers, and Spammers; 1.3 The Battleground for Search Engine Rankings; 1.4 Previous Surveys and Taxonomies; 1.5 This Survey.
2 Overview of Search Engine Spam Detection: 2.1 Editorial Assessment of Spam; 2.2 Feature Extraction; 2.3 Learning Schemes; 2.4 Evaluation; 2.5 Conclusions.
3 Dealing with Content Spam and Plagiarized Content: 3.1 Background; 3.2 Types of Content Spamming; 3.3 Content Spam Detection Methods; 3.4 Malicious Mirroring and Near-Duplicates; 3.5 Cloaking and Redirection; 3.6 E-mail Spam Detection; 3.7 Conclusions.
4 Curbing Nepotistic Linking: 4.1 Link-Based Ranking; 4.2 Link Bombs; 4.3 Link Farms; 4.4 Link Farm Detection; 4.5 Beyond Detection; 4.6 Combining Links and Text; 4.7 Conclusions.
5 Propagating Trust and Distrust: 5.1 Trust as a Directed Graph; 5.2 Positive and Negative Trust; 5.3 Propagating Trust: TrustRank and Variants; 5.4 Propagating Distrust: BadRank and Variants; 5.5 Considering In-Links as well as Out-Links; 5.6 Considering Authorship as well as Contents; 5.7 Propagating Trust in Other Settings; 5.8 Utilizing Trust; 5.9 Conclusions.
6 Detecting Spam in Usage Data: 6.1 Usage Analysis for Ranking; 6.2 Spamming Usage Signals; 6.3 Usage Analysis to Detect Spam; 6.4 Conclusions.
7 Fighting Spam in User-Generated Content: 7.1 User-Generated Content Platforms; 7.2 Splogs; 7.3 Publicly-Writable Pages; 7.4 Social Networks and Social Media Sites; 7.5 Conclusions.
8 Discussion: 8.1 The (Ongoing) Struggle Between Search Engines and Spammers; 8.2 Outlook; 8.3 Research Resources; 8.4 Conclusions.
Acknowledgments. References.

Uncovering Social Network Sybils in the Wild

Uncovering Social Network Sybils in the Wild. ZHI YANG, Peking University; CHRISTO WILSON, University of California, Santa Barbara; XIAO WANG, Peking University; TINGTING GAO, Renren Inc.; BEN Y. ZHAO, University of California, Santa Barbara; YAFEI DAI, Peking University. Sybil accounts are fake identities created to unfairly increase the power or resources of a single malicious user. Researchers have long known about the existence of Sybil accounts in online communities such as file-sharing systems, but they have not been able to perform large-scale measurements to detect them or measure their activities. In this article, we describe our efforts to detect, characterize, and understand Sybil account activity in the Renren Online Social Network (OSN). We use ground truth provided by Renren Inc. to build measurement-based Sybil detectors and deploy them on Renren to detect more than 100,000 Sybil accounts. Using our full dataset of 650,000 Sybils, we examine several aspects of Sybil behavior. First, we study their link creation behavior and find that contrary to prior conjecture, Sybils in OSNs do not form tight-knit communities. Next, we examine the fine-grained behaviors of Sybils on Renren using clickstream data. Third, we investigate behind-the-scenes collusion between large groups of Sybils. Our results reveal that Sybils with no explicit social ties still act in concert to launch attacks. Finally, we investigate enhanced techniques to identify stealthy Sybils. In summary, our study advances the understanding of Sybil behavior on OSNs and shows that Sybils can effectively avoid existing community-based Sybil detectors. We hope that our results will foster new research on Sybil detection that is based on novel types of Sybil features.