“Fake News” Is Not Simply False Information: A

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Fake News and Propaganda: a Critical Discourse Research Perspective

Open Information Science 2019; 3: 197–208 Research article Iulian Vamanu* Fake News and Propaganda: A Critical Discourse Research Perspective https://doi.org/10.1515/opis-2019-0014 Received September 25, 2018; accepted May 9, 2019 Abstract: Having been invoked as a disturbing factor in recent elections across the globe, fake news has become a frequent object of inquiry for scholars and practitioners in various fields of study and practice. My article draws intellectual resources from Library and Information Science, Communication Studies, Argumentation Theory, and Discourse Research to examine propagandistic dimensions of fake news and to suggest possible ways in which scientific research can inform practices of epistemic self-defense. Specifically, the article focuses on a cluster of fake news of potentially propagandistic import, employs a framework developed within Argumentation Theory to explore ten ways in which fake news may be used as propaganda, and suggests how Critical Discourse Research, an emerging cluster of theoretical and methodological approaches to discourses, may provide people with useful tools for identifying and debunking fake news stories. My study has potential implications for further research and for literacy practices. In particular, it encourages empirical studies of its guiding premise that people who became familiar with certain research methods are less susceptible to fake news. It also contributes to the design of effective research literacy practices. Keywords: post-truth, literacy, scientific research, discourse studies, persuasion “Don’t be so overly dramatic about it, Chuck. You’re saying it’s a falsehood [...] Sean Spicer, our press secretary, gave alternative facts to that.” (Kellyanne Conway, Counselor to the U.S. -

Media Effects and Marginalized Ideas: Relationships Among Media Consumption and Support for Black Lives Matter

International Journal of Communication 13(2019), 4287–4305 1932–8036/20190005 Media Effects and Marginalized Ideas: Relationships Among Media Consumption and Support for Black Lives Matter DANIELLE KILGO Indiana University, USA RACHEL R. MOURÃO Michigan State University, USA Building on research analyses of Black Lives Matter media portrayals, this inquiry uses a two-wave panel survey to examine the effects news coverage has on the evaluation of the core ideas from the Black Lives Matter social movement agenda. Results show that conservative media use increases negative evaluations; models suggest this relationship works as a multidirectional feedback loop. Mainstream and liberal media consumptions do not lead to more positive views about Black Lives Matter’s core ideas. Keywords: media effects, partisan media, conflict, news audiences, Black Lives Matter The 2014 shooting death of Michael Brown in Ferguson, MO, was the catalyst that launched a brewing protest movement into the international spotlight. Police officer Darren Wilson shot the unarmed teenager multiple times in the middle of a neighborhood street. Initial protests aimed at finding justice for Brown turned violent quickly and were subsequently met with a militarized police force (e.g., Brown, 2014). Local protests continued in Ferguson while the jury deliberated Wilson’s possible indictment. However, in November 2014, Wilson was not indicted, and the decision refueled national protests. Brown’s death was one of many in 2014, and news media paid attention to the reoccurrence of similar scenarios, as well as the associated protests. These demonstrations were part of the growing, decentralized Black Lives Matter (BLM) movement. Protests echoed demands for justice for Black men, women, and children killed by excessive use of force, for police policy reformation, and for the acknowledgment of oppression against Blacks and other marginalized communities. -

Protests, Free Expression, and College Campuses

Social Education 82(1), pp. 6–9 ©2018 National Council for the Social Studies Lessons on the Law Protests, Free Expression, and College Campuses Evan Gerstmann Much has been written about university student protests against conservative speakers on campus, and there has been a great deal of media coverage as well. The bulk of the coverage has been critical, lamenting the lack of respect that today’s students have for free speech and meaningful debate. Across the country, legislation is being considered that would mandate punishment for disruptive students and remove university autonomy in dealing with controversial social issues. This legislation will be discussed in greater detail later in the article. Is this concern overly hyped? Is this legislation really necessary, or even positive? While free speech is a cornerstone of American democracy and is central to the mission of higher education, the coverage of this issue has generally lacked nuance and has failed to pay attention to important distinctions. [...] Maddow; Clarence Thomas and Ruth Bader Ginsburg. Students have absolutely no right to keep a speaker off of their campus simply because they don’t like their point of view. If a professor invites a member of the Nazi Party to campus (and I should mention that not [...] commencement speaker at Rutgers University. At Smith, students and faculty protested Lagarde’s invitation because of her representation of IMF policies, which critics argue have not had a positive influence on developing economies. At Rutgers, students protested Rice’s invitation based on her support of the Iraq War. It was completely reasonable for students to object to both women as commencement speakers. Why is commencement different? Many reasons. Serving as a commencement speaker is not just a speaking opportunity—it is a major honor by a -

1 to What Extent Has Sensationalism in the Oregon Shooting, the Ebola

To what extent has sensationalism in the Oregon shooting, the Ebola Crisis, and the missing Malaysian flight avoided genuine problems in print media? AP CAPSTONE RESEARCH March 14, 2016 Introduction Sensationalism can be defined as subject matter designed to produce startling or thrilling impressions or to excite and please vulgar taste. (Dictionary.com, 2016) The use of sensationalism in media, or other forms of entertainment, can be traced back to ancient Roman society, where messages would be publicly presented. On public message boards, information was considered to be sensationalized as scandalous and thrill-seeking stories were typically presented first, and in the most exciting manner. From that period on, although not officially given a name, the idea of sensationalizing stories became predominant in society. (Czarny, 2016) It is typical amongst news outlets that stories involving death and crime gain the most attention. Not only do these stories exhibit controversial, graphic, and expressive details, but they give news outlets the opportunity to report on high-profile cases that can be followed and updated for days to come, filling up air time. (Czarny, 2016) The idea of sensationalism began after the nineteenth century, due to William Randolph Hearst and Joseph Pulitzer. Both publishers worked for high-profile media outlets based out of New York City, and developed the idea and name of Yellow Journalism. Journalists would uphold the title of a “yellow journalist” as their stories would exaggerate events and conceal accurate details to attract and rouse viewers. (Story, 2016) Although unethical, media outlets saw dramatic increases in the numbers of viewers, and thrived off of the attention they were receiving. -

The Discursive Construction of the Gamification of Journalism

Archived version from NCDOCKS Institutional Repository http://libres.uncg.edu/ir/asu/ The Discursive Construction Of The Gamification Of Journalism By: Tim P. Vos and Gregory P. Perreault Abstract This study explores the discursive, normative construction of gamification within journalism. Rooted in a theory of discursive institutionalism and by analyzing a significant corpus of metajournalistic discourse from 2006 to 2019, the study demonstrates how journalists have negotiated gamification’s place within journalism’s boundaries. The discourse addresses criticism that gamified news is a move toward infotainment and makes the case for gamification as serious journalism anchored in norms of audience engagement. Thus, gamification does not constitute institutional change since it is construed as an extension of existing institutional norms and beliefs. Vos TP, Perreault GP. The discursive construction of the gamification of journalism. Convergence. 2020;26(3):470-485. doi:10.1177/1354856520909542. Publisher version of record available at: https://journals.sagepub.com/doi/full/10.1177/1354856520909542 Special Issue: Article. Convergence: The International Journal of Research into New Media Technologies 2020, Vol. 26(3) 470–485 © The Author(s) 2020 Article reuse guidelines: sagepub.com/journals-permissions DOI: 10.1177/1354856520909542 journals.sagepub.com/home/con Tim P Vos Michigan State University, USA Gregory P Perreault Appalachian State University, USA -

Political Rhetoric and Minority Health: Introducing the Rhetoric-Policy-Health Paradigm

Saint Louis University Journal of Health Law & Policy Volume 12 Issue 1 Public Health Law in the Era of Alternative Facts, Isolationism, and the One Percent Article 7 2018 Political Rhetoric and Minority Health: Introducing the Rhetoric-Policy-Health Paradigm Kimberly Cogdell Grainger North Carolina Central University Follow this and additional works at: https://scholarship.law.slu.edu/jhlp Part of the Health Law and Policy Commons Recommended Citation Kimberly C. Grainger, Political Rhetoric and Minority Health: Introducing the Rhetoric-Policy-Health Paradigm, 12 St. Louis U. J. Health L. & Pol'y (2018). Available at: https://scholarship.law.slu.edu/jhlp/vol12/iss1/7 This Symposium Article is brought to you for free and open access by Scholarship Commons. It has been accepted for inclusion in Saint Louis University Journal of Health Law & Policy by an authorized editor of Scholarship Commons. For more information, please contact Susie Lee. SAINT LOUIS UNIVERSITY SCHOOL OF LAW POLITICAL RHETORIC AND MINORITY HEALTH: INTRODUCING THE RHETORIC-POLICY-HEALTH PARADIGM KIMBERLY COGDELL GRAINGER* ABSTRACT Rhetoric is a persuasive device that has been studied for centuries by philosophers, thinkers, and teachers. In the political sphere of the Trump era, the bombastic, social media driven dissemination of rhetoric creates the perfect space to increase its effect. Today, there are clear examples of how rhetoric influences policy. This Article explores the link between divisive political rhetoric and policies that negatively affect minority health in the U.S. The rhetoric-policy-health (RPH) paradigm illustrates the connection between rhetoric and health. Existing public health policy research related to Health in All Policies and the social determinants of health combined with rhetorical persuasive tools create the foundation for the paradigm. -

Fake News, Real Hip: Rhetorical Dimensions of Ironic Communication in Mass Media

FAKE NEWS, REAL HIP: RHETORICAL DIMENSIONS OF IRONIC COMMUNICATION IN MASS MEDIA By Paige Broussard. Matthew Guy, Associate Professor, Director of Thesis; Heather Palmer, Associate Professor, Committee Chair; Rebecca Jones, UC Foundation Associate Professor, Committee Chair. A Thesis Submitted to the Faculty of the University of Tennessee at Chattanooga in Partial Fulfillment of the Requirements of the Degree of Master of Arts in English The University of Tennessee at Chattanooga Chattanooga, Tennessee December 2013 ABSTRACT This paper explores the growing genre of fake news, a blend of information, entertainment, and satire, in mainstream mass media, specifically examining the work of Stephen Colbert. First, this work examines classic definitions of satire and contemporary definitions and usages of irony in an effort to understand how they function in the fake news genre. Using a theory of postmodern knowledge, this work aims to illustrate how satiric news functions epistemologically using both logical and narrative paradigms. Specific artifacts are examined from Colbert’s speech in an effort to understand how rhetorical strategies function during his performances. ACKNOWLEDGEMENTS Without the gracious help of several supporting faculty members, this thesis simply would not exist. I would like to acknowledge Dr. Matthew Guy, who agreed to direct this project, a piece of work that I was eager to tackle though I lacked a steadfast compass. Thank you, Dr. Rebecca Jones, for both stern revisions and kind encouragement, and knowing the appropriate times for each. I would like to thank Dr. -

Download a PDF Version of the Official

“To Open Minds, To Educate Intelligence, To Inform Decisions” The International Academic Forum provides new perspectives to the thought-leaders and decision-makers of today and tomorrow by offering constructive environments for dialogue and interchange at the intersections of nation, culture, and discipline. Headquartered in Nagoya, Japan, and registered as a Non-Profit Organization (一般社団法人), IAFOR is an independent think tank committed to the deeper understanding of contemporary geo-political transformation, particularly in the Asia Pacific Region. INTERNATIONAL INTERCULTURAL INTERDISCIPLINARY iafor The Executive Council of the International Advisory Board: Mr Mitsumasa Aoyama, Director, The Yufuku Gallery, Tokyo, Japan; Lord Charles Bruce, Lord Lieutenant of Fife, Chairman of the Patrons of the National Galleries of Scotland, Trustee of the Historic Scotland Foundation, UK; Professor Donald E. Hall, Herbert J. and Ann L. Siegel Dean, Lehigh University, USA, Former Jackson Distinguished Professor of English; Professor June Henton, Dean, College of Human Sciences, Auburn University, USA; Professor Michael Hudson, President of The Institute for the Study of Long-Term Economic Trends (ISLET), Distinguished Research Professor of Economics, The University of Missouri, Kansas City; Professor Koichi Iwabuchi, Professor of Media and Cultural Studies & Director of the Monash Asia Institute, Monash University, Australia; Professor Baden Offord, Professor of Cultural Studies and Human Rights & Co-Director of the Centre for Peace and Social Justice, Southern Cross University, Australia; Professor Frank S. Ravitch, Professor of Law & Walter H. Stowers Chair in Law and Religion, Michigan State University College of Law; Professor Richard Roth, Senior Associate Dean, Medill School of Journalism, Northwestern University, Qatar; Professor Monty P. -

Outline for Introduction

Introduction Twentieth-century American journalism was born in a little-remembered burst of inspired self-promotion. It was born in a paroxysm of yellow journalism. Ten seconds into the century, the first issue of the New York Journal of 1 January 1901 fell from the newspaper’s complex of fourteen high-speed presses. The first issue was rushed by automobile across pavements slippery with mud and rain to a waiting express train, reserved especially for the occasion. The newspaper was folded into an engraved silver case and carried aboard by Langdon Smith, a young reporter known for his vivid prose style. At speeds that reached eighty miles an hour, the special train raced through the darkness to Washington, D.C., and Smith’s rendezvous with the president, William McKinley. The president’s personal secretary made no mention in his diary of the special delivery of the Journal that day, noting instead that the New Year’s reception at the executive mansion had attracted 5,500 well-wishers and was said to have been “the most successful for many years.”1 But the Journal exulted: A banner headline spilled across the front page of the 2 January 1901 issue, asserting the Journal’s distinction of having published “the first Twentieth Century newspaper . in this country,” and that the first issue had been delivered at considerable expense and effort directly to McKinley.2 There was a lot of yellow journalism in Smith’s turn-of-the-century run to Washington. The occasion illuminated the qualities that made the genre—of which the New York Journal was an archetype—both so irritating and so irresistible: Yellow journalism could be imaginative yet frivolous, aggressive yet self-indulgent. -

“Yellow-Kid Reporter” Era, and Artifice

Introduction American Children’s Literature, the “Yellow-Kid Reporter” Era, and Artifice Pretend it’s 1896. You live in New York City. It’s Sunday. That means today is the day for the supplement edition of The World newspaper, and that means Hogan’s Alley and the Yellow Kid. In the days when the printed newspaper defined the world for the reading public, Joseph Pulitzer’s World attempted to report and reimagine it in bold, innovative ways, including through the use of color and comics. Hogan’s Alley, a one-panel illustration starring the uncouth Yellow Kid and his gang of street children, became one of the pioneers of this daring, fledgling form that had discovered a new way to—well, what exactly did it do? The introduction of bright visual hues and irreverent characters made it a novelty feature and popular amusement. But it also functioned as biting political satire and cultural commentary, and it did so through the figures of children. Whether young or old, it’s not unlikely that you would have eagerly sought out a copy of The World on Sundays. But what “news” of the world would you have absorbed? What was the Yellow Kid reporting to his readers? If you had picked up the Sunday World on July 26, 1896, you may have skipped straight to the supplement to see the new raucous Hogan’s Alley. It might not have been giving its audience new information about the latest happenings in New York or Washington, but in some fashion, it was usually saying something about those happenings. -

Muckraker Project.Pdf

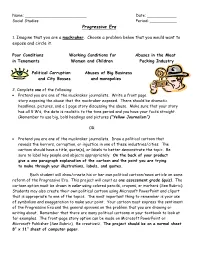

Name: _________________________ Date: ____________ Social Studies Period: ___________ Progressive Era

1. Imagine that you are a muckraker. Choose a problem below that you would want to expose and circle it. Poor Conditions in Tenements; Working Conditions for Women and Children; Abuses in the Meat Packing Industry; Political Corruption and City Bosses; Abuses of Big Business and monopolies.

2. Complete one of the following:

• Pretend you are one of the muckraker journalists. Write a front page story exposing the abuse that the muckraker exposed. There should be dramatic headlines, pictures, and a 1 page story discussing the abuse. Make sure that your story has all 5 W’s, the date is realistic to the time period and you have your facts straight. (Remember to use big, bold headings and pictures (“Yellow Journalism”).)

OR

• Pretend you are one of the muckraker journalists. Draw a political cartoon that reveals the horrors, corruption, or injustice in one of these industries/cities. The cartoon should have a title, quote(s), or labels to better demonstrate the topic. Be sure to label key people and objects appropriately. On the back of your product give a one paragraph explanation of the cartoon and the point you are trying to make through your illustrations, labels, and quotes.

Each student will draw/create his or her own political cartoon/news article on some reform of the Progressive Era. This project will count as one assessment grade (quiz). The cartoon option must be drawn in color using colored pencils, crayons, or markers (See Rubric). Students may also create their own political cartoon using Microsoft PowerPoint and clipart that is appropriate to one of the topics. -

What Is a Muckraker? (PDF, 67

What Is a Muckraker? President Theodore Roosevelt popularized the term muckrakers in a 1906 speech. The muckraker was a man in John Bunyan’s 1678 allegory Pilgrim’s Progress who was too busy raking through barnyard filth to look up and accept a crown of salvation. Though Roosevelt probably meant to include “mud-slinging” politicians who attacked others’ moral character rather than debating their ideas, the term has stuck as a reference to journalists who investigate wrongdoing by the rich or powerful. Historical Significance In its narrowest sense, the term refers to long-form, investigative journalism published in magazines between 1900 and World War I. Unlike the practitioners of yellow journalism, the reporters and editors of this period valued accuracy and the aggressive pursuit of information, often by searching through mounds of documents and data and by conducting extensive interviews. Their work sometimes included first-person reporting. Muckraking was seen in action when Ida M. Tarbell exposed the strong-arm practices of the Standard Oil Company in a 19-part series in McClure’s Magazine between 1902 and 1904. Her exposé helped end the company’s monopoly over the oil industry. Her stories included information from thousands of documents from across the nation as well as interviews with current and former executives, competitors, government regulators, antitrust lawyers and academic experts. The series was republished as “The History of the Standard Oil Company.” Upton Sinclair’s investigation of the meatpacking industry gave rise to at least two significant federal laws, the Meat Inspection Act and the Pure Food and Drug Act. Sinclair spent seven weeks as a worker at a Chicago meatpacking plant and another four months investigating the industry, then published a fictional work based on his findings as a serial from February to November of 1905 in a socialist magazine.