Are Research Datasets FAIR in the Long Run?

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Digital Backgrounds Using GIMP

Digital Backgrounds using GIMP GIMP is a free image editing program that rivals the functions of the basic versions of industry leaders such as Adobe Photoshop and Corel Paint Shop Pro. For information on which image editing program is best for your needs, please refer to our website, digital-photo- backgrounds.com in the FAQ section. This tutorial was created to teach you, step-by-step, how to remove a subject (person or item) from its original background and place it onto a Foto*Fun Digital Background. Upon completing this tutorial, you will have the skills to create realistic portraits plus photographic collages and other useful functions. If you have any difficulties with any particular step in the process, feel free to contact us anytime at digital-photo-backgrounds.com. How to read this tutorial It is recommended that you use your own image when following the instructions. However, one has been provided for you in the folder entitled "Tutorial Images". This tutorial uses screenshots to visually show you each step of the process. Each step will be numbered which refers to a number within the screenshot image to indicate the location of the function or tool being described in that particular step. Written instructions are first and refer to the following screenshot image. Keyboard shortcuts are shown after most of the described functions and are in parentheses as in the following example (ctrl+T); which would indicate that the "ctrl" key and the letter "T" key should be pressed at the same time. These are keyboard shortcuts and can be used in place of the described procedure; not in addition to the described procedure. -

Seashore Guide

Seashore The Incomplete Guide Contents Contents..........................................................................................................................1 Introducing Seashore.......................................................................................................4 Product Summary........................................................................................................4 Technical Requirements ..............................................................................................4 Development Notice....................................................................................................4 Seashore’s Philosophy.................................................................................................4 Seashore and the GIMP...............................................................................................4 How do I contribute?...................................................................................................5 The Concepts ..................................................................................................................6 Bitmaps.......................................................................................................................6 Colours .......................................................................................................................7 Layers .........................................................................................................................7 Channels .................................................................................................................. -

Freeware Irfanview Windows 10 Latest Version Download Freeware Irfanview Windows 10 Latest Version Download

freeware irfanview windows 10 latest version download Freeware irfanview windows 10 latest version download. Advantages of IrfanView 64-bit over 32-bit version: It can load VERY large files/images (image RAM size over 1.3 GB, for special users) Faster for very large images (25+ Megapixels, loading or image operations) Runs 'only' on a 64-bit Windows (Vista, Win7, Win8, Win10) Advantages of IrfanView 32-bit over 64-bit version: Runs on a 32-bit and 64-bit Windows Loads all files/images for normal needs (max. RAM size is about 1.3 GB) Needs less disc space All PlugIns will work: not all PlugIns are ported (yet) to 64-bit (like OCR) and some 32-bit PlugIns must be still used in the 64-bit version, some with limitations (see the "Plugins32" folder) Some old 32-bit PlugIns (like RIOT and Adobe 8BF PlugIn) work only in compatilibilty mode in IrfanView-64 ( only 32-bit 8BF files/effects can be used ) Command line options for scanning (/scan etc.) work only in 32-bit (because no 64-bit TWAIN drivers ) Notes: You can install both versions on the same system, just use different folders . For example: install the 32-bit version in your "Program Files (x86)" folder and the 64-bit version in your "Program Files" folder (install 32-bit PlugIns to IrfanView-32 and 64-bit PlugIns to IrfanView-64, DO NOT mix the PlugIns and IrfanView bit versions) The program name and icon have some extra text in the 64-bit version for better distinguishing. Available 64-bit downloads. -

Digital Photo Editing

Digital Photo Editing Digital Photo Editing There are two main catagories of photo editing software. 1. Photo Organizers - Programs that help you find your pictures. May also do some editing, and create web pages and collages. Examples: Picasa, XNView, ACDsee, Adobe Photoshop Elements 2. Photo Editors - Work on one picture file at a time. Usually more powerful editing features. Examples: Adobe Photoshop, Gimp, Paint.Net, Corel Paint Shop Photo Organizers Organizers tend to have a similar look and functionality to each other. Thumb nail views, a directory tree of your files and folders, and a slightly larger preview of the picture currently selected. A selection of the most used editing tools, and batch editing for making minor corrections to multiple pictures at once. The ability to create slide shows, contact sheets, and web pages are also features you can expect to see. XNView Picasa ACDsee Some of the editing features included in Photo Organizer software are: Red Eye Reduction, Rotate, Resize, Contrast, Color Saturation, Sharpen Focus and more. Many of these can be done in batch mode to as many pictures as you select. Picasa has added Picnik to it's tool set allowing you to upload your photo to the Picnik website for added editing features. Here is an example of Redeye removal in Picasa. Crop, Straighten, and Fill Light are often needed basic fixes. Saving and converting your picture file. In Xnview you can import about 400 file formats and export in about 50. For the complete list goto http://www.xnview. com/en/formats.html . Here is a list of some of the key file formats your likely to use and / or come across often. -

R-Photo User's Manual

User's Manual © R-Tools Technology Inc 2020. All rights reserved. www.r-tt.com © R-tools Technology Inc 2020. All rights reserved. No part of this User's Manual may be copied, altered, or transferred to, any other media without written, explicit consent from R-tools Technology Inc.. All brand or product names appearing herein are trademarks or registered trademarks of their respective holders. R-tools Technology Inc. has developed this User's Manual to the best of its knowledge, but does not guarantee that the program will fulfill all the desires of the user. No warranty is made in regard to specifications or features. R-tools Technology Inc. retains the right to make alterations to the content of this Manual without the obligation to inform third parties. Contents I Table of Contents I Start 1 II Quick Start Guide in 3 Steps 1 1 Step 1. Di.s..k.. .S..e..l.e..c..t.i.o..n.. .............................................................................................................. 1 2 Step 2. Fi.l.e..s.. .M..a..r..k.i.n..g.. ................................................................................................................ 4 3 Step 3. Re..c..o..v..e..r.y.. ...................................................................................................................... 6 III Features 9 1 File Sorti.n..g.. .............................................................................................................................. 9 2 File Sea.r.c..h.. ............................................................................................................................ -

Forcepoint DLP Supported File Formats and Size Limits

Forcepoint DLP Supported File Formats and Size Limits Supported File Formats and Size Limits | Forcepoint DLP | v8.8.1 This article provides a list of the file formats that can be analyzed by Forcepoint DLP, file formats from which content and meta data can be extracted, and the file size limits for network, endpoint, and discovery functions. See: ● Supported File Formats ● File Size Limits © 2021 Forcepoint LLC Supported File Formats Supported File Formats and Size Limits | Forcepoint DLP | v8.8.1 The following tables lists the file formats supported by Forcepoint DLP. File formats are in alphabetical order by format group. ● Archive For mats, page 3 ● Backup Formats, page 7 ● Business Intelligence (BI) and Analysis Formats, page 8 ● Computer-Aided Design Formats, page 9 ● Cryptography Formats, page 12 ● Database Formats, page 14 ● Desktop publishing formats, page 16 ● eBook/Audio book formats, page 17 ● Executable formats, page 18 ● Font formats, page 20 ● Graphics formats - general, page 21 ● Graphics formats - vector graphics, page 26 ● Library formats, page 29 ● Log formats, page 30 ● Mail formats, page 31 ● Multimedia formats, page 32 ● Object formats, page 37 ● Presentation formats, page 38 ● Project management formats, page 40 ● Spreadsheet formats, page 41 ● Text and markup formats, page 43 ● Word processing formats, page 45 ● Miscellaneous formats, page 53 Supported file formats are added and updated frequently. Key to support tables Symbol Description Y The format is supported N The format is not supported P Partial metadata -

Designing and Developing a Model for Converting Image Formats Using Java API for Comparative Study of Different Image Formats

International Journal of Scientific and Research Publications, Volume 4, Issue 7, July 2014 1 ISSN 2250-3153 Designing and developing a model for converting image formats using Java API for comparative study of different image formats Apurv Kantilal Pandya*, Dr. CK Kumbharana** * Research Scholar, Department of Computer Science, Saurashtra University, Rajkot. Gujarat, INDIA. Email: [email protected] ** Head, Department of Computer Science, Saurashtra University, Rajkot. Gujarat, INDIA. Email: [email protected] Abstract- Image is one of the most important techniques to Different requirement of compression in different area of image represent data very efficiently and effectively utilized since has produced various compression algorithms or image file ancient times. But to represent data in image format has number formats with time. These formats includes [2] ANI, ANIM, of problems. One of the major issues among all these problems is APNG, ART, BMP, BSAVE, CAL, CIN, CPC, CPT, DPX, size of image. The size of image varies from equipment to ECW, EXR, FITS, FLIC, FPX, GIF, HDRi, HEVC, ICER, equipment i.e. change in the camera and lens puts tremendous ICNS, ICO, ICS, ILBM, JBIG, JBIG2, JNG, JPEG, JPEG 2000, effect on the size of image. High speed growth in network and JPEG-LS, JPEG XR, MNG, MIFF, PAM, PCX, PGF, PICtor, communication technology has boosted the usage of image PNG, PSD, PSP, QTVR, RAS, BE, JPEG-HDR, Logluv TIFF, drastically and transfer of high quality image from one point to SGI, TGA, TIFF, WBMP, WebP, XBM, XCF, XPM, XWD. another point is the requirement of the time, hence image Above mentioned formats can be used to store different kind of compression has remained the consistent need of the domain. -

Scape D10.1 Keeps V1.0

Identification and selection of large‐scale migration tools and services Authors Rui Castro, Luís Faria (KEEP Solutions), Christoph Becker, Markus Hamm (Vienna University of Technology) June 2011 This work was partially supported by the SCAPE Project. The SCAPE project is co-funded by the European Union under FP7 ICT-2009.4.1 (Grant Agreement number 270137). This work is licensed under a CC-BY-SA International License Table of Contents 1 Introduction 1 1.1 Scope of this document 1 2 Related work 2 2.1 Preservation action tools 3 2.1.1 PLANETS 3 2.1.2 RODA 5 2.1.3 CRiB 6 2.2 Software quality models 6 2.2.1 ISO standard 25010 7 2.2.2 Decision criteria in digital preservation 7 3 Criteria for evaluating action tools 9 3.1 Functional suitability 10 3.2 Performance efficiency 11 3.3 Compatibility 11 3.4 Usability 11 3.5 Reliability 12 3.6 Security 12 3.7 Maintainability 13 3.8 Portability 13 4 Methodology 14 4.1 Analysis of requirements 14 4.2 Definition of the evaluation framework 14 4.3 Identification, evaluation and selection of action tools 14 5 Analysis of requirements 15 5.1 Requirements for the SCAPE platform 16 5.2 Requirements of the testbed scenarios 16 5.2.1 Scenario 1: Normalize document formats contained in the web archive 16 5.2.2 Scenario 2: Deep characterisation of huge media files 17 v 5.2.3 Scenario 3: Migrate digitised TIFFs to JPEG2000 17 5.2.4 Scenario 4: Migrate archive to new archiving system? 17 5.2.5 Scenario 5: RAW to NEXUS migration 18 6 Evaluation framework 18 6.1 Suitability for testbeds 19 6.2 Suitability for platform 19 6.3 Technical instalability 20 6.4 Legal constrains 20 6.5 Summary 20 7 Results 21 7.1 Identification of candidate tools 21 7.2 Evaluation and selection of tools 22 8 Conclusions 24 9 References 25 10 Appendix 28 10.1 List of identified action tools 28 vi 1 Introduction A preservation action is a concrete action, usually implemented by a software tool, that is performed on digital content in order to achieve some preservation goal. -

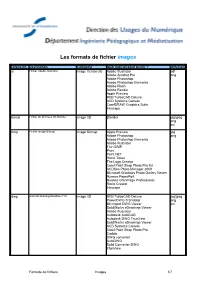

Les Formats De Fichier Images

Les formats de fichier images Extension Description Catégorie Quel logiciel pour ouvrir ? diffusion ai Fichier Adobe Illustrator Image Vectorielle Adobe Illustrator pdf Adobe Acrobat Pro png Adobe Photoshop Adobe Photoshop Elements Adobe Flash Adobe Reader Apple Preview IMSI TurboCAD Deluxe ACD Systems Canvas CorelDRAW Graphics Suite Inkscape blend Fichier de données 3D Blender Image 3D Blender jpg/jpeg png avi bmp Fichier Image Bitmap Image Bitmap Apple Preview jpg Adobe Photoshop png Adobe Photoshop Elements Adobe Illustrator The GIMP Paint Paint.NET Roxio Toast The Logo Creator Corel Paint Shop Photo Pro X3 ACDSee Photo Manager 2009 Microsoft Windows Photo Gallery Viewer Nuance PaperPort Nuance OmniPage Professional Roxio Creator Inkscape dwg AtoCAD Drawing Database File Image 3D IMSI TurboCAD Deluxe jpg/jpeg PowerDWG Translator png Microspot DWG Viewer avi SolidWorks eDrawings Viewer Adobe Illustrator Autodesk AutoCAD Autodesk DWG TrueView SolidWorks eDrawings Viewer ACD Systems Canvas Corel Paint Shop Photo Pro Caddie DWG converter AutoDWG Solid Converter DWG IrfanView Formats de fichiers Images 1/7 Les formats de fichier images Extension Description Catégorie Quel logiciel pour ouvrir ? diffusion dxf Drawing Exchange Format File Image 3D TurboCAD Deluxe 16 jpg/jpeg PowerCADD PowerDWG translator png Microspot DWG Viewer avi NeoOffice Draw DataViz MacLink Plus Autodesk AutoCAD IMSI TurboCAD Deluxe SolidWorks eDrawings Viewer Corel Paint Shop Photo Pro ACD Systems Canvas DWG converter DWG2Image Converter OpenOffice.org Draw Adobe Illustrator -

Table of Contents

Contents in Detail Introduction ..................... xv 1.5 Exercises.................. 24 ThisBook.................. xv 2 Photograph Retouching ....... 25 TheAuthors................ xvi 2.1 Tutorial: Enhancing Badly Taken Production Notes . xvii Photographs . 25 Conventions . xvii Cropping, Straightening, and Acknowledgments . xvii Restoring Perspective . 25 Correcting Exposure . 26 Part I Learning GIMP Correcting the Color Balance . 28 1 Getting Started .............. 3 Removing an Object . 29 1.1 GIMP Basics . 3 Improving Sharpness . 30 The Screen Layout . 3 Removing Red Eye . 30 Dockable Dialogs . 4 2.2 Global Transformations . 32 TheToolbox................ 6 Resizing an Image . 32 The Single-Window Interface . 6 Shearing an Image . 33 1.2 Image Handling Basics . 8 Changing Brightness, Contrast, and Opening an Image . 8 Levels............... 34 Maximizing an Image . 9 Adjusting Colors . 36 Scanning.................. 10 2.3 Local Transformations . 38 Image Window Menus . 11 Making a Selection . 38 Zooming.................. 13 Modifying Sharpness . 41 Saving an Image . 13 Modifying Brightness . 42 1.3 Working with Images . 15 Using the Clone Tool . 45 Resizing an Image . 15 Perspective Cloning . 46 Cropping an Image . 15 More Correction Methods for Red Eye 47 Adding a Frame with Filters . 17 Changing the Sky of a Landscape . 49 UsingLayers................ 18 2.4 Retouching a Scanned Photograph . 50 Drawing in GIMP . 19 Correcting Colors . 50 Printing with GIMP . 20 Correcting Scratches and Spots . 53 1.4 Using a Tablet . 21 Restoring Very Old Photographs . 54 Installing a Tablet . 22 2.5 Advanced Techniques . 55 The Tablet Mouse and Stylus . 22 Making a Photograph Look Older . 55 Working with a Tablet in GIMP . 23 Improving a Portrait . 57 The Book of GIMP ©2013, Olivier Lecarme and Karine Delvare gimp_FINAL_FINAL_pourPDF.indd 7 10/16/2012 11:45:48 AM viii Contents in Detail Contents in Detail 0 ix Using Multiple Captures of the Using the Embossing Filters . -

Registry Support for Multimedia and Metadata in Emu 3.2.03

Registry support for multimedia and metadata in EMu 3.2.03. • Overview • ImageMagick • Multimedia o Audio o Video o Images • Metadata o EXIF o IPTC o XMP o Embed in derivatives o Extract into Multimedia module o Limiting Colours computation Overview The image, audio and video libraries used to support multimedia have been replaced in KE EMu 3.2.03. The previous libraries were becoming dated and lacked support for newer file formats, in particular 16 bit graphics and CMYK colour spaces, as well as JPEG 2000. The previous libraries also used a simple algorithm for resizing images, which led to loss of clarity and colour. Rather than tie EMu image development to a third party vendor an open source solution was adopted as this provides development for new image formats and metadata standards as they emerge. It was decided that ImageMagick offered the functionally to expand the current image support in EMu. Unfortunately ImageMagick does not provide support for audio or video formats, so it was decided to build this functionality into EMu rather then use third party libraries. Access to metadata stored in image files is made available through ImageMagick. In particular, it has limited support for EXIF, IPTC and XMP metadata profiles. EMu now uses this support to extract metadata from master images and to embed metadata into derived images. This document describes how the new multimedia and metadata features can be configured using the EMu Registry. ImageMagick The ImageMagick libraries distributed with EMu are stored under the same directory as the program executable. If a network installation is performed, the libraries reside on a server machine in a directory accessible to all client machines. -

Tipos De Ficheros Soportados

Tipos de ficheros soportados File Type Support Description EXIF IPTC XMP ICC1 Other 3FR R Hasselblad RAW (TIFF-based) R R R R - 3G2, 3GP2 R/W R/W2 R/W2 R/W/C - R/W QuickTime3 3rd Gen. Partnership Project 2 a/v (QuickTime-based) 3GP, 3GPP R/W R/W2 R/W2 R/W/C - R/W QuickTime3 3rd Gen. Partnership Project a/v (QuickTime-based) - - - - AA R Audible Audiobook R Audible AAX R/W R/W2 R/W2 R/W/C - R/W QuickTime3 Audible Enhanced Audiobook (QuickTime-based) ACR R - - - - R DICOM American College of Radiology ACR-NEMA (DICOM-like) R - - - - R Font Adobe [Composite/Multiple Master] AFM, ACFM, AMFM Font Metrics AI, AIT R/W R/W/C4 R/W/C4 R/W/C R/W/C4 Adobe Illustrator [Template] (PS or PDF) R/W/C PDF PostScript, R Photoshop AIFF, AIF, AIFC R - - - - R AIFF ID3 Audio Interchange File Format [Compressed] - - - - APE R Monkey’s Audio R APE ID3 ARW R/W Sony Alpha RAW (TIFF-based) R/W/C R/W/C R/W/C R/W/C R/W Sony SonyIDC ASF R - - R - R ASF Microsoft Advanced Systems Format AVI R/W R/W R/W R/W R/W R RIFF Audio Video Interleaved (RIFF- based) Tipos de ficheros soportados BMP, DIB R - - - - R BMP Windows BitMaP / Device Independent Bitmap BTF R R R R R - BigTIFF (64-bit Tagged Image File Format) CHM R - - - - R EXE Microsoft Compiled HTML format COS R - - - - R XML Capture One Settings (XML- based) CR2 R/W Canon RAW 2 (TIFF-based) R/W/C R/W/C R/W/C R/W/C R/W/C CanonVRD, R/W Canon CRW, CIFF R/W - - R/W/C - Canon RAW Camera Image File R/W/C CanonVRD, Format (CRW spec.) R/W CanonRaw CS1 R/W Sinar CaptureShop 1-shot RAW R/W/C R/W/C R/W/C R/W/C R Photoshop (PSD-based)