Code-Switching Patterns Can Be an Effective Route to Improve Performance of Downstream NLP Applications: a Case Study of Humour, Sarcasm and Hate Speech Detection

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

LS2208 Product Reference Guide, P/N MN000754A02 Rev A

LS2208 PRODUCT REFERENCE GUIDE LS2208 PRODUCT REFERENCE GUIDE MN000754A02 Revision A March 2015 ii LS2208 Product Reference Guide No part of this publication may be reproduced or used in any form, or by any electrical or mechanical means, without permission in writing. This includes electronic or mechanical means, such as photocopying, recording, or information storage and retrieval systems. The material in this manual is subject to change without notice. The software is provided strictly on an “as is” basis. All software, including firmware, furnished to the user is on a licensed basis. We grant to the user a non-transferable and non-exclusive license to use each software or firmware program delivered hereunder (licensed program). Except as noted below, such license may not be assigned, sublicensed, or otherwise transferred by the user without our prior written consent. No right to copy a licensed program in whole or in part is granted, except as permitted under copyright law. The user shall not modify, merge, or incorporate any form or portion of a licensed program with other program material, create a derivative work from a licensed program, or use a licensed program in a network without our written permission. The user agrees to maintain our copyright notice on the licensed programs delivered hereunder, and to include the same on any authorized copies it makes, in whole or in part. The user agrees not to decompile, disassemble, decode, or reverse engineer any licensed program delivered to the user or any portion thereof. Zebra reserves the right to make changes to any product to improve reliability, function, or design. -

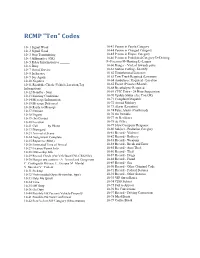

RCMP Ten Codes

RCMP "Ten" Codes 10- 1 Signal Weak 10-43 Person in Parole Category 10- 2 Signal Good 10-44 Person in Charged Category 10- 3 Stop Transmitting 10-45 Person in Elopee Category 10- 4 Affirmative (OK) 10-46 Person in Prohibited Category D=Driving 10- 5 Relay Information to ______ F=Firearms H=Hunting L=Liquor 10- 6 Busy 10-60 Danger - Violent towards police 10- 7 Out of Service 10-61 Station Calling - Identify 10- 8 In Service 10-62 Unauthorized Listeners 10- 9 Say Again 10-63 Tow Truck Required (Location) 10-10 Negative 10-64 Ambulance Required - Location 10-11 Roadside Check (Vehicle,Location,Tag 10-65 Escort (Prisoner/Mental) Information) 10-68 Breathalyzer Required 10-12 Standby - Stop 10-69 CPIC Entry - 24 Hour Suspension 10-13 Existing Conditions 10-70 Update Status (Are You OK) 10-14 Message/Information 10-71 Complaint Dispatch 10-15 Message Delivered 10-72 Armed Robbery 10-16 Reply to Message 10-73 Alarm (Location) 10-17 Enroute 10-74 False Alarm (Confirmed) 10-18 Urgent 10-76 On Portable 10-19 (In) Contact 10-77 At Residence 10-20 Location 10-78 At Office 10-21 Call _____ by Phone 10-79 Slow Computer Response 10-22 Disregard 10-80 Subject - Probation Category 10-23 Arrived at Scene 10-81 Record - Violence 10-24 Assignment Complete 10-82 Record - Robbery 10-25 Report to (Meet) 10-83 Record - Weapons 10-26 Estimated Time of Arrival 10-84 Record - Break and Enter 10-27 Licence/Permit Info 10-85 Record - Auto Theft 10-28 Ownership Info 10-86 Record - Theft 10-29 Record Check (Per/Veh/Boat/CNI-CRS File) 10-87 Record - Drugs 10-30 Danger -

International Character Code Standard for the BE2

°, , CMU-ITC-87-091 International Character Code Standard for the BE2 June 18, 1987 Tomas Centerlind Information Technology Center (ITC) Camegie Mellon University 1. Major problems with foreign languages All European languages have a set of unique characters, even Great Britain with their Pound sign. Most of these characters are static and do not change if they are in the end or in the middle of the word. The Greek sigma sign however is an example of a character that changes look depending on the position. If we move on to the non-Roman alphabets like Arabic, they have a more complex structure. A basic rule is that certain of the characters are written together if they follow each other but not otherwise. This complicates representation and requires look ahead. In addition to this many of the languages have leftwards or downwards writing. All together these properties makes it very difficult to integrate them with Roman languages. Lots of guidelines have to be established to solve these problems, and before any coding can be done a set of standards must be selected or defined. In this paper I intend to gather all that I can think of that must be considered before selecting standards. Only the basic level of the implementation will be designed, so therefore routines that for example display complex languages like Arabic must be written by the user. A basic method that dislpays Roman script correctly will be supported. 1. Standards 1.1 Existing standards Following is a list of currently existing and used standards. 1.1.1 ASCII, ISO646 The ASCII standard, that is in use ahnost anywhere, will probably have to rcmain as a basic part of the system, and without any doubt it must be possible to read and write ASCII coded documents in the foreseeable future. -

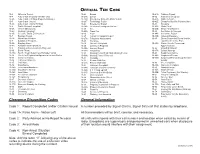

Official Ten Codes.Pdf

OFFICIAL TEN CODE 10-3 Officer in Trouble 10-23 Errand 10-41A Robbery Report 10-4 Auto Crash (Property Damage only) 10-23A Escort 10-42 Robbery in Progress 10-4A Auto Crash (Hit Skip, Property Damage) 10-23B Emergency Entry into Motor Vehicle 10-42A Robbery Alarm 10-5 Auto Crash (Injury) 10-23T Technology Repair 10-42E Electronic Satellite Robbery Alert 10-5A Auto Crash (Injury Hit Skip) 10-24 Emergency Squad 10-43 Shooting 10-6 Traffic Violator/Complaint 10-24A Infectious/Contagious Disease 10-43A Shots Fired 10-6A Vehicle Obstructing 10-25 Fire 10-43B Shots Fired/Hunters 10-6B Parking Complaint 10-25A Trash Fire 10-44 Sex Crime in Progress 10-6S Selective Traffic Enforcement 10-26 Fight 10-44A Sex Crime Report 10-7 Burglary Report 10-27 Assault or Hospital Report 10-44B Indecent Exposure 10-7A Open Door/Window 10-27A Telephone Harassment 10-45 Stolen/Suspected Stolen Vehicle, 10-8 Burglary in Progress 10-28 Homicide Lost/Stolen License Plate 10-8A Burglary Alarm 10-29 Juvenile Complaint 10-45A Stolen Vehicle Recovered/ 10-8B Burglary-Vacant Structure 10-30 Larceny in Progress Apprehensions 10-9 Fraudulent Documents (In Progress) 10-30A Larceny Report 10-46 Stranded Motorist 10-10 Bomb Threat 10-30B Shoplifting 10-47A Suicide Attempt 10-10A Bomb Threat (Suspicious Package Found) 10-31 Missing Person/High-Risk Missing Person 10-48 Suspicious Vehicle 10-12 Check for Registration/Operator’s License/Stolen 10-31A Missing Person Returned 10-48A Suspicious Person 10-13 Check for Tickets 10-32 Message 10-48G Suspected Threat Group Member/ 10-14 -

KOI-126: a Triply-Eclipsing Hierarchical Triple with Two Low

KOI-126: A Triply-Eclipsing Hierarchical Triple with Two Low-Mass Stars 1,2 2,3 1 Joshua A. Carter, ∗ Daniel C. Fabrycky, Darin Ragozzine, Matthew J. Holman,1 Samuel N. Quinn,1 David W. Latham,1 Lars A. Buchhave,1,4 Jeffrey Van Cleve,5,8 William D. Cochran,6 Miles T. Cote,5 Michael Endl,6 Eric B. Ford,7 Michael R. Haas,5 Jon M. Jenkins,5,8 David G. Koch,5 Jie Li,5,8 Jack J. Lissauer,5,9 Phillip J. MacQueen,6 Christopher K. Middour,5,13 Jerome A. Orosz,10 Jason F. Rowe,5,8,11 Jason H. Steffen,12 William F. Welsh10 1Harvard-Smithsonian Center for Astrophyics, 60 Garden Street, Cambridge, MA 02138, USA. 2Hubble Fellow. 3UCO/Lick, University of California, Santa Cruz, CA 95064, USA. 4Niels Bohr Institute, Copenhagen University, DK-2100 Copenhagen, Denmark. 5NASA Ames Research Center, Moffett Field, CA 94035, USA. 6University of Texas, Austin, TX 78712, USA. 7University of Florida, Gainesville, FL 32611, USA. 8 SETI Institute, Mountain View, CA 94043, USA. 9Stanford University, Stanford, CA 94305, USA. 10San Diego State University, San Diego, CA 92182, USA. 11NASA Postdoctoral Program Fellow. arXiv:1102.0562v1 [astro-ph.SR] 2 Feb 2011 12Fermilab Center for Particle Astrophysics, Batavia, IL 60510, USA. 13Orbital Sciences Corp. ∗To whom correspondence should be addressed; E-mail: [email protected]. The Kepler spacecraft has been monitoring the light from 150,000 stars in its primary quest to detect transiting exoplanets. Here we report on the detection 1 of an eclipsing stellar hierarchical triple, identified in the Kepler photometry. -

ARIOOOO ! Site Name: Hunterstown Road Site '•• TDD No.: F3-8404-07 " ^ TABLE of CONTENTS •

51S15 SITE INSPECTION OF HUNTERSTOWN ROAD SITE PREPARED UNDER TDD NO. F3-8*0*-07 EPA NO. PA-1018 CONTRACT NO. 68-01-6699 FOR THE HAZARDOUS SITE CONTROL DIVISION U.S. ENVIRONMENTAL PROTECTION AGENCY MARCH 26, 1985 NUS CORPORATION SUPERFUND DIVISION SUBMITTED BY REVIEWED BY APPROVED BY RICHAR'DGORRELL/ WILLIAM WENTWORTH GARTH GLENN ENVIRON. ENGINEER ASSISTANT MANAGER MANAGER, FIT III ARIOOOO ! Site Name: Hunterstown Road Site '•• TDD No.: F3-8404-07 " ^ TABLE OF CONTENTS • SECTION PAGE 1.0 INTRODUCTION 1-1 1.1 AUTHORISATION ' 1-1 1.2 SCOPE OF WORK 1-1 1.3 SUMMARY 1-1 2.0 THE SITE 2-1 2.1 LOCATION 2-1 2.2 SITE LAYOUT ' 2-1 2.3 OWNERSHIP HISTORY 2-1 2.4 SITE USE 'HISTORY ' 2-1 2.5 PERMIT AND REGULATORY ACTION HISTORY 2-2 2.6 REMEDIAL ACTION TO DATE \ 2-2 3.0 ENVIRONMENTAL SETTING ' 3-1 3.1 SURFACE WATERS 3-1 3.2 GEOLOGY AND SOILS 3-1 3.3 GROUNDWATERS , 3-2 3.4 CLIMATE AND METEOROLOGY 3-2 3.5 LAND USE " 3-3 3.6 POPULATION DISTRIBUTION 3-3 3.7 WATER SUPPLY 3-3 3.8 CRITICAL ENVIRONMENTS 3-3 j 4.0 WASTE TYPES AND QUANTITIES. 4-1 5.0 FIELD TRIP REPORT 5-1 5.1 SUMMARY ' 5-1 5.2 PERSONS CONTACTED ' 5-1 5.2.1 PRIOR TO FIELD TRIP 5-1 5.2.2 AT THE SITE ! 5-1 5.3 SAMPLE j-OG 5-2 5.4 • SITE OBSERVATIONS 5-3 5.5 PHOTOGRAPH LOG 5.6 EPA SITE INSPECTION FORM [ 6.0 LABORATORY DATA ' 6-1 6.1 SAMPLE DATA SUMMARY ' 6-1 6.2 QUALITY ASSURANCE REVIEW .6-2 6.2.1 ORGANIC ' 6-2 6.2.2 INORGANIC ; 6-5 ; I ; 7.0 TOXICOLOGICAL EVALUATION 7-1 7.1 SUMMARY 7-1 7.2 SCOPE OF CONTAMINATION 7-2 7.3 TOXICOLOGICAL CONSIDERATIONS 7-4 7.3.1 ACUTE HAZARDS 7-4 7.3.2 LONG-TERM HAZARDS ' 7-7 U flRI00002 Site Name: Hunterstown Road Site TDD No.; F3-84Q4-07 APPENDICES A 1.0 COPY OF TDD A-l B 1.0 MAPS AND SKETCHES B-l 1.1 SITE LOCATION MAP 1.2 CONTAMINATED WELLS IN THE VICINITY OF THE HUNTERSTOWN ROAD SITE 1.3 SAMPLE LOCATION MAP 1.4 PHOTOGRAPH LOCATION MAP C 1.0 QUALITY ASSURANCE C-l SUPPORT DOCUMENTATION D 1.0 LABORATORY DATA SHEETS D-l ni fiRiOQQQS 1i i -1 SECTION 1 •-i-.^S--:'•':'•••-..-.k V• Lv^r'^.s ' • - ',. -

Learning Postscript by Doing

Learning PostScript by Doing Andr¶eHeck °c 2005, AMSTEL Institute, UvA Contents 1 Introduction 3 2 Getting Started 3 2.1 A Simple Example Using Ghostscript ..................... 4 2.2 A Simple Example Using GSview ........................ 5 2.3 Using PostScript Code in a Microsoft Word Document . 6 2.4 Using PostScript Code in a LATEX Document . 7 2.5 Numeric Quantities . 8 2.6 The Operand Stack . 9 2.7 Mathematical Operations and Functions . 11 2.8 Operand Stack Manipulation Operators . 12 2.9 If PostScript Interpreting Goes Wrong . 13 3 Basic Graphical Primitives 14 3.1 Point . 15 3.2 Curve . 18 3.2.1 Open and Closed Curves . 18 3.2.2 Filled Shapes . 18 3.2.3 Straight and Circular Line Segments . 21 3.2.4 Cubic B¶ezierLine Segment . 23 3.3 Angle and Direction Vector . 25 3.4 Arrow . 27 3.5 Circle, Ellipse, Square, and Rectangle . 29 3.6 Commonly Used Path Construction Operators and Painting Operators . 31 3.7 Text . 33 3.7.1 Simple PostScript Text . 33 3.7.2 Importing LATEX text . 38 3.8 Symbol Encoding . 40 4 Style Directives 42 4.1 Dashing . 42 4.2 Coloring . 43 4.3 Joining Lines . 45 1 5 Control Structures 48 5.1 Conditional Operations . 48 5.2 Repetition . 50 6 Coordinate Transformations 66 7 Procedures 75 7.1 De¯ning Procedure . 75 7.2 Parameter Passing . 75 7.3 Local variables . 76 7.4 Recursion . 76 8 More Examples 82 8.1 Planar curves . 83 8.2 The Lorenz Butterfly . 88 8.3 A Surface Plot . -

DS2208 Digital Scanner Product Reference Guide (En)

DS2208 Digital Scanner Product Reference Guide MN-002874-06 DS2208 DIGITAL SCANNER PRODUCT REFERENCE GUIDE MN-002874-06 Revision A October 2018 ii DS2208 Digital Scanner Product Reference Guide No part of this publication may be reproduced or used in any form, or by any electrical or mechanical means, without permission in writing from Zebra. This includes electronic or mechanical means, such as photocopying, recording, or information storage and retrieval systems. The material in this manual is subject to change without notice. The software is provided strictly on an “as is” basis. All software, including firmware, furnished to the user is on a licensed basis. Zebra grants to the user a non-transferable and non-exclusive license to use each software or firmware program delivered hereunder (licensed program). Except as noted below, such license may not be assigned, sublicensed, or otherwise transferred by the user without prior written consent of Zebra. No right to copy a licensed program in whole or in part is granted, except as permitted under copyright law. The user shall not modify, merge, or incorporate any form or portion of a licensed program with other program material, create a derivative work from a licensed program, or use a licensed program in a network without written permission from Zebra. The user agrees to maintain Zebra’s copyright notice on the licensed programs delivered hereunder, and to include the same on any authorized copies it makes, in whole or in part. The user agrees not to decompile, disassemble, decode, or reverse engineer any licensed program delivered to the user or any portion thereof. -

This Is Our Jerusalem”: Early American Evangelical Localizations of the Hebraic Republic

Article “This Is Our Jerusalem”: Early American Evangelical Localizations of the Hebraic Republic Steele Brand Received: 16 October 2015; Accepted: 20 December 2015; Published: 28 December 2015 Academic Editors: Victor Roudometof and Peter Iver Kaufman Politics, Philosophy, and Economics Program, The King’s College, 56 Broadway, New York, NY 10004, USA; [email protected]; Tel.: +1-212-659-7200 Abstract: This paper examines how evangelical pastors applied Protestant notions of a Hebraic Republic for their parishioners as America transitioned from a colonial frontier to a new republic. As the American constitutions took shape during and after the Revolution, many evangelical pastors argued that America emulated or was inspired by the Israelite polity as described by the Old Testament. America and its institutions thus became a reincarnated Hebraic Republic, a new “city on a hill”, and a new Jerusalem. Originally these pastors drew on a broader, global movement that was shaping republican attempts at reform in Europe, but as they localized the biblical model to their own particular experiences, they brought new meaning to it and exported the transformed model back out to the world. Keywords: hebraic republic; evangelical; preaching; sermon; constitutionalism On 5 October 2015, contractors used the cover of darkness and an increased presence of state police to separate a granite monument of the 10 Commandments from its base and remove it from the premises of the Oklahoma capitol. So ended the long drama stirred by objections that the monument violated the constitutional separation of church and state, objections upheld by the Oklahoma Supreme Court earlier that year. The situation in Oklahoma was another episode in an ongoing American saga. -

Services Withdrawal: Declaration of Plan to Discontinue Lease, Rental, and Maintenance Services for Selected Machines

IBM Europe Withdrawal Announcement ZS21-0013, dated July 13, 2021 Services withdrawal: Declaration of plan to discontinue lease, rental, and maintenance services for selected machines Table of contents 1 Overview 136Country availability 1 Description Overview End of Service Periodically, IBM(R) provides notice to customers of plans to terminate services for IBM and original equipment manufacturer (OEM) machines under an IBM Agreement. At this time, we are providing notice that selected machine types, models, and features will be discontinued from maintenance services. IBM will send no further correspondence of this nature for these machine types, models, features, or RPQs. After the effective date, IBM will no longer offer full hardware maintenance service for the listed machine types. If available, clients will continue to receive limited hardware maintenance service as described in the "Withdrawal" section of your terms with IBM, without lapse in coverage, for select machine types. For a list of affected products and effective dates, see the Description section. End of Development Periodically, IBM provides notice to customers of plans to terminate new defect support for IBM and original equipment manufacturer (OEM) machines under an IBM Agreement. IBM will continue to provide hardware maintenance support services under the terms of existing agreements. Although IBM, in its discretion, may have periodically provided updates, patches, or fixes to the hardware's machine code (for example, microcode or firmware), IBM will no longer provide -

DS2278 Digital Scanner Product Reference Guide (En)

DS2278 Digital Scanner Product Reference Guide MN-002915-02 DS2278 DIGITAL SCANNER PRODUCT REFERENCE GUIDE MN-002915-02 Revision A August 2017 ii DS2278 Digital Scanner Product Reference Guide No part of this publication may be reproduced or used in any form, or by any electrical or mechanical means, without permission in writing from Zebra. This includes electronic or mechanical means, such as photocopying, recording, or information storage and retrieval systems. The material in this manual is subject to change without notice. The software is provided strictly on an “as is” basis. All software, including firmware, furnished to the user is on a licensed basis. Zebra grants to the user a non-transferable and non-exclusive license to use each software or firmware program delivered hereunder (licensed program). Except as noted below, such license may not be assigned, sub-licensed, or otherwise transferred by the user without prior written consent of Zebra. No right to copy a licensed program in whole or in part is granted, except as permitted under copyright law. The user shall not modify, merge, or incorporate any form or portion of a licensed program with other program material, create a derivative work from a licensed program, or use a licensed program in a network without written permission from Zebra. The user agrees to maintain Zebra’s copyright notice on the licensed programs delivered hereunder, and to include the same on any authorized copies it makes, in whole or in part. The user agrees not to decompile, disassemble, decode, or reverse engineer any licensed program delivered to the user or any portion thereof. -

Audited Financial

JEWISH FAMILY SERVICE OF SAN DIEGO CONSOLIDATED FINANCIAL STATEMENTS JUNE 30, 2013 AND 2012 & L& C Leaf Cole, LLP Certified Public Accountants JEWISH FAMILY SERVICE OF SAN DIEGO CONSOLIDATED FINANCIAL STATEMENTS JUNE 30, 2013 AND 2012 TABLE OF CONTENTS Page Independent Auditor’s Report 1 - 2 Consolidated Statements of Financial Position 3 - 4 Consolidated Statements of Activities 5 - 6 Consolidated Statement of Functional Expenses - 2013 7 - 8 Consolidated Statement of Functional Expenses - 2012 9 - 10 Consolidated Statements of Cash Flows 11 - 12 Notes to Consolidated Financial Statements 13 - 32 Supplementary Information: 2013: Supplementary Consolidating Schedule of Financial Position 33 - 34 Supplementary Consolidating Schedule of Activities 35 - 36 Supplementary Schedule of Functional Expenses - Jewish Family Service 37 Supplementary Schedule of Function Expenses - Charitable Adult Rides & Services, Inc. 38 2012: Supplementary Consolidating Schedule of Financial Position 39 - 40 Supplementary Consolidating Schedule of Activities 41 - 42 Supplementary Schedule of Functional Expenses - Jewish Family Service 43 Supplementary Schedule of Function Expenses - Charitable Adult Rides & Services, Inc. 44 i Steven W. Northcote, C.P.A. Michael S. Schreibman, C.P.A. Michael J. Zizzi, C.P.A. Julie A. Firl, C.P.A. & Nicholas M. Gines, C.P.A. Members L&C Leaf Cole, LLP American Institute of Certified Public Accountants Certified Public Accountants California Society of Certified Public Accountants A Partnership of Professional Corporations Independent Auditor’s Report To the Board of Directors Jewish Family Service of San Diego San Diego, California Report on the Consolidated Financial Statements We have audited the accompanying consolidated financial statements of Jewish Family Service of San Diego, which comprise the consolidated statements of financial position as of June 30, 2013 and 2012, and the related consolidated statements of activities, functional expenses and cash flows for the years then ended, and the related notes to the consolidated financial statements.