Computational Methods for Linear Matrix Equations∗

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

How and Why to Solve the Operator Equation AX − XB = Y

HOW AND WHY TO SOLVE THE OPERATOR EQUATION AX − XB = Y. RAJENDRA BHATIA, PETER ROSENTHAL. 1. Introduction. The entities A, B, X, Y in the title are operators, by which we mean either linear transformations on a finite-dimensional vector space (matrices) or bounded (= continuous) linear transformations on a Banach space. (All scalars will be complex numbers.) The definitions and statements below are valid in both the finite-dimensional and the infinite-dimensional cases, unless the contrary is stated. The simplest kind of equation involving operators is a linear equation of the form AX = Y or of the form XB = Y. The condition that is both necessary and sufficient that such an equation have a unique solution is that A (or B) should be invertible (bijective). Let σ(A) denote the spectrum of A; in the finite-dimensional case this is just the set of eigenvalues of A. In any case, invertibility of A is the statement 0 ∉ σ(A). The equation ax = y in numbers can always be solved when a ≠ 0. The analogous condition for the operator equation AX = Y is 0 ∉ σ(A). This analogy provides guidance in formulating potential theorems and guessing solutions of AX − XB = Y. When does AX − XB = Y have a unique solution X for each given Y? In the scalar case of ax − xb = y, the answer is obvious: we must have a − b ≠ 0. The answer is almost as obvious in another case: if the matrices A and B are both diagonal, with diagonal entries {λ_1, ..., λ_n} and {µ_1, ..., µ_n}, respectively, then the above equation has the solution x_ij = y_ij / (λ_i − µ_j) provided λ_i − µ_j ≠ 0 for all i, j.
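The diagonal case described in this excerpt can be sketched in a few lines. The function name `solve_diagonal_sylvester` and the sample data are illustrative assumptions, not taken from the paper; exact rational arithmetic is used so the check is free of rounding.

```python
from fractions import Fraction


def solve_diagonal_sylvester(lam, mu, Y):
    """Solve AX - XB = Y for A = diag(lam), B = diag(mu) (hypothetical helper).

    Entrywise, (AX - XB)_ij = (lam_i - mu_j) * x_ij, so x_ij = y_ij / (lam_i - mu_j).
    """
    X = []
    for i, l in enumerate(lam):
        row = []
        for j, m in enumerate(mu):
            diff = Fraction(l) - Fraction(m)
            if diff == 0:
                # A shared eigenvalue: the solution is not unique (or does not exist).
                raise ValueError(f"lam[{i}] equals mu[{j}]; no unique solution")
            row.append(Fraction(Y[i][j]) / diff)
        X.append(row)
    return X


# Illustrative data (assumed): the spectra {1, 2} and {3, 5} are disjoint.
lam = [1, 2]
mu = [3, 5]
Y = [[2, 4], [1, 6]]
X = solve_diagonal_sylvester(lam, mu, Y)

# Verify the equation entry by entry.
residual_ok = all(
    lam[i] * X[i][j] - X[i][j] * mu[j] == Y[i][j]
    for i in range(len(lam))
    for j in range(len(mu))
)
```

The explicit check for a zero difference mirrors the proviso in the text: the formula only makes sense when no diagonal entry of A coincides with a diagonal entry of B.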

arXiv:1903.05360v1 [math.NA]

Relation between the T-congruence Sylvester equation and the generalized Sylvester equation. Yuki Satake∗, Masaya Oozawa∗, Tomohiro Sogabe∗, Yuto Miyatake†, Tomoya Kemmochi∗, Shao-Liang Zhang∗. Abstract. The T-congruence Sylvester equation is the matrix equation AX + X^T B = C, where A ∈ R^{m×n}, B ∈ R^{n×m}, and C ∈ R^{m×m} are given, and X ∈ R^{n×m} is to be determined. Recently, Oozawa et al. discovered a transformation that the matrix equation is equivalent to one of the well-studied matrix equations (the Lyapunov equation); however, the condition of the transformation seems to be too limited because matrices A and B are assumed to be square matrices (m = n). In this paper, two transformations are provided for rectangular matrices A and B. One of them is an extension of the result of Oozawa et al. for the case m ≥ n, and the other is a novel transformation for the case m ≤ n. 1 Introduction. We consider the T-congruence Sylvester equation of the form AX + X^T B = C, (1) where A ∈ R^{m×n}, B ∈ R^{n×m}, and C ∈ R^{m×m} are given, and X ∈ R^{n×m} is to be determined. The matrix equation (1) appears in the industrial and applied mathematical field: palindromic eigenvalue problems for studying the vibration of rail tracks under the excitation arising from high-speed trains [1, 2] and also in an abstract mathematical field: the tangent spaces of the Stiefel manifold [3]. In the past decade, the numerical solvers for solving equation (1) in view of direct methods and iterative methods have been well developed (see e.g., [4, 5, 6]); however, the theoretical features of the matrix equation itself, such as the uniqueness of the solution, have not been explored extensively.

Singular Sylvester Equation and Its Applications

University of Niš, Faculty of Sciences and Mathematics. Bogdan D. Đorđević: Singular Sylvester equation and its applications. DOCTORAL DISSERTATION. Niš, 2020. Univerzitet u Nišu, Prirodno-matematički fakultet. Bogdan D. Đorđević: Singularna Silvesterova jednačina i njene primene. DOKTORSKA DISERTACIJA. Niš, 2020. Data on Doctoral Dissertation. Doctoral supervisor: Nebojša Dinčić, full professor at Faculty of Sciences and Mathematics, University of Niš. Title: Singular Sylvester equation and its applications. Abstract: This thesis concerns singular Sylvester operator equations, that is, equations of the form AX − XB = C, under the premise that they are either unsolvable or have infinitely many solutions. The equation is studied in different cases, first in the matrix case, then in the case when A, B and C are bounded linear operators on Banach spaces, and finally in the case when A and B are closed linear operators defined on Banach or Hilbert spaces. In each of these cases, solvability conditions are derived and then, under those conditions, the initial equation is solved. Exact solutions are obtained in their closed forms, and their classification is conducted. It is shown that all solutions are obtained in the manner illustrated in this thesis. Special attention is dedicated to approximation schemes of the solutions. Obtained results are illustrated on some contemporary problems from operator theory, among which are spectral problems of bounded and unbounded linear operators, Sturm-Liouville inverse problems and some operator equations from quantum mechanics. Scientific field: Mathematics. Scientific discipline: Functional analysis. Key words: Sylvester equation; operator equations; operator algebras; spectral theory of operators; closed operators; Fredholm theory; Banach algebras.

SHIFTED KRONECKER PRODUCT SYSTEMS ∗ 1. Introduction. Matrix Problems with Replicated Block Structure Abound in Signal and Imag

SHIFTED KRONECKER PRODUCT SYSTEMS∗. CARLA D. MORAVITZ MARTIN† AND CHARLES F. VAN LOAN‡. Abstract. A fast method for solving a linear system of the form (A^(p) ⊗ ··· ⊗ A^(1) − λI)x = b is given, where each A^(i) is an n_i-by-n_i matrix. The first step is to convert the problem to triangular form (T^(p) ⊗ ··· ⊗ T^(1) − λI)y = c by computing the (complex) Schur decompositions of the A^(i). This is followed by a recursive back-substitution process that fully exploits the Kronecker structure and requires just O(N(n_1 + ··· + n_p)) flops where N = n_1 ··· n_p. A similar method is employed when the real Schur decomposition is used to convert each A^(i) to quasi-triangular form. The numerical properties of these new methods are the same as if we explicitly formed (T^(p) ⊗ ··· ⊗ T^(1) − λI) and used conventional back-substitution to solve for y. Key words. Linear systems, Schur decomposition, back-substitution, Kronecker products. AMS subject classifications. 15A06, 65F05, 65G50. 1. Introduction. Matrix problems with replicated block structure abound in signal and image processing, semidefinite programming, control theory, and many other application areas. In these venues fast algorithms have emerged that exploit the rich algebra of the Kronecker product. Perhaps the best example of this is the fast Fourier transform, which can be described using the “language” of sparse matrix factorizations and the Kronecker product. This operation is surfacing more and more as cheap memory prompts the assembly of huge, multidimensional datasets. When techniques for problems of low dimension are generalized or “tensored” together to address a high-dimensional, multilinear problem, then one typically finds a computational challenge that involves the Kronecker product.
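As a small worked illustration of why such systems are matrix equations in disguise, consider the case p = 2. The reshaped unknown X and right-hand side F are notation assumed here for illustration (they do not appear in the abstract); the step relies only on the standard identity vec(AXB) = (Bᵀ ⊗ A) vec X.

```latex
% Assumed notation: x = vec(X), b = vec(F), with X, F of size n_1-by-n_2.
\begin{aligned}
\bigl(A^{(2)} \otimes A^{(1)} - \lambda I\bigr)\,\operatorname{vec}(X)
  &= \operatorname{vec}\bigl(A^{(1)} X \,(A^{(2)})^{\top}\bigr) - \lambda\,\operatorname{vec}(X), \\
\text{so}\qquad
\bigl(A^{(2)} \otimes A^{(1)} - \lambda I\bigr)\,x = b
  \quad&\Longleftrightarrow\quad
  A^{(1)} X \,(A^{(2)})^{\top} - \lambda X = F .
\end{aligned}
```

Read this way, the n_1 n_2-by-n_1 n_2 system never has to be formed explicitly, which is consistent with the flop count quoted in the abstract.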

Graph Minor from Wikipedia, the Free Encyclopedia Contents

Graph minor. From Wikipedia, the free encyclopedia. Contents: 1 2 × 2 real matrices (1.1 Profile; 1.2 Equi-areal mapping; 1.3 Functions of 2 × 2 real matrices; 1.4 2 × 2 real matrices as complex numbers; 1.5 References). 2 Abelian group (2.1 Definition; 2.2 Facts; 2.2.1 Notation; 2.2.2 Multiplication table; 2.3 Examples; 2.4 Historical remarks; 2.5 Properties; 2.6 Finite abelian groups; 2.6.1 Classification; 2.6.2 Automorphisms; 2.7 Infinite abelian groups; 2.7.1 Torsion groups; 2.7.2 Torsion-free and mixed groups; 2.7.3 Invariants and classification; 2.7.4 Additive groups of rings; 2.8 Relation to other mathematical topics; 2.9 A note on the typography).

Al-Kashi Background Statistics

Al-Kashi Background Statistics. http://www.ar-php.org/stats/al-kashi/ PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Sat, 01 Feb 2014 07:11:01 UTC. Contents. Summary Statistics: Mean; Median; Mode (statistics); Variance; Standard deviation; Coefficient of variation; Skewness; Kurtosis; Ranking. Graphics: Box plot; Histogram; Q–Q plot; Ternary plot. Distributions: Normal distribution; Student's t-distribution; F-distribution; Feature scaling. Correlation and Regression: Covariance; Correlation and dependence; Regression analysis; Path analysis (statistics). Analysis: Moving average; Student's t-test; Contingency table; Analysis of variance; Principal component analysis. Diversity index: Diversity index. Clustering: Hierarchical clustering; K-means clustering. Matrix: Matrix (mathematics); Matrix addition; Matrix multiplication; Transpose; Determinant; Minor (linear algebra); Adjugate matrix; Invertible matrix; Eigenvalues and eigenvectors; System of linear equations. References: Article Sources and Contributors; Image Sources, Licenses and Contributors. Article Licenses: License. Summary Statistics. Mean. In mathematics, mean has several different definitions depending on the context. In probability and statistics, mean and expected value are used synonymously to refer to one measure of the central tendency either of a probability distribution or of the random variable characterized by that distribution.
In the case of a discrete probability distribution of a random variable X, the mean is equal to the sum over every possible value weighted by the probability of that value; that is, it is computed by taking the product of each possible value x of X and its probability P(x), and then adding all these products together, giving μ = Σ x P(x).[1] An analogous formula applies to the case of a continuous probability distribution.

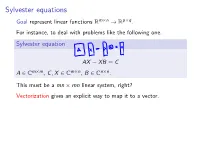

Sylvester Equations. Goal: Represent Linear Functions R^{m×n} → R^{p×q}

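Sylvester equations

Goal: represent linear functions $\mathbb{R}^{m\times n} \to \mathbb{R}^{p\times q}$, for instance to deal with problems like the following one.

Sylvester equation: $AX - XB = C$, with $A \in \mathbb{C}^{m\times m}$, $C, X \in \mathbb{C}^{m\times n}$, $B \in \mathbb{C}^{n\times n}$.

This must be an $mn \times mn$ linear system, right? Vectorization gives an explicit way to map it to a vector.

Vectorization: definition

$$
\operatorname{vec} X = \operatorname{vec}
\begin{bmatrix}
x_{11} & x_{12} & \dots & x_{1n}\\
x_{21} & x_{22} & \dots & x_{2n}\\
\vdots & \vdots & \ddots & \vdots\\
x_{m1} & x_{m2} & \dots & x_{mn}
\end{bmatrix}
:=
\begin{bmatrix}
x_{11}\\ x_{21}\\ \vdots\\ x_{m1}\\ x_{12}\\ x_{22}\\ \vdots\\ x_{m2}\\ \vdots\\ x_{1n}\\ x_{2n}\\ \vdots\\ x_{mn}
\end{bmatrix}
$$

Vectorization: comments. Column-major order: leftmost index ‘changes more often’. Matches Fortran, Matlab standard (C/C++ prefer row-major instead). Converting indices in the matrix into indices in the vector: $(X)_{ij} = (\operatorname{vec} X)_{i+mj}$ 0-based, $(X)_{ij} = (\operatorname{vec} X)_{i+m(j-1)}$ 1-based.

vec(AXB). First, we will work out the representation of a simple linear map, $X \mapsto AXB$ (for fixed matrices $A$, $B$ of compatible dimensions). If $X \in \mathbb{R}^{m\times n}$ and $AXB \in \mathbb{R}^{p\times q}$, we need the $pq \times mn$ matrix that maps $\operatorname{vec} X$ to $\operatorname{vec}(AXB)$.

$$
(AXB)_{hl} = \sum_j (AX)_{hj} (B)_{jl} = \sum_j \sum_i A_{hi} X_{ij} B_{jl}
= \begin{bmatrix}
A_{h1}B_{1l} & A_{h2}B_{1l} & \dots & A_{hm}B_{1l} & A_{h1}B_{2l} & A_{h2}B_{2l} & \dots & A_{hm}B_{2l} & \dots & A_{h1}B_{nl} & A_{h2}B_{nl} & \dots & A_{hm}B_{nl}
\end{bmatrix}
\operatorname{vec} X
$$

Kronecker product: definition

$$
\operatorname{vec}(AXB) =
\begin{bmatrix}
b_{11}A & b_{21}A & \dots & b_{n1}A\\
b_{12}A & b_{22}A & \dots & b_{n2}A\\
\vdots & \vdots & \ddots & \vdots\\
b_{1q}A & b_{2q}A & \dots & b_{nq}A
\end{bmatrix}
\operatorname{vec} X
$$

Each block is a multiple of $A$, with coefficient given by the corresponding entry of $B^\top$.

Definition

$$
X \otimes Y :=
\begin{bmatrix}
x_{11}Y & x_{12}Y & \dots & x_{1n}Y\\
x_{21}Y & x_{22}Y & \dots & x_{2n}Y\\
\vdots & \vdots & \ddots & \vdots\\
x_{m1}Y & x_{m2}Y & \dots & x_{mn}Y
\end{bmatrix}
$$

so the matrix above is $B^\top \otimes A$.

Properties of Kronecker products: $x_{11}Y \;\; x_{12}Y \;\; \dots$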
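The identity vec(AXB) = (Bᵀ ⊗ A) vec X from these slides can be checked numerically on a small example. The sketch below uses plain Python lists and integer entries so the comparison is exact; the helper names (`matmul`, `transpose`, `vec`, `kron`) and the sample matrices are assumptions made for illustration.

```python
def matmul(P, Q):
    """Plain matrix product of two list-of-lists matrices."""
    return [[sum(P[i][k] * Q[k][j] for k in range(len(Q)))
             for j in range(len(Q[0]))] for i in range(len(P))]


def transpose(M):
    return [list(col) for col in zip(*M)]


def vec(M):
    """Stack the columns of M (column-major order, 0-based index i + m*j)."""
    return [M[i][j] for j in range(len(M[0])) for i in range(len(M))]


def kron(X, Y):
    """Kronecker product: block (i, j) of the result is X[i][j] * Y."""
    return [[x * y for x in xrow for y in yrow] for xrow in X for yrow in Y]


# Illustrative sizes (assumed): A is 3x2, X is 2x3, B is 3x2, so AXB is 3x2.
A = [[1, 2], [3, 4], [5, 6]]
X = [[1, 0, 2], [-1, 3, 1]]
B = [[1, 2], [0, 1], [4, -1]]

lhs = vec(matmul(matmul(A, X), B))      # vec(AXB), computed directly
K = kron(transpose(B), A)               # the pq-by-mn matrix B^T (x) A
v = vec(X)
rhs = [sum(K[r][c] * v[c] for c in range(len(v))) for r in range(len(K))]

identity_holds = lhs == rhs
```

Here `K` has pq = 6 rows and mn = 6 columns, matching the dimension count given in the slides.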

On the Solution of the Nonsymmetric T-Riccati Equation∗

Electronic Transactions on Numerical Analysis. Volume 54, pp. 68–88, 2021. ETNA, Kent State University and Johann Radon Institute (RICAM). Copyright © 2021, Kent State University. ISSN 1068–9613. DOI: 10.1553/etna_vol54s68. ON THE SOLUTION OF THE NONSYMMETRIC T-RICCATI EQUATION∗. PETER BENNER† AND DAVIDE PALITTA†. Abstract. The nonsymmetric T-Riccati equation is a quadratic matrix equation where the linear part corresponds to the so-called T-Sylvester or T-Lyapunov operator that has previously been studied in the literature. It has applications in macroeconomics and policy dynamics. So far, it presents an unexplored problem in numerical analysis, and both theoretical results and computational methods are lacking in the literature. In this paper we provide some sufficient conditions for the existence and uniqueness of a nonnegative minimal solution, namely the solution with componentwise minimal entries. Moreover, the efficient computation of such a solution is analyzed. Both the small-scale and large-scale settings are addressed, and Newton-Kleinman-like methods are derived. The convergence of these procedures to the minimal solution is proven, and several numerical results illustrate the computational efficiency of the proposed methods. Key words. T-Riccati equation, M-matrices, minimal nonnegative solution, Newton-Kleinman method. AMS subject classifications. 65F30, 15A24, 49M15, 39B42, 40C05. 1. Introduction. In this paper, we consider the nonsymmetric T-Riccati operator R_T : R^{n×n} → R^{n×n}, R_T(X) := DX + X^T A − X^T B X + C, where A, B, C, D ∈ R^{n×n}, and we provide sufficient conditions for the existence and uniqueness of a minimal solution X_min ∈ R^{n×n} to (1.1) R_T(X) = 0. The solution of the nonsymmetric T-Riccati equation (1.1) plays a role in solving dynamics generalized equilibrium (DSGE) problems [9, 22, 25].

Solving the Sylvester Equation AX − XB = C When σ(A) ∩ σ(B) ≠ ∅

Electronic Journal of Linear Algebra, ISSN 1081-3810. A publication of the International Linear Algebra Society. Volume 35, pp. 1-23, January 2019. SOLVING THE SYLVESTER EQUATION $AX - XB = C$ WHEN $\sigma(A) \cap \sigma(B) \neq \emptyset$∗. NEBOJŠA Č. DINČIĆ†. Abstract. The method for solving the Sylvester equation $AX - XB = C$ in the complex matrix case, when $\sigma(A) \cap \sigma(B) \neq \emptyset$, by using Jordan normal form, is given. Also, the approach via the Schur decomposition is presented. Key words. Sylvester equation, Jordan normal form, Schur decomposition. AMS subject classifications. 15A24. 1. An introduction. The Sylvester equation (1.1) $AX - XB = C$, where $A \in \mathbb{C}^{m\times m}$, $B \in \mathbb{C}^{n\times n}$ and $C \in \mathbb{C}^{m\times n}$ are given, and $X \in \mathbb{C}^{m\times n}$ is an unknown matrix, is a very popular topic in linear algebra, with numerous applications in control theory, signal processing, image restoration, engineering, solving ordinary and partial differential equations, etc. It is named for famous mathematician J. J. Sylvester, who was the first to prove that this equation in matrix case has unique solution if and only if $\sigma(A) \cap \sigma(B) = \emptyset$ [17]. One important special case of the Sylvester equation is the continuous-time Lyapunov matrix equation ($AX + XA^* = C$). In 1952, Roth [15] proved that for operators $A$, $B$ on finite-dimensional spaces, $\begin{bmatrix} A & C \\ 0 & B \end{bmatrix}$ is similar to $\begin{bmatrix} A & 0 \\ 0 & B \end{bmatrix}$ if and only if the equation $AX - XB = C$ has a solution $X$. Rosenblum [14] proved that if $A$ and $B$ are operators such that $\sigma(A) \cap \sigma(B) = \emptyset$, then the equation $AX - XB = Y$ has a unique solution $X$ for every operator $Y$.
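For contrast with the singular case this paper studies, the uniquely solvable case (disjoint spectra) can be sketched with the Kronecker formulation (I_n ⊗ A − Bᵀ ⊗ I_m) vec X = vec C. This is a dense, small-scale illustration and not the Jordan or Schur approach of the paper; the function names and the test matrices are assumptions.

```python
from fractions import Fraction


def kron(X, Y):
    """Kronecker product: block (i, j) of the result is X[i][j] * Y."""
    return [[x * y for x in xrow for y in yrow] for xrow in X for yrow in Y]


def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def solve_linear(M, rhs):
    """Gauss-Jordan elimination in exact arithmetic; raises if M is singular."""
    n = len(M)
    aug = [[Fraction(v) for v in row] + [Fraction(b)] for row, b in zip(M, rhs)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if pivot is None:
            raise ValueError("singular system: spectra of A and B overlap")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[-1] for row in aug]


def solve_sylvester_kron(A, B, C):
    """Solve AX - XB = C via (I_n (x) A - B^T (x) I_m) vec X = vec C."""
    m, n = len(A), len(B)
    left = kron(identity(n), A)                              # vec(AX) = (I_n (x) A) vec X
    right = kron([list(c) for c in zip(*B)], identity(m))    # vec(XB) = (B^T (x) I_m) vec X
    M = [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(left, right)]
    vec_c = [C[i][j] for j in range(n) for i in range(m)]    # column-major stacking
    x = solve_linear(M, vec_c)
    return [[x[i + m * j] for j in range(n)] for i in range(m)]


# Illustrative data (assumed): sigma(A) = {1, 2} and sigma(B) = {3, 4} are disjoint.
A = [[1, 1], [0, 2]]
B = [[3, 0], [1, 4]]
C = [[-1, -2], [-7, -8]]   # equals AX - XB for X = [[1, 2], [3, 4]]
X = solve_sylvester_kron(A, B, C)
```

When A and B share an eigenvalue the coefficient matrix is singular and the sketch raises an error, which is exactly the situation the paper addresses with other tools.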

Solution Formulas for Differential Sylvester and Lyapunov Equations

Solution Formulas for Differential Sylvester and Lyapunov Equations. Maximilian Behr, Peter Benner, Jan Heiland. November 21, 2018. arXiv:1811.08327v1 [math.NA] 20 Nov 2018. Abstract. The differential Sylvester equation and its symmetric version, the differential Lyapunov equation, appear in different fields of applied mathematics like control theory, system theory, and model order reduction. The few available straight-forward numerical approaches if applied to large-scale systems come with prohibitively large storage requirements. This shortage motivates us to summarize and explore existing solution formulas for these equations. We develop a unifying approach based on the spectral theorem for normal operators like the Sylvester operator S(X) = AX + XB and derive a formula for its norm using an induced operator norm based on the spectrum of A and B. In view of numerical approximations, we propose an algorithm that identifies a suitable Krylov subspace using Taylor series and use a projection to approximate the solution. Numerical results for large-scale differential Lyapunov equations are presented in the last sections. Contents: 1. Introduction. 2. Preliminaries. 3. Spectral Decomposition of the Sylvester Operator. 4. Variation of Constants. 5. Solution as Taylor Series. 6. Feasible Numerical Solution Approaches. 7. Numerical Results (7.1. Setup; 7.2. Projection Approach; 7.3. Computational Time; 7.4. Comparison with Backward Differentiation Formulas). 8. Conclusions. A. Numerical Results Projection Approach (A.1. Results for the Differential Lyapunov Equation; A.2. Results for the Transposed Differential Lyapunov Equation). B. Backward Differentiation Formulas (B.1. Results for the Differential Lyapunov Equation).

Krylov Methods for Low-Rank Commuting Generalized Sylvester Equations

Krylov methods for low-rank commuting generalized Sylvester equations. Elias Jarlebring∗, Giampaolo Mele∗, Davide Palitta†, Emil Ringh∗. April 14, 2017. Abstract. We consider generalizations of the Sylvester matrix equation, consisting of the sum of a Sylvester operator and a linear operator Π with a particular structure. More precisely, the commutator of the matrix coefficients of the operator Π and the Sylvester operator coefficients are assumed to be matrices with low rank. We show (under certain additional conditions) low-rank approximability of this problem, i.e., the solution to this matrix equation can be approximated with a low-rank matrix. Projection methods have successfully been used to solve other matrix equations with low-rank approximability. We propose a new projection method for this class of matrix equations. The choice of subspace is a crucial ingredient for any projection method for matrix equations. Our method is based on an adaption and extension of the extended Krylov subspace method for Sylvester equations. A constructive choice of the starting vector/block is derived from the low-rank commutators. We illustrate the effectiveness of our method by solving large-scale matrix equations arising from applications in control theory and the discretization of PDEs. The advantages of our approach in comparison to other methods are also illustrated. Keywords: Generalized Sylvester equation · Low-rank commutation · Krylov subspace · projection methods · Iterative solvers · Matrix equation. Mathematics Subject Classification

Affine Transformation

Affine transformation. From Wikipedia, the free encyclopedia. Contents: 1 2 × 2 real matrices (1.1 Profile; 1.2 Equi-areal mapping; 1.3 Functions of 2 × 2 real matrices; 1.4 2 × 2 real matrices as complex numbers; 1.5 References). 2 3D projection (2.1 Orthographic projection; 2.2 Weak perspective projection; 2.3 Perspective projection; 2.4 Diagram; 2.5 See also; 2.6 References; 2.7 External links; 2.8 Further reading). 3 Affine coordinate system (3.1 See also). 4 Affine geometry (4.1 History; 4.2 Systems of axioms; 4.2.1 Pappus’ law; 4.2.2 Ordered structure; 4.2.3 Ternary rings; 4.3 Affine transformations; 4.4 Affine space).