Vector Spaces PCMI Summer 2015 Undergraduate Lectures on Flag Varieties Lecture 2

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Multivector Differentiation and Linear Algebra, 17th Santaló

Multivector Differentiation and Linear Algebra. 17th Santaló Summer School 2016, Santander. Joan Lasenby, Signal Processing Group, Engineering Department, Cambridge, UK and Trinity College Cambridge. [email protected], www-sigproc.eng.cam.ac.uk/ s jl, 23 August 2016. Overview: The Multivector Derivative. Examples of differentiation wrt multivectors. Linear Algebra: matrices and tensors as linear functions mapping between elements of the algebra. Functional Differentiation: very briefly... Summary. The Multivector Derivative. Recall our definition of the directional derivative in the $a$ direction: $a \cdot \nabla F(x) = \lim_{\epsilon \to 0} \frac{F(x + \epsilon a) - F(x)}{\epsilon}$. We now want to generalise this idea to enable us to find the derivative of $F(X)$ in the $A$ 'direction', where $X$ is a general mixed grade multivector (so $F(X)$ is a general multivector valued function of $X$). Let us use $*$ to denote taking the scalar part, i.e. $P * Q \equiv \langle PQ \rangle$. Then, provided $A$ has the same grades as $X$, it makes sense to define: $A * \partial_X F(X) = \lim_{t \to 0} \frac{F(X + tA) - F(X)}{t}$.

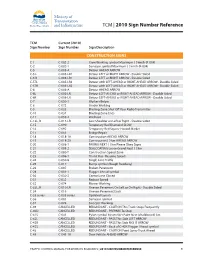

2010 Sign Number Reference for Traffic Control Manual

TCM | 2010 Sign Number Reference

CONSTRUCTION SIGNS

| TCM Sign Number | Current (2010) Sign Number | Sign Description |
|---|---|---|
| C-1 | C-002-2 | Crew Working symbol Maximum ( ) km/h (R-004) |
| C-2 | C-002-1 | Surveyor symbol Maximum ( ) km/h (R-004) |
| C-5 | C-005-A | Detour AHEAD ARROW |
| C-5 L | C-005-LR1 | Detour LEFT or RIGHT ARROW - Double Sided |
| C-5 R | C-005-LR1 | Detour LEFT or RIGHT ARROW - Double Sided |
| C-5TL | C-005-LR2 | Detour with LEFT-AHEAD or RIGHT-AHEAD ARROW - Double Sided |
| C-5TR | C-005-LR2 | Detour with LEFT-AHEAD or RIGHT-AHEAD ARROW - Double Sided |
| C-6 | C-006-A | Detour AHEAD ARROW |
| C-6L | C-006-LR | Detour LEFT-AHEAD or RIGHT-AHEAD ARROW - Double Sided |
| C-6R | C-006-LR | Detour LEFT-AHEAD or RIGHT-AHEAD ARROW - Double Sided |
| C-7 | C-050-1 | Workers Below |
| C-8 | C-072 | Grader Working |
| C-9 | C-033 | Blasting Zone Shut Off Your Radio Transmitter |
| C-10 | C-034 | Blasting Zone Ends |
| C-11 | C-059-2 | Washout |
| C-13L, R | C-013-LR | Low Shoulder on Left or Right - Double Sided |
| C-15 | C-090 | Temporary Red Diamond SLOW |
| C-16 | C-092 | Temporary Red Square Hazard Marker |
| C-17 | C-051 | Bridge Repair |
| C-18 | C-018-1A | Construction AHEAD ARROW |
| C-19 | C-018-2A | Construction ( ) km AHEAD ARROW |
| C-20 | C-008-1 | PAVING NEXT ( ) km Please Obey Signs |
| C-21 | C-008-2 | SEALCOATING Loose Gravel Next ( ) km |
| C-22 | C-080-T | Construction Speed Zone |
| C-23 | C-086-1 | Thank You - Resume Speed |
| C-24 | C-030-8 | Single Lane Traffic |
| C-25 | C-017 | Bump symbol (Rough Roadway) |
| C-26 | C-007 | Broken Pavement |
| C-28 | C-001-1 | Flagger Ahead symbol |
| C-30 | C-030-2 | Centre Lane Closed |
| C-31 | C-032 | Reduce Speed |
| C-32 | C-074 | Mower Working |
| C-33L, R | C-010-LR | Uneven Pavement On Left or On Right - Double Sided |
| C-34 | | |

Math 217: Multilinearity of Determinants Professor Karen Smith (C)2015 UM Math Dept Licensed Under a Creative Commons By-NC-SA 4.0 International License

Math 217: Multilinearity of Determinants. Professor Karen Smith. (c)2015 UM Math Dept, licensed under a Creative Commons By-NC-SA 4.0 International License. A. Let $V \xrightarrow{T} V$ be a linear transformation where $V$ has dimension $n$. 1. What is meant by the determinant of $T$? Why is this well-defined? Solution note: The determinant of $T$ is the determinant of the $B$-matrix of $T$, for any basis $B$ of $V$. Since all $B$-matrices of $T$ are similar, and similar matrices have the same determinant, this is well-defined: it doesn't depend on which basis we pick. 2. Define the rank of $T$. Solution note: The rank of $T$ is the dimension of the image. 3. Explain why $T$ is an isomorphism if and only if $\det T$ is not zero. Solution note: $T$ is an isomorphism if and only if $[T]_B$ is invertible (for any choice of basis $B$), which happens if and only if $\det T \neq 0$. 4. Now let $V = \mathbb{R}^3$ and let $T$ be rotation around the axis $L$ (a line through the origin) by an angle $\theta$. Find a basis for $\mathbb{R}^3$ in which the matrix of $T$ is $\begin{bmatrix} 1 & 0 & 0 \\ 0 & \cos\theta & -\sin\theta \\ 0 & \sin\theta & \cos\theta \end{bmatrix}$. Use this to compute the determinant of $T$. Is $T$ orthogonal? Solution note: Let $v$ be any vector spanning $L$ and let $u_1, u_2$ be an orthonormal basis for $L^{\perp}$. Rotation fixes $v$, which means the $B$-matrix in the basis $(v, u_1, u_2)$ has first column $\begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix}$.

Determinants Math 122 Calculus III D Joyce, Fall 2012

Determinants. Math 122 Calculus III, D Joyce, Fall 2012. What they are. A determinant is a value associated to a square array of numbers, that square array being called a square matrix. For example, here are determinants of a general 2 × 2 matrix and a general 3 × 3 matrix: $\begin{vmatrix} a & b \\ c & d \end{vmatrix} = ad - bc$ and $\begin{vmatrix} a & b & c \\ d & e & f \\ g & h & i \end{vmatrix} = aei + bfg + cdh - ceg - afh - bdi$. The determinant of a matrix $A$ is usually denoted $|A|$ or $\det(A)$. You can think of the rows of the determinant as being vectors. For the 3 × 3 matrix above, the vectors are $u = (a, b, c)$, $v = (d, e, f)$, and $w = (g, h, i)$. Then the determinant is a value associated to $n$ vectors in $\mathbb{R}^n$. There's a general definition for $n \times n$ determinants. It's a particular signed sum of products of $n$ entries in the matrix where each product is of one entry in each row and column. The two ways you can choose one entry in each row and column of the 2 × 2 matrix give you the two products $ad$ and $bc$. There are six ways of choosing one entry in each row and column in a 3 × 3 matrix, and generally, there are $n!$ ways in an $n \times n$ matrix. Thus, the determinant of a 4 × 4 matrix is the signed sum of 24, which is 4!, terms. In this general definition, half the terms are taken positively and half negatively. In class, we briefly saw how the signs are determined by permutations.
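
The signed-sum definition in this excerpt can be sketched in a few lines of Python. This is only an illustration, not code from the Joyce notes: the function names `permutation_sign` and `det_by_permutations` are made up here, and the sign rule used (parity of the number of inversions) is one standard way to attach signs to permutations, assumed rather than taken from the excerpt.

```python
from itertools import permutations


def permutation_sign(perm):
    """Return +1 for an even permutation and -1 for an odd one (inversion count parity)."""
    inversions = sum(
        1
        for i in range(len(perm))
        for j in range(i + 1, len(perm))
        if perm[i] > perm[j]
    )
    return -1 if inversions % 2 else 1


def det_by_permutations(matrix):
    """Signed sum over all ways of picking one entry from each row and each column."""
    n = len(matrix)
    total = 0
    for perm in permutations(range(n)):
        product = 1
        for row, col in enumerate(perm):
            product *= matrix[row][col]  # entry from row `row`, column `perm[row]`
        total += permutation_sign(perm) * product
    return total
```

For a 2 × 2 matrix the loop visits exactly the two products ad and bc, and for a 4 × 4 matrix it visits 24 terms. The cost grows like n!, so this is a way to read the definition, not a practical algorithm.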

Matrices and Tensors

APPENDIX: MATRICES AND TENSORS. A.1. INTRODUCTION AND RATIONALE. The purpose of this appendix is to present the notation and most of the mathematical techniques that are used in the body of the text. The audience is assumed to have been through several years of college-level mathematics, which included the differential and integral calculus, differential equations, functions of several variables, partial derivatives, and an introduction to linear algebra. Matrices are reviewed briefly, and determinants, vectors, and tensors of order two are described. The application of this linear algebra to material that appears in undergraduate engineering courses on mechanics is illustrated by discussions of concepts like the area and mass moments of inertia, Mohr’s circles, and the vector cross and triple scalar products. The notation, as far as possible, will be a matrix notation that is easily entered into existing symbolic computational programs like Maple, Mathematica, Matlab, and Mathcad. The desire to represent the components of three-dimensional fourth-order tensors that appear in anisotropic elasticity as the components of six-dimensional second-order tensors and thus represent these components in matrices of tensor components in six dimensions leads to the nontraditional part of this appendix. This is also one of the nontraditional aspects in the text of the book, but a minor one. This is described in §A.11, along with the rationale for this approach. A.2. DEFINITION OF SQUARE, COLUMN, AND ROW MATRICES. An r-by-c matrix, M, is a rectangular array of numbers consisting of r rows and c columns:

Glossary of Linear Algebra Terms

INNER PRODUCT SPACES AND THE GRAM-SCHMIDT PROCESS. A. HAVENS. 1. The Dot Product and Orthogonality. 1.1. Review of the Dot Product. We first recall the notion of the dot product, which gives us a familiar example of an inner product structure on the real vector spaces $\mathbb{R}^n$. This product is connected to the Euclidean geometry of $\mathbb{R}^n$, via lengths and angles measured in $\mathbb{R}^n$. Later, we will introduce inner product spaces in general, and use their structure to define general notions of length and angle on other vector spaces. Definition 1.1. The dot product of real n-vectors in the Euclidean vector space $\mathbb{R}^n$ is the scalar product $\cdot : \mathbb{R}^n \times \mathbb{R}^n \to \mathbb{R}$ given by the rule $(u, v) = \left( \sum_{i=1}^{n} u_i e_i, \sum_{i=1}^{n} v_i e_i \right) \mapsto \sum_{i=1}^{n} u_i v_i$. Here $B_S := (e_1, \ldots, e_n)$ is the standard basis of $\mathbb{R}^n$. With respect to our conventions on basis and matrix multiplication, we may also express the dot product as the matrix-vector product $u^t v = \begin{bmatrix} u_1 & \cdots & u_n \end{bmatrix} \begin{bmatrix} v_1 \\ \vdots \\ v_n \end{bmatrix}$. It is a good exercise to verify the following proposition. Proposition 1.1. Let $u, v, w \in \mathbb{R}^n$ be any real n-vectors, and $s, t \in \mathbb{R}$ be any scalars. The Euclidean dot product $(u, v) \mapsto u \cdot v$ satisfies the following properties. (i) The dot product is symmetric: $u \cdot v = v \cdot u$. (ii) The dot product is bilinear: • $(su) \cdot v = s(u \cdot v) = u \cdot (sv)$, • $(u + v) \cdot w = u \cdot w + v \cdot w$.
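
As a quick numerical companion to Definition 1.1 and Proposition 1.1 in this excerpt, the sketch below computes the dot product as a sum of componentwise products and spot-checks symmetry and bilinearity on one example. The helper names `dot`, `scale`, and `add` are illustrative choices, not part of the Havens notes, and checking one example is of course not a proof.

```python
def dot(u, v):
    """Dot product of two equal-length vectors: the sum of u_i * v_i."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return sum(ui * vi for ui, vi in zip(u, v))


def scale(s, u):
    """Scalar multiple s * u."""
    return [s * ui for ui in u]


def add(u, v):
    """Componentwise sum u + v."""
    return [ui + vi for ui, vi in zip(u, v)]


u, v, w, s = [1, 2, 3], [4, 5, 6], [7, 8, 9], 5

# (i) symmetry
symmetric = dot(u, v) == dot(v, u)
# (ii) bilinearity: homogeneity in each slot and additivity in the first slot
homogeneous = dot(scale(s, u), v) == s * dot(u, v) == dot(u, scale(s, v))
additive = dot(add(u, v), w) == dot(u, w) + dot(v, w)
```

Integer entries are used so that the equalities hold exactly; with floating-point entries the same checks would need a tolerance.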

New Foundations for Geometric Algebra

Text published in the electronic journal Clifford Analysis, Clifford Algebras and their Applications vol. 2, No. 3 (2013) pp. 193-211. New foundations for geometric algebra. Ramon González Calvet, Institut Pere Calders, Campus Universitat Autònoma de Barcelona, 08193 Cerdanyola del Vallès, Spain. E-mail: [email protected] Abstract. New foundations for geometric algebra are proposed based upon the existing isomorphisms between geometric and matrix algebras. Each geometric algebra always has a faithful real matrix representation with a periodicity of 8. On the other hand, each matrix algebra is always embedded in a geometric algebra of a convenient dimension. The geometric product is also isomorphic to the matrix product, and many vector transformations such as rotations, axial symmetries and Lorentz transformations can be written in a form isomorphic to a similarity transformation of matrices. We collect the idea Dirac applied to develop the relativistic electron equation when he took a basis of matrices for the geometric algebra instead of a basis of geometric vectors. Of course, this way of understanding the geometric algebra requires new definitions: the geometric vector space is defined as the algebraic subspace that generates the rest of the matrix algebra by addition and multiplication; isometries are simply defined as the similarity transformations of matrices as shown above, and finally the norm of any element of the geometric algebra is defined as the nth root of the determinant of its representative matrix of order n. The main idea of this proposal is an arithmetic point of view consisting of reversing the roles of matrix and geometric algebras in the sense that geometric algebra is a way of accessing, working and understanding the most fundamental conception of matrix algebra as the algebra of transformations of multiple quantities.

Calculus Terminology

AP Calculus BC Calculus Terminology: Absolute Convergence; Asymptote; Continued Sum; Absolute Maximum; Average Rate of Change; Continuous Function; Absolute Minimum; Average Value of a Function; Continuously Differentiable Function; Absolutely Convergent; Axis of Rotation; Converge; Acceleration; Boundary Value Problem; Converge Absolutely; Alternating Series; Bounded Function; Converge Conditionally; Alternating Series Remainder; Bounded Sequence; Convergence Tests; Alternating Series Test; Bounds of Integration; Convergent Sequence; Analytic Methods; Calculus; Convergent Series; Annulus; Cartesian Form; Critical Number; Antiderivative of a Function; Cavalieri’s Principle; Critical Point; Approximation by Differentials; Center of Mass Formula; Critical Value; Arc Length of a Curve; Centroid; Curly d; Area below a Curve; Chain Rule; Curve; Area between Curves; Comparison Test; Curve Sketching; Area of an Ellipse; Concave; Cusp; Area of a Parabolic Segment; Concave Down; Cylindrical Shell Method; Area under a Curve; Concave Up; Decreasing Function; Area Using Parametric Equations; Conditional Convergence; Definite Integral; Area Using Polar Coordinates; Constant Term; Definite Integral Rules; Degenerate; Divergent Series; Function Operations; Del Operator; e; Fundamental Theorem of Calculus; Deleted Neighborhood; Ellipsoid; GLB; Derivative; End Behavior; Global Maximum; Derivative of a Power Series; Essential Discontinuity; Global Minimum; Derivative Rules; Explicit Differentiation; Golden Spiral; Difference Quotient; Explicit Function; Graphic Methods; Differentiable; Exponential Decay; Greatest Lower Bound; Differential

Rotation Matrix - Wikipedia, the Free Encyclopedia

Rotation matrix. From Wikipedia, the free encyclopedia. In linear algebra, a rotation matrix is a matrix that is used to perform a rotation in Euclidean space. For example, the matrix $R = \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix}$ rotates points in the xy-Cartesian plane counterclockwise through an angle θ about the origin of the Cartesian coordinate system. To perform the rotation, the position of each point must be represented by a column vector v, containing the coordinates of the point. A rotated vector is obtained by using the matrix multiplication Rv. In two and three dimensions, rotation matrices are among the simplest algebraic descriptions of rotations, and are used extensively for computations in geometry, physics, and computer graphics. Though most applications involve rotations in two or three dimensions, rotation matrices can be defined for n-dimensional space. Rotation matrices are always square, with real entries. Algebraically, a rotation matrix in n dimensions is an n × n special orthogonal matrix, i.e. an orthogonal matrix whose determinant is 1: $R^T = R^{-1}$, $\det R = 1$. The set of all rotation matrices forms a group, known as the rotation group or the special orthogonal group. It is a subset of the orthogonal group, which includes reflections and consists of all orthogonal matrices with determinant 1 or -1, and of the special linear group, which includes all volume-preserving transformations and consists of matrices with determinant 1.
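
The description in this excerpt (a counterclockwise rotation of the plane applied as the product Rv, with determinant 1) can be tried out with a minimal Python sketch. The function names `rotation_matrix_2d`, `apply`, and `det2` are illustrative and not taken from the article, and the matrix entries follow the standard counterclockwise convention for column vectors, which is assumed here.

```python
import math


def rotation_matrix_2d(theta):
    """2 x 2 matrix for a counterclockwise rotation by theta radians about the origin."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]


def apply(matrix, vector):
    """Matrix-vector product Rv, with v treated as a column vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def det2(matrix):
    """Determinant of a 2 x 2 matrix: ad - bc."""
    (a, b), (c, d) = matrix
    return a * d - b * c


R = rotation_matrix_2d(math.pi / 2)  # quarter turn
rotated = apply(R, [1.0, 0.0])       # close to [0, 1], up to floating-point rounding
```

Because the entries come from floating-point sine and cosine, results should be compared with a small tolerance rather than tested for exact equality.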

1 Sets and Set Notation. Definition 1 (Naive Definition of a Set)

LINEAR ALGEBRA MATH 2700.006 SPRING 2013 (COHEN) LECTURE NOTES. 1 Sets and Set Notation. Definition 1 (Naive Definition of a Set). A set is any collection of objects, called the elements of that set. We will most often name sets using capital letters, like A, B, X, Y, etc., while the elements of a set will usually be given lower-case letters, like x, y, z, v, etc. Two sets X and Y are called equal if X and Y consist of exactly the same elements. In this case we write X = Y. Example 1 (Examples of Sets). (1) Let X be the collection of all integers greater than or equal to 5 and strictly less than 10. Then X is a set, and we may write: X = {5, 6, 7, 8, 9}. The above notation is an example of a set being described explicitly, i.e. just by listing out all of its elements. The set brackets {···} indicate that we are talking about a set and not a number, sequence, or other mathematical object. (2) Let E be the set of all even natural numbers. We may write: E = {0, 2, 4, 6, 8, ...}. This is an example of an explicitly described set with infinitely many elements. The ellipsis (...) in the above notation is used somewhat informally, but in this case its meaning, that we should "continue counting forever," is clear from the context. (3) Let Y be the collection of all real numbers greater than or equal to 5 and strictly less than 10. Recalling notation from previous math courses, we may write: Y = [5, 10). This is an example of using interval notation to describe a set.

THE NUMBER of ⊕-SIGN TYPES Jian-Yi Shi Department Of

THE NUMBER OF ⊕-SIGN TYPES. Jian-yi Shi, Department of Mathematics, East China Normal University, Shanghai, 200062, P.R.C. In [6], the author introduced the concept of a sign type indexed by the set $\{(i, j) \mid 1 \le i, j \le n,\ i \ne j\}$ for the description of the cells in the affine Weyl group of type $\widetilde{A}_\ell$ (in the sense of Kazhdan and Lusztig [3]). Later, the author generalized it by defining a sign type indexed by any root system [8]. Since then, sign types have played an increasingly important role in the study of the cells of any irreducible affine Weyl group [1],[2],[5],[8],[9],[10],[12]. Thus it is worth studying sign types themselves. One task is to enumerate various kinds of sign types. In [8], the author verified Carter’s conjecture on the number of all sign types indexed by any irreducible root system. In the present paper, we consider a special kind of sign type, called ⊕-sign types. We show that these sign types are in one-to-one correspondence with the increasing subsets of the related positive root system as a partially ordered set. Thus we get the number of all the ⊕-sign types indexed by any irreducible positive root system $\Phi^+$ by enumerating all the increasing subsets of $\Phi^+$. Key words and phrases. ⊕-sign types, increasing subsets, Young diagram. Supported by the National Science Foundation of China and by the Science Foundation of the University Doctorial Program of CNEC.

Sign of the Derivative TEACHER NOTES MATH NSPIRED

Sign of the Derivative. TEACHER NOTES. MATH NSPIRED. Math Objectives • Students will make a connection between the sign of the derivative (positive/negative/zero) and the increasing or decreasing nature of the graph. Activity Type • Student Exploration. About the Lesson • Students will move a point on a function and observe the changes in the sign of the derivative. Based on observations, students will answer questions on a worksheet. In the second part of the investigation, students will move the point and see a trace of the derivative to connect the positive or negative sign of the derivative with the location on the coordinate plane. Directions • Grab the point on the function and move it along the curve. Observe the relationship between the sign of the derivative and the direction of the graph. TI-Nspire™ Technology Skills: • Download a TI-Nspire document • Open a document • Move between pages • Grab and drag a point. Tech Tips: • Make sure the font size on your TI-Nspire handheld is set to Medium. • You can hide the function entry line by pressing /G. Lesson Materials: Student Activity: Sign_of_the_Derivative_Student.pdf, Sign_of_the_Derivative_Student.doc. TI-Nspire document: Sign_of_the_Derivative.tns. Visit www.mathnspired.com for lesson updates. ©2010 DANIEL R. ILARIA, PH.D. Used with permission. Discussion Points and Possible Answers. PART I: Move to page 1.3. Tech Tips: The sign of the derivative will indicate negative when the function is decreasing and positive when the function is increasing. The screen will also indicate a zero derivative. Students will need to edit the function on the screen in order to investigate other functions.