CRYPTREC Review of EdDSA (CRYPTREC EX-3003-2020)

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Public-Key Cryptography

Public Key Cryptography. EJ Jung. Basic Public Key Cryptography. Given: everybody knows Bob's public key (how is this achieved in practice?); only Bob knows the corresponding private key. Goals: 1. Alice wants to send a secret message to Bob. 2. Bob wants to authenticate himself. Requirements for Public-Key Crypto. • Key generation: computationally easy to generate a pair (public key PK, private key SK); computationally infeasible to determine private key SK given only public key PK. • Encryption: given plaintext M and public key PK, easy to compute ciphertext C = E_PK(M). • Decryption: given ciphertext C = E_PK(M) and private key SK, easy to compute plaintext M; infeasible to compute M from C without SK; Decrypt(SK, Encrypt(PK, M)) = M. Requirements for Public-Key Cryptography (Henric Johnson): 1. Computationally easy for a party B to generate a pair (public key KUb, private key KRb). 2. Easy for sender to generate ciphertext: C = E_KUb(M). 3. Easy for the receiver to decrypt ciphertext using private key: M = D_KRb(C) = D_KRb[E_KUb(M)]. 4. Computationally infeasible to determine private key (KRb) knowing public key (KUb). 5. Computationally infeasible to recover message M, knowing KUb and ciphertext C. 6. Either of the two keys can be used for encryption, with the other used for decryption: M = D_KRb[E_KUb(M)] = D_KUb[E_KRb(M)]. Public-Key Cryptographic Algorithms: • RSA and Diffie-Hellman. • RSA: Ron Rivest, Adi Shamir and Len Adleman at MIT, in 1977. • RSA -

Modern Cryptography: Lecture 13 Digital Signatures

Modern Cryptography: Lecture 13 Digital Signatures. Daniel Slamanig. Organizational ● Where to find the slides and homework? – https://danielslamanig.info/ModernCrypto18.html ● How to contact me? – [email protected] ● Tutor: Karen Klein – [email protected] ● Official page at TU, Location etc. – https://tiss.tuwien.ac.at/!ourse/!o$rseDetails.+html?dswid=86-2&dsrid,6/0. !ourseNr,102262&semester,2018W ● Tutorial, TU site – https://tiss.tuwien.ac.at/!ourse/!o$rseAnnouncement.+html?dswid=4200.dsr id,-51.!ourseNum6er,10206-.!ourseSemester,2018W ● Exam for the second part: Thursday 31.21.2210 15:00-1/:00 (Tutorial slot). Overview: Digital Signatures. [Diagram: the signer A holds secret key sk_A, computes σ := Sign_skA(m) and sends (m, σ) over an insecure channel; the verifier holds public key pk_A and computes b := Vrfy_pkA(m, σ).] Digital Signatures: Intuitive Properties. Can be seen as the public-key analogue of MACs with public verifiability ● Integrity protection: Any modification of a signed message can be detected ● Source authenticity: The sender of a signed message can be identified ● Non-repudiation: The signer cannot deny having signed (sent) a message. Security (intuition): should be hard to come up with a signature for a message that has not been signed by the holder of the private key. Digital Signatures: Applications. Digital signatures have many applications and are at the heart of implementing public-key cryptography in practice ● Issuing certificates by CAs (Public Key Infrastructures): binding of identities to public keys ● Building authenticated channels: authenticate parties (servers) in security protocols (e.g., TLS) or secure messaging (WhatsApp, Signal, ...) ● Code signing: authenticate software/firmware (updates) ● Sign documents (e.g., contracts): Legal regulations define when digital signatures are equivalent to handwritten signatures ● Sign transactions: used in the cryptocurrency realm ● etc. -

Public Key Cryptography And

Public Key Cryptography and RSA. Raj Jain, Washington University in Saint Louis, Saint Louis, MO 63130, [email protected]. Audio/Video recordings of this lecture are available at: http://www.cse.wustl.edu/~jain/cse571-11/ Washington University in St. Louis, CSE571S, ©2011 Raj Jain. Overview: 1. Public Key Encryption 2. Symmetric vs. Public-Key 3. RSA Public Key Encryption 4. RSA Key Construction 5. Optimizing Private Key Operations 6. RSA Security. These slides are based partly on Lawrie Brown's slides supplied with William Stallings's book "Cryptography and Network Security: Principles and Practice," 5th Ed, 2011. Public Key Encryption: Invented in 1975 by Diffie and Hellman at Stanford. Encrypted_Message = Encrypt(Key1, Message); Message = Decrypt(Key2, Encrypted_Message). [Diagram: Text → (Key1) → Ciphertext → (Key2) → Text.] Keys are interchangeable. [Diagram: Text → (Key2) → Ciphertext → (Key1) → Text.] One key is made public while the other is kept private. Sender knows only public key of the receiver. Asymmetric. Public Key Encryption Example: Rivest, Shamir, and Adleman at MIT. RSA: Encrypted_Message = m^3 mod 187; Message = Encrypted_Message^107 mod 187. Key1 = <3,187>, Key2 = <107,187>. Message = 5. Encrypted Message = 5^3 = 125. Message = 125^107 mod 187 = 5 = 125^(64+32+8+2+1) mod 187 = {(125^64 mod 187)(125^32 mod 187)...(125^2 mod 187)(125 mod 187)} mod 187 -
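The toy RSA numbers in the excerpt above can be checked with a short Python sketch. It uses only the values given on the slide (Key1 = <3,187>, Key2 = <107,187>, message 5). The helper name `modexp_square_multiply` is hypothetical and added here for illustration; it mirrors the slide's decomposition of the exponent 107 into powers of two (64+32+8+2+1). Parameters this small are for illustration only and offer no security.

```python
def modexp_square_multiply(base, exponent, modulus):
    """Right-to-left square-and-multiply: multiply in base^(2^k) for each set bit k."""
    result = 1
    power = base % modulus  # holds base^(2^k) mod modulus at step k
    while exponent > 0:
        if exponent & 1:  # bit k of the exponent is set
            result = (result * power) % modulus
        power = (power * power) % modulus  # square for the next bit
        exponent >>= 1
    return result


# Values taken from the slide: Key1 = <3,187>, Key2 = <107,187>, Message = 5.
n = 187
key1_exp, key2_exp = 3, 107
message = 5

ciphertext = modexp_square_multiply(message, key1_exp, n)
recovered = modexp_square_multiply(ciphertext, key2_exp, n)

# "Keys are interchangeable": apply Key2 first, then Key1.
swapped = modexp_square_multiply(
    modexp_square_multiply(message, key2_exp, n), key1_exp, n
)

print(ciphertext, recovered, swapped)
```

Python's built-in `pow(base, exponent, modulus)` computes the same result and would normally be preferred; the explicit loop is written out only to show the decomposition used on the slide.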

CS 255: Intro to Cryptography 1 Introduction 2 End-To-End

Programming Assignment 2, Winter 2021. CS 255: Intro to Cryptography. Prof. Dan Boneh. Due Monday, March 1st, 11:59pm. 1 Introduction. In this assignment, you are tasked with implementing a secure and efficient end-to-end encrypted chat client using the Double Ratchet Algorithm, a popular session setup protocol that powers real-world chat systems such as Signal and WhatsApp. As an additional challenge, assume you live in a country with government surveillance. Thereby, all messages sent are required to include the session key encrypted with a fixed public key issued by the government. In your implementation, you will make use of various cryptographic primitives we have discussed in class: notably, key exchange, public key encryption, digital signatures, and authenticated encryption. Because it is ill-advised to implement your own primitives in cryptography, you should use an established library: in this case, the Stanford Javascript Crypto Library (SJCL). We will provide starter code that contains a basic template, which you will be able to fill in to satisfy the functionality and security properties described below. 2 End-to-end Encrypted Chat Client. 2.1 Implementation Details. Your chat client will use the Double Ratchet Algorithm to provide end-to-end encrypted communications with other clients. To evaluate your messaging client, we will check that two or more instances of your implementation can communicate with each other properly. We feel that it is best to understand the Double Ratchet Algorithm straight from the source, so we ask that you read Sections 1, 2, and 3 of Signal's published specification here: https://signal. -

A Public-Key Cryptosystem Based on Discrete Logarithm Problem Over Finite Fields F_{p^n}

IOSR Journal of Mathematics (IOSR-JM), e-ISSN: 2278-5728, p-ISSN: 2319-765X. Volume 11, Issue 1 Ver. III (Jan - Feb. 2015), PP 01-03, www.iosrjournals.org. A Public-Key Cryptosystem Based On Discrete Logarithm Problem over Finite Fields F_{p^n}. Saju M I (1), Lilly P L (2). (1) Assistant Professor, Department of Mathematics, St. Thomas' College, Thrissur, India. (2) Associate Professor, Department of Mathematics, St. Joseph's College, Irinjalakuda, India. Abstract: One of the classical problems in mathematics is the Discrete Logarithm Problem (DLP). The difficulty and complexity of solving the DLP is used in most cryptosystems. In this paper, a public key system based on the ring of polynomials over the field F_p is developed. The security of the system is based on the difficulty of finding discrete logarithms over the function field F_{p^n} with suitable prime p and sufficiently large n. The presented system has all the features of an ordinary public key cryptosystem. Keywords: Discrete logarithm problem, Function Field, Polynomials over finite fields, Primitive polynomial, Public key cryptosystem. I. Introduction. For the construction of a public key cryptosystem, we need a finite extension field F_{p^n} over F_p. In our paper [1] we designed a public key cryptosystem based on the discrete logarithm problem over the field F_2. Here, to increase the complexity and difficulty of solving the DLP, we made a proper additional modification to the system. A cryptosystem for message transmission means a map from units of ordinary text, called plaintext message units, to units of coded text, called ciphertext message units. The face of cryptography was radically altered when Diffie and Hellman invented an entirely new type of cryptography, called public key [Diffie and Hellman 1976][2]. -

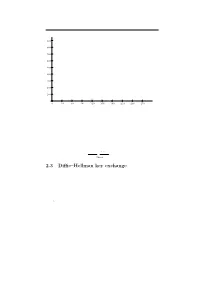

2.3 Diffie–Hellman Key Exchange

2.3. Diffie–Hellman key exchange. [Figure 2.2: Powers 627^i mod 941 for i = 1, 2, 3, ...] ... any group and use the group law instead of multiplication. -
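A minimal Python sketch of the Diffie–Hellman exchange, assuming (as the figure caption suggests) that 941 is the prime modulus and 627 the base. The function name `dh_keypair` and the way the secret exponents are drawn are illustrative assumptions, not taken from the excerpt, and a modulus this small is far too weak for real use.

```python
import secrets

# Assumed toy parameters, read off the figure caption: powers of 627 modulo 941.
p = 941
g = 627


def dh_keypair():
    """Pick a secret exponent in [1, p-2] and return (secret, g^secret mod p)."""
    secret = secrets.randbelow(p - 2) + 1
    public = pow(g, secret, p)
    return secret, public


# Each party generates a key pair and sends only the public value to the other.
alice_secret, alice_public = dh_keypair()
bob_secret, bob_public = dh_keypair()

# Each side raises the other's public value to its own secret exponent.
shared_alice = pow(bob_public, alice_secret, p)
shared_bob = pow(alice_public, bob_secret, p)

print(shared_alice == shared_bob)
```

Both sides arrive at g^(ab) mod p because exponentiation commutes. The same pattern carries over to other groups by replacing modular multiplication with the group law, which is the generalization the excerpt's last sentence points to.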

A Provably-Unforgeable Threshold EdDSA with an Offline Recovery Party

A Provably-Unforgeable Threshold EdDSA with an Offline Recovery Party. Michele Battagliola, Riccardo Longo, Alessio Meneghetti, and Massimiliano Sala. Department of Mathematics, University of Trento. arXiv:2009.01631v1 [cs.CR], 2 Sep 2020. Abstract: A (t, n)-threshold signature scheme enables distributed signing among n players such that any subset of size at least t can sign, whereas any subset with fewer players cannot. The goal is to produce threshold digital signatures that are compatible with an existing centralized signature scheme. Starting from the threshold scheme for the ECDSA signature due to Battagliola et al., we present the first protocol that supports EdDSA multi-party signatures with an offline participant during the key-generation phase, without relying on a trusted third party. Under standard assumptions we prove our scheme secure against adaptive malicious adversaries. Furthermore we show how our security notion can be strengthened when considering a rushing adversary. We discuss the resiliency of the recovery in the presence of a malicious party. Using a classical game-based argument, we prove that if there is an adversary capable of forging the scheme with non-negligible probability, then we can build a forger for the centralized EdDSA scheme with non-negligible probability. Keywords: 94A60 Cryptography, 12E20 Finite fields, 14H52 Elliptic curves, 94A62 Authentication and secret sharing, 68W40 Analysis of algorithms. 1 Introduction. A (t, n)-threshold signature scheme is a multi-party computation protocol that enables a subset of at least t among n authorized players to jointly perform digital signatures. The flexibility and security advantages of threshold protocols have become of central importance in the research for new cryptographic primitives [9]. -

On Notions of Security for Deterministic Encryption, and Efficient Constructions Without Random Oracles

A preliminary version of this paper appears in Advances in Cryptology - CRYPTO 2008, 28th Annual International Cryptology Conference, D. Wagner ed., LNCS, Springer, 2008. This is the full version. On Notions of Security for Deterministic Encryption, and Efficient Constructions without Random Oracles. Alexandra Boldyreva∗, Serge Fehr†, Adam O'Neill∗. Abstract: The study of deterministic public-key encryption was initiated by Bellare et al. (CRYPTO '07), who provided the "strongest possible" notion of security for this primitive (called PRIV) and constructions in the random oracle (RO) model. We focus on constructing efficient deterministic encryption schemes without random oracles. To do so, we propose a slightly weaker notion of security, saying that no partial information about encrypted messages should be leaked as long as each message is a-priori hard-to-guess given the others (while PRIV did not have the latter restriction). Nevertheless, we argue that this version seems adequate for certain practical applications. We show equivalence of this definition to single-message and indistinguishability-based ones, which are easier to work with. Then we give general constructions of both chosen-plaintext (CPA) and chosen-ciphertext-attack (CCA) secure deterministic encryption schemes, as well as efficient instantiations of them under standard number-theoretic assumptions. Our constructions build on the recently-introduced framework of Peikert and Waters (STOC '08) for constructing CCA-secure probabilistic encryption schemes, extending it to the deterministic-encryption setting and yielding some improvements to their original results as well. Keywords: Public-key encryption, deterministic encryption, lossy trapdoor functions, leftover hash lemma, standard model. ∗ College of Computing, Georgia Institute of Technology, 266 Ferst Drive, Atlanta, GA 30332, USA. -

Post-Quantum Cryptography

Post-quantum cryptography. Daniel J. Bernstein & Tanja Lange. University of Illinois at Chicago; Ruhr University Bochum & Technische Universiteit Eindhoven. 12 September 2020. Cryptography: • Motivation #1: Communication channels are spying on our data. • Motivation #2: Communication channels are modifying our data. • Literal meaning of cryptography: "secret writing". • Achieves various security goals by secretly transforming messages. • Confidentiality: Eve cannot infer information about the content. • Integrity: Eve cannot modify the message without this being noticed. • Authenticity: Bob is convinced that the message originated from Alice. Cryptography with symmetric keys: AES-128, AES-192, AES-256, AES-GCM, ChaCha20, HMAC-SHA-256, Poly1305, SHA-2, SHA-3, Salsa20. Cryptography with public keys: BN-254, Curve25519, DH, DSA, ECDH, ECDSA, EdDSA, NIST P-256, NIST P-384, NIST P-521, RSA encrypt, RSA sign, secp256k1. [Diagram: Sender "Alice" → untrustworthy network "Eve" → receiver "Bob".] -

Elliptic Curve Arithmetic for Cryptography

Elliptic Curve Arithmetic for Cryptography. Srinivasa Rao Subramanya Rao. August 2017. A Thesis submitted for the degree of Doctor of Philosophy of The Australian National University. © Copyright by Srinivasa Rao Subramanya Rao 2017. All Rights Reserved. Declaration: I confirm that this thesis is a result of my own work, except as cited in the references. I also confirm that I have not previously submitted any part of this work for the purposes of an award of any degree or diploma from any University. Srinivasa Rao Subramanya Rao, March 2017. Previously Published Content: During the course of research contributing to this thesis, the following papers were published by the author of this thesis. This PhD thesis contains material from these papers: 1. Srinivasa Rao Subramanya Rao, A Note on Schoenmakers Algorithm for Multi Exponentiation, 12th International Conference on Security and Cryptography (Colmar, France), Proceedings of SECRYPT 2015, pages 384-391. 2. Srinivasa Rao Subramanya Rao, Interesting Results Arising from Karatsuba Multiplication - Montgomery family of formulae, in Proceedings of Sixth International Conference on Computer and Communication Technology 2015 (Allahabad, India), pages 317-322. 3. Srinivasa Rao Subramanya Rao, Three Dimensional Montgomery Ladder, Differential Point Tripling on Montgomery Curves and Point Quintupling on Weierstrass' and Edwards Curves, 8th International Conference on Cryptology in Africa, Proceedings of AFRICACRYPT 2016, (Fes, Morocco), LNCS 9646, Springer, pages 84-106. 4. Srinivasa Rao Subramanya Rao, Differential Addition in Edwards Coordinates Revisited and a Short Note on Doubling in Twisted Edwards Form, 13th International Conference on Security and Cryptography (Lisbon, Portugal), Proceedings of SECRYPT 2016, pages 336-343 and 5. -

Hash Functions and the NIST SHA-3 Competition

The First 30 Years of Cryptographic Hash Functions and the NIST SHA-3 Competition. Bart Preneel, COSIC/Kath. Univ. Leuven (Belgium). Session ID: CRYP-202. Session Classification: Hash functions decoded. Hash functions: X.509 Annex D, RIPEMD-160, MDC-2, SHA-256, SHA-3, MD2, MD4, MD5, SHA-512, SHA-1. This is an input to a cryptographic hash function. The input is a very long string, that is reduced by the hash function to a string of fixed length. There are additional security conditions: it should be very hard to find an input hashing to a given value (a preimage) or to find two colliding inputs (a collision). [Diagram: input → h → 1A3FD4128A198FB3CA345932.] Hash function history 101. [Timeline figure, 1980 to 2010, naming DES, RSA, MD2, MD4, SNEFRU, MD5, SHA-1, RIPEMD-160, SHA-2, AES, Whirlpool, SHA-3.] Applications • digital signatures • data authentication • protection of passwords • confirmation of knowledge/commitment • micropayments • pseudo-random string generation/key derivation • construction of MAC algorithms, stream ciphers, block ciphers,… Agenda: Definitions; Iterations (modes); Compression functions; SHA-{0,1,2,3}; Bits and bytes. Hash function flavors: cryptographic hash function: MAC, MDC (this talk); MDC: OWHF, CRHF, UOWHF (TCR). Security requirements (n-bit result): preimage, 2^n; 2nd preimage, 2^n; collision, h(x) = h(x'), 2^(n/2). Informal definitions (1) • no secret parameters -
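The fixed-length property described in the excerpt above can be illustrated with Python's standard `hashlib` module. SHA-256 is used here only because it is one of the functions named on the slide; the two inputs are arbitrary choices made for this sketch.

```python
import hashlib

# Two inputs of very different lengths (arbitrary example data).
short_input = b"abc"
long_input = b"a" * 1_000_000

short_digest = hashlib.sha256(short_input).hexdigest()
long_digest = hashlib.sha256(long_input).hexdigest()

# Both digests are 256 bits, i.e. 64 hexadecimal characters, regardless of input size.
print(len(short_digest), len(long_digest))
print(short_digest != long_digest)
```

For an n-bit result such as this one (n = 256), the security requirements on the slide translate to a generic cost of about 2^n hash evaluations to find a preimage and about 2^(n/2) to find a collision.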

Making NTRU As Secure As Worst-Case Problems Over Ideal Lattices

Making NTRU as Secure as Worst-Case Problems over Ideal Lattices. Damien Stehlé (1) and Ron Steinfeld (2). (1) CNRS, Laboratoire LIP (U. Lyon, CNRS, ENS Lyon, INRIA, UCBL), 46 Allée d'Italie, 69364 Lyon Cedex 07, France. [email protected] – http://perso.ens-lyon.fr/damien.stehle (2) Centre for Advanced Computing - Algorithms and Cryptography, Department of Computing, Macquarie University, NSW 2109, Australia. [email protected] – http://web.science.mq.edu.au/~rons Abstract. NTRUEncrypt, proposed in 1996 by Hoffstein, Pipher and Silverman, is the fastest known lattice-based encryption scheme. Its moderate key-sizes, excellent asymptotic performance and conjectured resistance to quantum computers could make it a desirable alternative to factorisation and discrete-log based encryption schemes. However, since its introduction, doubts have regularly arisen on its security. In the present work, we show how to modify NTRUEncrypt to make it provably secure in the standard model, under the assumed quantum hardness of standard worst-case lattice problems, restricted to a family of lattices related to some cyclotomic fields. Our main contribution is to show that if the secret key polynomials are selected by rejection from discrete Gaussians, then the public key, which is their ratio, is statistically indistinguishable from uniform over its domain. The security then follows from the already proven hardness of the R-LWE problem. Keywords. Lattice-based cryptography, NTRU, provable security. 1 Introduction. NTRUEncrypt, devised by Hoffstein, Pipher and Silverman, was first presented at the Crypto'96 rump session [14]. Although its description relies on arithmetic over the polynomial ring Z_q[x]/(x^n − 1) for n prime and q a small integer, it was quickly observed that breaking it could be expressed as a problem over Euclidean lattices [6].