The Semi-Supervised Information Extraction System from HTML: an Improvement in the Wrapper Induction

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

STEPHEN MARK, ACE Editor

STEPHEN MARK, ACE Editor Television PROJECT DIRECTOR STUDIO / PRODUCTION CO. DELILAH (series) Various OWN / Warner Bros. Television EP: Craig Wright, Oprah Winfrey, Charles Randolph-Wright NEXT (series) Various Fox / 20th Century Fox Television EP: Manny Coto, Charlie Gogolak, Glenn Ficarra, John Requa SNEAKY PETE (series) Various Amazon / Sony Pictures Television EP: Blake Masters, Bryan Cranston, Jon Avnet, James Degus GREENLEAF (series) Various OWN / Lionsgate Television EP: Oprah Winfrey, Clement Virgo, Craig Wright HELL ON WHEELS (series) Various AMC / Entertainment One Television EP: Mark Richard, John Wirth, Jeremy Gold BLACK SAILS (series) Various Starz / Platinum Dunes EP: Michael Bay, Jonathan Steinberg, Robert Levine, Dan Shotz LEGENDS (pilot)* David Semel TNT / Fox 21 Prod: Howard Gordon, Jonathan Levin, Cyrus Voris, Ethan Reiff DEFIANCE (series) Various Syfy / Universal Cable Productions Prod: Kevin Murphy GAME OF THRONES** (season 2, ep.10) Alan Taylor HBO EP: David Benioff, D.B. Weiss BOSS (pilot* + series) Various Starz / Lionsgate Television EP: Farhad Safinia, Gus Van Sant, THE LINE (pilot) Michael Dinner CBS / CBS Television Studios EP: Carl Beverly, Sarah Timberman CANE (series) Various CBS / ABC Studios Prod: Jimmy Smits, Cynthia Cidre, MASTERS OF SCIENCE FICTION (anthology series) Michael Tolkin ABC / IDT Entertainment Jonathan Frakes Prod: Keith Addis, Sam Egan 3 LBS. (series) Various CBS / The Levinson-Fontana Company Prod: Peter Ocko DEADWOOD (series) Various HBO 2007 ACE Eddie Award Nominee | 2006 ACE Eddie Award Winner Prod: David Milch, Gregg Fienberg, Davis Guggenheim 2005 Emmy Nomination WITHOUT A TRACE (pilot) David Nutter CBS / Warner Bros. Television / Jerry Bruckheimer TV Prod: Jerry Bruckheimer, Hank Steinberg SMALLVILLE (pilot + series) David Nutter (pilot) CW / Warner Bros. -

Sagawkit Acceptancespeechtran

Screen Actors Guild Awards Acceptance Speech Transcripts TABLE OF CONTENTS: INAUGURAL SCREEN ACTORS GUILD AWARDS; 2ND ANNUAL SCREEN ACTORS GUILD AWARDS; 3RD ANNUAL SCREEN ACTORS GUILD AWARDS; 4TH ANNUAL SCREEN ACTORS GUILD AWARDS; 5TH ANNUAL SCREEN ACTORS GUILD AWARDS; 6TH ANNUAL SCREEN ACTORS GUILD AWARDS; 7TH ANNUAL SCREEN ACTORS GUILD AWARDS; 8TH ANNUAL SCREEN ACTORS GUILD AWARDS; 9TH ANNUAL SCREEN ACTORS GUILD AWARDS; 10TH ANNUAL SCREEN ACTORS GUILD AWARDS; 11TH ANNUAL SCREEN ACTORS GUILD AWARDS; 12TH ANNUAL SCREEN ACTORS GUILD AWARDS -

Audio Publishers Association Announces 2018 Audie Award® Finalists in 26 Categories

FOR IMMEDIATE RELEASE Contact: Rachel Tarlow Gul Over the River Public Relations 201-503-1321, [email protected] Audio Publishers Association announces 2018 Audie Award® finalists in 26 categories Winners to be honored at the Audies® Gala in New York City on May 31st New York, NY – The Audio Publishers Association (APA) has announced finalists for the 23rd annual Audie Awards® competition, the premier awards program in the United States recognizing distinction in audiobooks and spoken word entertainment. Winners will be revealed at the Audies® Gala on May 31, 2018, at the New-York Historical Society in New York City. “We are excited to announce all of our Audie Awards® finalists for 2018,” says APA President Linda Lee. “It seems that each year the sky is the limit as we see an increase in submissions of incredibly high quality works. This year’s Audies promises to be more exciting than ever as the judges work hard to pick the winners out of such an august field of finalists. We hope to see you there to join in the excitement!” “Audiobook aficionados and industry insiders alike have a heightened excitement about the amazing range of genres and production styles of the finalists and ultimate winners of the Audies,” adds Mary Burkey, Audie Awards® Competition Chair. “Our team of expert judges debating the winner of the Audiobook of the Year award must choose a single audio that combines the very best in narration and production quality with significant sales impact and marketing outreach to be the standard bearer of excellence for the format and audio publishing industry. -

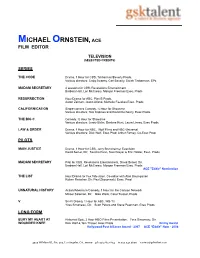

Michael Ornstein,Ace

MICHAEL ORNSTEIN, ACE FILM EDITOR TELEVISION (SELECTED CREDITS) SERIES THE CODE Drama, 1 Hour for CBS, Timberman/Beverly Prods. Various directors; Craig Sweeny, Carl Beverly, Sarah Timberman, EPs MADAM SECRETARY 4 seasons for CBS, Revelations Entertainment Barbara Hall, Lori McCreary, Morgan Freeman Exec. Prods RESURRECTION Hour Drama for ABC, Plan B Prods. Aaron Zelman, Joann Alfano, Michelle Fazekas Exec. Prods CALIFORNICATION Single-camera Comedy, ½ Hour for Showtime Various directors; Tom Kapinos and David Duchovny, Exec Prods. THE BIG C Comedy, ½ Hour for Showtime Various directors; Jenny Bicks, Darlene Hunt, Laura Linney, Exec Prods. LAW & ORDER Drama, 1 Hour for NBC, Wolf Films and NBC-Universal Various directors; Dick Wolf, Exec Prod; Arthur Forney, Co-Exec Prod. PILOTS MAIN JUSTICE Drama, 1 Hour for CBS, Jerry Bruckheimer Television David Semel, Dir; Sascha Penn, Sunil Nayar & Eric Holder, Exec. Prods. MADAM SECRETARY Pilot for CBS, Revelations Entertainment, David Semel, Dir. Barbara Hall, Lori McCreary, Morgan Freeman Exec. Prods ACE "Eddie" Nomination THE LIST Hour Drama for Fox Television, Co-editor with Alan Baumgarten Ruben Fleischer, Dir; Paul Zbyszewski, Exec. Prod. UNNATURAL HISTORY Action/Adventure/Comedy, 1 Hour for the Cartoon Network Mikael Salomon, Dir; Mike Werb, Carol Trussel, Prods. V Sci-Fi Drama, 1 Hour for ABC, WB-TV Yves Simoneau, Dir; Scott Peters and Steve Pearlman, Exec Prods LONG FORM BURY MY HEART AT WOUNDED KNEE Historical Epic, 2 Hour HBO Films Presentation, Yves Simoneau, Dir; Dick Wolf & Tom Thayer, Exec Prods Emmy Award Hollywood Post Alliance Award - 2007 ACE “Eddie” Nom - 2008 4929 Wilshire Bl., Ste. 259, Los Angeles, CA., 90010 ph 323.782.1854 fx 323.345.5690 [email protected] WHO IS CLARK ROCKEFELLER? Drama, 2 Hour for Lifetime Television, Sony Pictures TV Mikael Salomon, Dir; Judith Verno, Ilene Cahn-Powers, Exec Prods. -

Movie Data Analysis.Pdf

FinalProject 25/08/2018, 9:30 PM COGS108 Final Project Group Members: Yanyi Wang Ziwen Zeng Lingfei Lu Yuhan Wang Yuqing Deng Introduction and Background Movie revenue is one of the most important measures of whether a movie is good or bad. Revenue is also the most important and intuitive feedback to producers, directors and actors. It is therefore worth analyzing which factors correlate with revenue, so that producers, directors and actors know how to earn higher revenue on their next movie by focusing on the most correlated factors. Our project focuses on analyzing the factors that correlate with revenue, for example genres, elements in the movie, popularity, release month, runtime, vote average, vote count on the website, and cast. After the analysis, we can identify the crucial factors in a movie's revenue, and our analysis can be used as a guide by filmmakers who want to earn higher revenue on their next movie. They can focus on the most correlated factors, for example by shooting a specific genre and hiring actors who have higher average revenue. Research Question: Various factors blend together to create high revenue for a movie, but which aspects contribute most to higher revenue? Which aspects should people put more effort into, and which factors should they focus on when they try to raise the revenue of a movie? http://localhost:8888/nbconvert/html/Desktop/MyProjects/Pr_085/FinalProject.ipynb?download=false Page 1 of 62 Hypothesis: We predict that the following factors contribute the most to movie revenue. -

Movie Time Descriptive Video Service

DO NOT DISCARD THIS CATALOG. All titles may not be available at this time. Check the Illinois catalog under the subject “Descriptive Videos or DVD” for an updated list. This catalog is available in large print, e-mail and braille. If you need a different format, please let us know. Illinois State Library Talking Book & Braille Service 300 S. Second Street Springfield, IL 62701 217-782-9260 or 800-665-5576, ext. 1 (in Illinois) Illinois Talking Book Outreach Center 125 Tower Drive Burr Ridge, IL 60527 800-426-0709 A service of the Illinois State Library Talking Book & Braille Service and Illinois Talking Book Centers Jesse White • Secretary of State and State Librarian DESCRIPTIVE VIDEO SERVICE Borrow blockbuster movies from the Illinois Talking Book Centers! These movies are especially for the enjoyment of people who are blind or visually impaired. The movies carefully describe the visual elements of a movie — action, characters, locations, costumes and sets — without interfering with the movie’s dialogue or sound effects, so you can follow all the action! To enjoy these movies and hear the descriptions, all you need is a regular VCR or DVD player and a television! Listings beginning with the letters DV play on a VHS videocassette recorder (VCR). Listings beginning with the letters DVD play on a DVD Player. Mail in the order form in the back of this catalog or call your local Talking Book Center to request movies today. Guidelines 1. To borrow a video you must be a registered Talking Book patron. 2. You may borrow one or two videos at a time and put others on your request list. -

Fargo: Seeing the Significance of Style in Television Poetics?

Fargo: Seeing the significance of style in television poetics? By Max Sexton and Dominic Lees Keywords: Poetics; Mini-series; Style; Storyworld; Coen brothers; Noah Hawley Abstract This article argues that Fargo as an example of contemporary US television serial drama renews the debate about the links between Televisuality and narrative. In this way, it seeks to demonstrate how visual and audio strategies de-stabilise subjectivities in the show. By indicating the viewing strategies required by Fargo, it is possible to offer a detailed reading of high-end drama as a means of understanding how every fragment of visual detail can be organised into a ‘grand’ aesthetic. Using examples, the article demonstrates how the construction of meaning relies on the formally playful use of tone and texture, which form the locus of engagement, as well as aesthetic value within Fargo. At the same time, Televisuality can be more easily explained and understood as a strategy by writers, directors and producers to construct discourses around increased thematic uncertainty that invites audience interpretation at key moments. In Fargo, such moments will be shown to be a manifestation not only of the industrial strategy of any particular network ‒ its branding ‒ but how it is part of a programme’s formal design. In this way, it is hoped that a system of television poetics ‒ including elements of camera and performance ‒ generates new insight into the construction of individual texts. By addressing television style as significant, it avoids the need to refer to pre-existing but delimiting categories of high-end television drama as examples of small screen art cinema, the megamovie and so on. -

Critically-Acclaimed, Award-Winning Original Series That Continue to Make Their Mark on the Cultural Landscape

…critically-acclaimed, award-winning original series that continue to make their mark on the cultural landscape. HOMELAND Who’s the hero – who’s the threat? Golden Globe® winner Claire Danes and Damian Lewis star in the suspenseful Showtime Original Series HOMELAND. When rescued MIA Marine Sgt. Nicholas Brody returns home to a hero’s welcome after eight years in torturous enemy confinement – volatile CIA officer Carrie Mathison is convinced he’s a dangerous and determined traitor with terrorist intent. SHOWTIME has ordered season two of this award-winning drama series. SHAMELESS It’s summertime and the Gallaghers are cooking up fresh ways to bring home the bacon in a new season of the Showtime Original Series SHAMELESS starring William H. Macy and Emmy Rossum. Their ventures may not be moral – or even legal – but they certainly are outrageous. While Fiona works and parties at the hottest club in town, Lip, Ian, Debbie, Carl and Liam are getting into trouble of their own. And Frank is messing with the wrong people. CALIFORNICATION Golden Globe® winner David Duchovny is back in a bangin’ new season of CALIFORNICATION – and Hank Moody has become hip hop’s most wanted. Rich and powerful rap mogul extraordinaire Samurai Apocalypse (Wu-Tang’s RZA) is pimped-out famous – and hell bent on getting our bad boy writer into more trouble than he’s ever known. HOUSE OF LIES Don Cheadle and Kristen Bell are twisting the facts, spinning the numbers and playing corporate America for everything they’ve got in the outrageous new series HOUSE OF LIES. -

The Wonder Years: Nostalgia, Memory and Pastness in Television Credits

The Wonder Years: Nostalgia, Memory and Pastness in Television Credits Kathleen Williams Abstract: Opening sequences on television have developed a complex and multifaceted relationship to pastness and memory—particularly in relation to nostalgia. Series such as Transparent (2014–) use the space of the credits to blur our understanding of memory and fiction. Others such as Californication (2007–2014) include fake home videos or Polaroids to lend authenticity to the constructed family memories they depict. In this article, I explore the nostalgic qualities of contemporary television credits and opening sequences. Title sequences occupy a unique temporal position: while they are repeated before each episode and therefore are familiar to repeat viewers, they also typically depict events outside of the temporal realm of the television episode they open. The relationship between retro filters and aesthetics and the playful nostalgic framing of pastness through title sequences provides a framework to play with notions of temporality in television. This article contributes to the limited literature on credits by conceptualising title sequences in relation to the evocation and representation of memory and materiality. The American television series The Wonder Years (1988–1993) opened with fake home video of the main characters each week. The footage was updated as the actors aged along with their characters. The excerpts presented all the aesthetic and rhetorical markers of home video: graininess, hand-held movement, the subjects waving to the camera, and a black frame. The credits served to make the story of the Arnold family a familiar one; the action depicted in the credits was sufficiently generic to belong to any family and, in particular, any American family. -

Meditative Story Transcript – David Duchovny

Meditative Story Transcript – David Duchovny DAVID DUCHOVNY: In one exercise we drink an imaginary cup of coffee. For months. I feel the shape of the mug in my hands, the weight of it. I grasp the handle, and ah, there’s the heat, I can feel the heat. I smell the rich, smoky aroma of the coffee, taste it on my lips. I spend hours doing this. My Latin is pretty good, but this is all Greek to me. Acting forces me to use all of myself – not just my mind. My body, my heart. And I love it like I loved basketball and baseball. It’s not curing cancer. But it feels important. It feels good to me. I start to realize how much energy I’ve spent keeping busy – trying to move faster than my feelings. But here they want me to slow down, to feel everything. They demand it. And I can see now how my previous academic success was also somehow performative. Posturing. But now I’m inhabiting a different, more primal – maybe even more authentic – part of myself. And so I make the call, kind of. I subtly abandon my doctoral thesis without telling anyone. And I decide to be an actor without telling anyone. ROHAN GUNATILLAKE: I grew up watching David Duchovny on TV. As a teenager in the ‘90s, his depiction of Fox Mulder in “The X Files” had a big impact on me, and made me realize that it was actually OK to think differently. -

Adrian Hein Resume March 2020

ADRIAN HEIN **BEST STUNT 2016 UBCP/ACTRA AWARDS** **SAG Award Nominee ‘Outstanding Performance’ 2016** Height: 6’0 Waist: 34 Shoe: 10 US Mobile: 310.962.8081 Weight: 180lbs Inseam: 33 Hat: 7 1/8” CAN Mobile: 604.825.0706 Hair: Brown Jacket: 42 DOB: April, 1979 Email: [email protected] Eyes: Hazel Shirt: 16.5/35 UBCP | ACTRA | SAG/AFTRA [email protected] FEATURE FILMS ROLE STUNT COORDINATOR / DIRECTOR Jumanji 2 Stunts Chris Gordon | Wade Eastwood The Predator Stunts Marny Eng Alpha Stunt Double Rob Alonzo Running For Grace Stunt Coordinator David L. Cunningham Captain America: Civil War Stunt Double (Chris Evans as Captain America) Sam Hargrave | Andy Gill Jack Reacher: Never Go Back Stunts Wade Eastwood King Kong: Skull Island Stunts George Cottle | Brycen Counts Deadpool Stunt Double (Ryan Reynolds as Deadpool) Rob Alonzo | Philip J Silvera Heart of Man Stunt Coordinator Eric Esau Tomorrowland Stunt Double Rob Alonzo Jungle Book Stunt Bagheera (Performance Capture) Tommy Harper Aloha Stunt Double (Bradley Cooper) Brian Keaulana Night at the Museum 3 Stunts Brad Martin Heaven is for Real Stunts Leif Havdale Godzilla Stunts John Stoneham Jr. 7 Minutes Stunt Double (Luke Mitchell, Zane Holtz) Michael Hilow 300: Rise of an Empire Stunts (US Stunt Team - Greek, Persian, Spartan) Damon Caro | Matt Rugetti | Ryan Watson | Guillermo Grispo | Jim Halty Seventh Son Stunt Assassin Larry Lam | Paul Jennings Man of Steel Kryptonian Damon Caro World War Z Stunts Wade Eastwood / Simon Crane Underworld Awakening Lycan Jacob Brad Martin | Todd Schneider | JJ Perry Twilight Saga: Breaking Dawn Stunt Double (Peter Facinelli) Scott Ateah | EJ Foerster | Peng Zhang The Grey Stunt Double (Dallas Roberts) Ben Bray | Scott Ateah This Means War Stunt Double (Chris Pine) Jeff Habberstad | Marny Eng Caesar: Rise of the Apes Stunts Mike Mitchell Sucker Punch Stunts Damon Caro Percy Jackson Stunts Dean Choe 2012 Stunts John Stoneham Jr. -

Movies with Characters with Disabilities JOE TIMMONS / MARCH 26, 2003

Movies with Characters with Disabilities JOE TIMMONS / MARCH 26, 2003 An Affair to Remember — 1957 Disability: paralysis Summary: A remake of 1939’s Love Affair. Terry (Deborah Kerr) is on her way to meet the love of her life (Cary Grant) at the top of the Empire State Building when she is hit by a car and paralyzed. Oscar nominations: Best cinematography, costume design, score, song (“An Affair to Remember”) As Good As It Gets — 1997 Disability: obsessive compulsive disorder Summary: Melvin Udall (Jack Nicholson) is a cantankerous writer with obsessive compulsive disorder who softens when he meets a single mom waitress (Helen Hunt). Oscar awards: Best actor (Nicholson), actress (Hunt) Oscar nominations: Best picture, supporting actor (Greg Kinnear), film editing, score, screenplay At First Sight — 1999 Disability: blind Summary: Masseur Virgil (Val Kilmer) has been blind since age 3. He meets New York architect Amy (Mira Sorvino) who convinces him to have radical eye surgery done to restore his sight. Virgil regains his sight and must adjust to being able to see. A Beautiful Mind — 2001 Disability: schizophrenia Summary: Russell Crowe plays Nobel Prize-winning mathematician John Forbes Nash, who is diagnosed with schizophrenia. The movie is based on the Nash biography of the same title. Oscar awards: Best picture, director (Ron Howard), supporting actress (Jennifer Connelly), adapted screenplay. Oscar nominations: Best actor (Crowe), film editing, makeup, score. Benny & Joon — 1993 Disability: mental illness Summary: Benny (Aidan Quinn) cares for his sister Joon (Mary Stuart Masterson), who has a mental illness. He also inherits the care of Sam (Johnny Depp), who has a personality disorder.