A Framework for the Manipulation of Video Game Elements Using the Player’s Biometric Data

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

John Douglas Owens

John Douglas Owens Electrical and Computer Engineering, University of California One Shields Avenue, Davis, CA - USA +--- / [email protected] http://www.ece.ucdavis.edu/~jowens/ R Computer systems; parallel computing, general-purpose computation on graphics hardware / I GPU computing, parallel algorithms and data structures, graphics architectures, parallel architectures and programming models. E Stanford University Stanford, California Department of Electrical Engineering – Ph.D., Electrical Engineering, January M.S., Electrical Engineering, March Advisors: Professors William J. Dally and Pat Hanrahan Dissertation Topic: “Computer Graphics on a Stream Architecture” University of California, Berkeley Berkeley, California Department of Electrical Engineering and Computer Sciences – B.S., Highest Honors, Electrical Engineering and Computer Sciences, June P University of California, Davis Davis, California A Child Family Professor of Engineering and Entrepreneurship – Associate Professor – Assistant Professor – Professor in the Department of Electrical and Computer Engineering; member of Graduate Groups of Electrical and Computer Engineering and Computer Science. Visiting Scientist, Lawrence Berkeley National Laboratory. Twitter San Francisco, California Software Engineer (Sabbatical) July–December Engineer in Core System Libraries group in Twitter’s Runtime Systems division. Stanford University Stanford, California Research Assistant – An architect of the Imagine Stream Processor under the direction of Professor William J. Dally. Responsible for major portions of hardware and software design for Imagine and its tools and applications. Stanford University Stanford, California Teaching Assistant Fall Teaching assistant for Computer Science , “The Coming Revolution in Computer Architecture”, under Professor William J. Dally. Designed course with Professor Dally, including lecture topics, readings, and laboratories.
Interval Research Corporation Palo Alto, California Consultant – Investigated new graphics architectures under the direction of Dr. -

Master's Thesis: Visualizing Storytelling in Games

Chronicle Developing a visualisation of emergent narratives in grand strategy games EDVARD RUTSTRÖM JONAS WICKERSTRÖM Master's Thesis in Interaction Design Department of Applied Information Technology Chalmers University of Technology Gothenburg, Sweden 2013 Master's Thesis 2013:091 The Authors grants to Chalmers University of Technology and University of Gothenburg the non-exclusive right to publish the Work electronically and in a non-commercial purpose make it accessible on the Internet. The Authors warrants that they are the authors to the Work, and warrants that the Work does not contain text, pictures or other material that violates copyright law. The Authors shall, when transferring the rights of the Work to a third party (for example a publisher or a company), acknowledge the third party about this agreement. If the Authors has signed a copyright agreement with a third party regarding the Work, the Authors warrants hereby that they have obtained any necessary permission from this third party to let Chalmers University of Technology and University of Gothenburg store the Work electronically and make it accessible on the Internet. Chronicle Developing a Visualisation of Emergent Narratives in Grand Strategy Games © EDVARD RUTSTRÖM, June 2013. © JONAS WICKERSTRÖM, June 2013. Examiner: OLOF TORGERSSON Department of Applied Information Technology Chalmers University of Technology, SE-412 96, Göteborg, Sweden Telephone +46 (0)31-772 1000 Gothenburg, Sweden June 2013 Abstract Many games of high complexity give rise to emergent narratives, where the events of the game are retold as a story. The goal of this thesis was to investigate ways to support the player in discovering their own emergent stories in grand strategy games. -

PRESS RELEASE Media Contact Michael Prefontaine | Silicon Studio

PRESS RELEASE Media Contact Michael Prefontaine | Silicon Studio | [email protected] | +81 (0)3 5488 7070 From Silicon Studio and MISTWALKER New game "Terra Battle 2" under joint development Teaser site, trailer, and social media released Tokyo, Japan, (June 22, 2017) – Middleware and game development company Silicon Studio Corporation is excited to announce its joint development with renowned game developer MISTWALKER CORPORATION of the next game in the hit “Terra Battle” series, “Terra Battle 2”, for smartphones and PC. The official trailer and social media sites are set to open June 22, 2017. About Terra Battle 2 The battle gameplay that wowed so many players in the original "Terra Battle" remains, but now it is complemented by a full role-playing game in which every system has evolved to deliver a more deeply enriched story and experience. The adoption of the dynamic field map allows you to direct and travel with your characters throughout the world. Intense emotion abounds from fated encounters, bitter farewells, the joy of glory won, pain and heartbreak, and more heated battles that will have your hands sweating. Can the people of "Terra" ever uncover the elusive truth of their world? A spectacular journey is waiting for you. ● Gameplay screenshot (depicted is still under development) Screenshots from left to right: Title Screen, Field Map, Battle Scene, Event Scene ● Character / Guardian Left: Roland (Guardian) / Right: Sarah (Character) Trailer, Teaser site, Social Media Ahead of the release, the teaser site, trailer, and official Twitter and Facebook accounts will open. For now, these are only available in Japanese, but users are welcome to check in for more information. -

Achieving Safe DICOM Software in Medical Devices

Master Thesis in Software Engineering & Management REPORT NO. 2009:003 ISSN: 1651-4769 Achieving Safe DICOM Software in Medical Devices Kevin Debruyn IT University of Göteborg Chalmers University of Technology and University of Gothenburg Göteborg, Sweden 2009 Student Kevin Debruyn (820717-2795) Contact Information Phone: +46 73 7418420 / Email: [email protected] Course Supervisor Karin Wagner Course Coordinator Kari Wahll Start and End Date 21st of February 2008 to 30th of March 2009 Size 30 Credits Subject Achieving Safe DICOM Software in Medical Devices Overview The present document constitutes the project report that introduces, develops and presents the results of the thesis carried out by a master student of the IT University in Göteborg, Software Engineering & Management, from Spring 2008 through Spring 2009 at Micropos Medical AB. Summary This paper reports on an investigation into how to produce a reliable software component to extract critical information from DICOM files. The component shall manipulate safety-critical medical information, i.e. patient demographics and data specific to radiotherapy treatments, including radiation target volumes and dose intensity. Handling such sensitive data can potentially lead to medical errors and threaten the health of patients. Hence, guaranteeing reliability and safety is an essential part of the development process. Solutions for developing the component from scratch or reusing all or parts of existing systems and libraries will be evaluated and compared. The resulting component will be tested to verify that it satisfies its reliability requirements. Subsequently, the component is to be integrated within an innovative radiotherapy positioning system developed by a Swedish start-up, Micropos. -

Investigating the Effect of Pace Mechanic on Player Motivation And

Investigating the Effect of Pace Mechanic on Player Motivation and Experience by Hala Anwar A thesis presented to the University of Waterloo in fulfillment of the thesis requirement for the degree of Master of Applied Science (MASc) in Management Sciences Waterloo, Ontario, Canada, 2016 © Hala Anwar 2016 AUTHOR'S DECLARATION I hereby declare that I am the sole author of this thesis. This is a true copy of the thesis, including any required final revisions, as accepted by my examiners. I understand that my thesis may be made electronically available to the public. Hala Anwar Abstract Games have long been employed to motivate people towards positive behavioral change. Numerous studies, for example, have found people who were previously disinterested in a task can be enticed to spend hours gathering information, developing strategies, and solving complex problems through video games. While the effect of factors such as generational influence or genre appeal have previously been researched extensively in serious games, an aspect in the design of games that remains unexplored through scientific inquiry is the pace mechanic—how time passes in a game. Time could be continuous as in the real world (real-time) or it could be segmented into phases (turn-based). Pace mechanic is fiercely debated by many strategy game fans, where real-time games are widely considered to be more engaging, and the slower pace of turn-based games has been attributed to the development of mastery. In this thesis, I present the results of an exploratory mixed-methods user study to evaluate whether pace mechanic and type of game alter the player experience and are contributing factors to how quickly participants feel competent at a game. -

C:\Documents and Settings\Amir\.Texmacs\System\Tmp

Strategy Games Reference: Andrew Rollings and Ernest Adams on Game Design, Chapter 10 1 Introduction The origin of strategy games is rooted in their close cousins, board games. Computer strategy games have diversified into two main forms: classical turn-based strategy games and real-time strategy games. Real-time strategy games arrived on the scene after turn-based strategy games. 2 Themes Conquest (e.g., Age of Kings). Engage in conflict with one or more foes. Exploration (e.g., Sid Meier's Colonization). Explore a new world. Trade (e.g., the Tycoon series of games). More often than not, a strategy game blends these three activities. The extent to which any particular activity is dominant over the other determines the overall flavor of the game. However, the three activities are usually mutually interdependent. 3 Conquest: StarCraft StarCraft uses conquest as its primary mechanism. Exploration and trade do feature in the game, but only as an enabler for the player to conquer more effectively. The player must explore the area to be conquered and set up resource-processing plants to allow resources to be traded for weapons and units. 4 Exploration: Sid Meier's Colonization Sid Meier's Colonization is primarily about exploration. -

Experimental Comparison of Libraries for Emotion Detection Using a Webcam

Masarykova univerzita, Fakulta informatiky. Experimental Comparison of Libraries for Emotion Detection Using a Webcam. Master's thesis. Bc. Milan Záleský. Brno, autumn 2015. Declaration: I declare that this master's thesis is my own original work, which I have written independently. All sources and literature that I used or drew upon in writing it are properly cited in the thesis with a full reference to the respective source. Bc. Milan Záleský. Supervisor: RNDr. Zdenek Eichler. Keywords: OpenCV, facial expression analysis, webcam, experiment, emotion, emotion detection, experimental comparison. Acknowledgements: I thank RNDr. Zdenek Eichler, my supervisor, for his excellent mentoring, his willingness to help, and the optimistic attitude he showed throughout my writing. I thank my friends and family for their repeated expressions of support. Last but not least, I thank all participants in the experiment, without whose contribution I could not have carried out the comparison. Contents: 1 Introduction. 2 Emotion recognition. 2.1 What is an emotion? 2.2 Assessing emotions. 2.3 Using information technology to recognize emotions. 2.3.1 Facial Action Coding System. 2.3.2 Computer vision. 2.3.3 OpenCV. 2.3.4 FaceTales. 3 Method for selecting emotion recognition libraries. 3.1 Shortlisting criteria. 3.2 Installing the libraries. 3.3 Characteristics of the libraries chosen for comparison. 3.3.1 CLMtrackr. 3.3.2 EmotionRecognition. 3.3.3 InSight SDK. 3.3.4 Mutual comparison of the applications selected for the experiment. 4 Experiment design. 4.1 Preparing the experimental comparison of libraries. 4.2 Experiment variables. 4.2.1 Independent variables. 4.2.2 Dependent variables. 4.2.3 Observed variables. 4.2.4 External variables. -

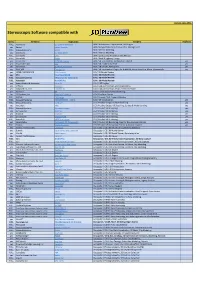

Stereo Software List

Version: June 2021 Stereoscopic Software compatible with Stereo Company Application Category Duplicate FULL Xeometric ELITECAD Architecture BIM / Architecture, Construction, CAD Engine yes Bexcel Bexcel Manager BIM / Design, Data, Project & Facilities Management FULL Dassault Systems 3DVIA BIM / Interior Modeling yes Xeometric ELITECAD Styler BIM / Interior Modeling FULL SierraSoft Land BIM / Land Survey Restitution and Analysis FULL SierraSoft Roads BIM / Road & Highway Design yes Xeometric ELITECAD Lumion BIM / VR Visualization, Architecture Models yes yes Fraunhofer IAO Vrfx BIM / VR enabled, for Revit yes yes Xeometric ELITECAD ViewerPRO BIM / VR Viewer, Free Option yes yes ENSCAPE Enscape 2.8 BIM / VR Visualization Plug-In for ArchiCAD, Revit, SketchUp, Rhino, Vectorworks yes yes OPEN CASCADE CAD CAD Assistant CAx / 3D Model Review yes PTC Creo View MCAD CAx / 3D Model Review FULL Dassault Systems eDrawings for Solidworks CAx / 3D Model Review FULL Autodesk NavisWorks CAx / 3D Model Review yes Robert McNeel & Associates. Rhino (5) CAx / CAD Engine yes Softvise Cadmium CAx / CAD, Architecture, BIM Visualization yes Gstarsoft Co., Ltd HaoChen 3D CAx / CAD, Architecture, HVAC, Electric & Power yes Siemens NX CAx / Construction & Manufacturing Yes 3D Systems, Inc. Geomagic Freeform CAx / Freeform Design FULL AVEVA E3D Design CAx / Process Plant, Power & Marine FULL Dassault Systems 3DEXPERIENCE - CATIA CAx / VR Visualization yes FULL Dassault Systems ICEM Surf CGI / Product Design, Surface Modeling yes yes Autodesk Alias CGI / Product -

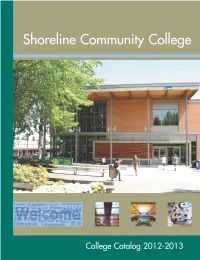

2012 Catalog

Shoreline Community College CATALOG 2012 - 2013 SHORELINE COMMUNITY COLLEGE • 16101 GREENWOOD AVE. N. • SHORELINE, WA 98133 • (206) 546-4101 • WWW.SHORELINE.EDU Shoreline Community College provides equal opportunity in education and employment and does not discriminate on the basis of race, sex, age, color, religion, national origin, marital status, gender, sexual orientation or disability. The following person has been designated to handle inquiries regarding the non-discrimination policies: Stephen Smith, Vice President for Human Resources and Legal Affairs Shoreline Community College 16101 Greenwood Ave N Shoreline, WA 98133 Telephone: 206-546-4694 E-Mail: [email protected] This publication is available in alternate formats by contacting the Office of Special Services at (206) 546-5832 or (206) 546-4520 (TDD). Every effort has been made to assure the accuracy of the information contained in this catalog. Students are advised, however, that such information is subject to change without notice, and advisors should, therefore, be consulted on a regular basis for current information. The College and its divisions reserve the right at any time to make changes in any regulations or requirements governing instruction in and graduation from the College and its various divisions. Changes shall take effect whenever the proper authorities determine and shall apply not only to prospective students but also to those who are currently enrolled at the College. Except as other conditions permit, the College will make every reasonable effort to ensure that students currently enrolled in programs and making normal progress toward completion of any requirements will have the opportunity to complete any program which is to be discontinued. -

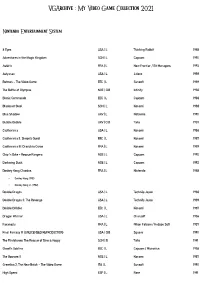

Vgarchive : My Video Game Collection 2021

VGArchive : My Video Game Collection 2021 Nintendo Entertainment System 8 Eyes USA | L Thinking Rabbit 1988 Adventures in the Magic Kingdom SCN | L Capcom 1990 Astérix FRA | L New Frontier / Bit Managers 1993 Astyanax USA | L Jaleco 1989 Batman – The Video Game EEC | L Sunsoft 1989 The Battle of Olympus NOE | CiB Infinity 1988 Bionic Commando EEC | L Capcom 1988 Blades of Steel SCN | L Konami 1988 Blue Shadow UKV | L Natsume 1990 Bubble Bobble UKV | CiB Taito 1987 Castlevania USA | L Konami 1986 Castlevania II: Simon's Quest EEC | L Konami 1987 Castlevania III: Dracula's Curse FRA | L Konami 1989 Chip 'n Dale – Rescue Rangers NOE | L Capcom 1990 Darkwing Duck NOE | L Capcom 1992 Donkey Kong Classics FRA | L Nintendo 1988 • Donkey Kong (1981) • Donkey Kong Jr. (1982) Double Dragon USA | L Technōs Japan 1988 Double Dragon II: The Revenge USA | L Technōs Japan 1989 Double Dribble EEC | L Konami 1987 Dragon Warrior USA | L Chunsoft 1986 Faxanadu FRA | L Nihon Falcom / Hudson Soft 1987 Final Fantasy III (UNLICENSED REPRODUCTION) USA | CiB Square 1990 The Flintstones: The Rescue of Dino & Hoppy SCN | B Taito 1991 Ghost'n Goblins EEC | L Capcom / Micronics 1986 The Goonies II NOE | L Konami 1987 Gremlins 2: The New Batch – The Video Game ITA | L Sunsoft 1990 High Speed ESP | L Rare 1991 IronSword – Wizards & Warriors II USA | L Zippo Games 1989 Ivan ”Ironman” Stewart's Super Off Road EEC | L Leland / Rare 1990 Journey to Silius EEC | L Sunsoft / Tokai Engineering 1990 Kings of the Beach USA | L EA / Konami 1990 Kirby's Adventure USA | L HAL Laboratory 1993 The Legend of Zelda FRA | L Nintendo 1986 Little Nemo – The Dream Master SCN | L Capcom 1990 Mike Tyson's Punch-Out!! 
EEC | L Nintendo 1987 Mission: Impossible USA | L Konami 1990 Monster in My Pocket NOE | L Team Murata Keikaku 1992 Ninja Gaiden II: The Dark Sword of Chaos USA | L Tecmo 1990 Rescue: The Embassy Mission EEC | L Infogrames Europe / Kemco 1989 Rygar EEC | L Tecmo 1987 Shadow Warriors FRA | L Tecmo 1988 The Simpsons: Bart vs. -

Silicon Studio “CEDEC 2020” to Announce Their New Global Illumination Approach “Hybrid Global Illumination Technology with Real-Time Ray Tracing and Enlighten”

PRESS RELEASE Media Contact [email protected] | +81 (0)3 5488 7070 Silicon Studio “CEDEC 2020” to announce their new global illumination approach “Hybrid global illumination technology with real-time Ray Tracing and Enlighten” Tokyo, Japan, (Aug. 7, 2020) – Silicon Studio Corporation, a middleware and technology developer focusing on entertainment, automotive, video, architecture and other industries, announced its intention to present at the upcoming “Computer Entertainment Developers Conference (CEDEC2020)” online, September 2nd through 4th, and to discuss its new global illumination approach, which integrates Enlighten with real-time Ray Tracing, at its special sponsored session. CEDEC is a conference for developers of computer entertainment content, featuring games. This year, due to the current COVID-19 situation around the world, the physical conference has been canceled and the event has moved online. Silicon Studio will discuss its new global illumination (GI) approach, which combines Enlighten with real-time Ray Tracing technology, at its sponsored session under the title “Hybrid global illumination technology with real-time Ray Tracing and Enlighten”. Ray Tracing is a technique for photorealistic CG rendering that simulates the refraction and reflection of light from light sources and other sources in accordance with the laws of physics. This technique has attracted considerable attention thanks to more powerful GPUs and advances in architectural CG. However, a large number of rays needs to be projected repeatedly to ensure high-quality GI if creators adopt Ray Tracing only. Gaming requires highly interactive environments, where sufficient computation can be difficult to achieve even using the latest powerful GPU cards. Therefore, Silicon Studio has been researching a reasonable way to render photorealistic imagery by using Enlighten and real-time Ray Tracing and has developed a hybrid global illumination method. -

“Bloodborne” Uses Post Effect Middleware “YEBIS 2” to Deliver a Convincing and Unique Moody Atmosphere

2015, Mar 26th Sony Computer Entertainment’s upcoming title “Bloodborne” uses post effect middleware “YEBIS 2” to deliver a convincing and unique moody atmosphere. Tokyo, Japan – March 26th, 2015 – Silicon Studio, a high-end middleware provider and game developer, announced today that Sony Computer Entertainment Worldwide Studios Japan Studio and FromSoftware will utilize Silicon Studio’s remarkable middleware solution, YEBIS 2, to power the visual effects in their upcoming PlayStation®4 (PS4™) title, Bloodborne. The cutting-edge post-processing effects of YEBIS 2 enable game developers to elevate graphic quality without sacrificing valuable development resources. “Through the power of YEBIS 2, developers can create fantastic visual effects that complement their games,” says Takehiko Terada, CEO of Silicon Studio. “As seen in the incredibly realistic lighting effects seen in Bloodborne, YEBIS 2 has the flexibility and power to achieve the visual goals of any game developer.” ■ Some screenshots of “Bloodborne” with YEBIS 2 post effect. ■ More information on YEBIS 2 and YEBIS 3 can be found at the following links: http://www.siliconstudio.co.jp/middleware/yebis/en/ ■ Platforms ready for “YEBIS 2” and “YEBIS 3”: PlayStation®4, PlayStation®3, PlayStation® Vita, Xbox One, Xbox360®, Windows® (DirectX 9/10/11), iOS, Android ■ ABOUT “Bloodborne” Publisher: Sony Computer Entertainment Japan Asia (SCEJA) Developer: SCE WWS Japan Studio / From Software, Inc. Platforms: PlayStation®4 Website: https://www.playstation.com/en-us/games/bloodborne-ps4/ Copyright: ©Sony Computer Entertainment Inc. Developed by FromSoftware, Inc. ■ ABOUT SILICON STUDIO Established in 2000, Silicon Studio is an international company based in Tokyo, Japan, that delivers leading innovation in digital entertainment technology and content.