Revisiting Email Spoofing Attacks

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

DMARC and Email Authentication

DMARC and Email Authentication Steve Jones Executive Director DMARC.org Cloud & Messaging Day 2016 Tokyo, Japan November 28th, 2016 What is DMARC.org? • DMARC.org is an independent, non-profit advocate for the use of email authentication • Supported by global industry leaders: Sponsors: Supporters: Copyright © 2016 Trusted Domain Project 2 What Does DMARC Do, Briefly? • DMARC allows the domain owner to signal that fraudulent messages using that domain should be blocked • Mailbox providers use DMARC to detect and block fraudulent messages from reaching your customers • Organizations can use DMARC to perform this filtering on incoming messages – helps protect from some kinds of phishing and “wire transfer fraud” email, also known as Business Email Compromise (BEC) • Encourage your partners/vendors to deploy inbound DMARC filtering for protection when receiving messages • More information available at https://dmarc.org Copyright © 2016 Trusted Domain Project 3 Overview Of Presentation •DMARC Adoption •Case Study - Uber •Technical Challenges •Roadmap Copyright © 2016 Trusted Domain Project 4 DMARC Adoption This section will provide an overview of DMARC adoption since it was introduced, globally and within particular country-specific top-level domains. It will also show how the DMARC policies published by top websites has evolved over the past two years. Copyright © 2016 Trusted Domain Project 5 Deployment & Adoption Highlights 2013: • 60% of 3.3Bn global mailboxes, 80% consumers in US protected • Outlook.com users submitted 50% fewer phishing -

Research Report Email Fraud Landscape, Q2 2018

2018 Q2 Email Fraud Landscape The Fake Email Crisis 6.4 Billion Fake Messages Every Day Email Fraud Landscape, Q2 2018 Executive Summary The crisis of fake email continues. Far from being merely a “social engineering” issue, fake email is a direct result of technical issues with the way email is implemented: It lacks a built-in authentication mechanism making it all too easy to spoof senders. However, this problem is also amenable to a technical solution, starting with the email authentication standards DMARC, SPF, and DKIM. For the purposes of this report, Valimail used proprietary data from our analysis of billions of email message authentication requests, plus our analysis of more than 3 million publicly accessible DMARC and SPF records, to compile a unique view of the email fraud landscape. Now in its third consecutive quarter, our report shows how the fight against fake email is progressing worldwide, in a variety of industry categories. Key Findings • 6.4 billion fake emails (with fake From: addresses) are sent worldwide every day • The United States continues to lead the world as a source of fake email • The rate of DMARC implementation continues to grow in every industry • DMARC enforcement remains a major challenge, with a failure rate of 75-80 percent in every industry • The rate of SPF usage continues to grow in every industry • SPF errors remain a significant problem • The U.S. federal government leads all other sectors in DMARC usage and DMARC enforcement www.valimail.com 2 2018 Q2 Email Fraud Landscape Life on Planet Email Email continues to be a robust, effective medium for communications worldwide, and it is both the last remaining truly open network in wide use as well as the largest digital network, connecting half the planet. -

End-To-End Measurements of Email Spoofing Attacks

End-to-End Measurements of Email Spoofing Attacks Hang Hu, Gang Wang [email protected] Computer Science, Virginia Tech Spear Phishing is a Big Threat • Spear phishing: targeted phishing attack, often involves impersonation • 91% of targeted attacks involve spear phishing1 • 95% of state-affiliated espionage attacks are traced to phishing2 1. Enterprise Phishing Susceptibility and Resiliency Report, PhishMe, 2016 2. 2013 Data Beach Investigation Report, Verizon, 2013 2 Real-life Spear Phishing Examples Yahoo DataJohn Breach Podesta’s in 2014 Gmail Account From Google [accounts.googlemail.comAffected] 500HillaryMillion ClintonYahoo! 2016User CampaignAccount Chairman Why can phishers still impersonate others so easily? 3 I Performed a Spear Phishing Test • I impersonated USENIX Security co-chairs to send spoofing emails to my account ([email protected]) Auto-loaded Profile Picture From Adrienne Porter Felt From William Enck Adrienne Porter Felt [email protected] Enck [email protected] [email protected]@ncsu.edu 4 Background: SMTP & Spoofing • Simple Mail Transfer Protocol (SMTP) defined in 1982 • SMTP has no built-in authentication mechanism • Spoof anyone by modifying MAIL FROM field of SMTP HTTP HTTP POP SMTP SMTP IMAP William ncsu.edu vt.edu Hang Mail Server Mail Server SMTP MAIL FROM: [email protected] Attacker Mail Server 5 Existing Anti-spoofing Protocols MAIL FROM: [email protected] Process SMTP, 1982 IP: 1.2.3.4 ncsu.edu Sender Policy Framework (SPF), 2002 • IP based authentication Publish authorized? the IP Is vt.edu Yes IP authorized? -

Erasmus Mundus Master's Journalism and Media Within Globalisation: The

Erasmus Mundus Master’s Journalism and Media within Globalisation: The European Perspective. Blue Book 2009-2011 The 2009- 2011 Masters group includes 49 students from all over the world: Australia, Brazil, Britain, Canada, China, Czech, Cuba, Denmark, Egypt, Ethiopia, Finland, France, Germany, India, Italy, Kenya, Kyrgyzstan, Norway, Panama, Philippines, Poland, Portugal, Serbia, South Africa, Spain, Ukraine and USA. 1 Mundus Masters Journalism and Media class of 2009-2011. Family name First name Nationality Email Specialism Lopez Belinda Australia lopez.belinda[at]gmail.com London City d'Essen Caroline Brazil/France caroldessen[at]yahoo.com.br Hamburg Werber Cassie Britain cassiewerber[at]gmail.com London City Baker Amy Canada amyabaker[at]gmail.com Swansea Halas Sarka Canada/Czech sarkahalasova[at]gmail.com London City Diao Ying China dydiaoying[at]gmail.com London City Piñero Roig Jennifer Cuba jenniferpiero[at]yahoo.es Hamburg Jørgensen Charlotte Denmark charlotte_j84[at]hotmail.com Hamburg Poulsen Martin Kiil Denmark poulsen[at]martinkiil.dk Swansea Billie Nasrin Sharif Denmark Nasrin.Billie[at]gmail.com Swansea Zidore Christensen Ida Denmark IdaZidore[at]gmail.com Swansea Sørensen Lasse Berg Denmark lasseberg[at]gmail.com London City Hansen Mads Stampe Denmark Mads_Stampe[at]hotmail.com London City Al Mojaddidi Sarah Egypt mojaddidi[at]gmail.com Swansea Gebeyehu Abel Adamu Ethiopia abeltesha2003[at]gmail.com Swansea Eronen Eeva Marjatta Finland eeva.eronen[at]iki.fi London City Abbadi Naouel France naouel.mohamed[at]univ-lyon2.fr -

Acid-H1-2021-Report.Pdf

AGARI CYBER INTELLIGENCE DIVISION REPORT H1 2021 Email Fraud & Identity Deception Trends Global Insights from the Agari Identity Graph™ © Copyright 2021 Agari Data, Inc. Executive Summary Call it a case of locking the back window while leaving the front door wide open. A year into the pandemic and amid successful attacks on GoDaddy1, Magellan Health², and a continuous stream of revelations about the SolarWinds “hack of the decade,” cyber-attackers are proving all too successful at circumventing the elaborate defenses erected against them³. But despite billions spent on perimeter and endpoint security, phishing and business email compromise (BEC) scams continue to be the primary attack vectors into organizations, often giving threat actors the toehold they need to wreak havoc. In addition to nearly $7.5 billion in direct losses each year, advanced email threats like the kind implicated in the SolarWinds case⁴ suggest the price tag could be much higher. As corroborated in this analysis from the Agari Cyber Intelligence Division (ACID), the success of these attacks is growing far less reliant on complex technology than on savvy social engineering ploys that easily evade most of the email defenses in use today. Sophisticated New BEC Actors Signal Serious Consequences Credential phishing accounted for 63% of all phishing attacks during the second half of 2020 as schemes related to COVID-19 gave way to a sharp rise in payroll diversion scams, as well as fraudulent Zoom, Microsoft and Amazon alerts targeting millions of corporate employees working from home. Meanwhile, the state- sponsored operatives behind the SolarWinds hack were just a few of the more sophisticated threat actors moving into vendor email compromise (VEC) and other forms of BEC. -

SECURITY GUIDE with Internet Use on the Rise, Cybercrime Is Big Business

SECURITY GUIDE With internet use on the rise, cybercrime is big business. Computer savvy hackers and opportunistic spammers are constantly trying to steal or scam money from internet users. PayPal works hard to keep your information secure. We have lots of security measures in place that help protect your personal and financial information. PayPal security key Encryption This provides extra security when When you communicate with Here’s you log in to PayPal and eBay. When PayPal online or on your mobile, how to get you opt for a mobile security key, the information you provide is we’ll SMS you a random 6 digit encrypted. This means it can only a security key code to enter with your password be read by you. A padlock symbol when you log in to your accounts. is displayed on the right side of your 1. Log in to your PayPal You can also buy a credit card sized web browser to let you know you account at device that will generate this code. are viewing a secure web page. www.paypal.com.au Visit our website and click Security to learn more. Automatic timeout period 2. Click Profile then If you’re logged into PayPal and My settings. Website identity verification there’s been no activity for 15 3. Click Get started beside If your web browser supports an minutes, we’ll log you out to help “Security key.” Extended Validation Certificate, the stop anyone from accessing your address bar will turn green when information or transferring funds 4. Click Get security key you’re on PayPal’s site. -

View Presentation Slides

www.staysafeonline.org Goal of 5-Step Approach Is Resilience Know the threats Detect problems Know what recovery and Identify and and respond quickly looks like and prepare Protect your assets and appropriately Thanks to our National Sponsors Corey Allert, Manager Network Security How to identify SPAM and protect yourself Corey Allert 8/14/2018 Confidential Definitions • Spam is the practice of sending unsolicited e-mail messages, frequently with commercial content, in large quantities to an indiscriminate set of recipients. • Phishing is a way of attempting to acquire sensitive information such as usernames, passwords and credit card details by masquerading as a trustworthy entity in an electronic communication. • Spoofing is e-mail activity in which the sender address is altered to appear as though the e-mail originated from a different source. Email doesn't provide any authentication, it is very easy to impersonate and forge emails. • Ransomware is a type of malware that prevents or limits users from accessing their system, either by locking the system's screen or by locking the users' files unless a ransom is paid 8/14/2018 Where does spam come from? • Spam today is sent via “bot-nets”, networks of virus- or worm-infected personal computers in homes and offices around the globe. • Some worms install a backdoor which allows the spammer access to the computer and use it for malicious purposes. • Others steal credentials for public or small company email accounts and send spam from there. • A common misconception is that spam is blocked based on the sending email address. • Spam is primarily identified by sending IP address and content. -

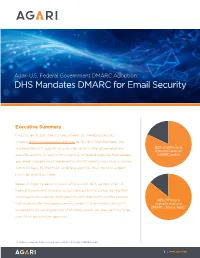

DHS Mandates DMARC for Email Security

Agari U.S. Federal Government DMARC Adoption: DHS Mandates DMARC for Email Security Executive Summary On October 16, 2017, the U.S. Department of Homeland Security issued a Binding Operational Directive (BOD) 18-01 that mandates the implementation of specific security standards to strengthen email and 82% of all Federal domains have no web site security. As part of this directive, all federal agencies that operate DMARC policy .gov email domains must implement a DMARC monitoring policy (p=none) within 90 days. Furthermore, all federal agencies must move to a reject policy (p=reject) by 1 year. Based on Agari research of public DNS records, 82% percent of all US Federal Government domains do not have a DMARC policy, leaving their constituents unprotected from phishing and other forms of email attacks 86% of Federal that impersonate their agency email domains. Cybercriminals exploit this domains that use DMARC choose Agari 1 vulnerability by sending billions of phishing emails per year claiming to be from these government agencies. 1 Includes all domains that send aggregate data to a 3rd party DMARC vendor. 1 | www.agari.com Email Abuse on Federal Agency Domains Phishing continues to be a pervasive threat in the United States and around the world.The impact of these threats has been felt specifically by government agencies. Beyond the high-profile targeted attacks that have made headlines, criminals are executing phishing attacks leveraging the brand name of agencies. Indeed, over the last six months, Agari has seen an amplification of attacks against our Federal customers. As the following chart indicates, on the email-sending and defensive domains that we monitor, 25% of email volume was malicious or failing authentication. -

From SEG to SEC: the Rise of the Next-Generation Secure Email Cloud Executive Summary the Time for Next-Generation Email Security Is Now

WHITE PAPER From SEG to SEC: The Rise of the Next-Generation Secure Email Cloud Executive Summary The Time for Next-Generation Email Security is Now The secure email gateway (SEG) worked for a number of years, but the SEG is no match for a new generation of rapidly evolving advanced The FBI email attacks that use identity deception to trick recipients. With estimates business email compromise scams, spear-phishing attacks, and data breaches, along with other types of crime, cybercriminals are seeing 20,000 massive success to the tune of $2.71 billion each year in the United victims lost States. At the same time that cybercriminals are evolving their tactics, $1.3B businesses are shedding on-premises infrastructure, moving en in 2018 from masse to cloud-based platforms such as Microsoft Office 365 or G business email Suite. These platforms provide native support for anti-spam, virus compromise and malware blocking, email archiving, content filtering, and even sandboxing, but they lack when it comes to protecting against in the United advanced email threats that use identity deception techniques to trick States alone. recipients. This move to cloud-based email and the onslaught of zero-day attacks that successfully penetrate the inbox are shifting email security from signature-based inspection of email on receipt to continuous detection and response using machine learning to detect fraudulent emails and to hunt down latent threats that escaped initial detection or have activated post-delivery. As a result, the Secure Email Cloud has emerged. This AI, graph-based approach detects advanced email attacks and cuts incident response time up to 95% in an effort to reduce the risk of business disruption and the rapidly increasing financial losses from data breaches, ransomware, and phishing. -

Download Our Fraud and Cybercrime Vulnerabilities On

Fraud and cybercrime vulnerabilities on AIM Research into the risks impacting the top 200 AIM listed businesses Audit / Tax / Advisory / Risk Smart decisions. Lasting value. 2 Contents Introduction 5 Key findings 6 Case studies and examples 12 What should organisations do? 18 Organisations and authors profile 20 Appendices 23 Fraud and cybercrime vulnerabilities on AIM 3 Introduction Key findings Ransomware Risk1 47.5% of companies had at least one external 1 internet service exposed, which would place them at a higher risk of a ransomware attack. Email Spoofing 91.5% of companies analysed were 2 exposed to having their email addresses spoofed. Vulnerable Services 85% of companies were running at least one 3 service, such as an email server or web server, with a well-known vulnerability to a cyber attack. Out of Date Software 41.5% of companies had at least one service 4 that was using software which was out of date, no longer supported and vulnerable to cyber attack. Certificate Issues 31.5% of companies had at least 5 one internet security certificate which had expired, been revoked or distrusted. Domain registration risks 64% of companies had at least one domain 6 registered to a personal or individual email address. 1 A new category of risk that was not included in the previous KYND / Crowe analysis of legal firms. Please see ‘Ransomware Risk’ in this report for further context. 4 Introduction There has been a surge in fraud and cybercrime in the UK and AIM listed businesses are not immune. Irrespective of size, listed businesses The impact of a cyber attract cybercriminals due to their visibility and the opportunity to breach could be use share price as leverage to devastating, including extract ransom payments. -

Characterization of Portuguese Web Searches

FACULDADE DE ENGENHARIA DA UNIVERSIDADE DO PORTO Characterization of Portuguese Web Searches Rui Ribeiro Master in Informatics and Computing Engineering Supervisor: Sérgio Nunes (PhD) July 11, 2011 Characterization of Portuguese Web Searches Rui Ribeiro Master in Informatics and Computing Engineering Approved in oral examination by the committee: Chair: João Pascoal Faria (PhD) External Examiner: Daniel Coelho Gomes (PhD) Supervisor: Sérgio Sobral Nunes (PhD) 5st July, 2011 Abstract Nowadays the Web can be seen as a worldwide library, being one of the main access points to information. The large amount of information available on websites all around the world raises the need for mechanisms capable of searching and retrieving relevant information for the user. Information retrieval systems arise in this context, as systems capable of searching large amounts of information and retrieving relevant information in the user’s perspective. On the Web, search engines are the main information retrieval sys- tems. The search engine returns a list of possible relevant websites for the user, according to his search, trying to fulfill his information need. The need to know what users search for in a search engine led to the development of methodologies that can answer this problem and provide statistical data for analysis. Many search engines store the information about all queries made in files called trans- action logs. The information stored in these logs can vary, but most of them contain information about the user, query date and time and the content of the query itself. With the analysis of these logs, it is possible to get information about the number of queries made on the search engine, the mean terms per query, the mean session duration or the most common topics. -

Using Dmarc to Improve Your Email Reputation Zink

USING DMARC TO IMPROVE YOUR EMAIL REPUTATION ZINK USING DMARC TO IMPROVE up with ways to mitigate this problem using two primary technologies. YOUR EMAIL REPUTATION Terry Zink 1.1 Terminology Microsoft, USA In email, people naturally thing that the sender of the message is the one in the From: fi eld – the one that they see in their email Email [email protected] client. For example, suppose that you are a travel enthusiast and you receive the email shown in Figure 3. You received the message ‘from’ [email protected], right? Wrong. ABSTRACT In email, there are two ‘From’ addresses: In 2012, the world of email fi ltering created a new tool to combat 1. The SMTP MAIL FROM, otherwise known as the RFC spam and phishing: DMARC [1, 2]. DMARC, or Domain-based 5321.MailFrom [3]. This is the email address to which the Message Authentication, Reporting & Conformance, is a technology that is designed to prevent spammers from From [email protected] <[email protected]> forging the sender, thus making brands more resistant to Subject Receipt for your Payment to Penguin Magic abuse. However, its most powerful feature is the built-in To Me reporting mechanism that lets brand owners know they are being spoofed. June 10, 2014 08:42:54 PDT DMARC has its upsides, and it is very useful for Transaction ID: 8KAHSL918102341 preventing spoofi ng, but it also has some drawbacks – it will fl ag some legitimate email as spam, and it will Hello Terry Zink, cause some short-term pain. You sent a payment of $427.25 USD to Penguin Magic.