NVIDIA's Fermi: the First Complete GPU Computing Architecture

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

-

Animatsiooni Töövõtted Ja Terminid

Multimeedium, 2D animatsioon Animatsiooni töövõtted ja terminid Nii 2D kui ka 3D animatsioonide juures kasutatakse mitmeid samu võtteid ja termineid. Mõned neist on järgnevas lahtiseletatud. Keyframe Keyframe ehk võtmekaader on selline kaader, millel autor määrab objekti(de) täpse asukoha, suuruse, kuju, värvuse jms. antud ajahetkel. Kaadrid kahe keyframe vahel genereeritakse tavaliselt animatsiooniprogrammi poolt Keyframe 1 Keyframe 2 keerukate arvutuste tulemusena. Traditsioonilise animeerimise korral joonistavad peakunstnikud vajalikud keyframe'id ning nende assistendid kõik ülejäänu. Tracing Tracing on meetod uue kaadri loomiseks eelmise või vahel ka järgmise kopeerimise (trace) teel. Kopeeritava kujutise näitamiseks kasutatakse mitmeid meetodeid, sealhulgas aluseks oleva kujutise näitamine teise värviga, sageli sinisega. Tweening Tweening on tehnika, mis etteantud keyframe'ide vahele genereerib kasutaja poolt etteantud arvu kaadreid, millede jooksul toimub järkjärguline üleminek esimeselt keyframe'ilt teisele. Võttes arvesse kahe keyframe'i erinevusi, kalkuleerib arvuti iga kaadri jaoks proportsionaalsed muudatused objektide asukoha, suuruse, värvuse, kuju jms jaoks. Mida rohkem kaadreid lastakse genereerida, seda väiksemad tulevad järjestikuste kaadrite vahelised erinevused ning seda sujuvam tuleb liikumine. Keyframe 1 genereeritud kaadrid Keyframe 2 Rendering Rendering on protsess, mille käigus objektide traatmudelid (wireframe vahel ka mesh) kaetakse pinnatekstuuridega, neile lisatakse valgusefektid ja värvid, et saada realistlik -

Intel® Desktop Board DG965PZ Technical Product Specification

Intel® Desktop Board DG965PZ Technical Product Specification August 2006 Order Number: D56009-001US The Intel® Desktop Board DG965PZ may contain design defects or errors known as errata that may cause the product to deviate from published specifications. Current characterized errata are documented in the Intel Desktop Board DG965PZ Specification Update. Revision History Revision Revision History Date -001 First release of the Intel® Desktop Board DG965PZ Technical Product August 2006 Specification. This product specification applies to only the standard Intel® Desktop Board DG965PZ with BIOS identifier MQ96510A.86A. Changes to this specification will be published in the Intel Desktop Board DG965PZ Specification Update before being incorporated into a revision of this document. INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL’S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. INTEL PRODUCTS ARE NOT INTENDED FOR USE IN MEDICAL, LIFE SAVING, OR LIFE SUSTAINING APPLICATIONS. Intel Corporation may have patents or pending patent applications, trademarks, copyrights, or other intellectual property rights that relate to the presented subject matter. The furnishing of documents and other materials and information does not provide any license, express or implied, by estoppel or otherwise, to any such patents, trademarks, copyrights, or other intellectual property rights. -

Lecture 7 CUDA

Lecture 7 CUDA Dr. Wilson Rivera ICOM 6025: High Performance Computing Electrical and Computer Engineering Department University of Puerto Rico Outline • GPU vs CPU • CUDA execution Model • CUDA Types • CUDA programming • CUDA Timer ICOM 6025: High Performance Computing 2 CUDA • Compute Unified Device Architecture – Designed and developed by NVIDIA – Data parallel programming interface to GPUs • Requires an NVIDIA GPU (GeForce, Tesla, Quadro) ICOM 4036: Programming Languages 3 CUDA SDK GPU and CPU: The Differences ALU ALU Control ALU ALU Cache DRAM DRAM CPU GPU • GPU – More transistors devoted to computation, instead of caching or flow control – Threads are extremely lightweight • Very little creation overhead – Suitable for data-intensive computation • High arithmetic/memory operation ratio Grids and Blocks Host • Kernel executed as a grid of thread Device blocks Grid 1 – All threads share data memory Kernel Block Block Block space 1 (0, 0) (1, 0) (2, 0) • Thread block is a batch of threads, Block Block Block can cooperate with each other by: (0, 1) (1, 1) (2, 1) – Synchronizing their execution: For hazard-free shared Grid 2 memory accesses Kernel 2 – Efficiently sharing data through a low latency shared memory Block (1, 1) • Two threads from two different blocks cannot cooperate Thread Thread Thread Thread Thread (0, 0) (1, 0) (2, 0) (3, 0) (4, 0) – (Unless thru slow global Thread Thread Thread Thread Thread memory) (0, 1) (1, 1) (2, 1) (3, 1) (4, 1) • Threads and blocks have IDs Thread Thread Thread Thread Thread (0, 2) (1, 2) (2, -

Gs-35F-4677G

March 2013 NCS Technologies, Inc. Information Technology (IT) Schedule Contract Number: GS-35F-4677G FEDERAL ACQUISTIION SERVICE INFORMATION TECHNOLOGY SCHEDULE PRICELIST GENERAL PURPOSE COMMERCIAL INFORMATION TECHNOLOGY EQUIPMENT Special Item No. 132-8 Purchase of Hardware 132-8 PURCHASE OF EQUIPMENT FSC CLASS 7010 – SYSTEM CONFIGURATION 1. End User Computer / Desktop 2. Professional Workstation 3. Server 4. Laptop / Portable / Notebook FSC CLASS 7-25 – INPUT/OUTPUT AND STORAGE DEVICES 1. Display 2. Network Equipment 3. Storage Devices including Magnetic Storage, Magnetic Tape and Optical Disk NCS TECHNOLOGIES, INC. 7669 Limestone Drive Gainesville, VA 20155-4038 Tel: (703) 621-1700 Fax: (703) 621-1701 Website: www.ncst.com Contract Number: GS-35F-4677G – Option Year 3 Period Covered by Contract: May 15, 1997 through May 14, 2017 GENERAL SERVICE ADMINISTRATION FEDERAL ACQUISTIION SERVICE Products and ordering information in this Authorized FAS IT Schedule Price List is also available on the GSA Advantage! System. Agencies can browse GSA Advantage! By accessing GSA’s Home Page via Internet at www.gsa.gov. TABLE OF CONTENTS INFORMATION FOR ORDERING OFFICES ............................................................................................................................................................................................................................... TC-1 SPECIAL NOTICE TO AGENCIES – SMALL BUSINESS PARTICIPATION 1. Geographical Scope of Contract ............................................................................................................................................................................................................................. -

ATI Radeon™ HD 4870 Computation Highlights

AMD Entering the Golden Age of Heterogeneous Computing Michael Mantor Senior GPU Compute Architect / Fellow AMD Graphics Product Group [email protected] 1 The 4 Pillars of massively parallel compute offload •Performance M’Moore’s Law Î 2x < 18 Month s Frequency\Power\Complexity Wall •Power Parallel Î Opportunity for growth •Price • Programming Models GPU is the first successful massively parallel COMMODITY architecture with a programming model that managgped to tame 1000’s of parallel threads in hardware to perform useful work efficiently 2 Quick recap of where we are – Perf, Power, Price ATI Radeon™ HD 4850 4x Performance/w and Performance/mm² in a year ATI Radeon™ X1800 XT ATI Radeon™ HD 3850 ATI Radeon™ HD 2900 XT ATI Radeon™ X1900 XTX ATI Radeon™ X1950 PRO 3 Source of GigaFLOPS per watt: maximum theoretical performance divided by maximum board power. Source of GigaFLOPS per $: maximum theoretical performance divided by price as reported on www.buy.com as of 9/24/08 ATI Radeon™HD 4850 Designed to Perform in Single Slot SP Compute Power 1.0 T-FLOPS DP Compute Power 200 G-FLOPS Core Clock Speed 625 Mhz Stream Processors 800 Memory Type GDDR3 Memory Capacity 512 MB Max Board Power 110W Memory Bandwidth 64 GB/Sec 4 ATI Radeon™HD 4870 First Graphics with GDDR5 SP Compute Power 1.2 T-FLOPS DP Compute Power 240 G-FLOPS Core Clock Speed 750 Mhz Stream Processors 800 Memory Type GDDR5 3.6Gbps Memory Capacity 512 MB Max Board Power 160 W Memory Bandwidth 115.2 GB/Sec 5 ATI Radeon™HD 4870 X2 Incredible Balance of Performance,,, Power, Price -

Does Your Hardware Support Opencl? - 12-29-2011 by Vincent - Streamcomputing

Does your hardware support OpenCL? - 12-29-2011 by vincent - StreamComputing - http://streamcomputing.eu Does your hardware support OpenCL? by vincent – Thursday, December 29, 2011 http://streamcomputing.eu/blog/2011-12-29/opencl-hardware-support/ Does your computer have OpenCL-capable hardware? Read on and find out… For people who only want to run OpenCL-software and have recent hardware, just read this paragraph. If you have recent drivers for your GPU, you can be sure OpenCL is already supported and you can run OpenCL-capable software. NVidia has support for OpenCL 1.1 since drivers 280.13, so if you need OpenCL 1.1, then make sure you have this version or later. If you want to use Intel-processors and you don’t have an AMD GPU installed, you need to download the runtime of Intel OpenCL. If you want to know if your X86 device is supported, you’ll find answers in this article. Often it is not clear how OpenCL works on CPUs. If you have a 8 core processor with double threading, then it mostly is understood that 16 pipelines of instructions are possible. OpenCL takes care of this threading, but also uses parallelism provided by SSE and AVX extension. I talked more about this here and here. Meaning that an 8-core processor with AVX can compute 8 times 32 bytes (8*8 floats or 8*4 doubles) in parallel. You could see it as parallelism of parallelism. SSE is designed with multimedia-operations in mind, but has enough to be used with OpenCL. -

Data Sheet: Quadro GV100

REINVENTING THE WORKSTATION WITH REAL-TIME RAY TRACING AND AI NVIDIA QUADRO GV100 The Power To Accelerate AI- FEATURES > Four DisplayPort 1.4 Enhanced Workflows Connectors3 The NVIDIA® Quadro® GV100 reinvents the workstation > DisplayPort with Audio to meet the demands of AI-enhanced design and > 3D Stereo Support with Stereo Connector3 visualization workflows. It’s powered by NVIDIA Volta, > NVIDIA GPUDirect™ Support delivering extreme memory capacity, scalability, and > NVIDIA NVLink Support1 performance that designers, architects, and scientists > Quadro Sync II4 Compatibility need to create, build, and solve the impossible. > NVIDIA nView® Desktop SPECIFICATIONS Management Software GPU Memory 32 GB HBM2 Supercharge Rendering with AI > HDCP 2.2 Support Memory Interface 4096-bit > Work with full fidelity, massive datasets 5 > NVIDIA Mosaic Memory Bandwidth Up to 870 GB/s > Enjoy fluid visual interactivity with AI-accelerated > Dedicated hardware video denoising encode and decode engines6 ECC Yes NVIDIA CUDA Cores 5,120 Bring Optimal Designs to Market Faster > Work with higher fidelity CAE simulation models NVIDIA Tensor Cores 640 > Explore more design options with faster solver Double-Precision Performance 7.4 TFLOPS performance Single-Precision Performance 14.8 TFLOPS Enjoy Ultimate Immersive Experiences Tensor Performance 118.5 TFLOPS > Work with complex, photoreal datasets in VR NVIDIA NVLink Connects 2 Quadro GV100 GPUs2 > Enjoy optimal NVIDIA Holodeck experience NVIDIA NVLink bandwidth 200 GB/s Realize New Opportunities with AI -

SIMD Extensions

SIMD Extensions PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Sat, 12 May 2012 17:14:46 UTC Contents Articles SIMD 1 MMX (instruction set) 6 3DNow! 8 Streaming SIMD Extensions 12 SSE2 16 SSE3 18 SSSE3 20 SSE4 22 SSE5 26 Advanced Vector Extensions 28 CVT16 instruction set 31 XOP instruction set 31 References Article Sources and Contributors 33 Image Sources, Licenses and Contributors 34 Article Licenses License 35 SIMD 1 SIMD Single instruction Multiple instruction Single data SISD MISD Multiple data SIMD MIMD Single instruction, multiple data (SIMD), is a class of parallel computers in Flynn's taxonomy. It describes computers with multiple processing elements that perform the same operation on multiple data simultaneously. Thus, such machines exploit data level parallelism. History The first use of SIMD instructions was in vector supercomputers of the early 1970s such as the CDC Star-100 and the Texas Instruments ASC, which could operate on a vector of data with a single instruction. Vector processing was especially popularized by Cray in the 1970s and 1980s. Vector-processing architectures are now considered separate from SIMD machines, based on the fact that vector machines processed the vectors one word at a time through pipelined processors (though still based on a single instruction), whereas modern SIMD machines process all elements of the vector simultaneously.[1] The first era of modern SIMD machines was characterized by massively parallel processing-style supercomputers such as the Thinking Machines CM-1 and CM-2. These machines had many limited-functionality processors that would work in parallel. -

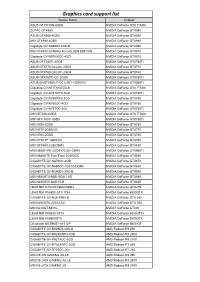

Graphics Card Support List

Graphics card support list Device Name Chipset ASUS GTXTITAN-6GD5 NVIDIA GeForce GTX TITAN ZOTAC GTX980 NVIDIA GeForce GTX980 ASUS GTX980-4GD5 NVIDIA GeForce GTX980 MSI GTX980-4GD5 NVIDIA GeForce GTX980 Gigabyte GV-N980D5-4GD-B NVIDIA GeForce GTX980 MSI GTX970 GAMING 4G GOLDEN EDITION NVIDIA GeForce GTX970 Gigabyte GV-N970IXOC-4GD NVIDIA GeForce GTX970 ASUS GTX780TI-3GD5 NVIDIA GeForce GTX780Ti ASUS GTX770-DC2OC-2GD5 NVIDIA GeForce GTX770 ASUS GTX760-DC2OC-2GD5 NVIDIA GeForce GTX760 ASUS GTX750TI-OC-2GD5 NVIDIA GeForce GTX750Ti ASUS ENGTX560-Ti-DCII/2D1-1GD5/1G NVIDIA GeForce GTX560Ti Gigabyte GV-NTITAN-6GD-B NVIDIA GeForce GTX TITAN Gigabyte GV-N78TWF3-3GD NVIDIA GeForce GTX780Ti Gigabyte GV-N780WF3-3GD NVIDIA GeForce GTX780 Gigabyte GV-N760OC-4GD NVIDIA GeForce GTX760 Gigabyte GV-N75TOC-2GI NVIDIA GeForce GTX750Ti MSI NTITAN-6GD5 NVIDIA GeForce GTX TITAN MSI GTX 780Ti 3GD5 NVIDIA GeForce GTX780Ti MSI N780-3GD5 NVIDIA GeForce GTX780 MSI N770-2GD5/OC NVIDIA GeForce GTX770 MSI N760-2GD5 NVIDIA GeForce GTX760 MSI N750 TF 1GD5/OC NVIDIA GeForce GTX750 MSI GTX680-2GB/DDR5 NVIDIA GeForce GTX680 MSI N660Ti-PE-2GD5-OC/2G-DDR5 NVIDIA GeForce GTX660Ti MSI N680GTX Twin Frozr 2GD5/OC NVIDIA GeForce GTX680 GIGABYTE GV-N670OC-2GD NVIDIA GeForce GTX670 GIGABYTE GV-N650OC-1GI/1G-DDR5 NVIDIA GeForce GTX650 GIGABYTE GV-N590D5-3GD-B NVIDIA GeForce GTX590 MSI N580GTX-M2D15D5/1.5G NVIDIA GeForce GTX580 MSI N465GTX-M2D1G-B NVIDIA GeForce GTX465 LEADTEK GTX275/896M-DDR3 NVIDIA GeForce GTX275 LEADTEK PX8800 GTX TDH NVIDIA GeForce 8800GTX GIGABYTE GV-N26-896H-B -

NVIDIA Quadro RTX for V-Ray Next

NVIDIA QUADRO RTX V-RAY NEXT GPU Image courtesy of © Dabarti Studio, rendered with V-Ray GPU Quadro RTX Accelerates V-Ray Next GPU Rendering Solutions for V-Ray Next GPU V-Ray Next GPU taps into the power of NVIDIA® Quadro® NVIDIA Quadro® provides a wide range of RTX-enabled RTX™ to speed up production rendering with dedicated RT solutions for desktop, mobile, server-based rendering, and Cores for ray tracing and Tensor Cores for AI-accelerated virtual workstations with NVIDIA Quadro Virtual Data denoising.¹ With up to 18X faster rendering than CPU-based Center Workstation (Quadro vDWS) software.2 With up to 96 solutions and enhanced performance with NVIDIA NVLink™, gigabytes (GB) of GPU memory available,3 Quadro RTX V-Ray Next GPU with RTX support provides incredible provides the power you need for the largest professional performance improvements for your rendering workloads. graphics and rendering workloads. “ Accelerating artist productivity is always our top Benchmark: V-Ray Next GPU Rendering Performance Increase on Quadro RTX GPUs priority, so we’re quick to take advantage of the latest ray-tracing hardware breakthroughs. By Quadro RTX 6000 x2 1885 ™ Quadro RTX 6000 104 supporting NVIDIA RTX in V-Ray GPU, we’re Quadro RTX 4000 783 bringing our customers an exciting new boost in PU 1 0 2 4 6 8 10 12 14 16 18 20 their GPU production rendering speeds.” Relatve Performance – Phillip Miller, Vice President, Product Management, Chaos Group Desktop performance Tests run on 1x Xeon old 6154 3 Hz (37 Hz Turbo), 64 B DDR4 RAM Wn10x64 Drver verson 44128 Performance results may vary dependng on the scene NVIDIA Quadro professional graphics solutions are verified and recommended for the most demanding projects by Chaos Group. -

GPU Architecture • Display Controller • Designing for Safety • Vision Processing

i.MX 8 GRAPHICS ARCHITECTURE FTF-DES-N1940 RAFAL MALEWSKI HEAD OF GRAPHICS TECH ENGINEERING CENTER MAY 18, 2016 PUBLIC USE AGENDA • i.MX 8 Series Scalability • GPU Architecture • Display Controller • Designing for Safety • Vision Processing 1 PUBLIC USE #NXPFTF 1 PUBLIC USE #NXPFTF i.MX is… 2 PUBLIC USE #NXPFTF SoC Scalability = Investment Re-Use Replace the Chip a Increase capability. (Pin, Software, IP compatibility among parts) 3 PUBLIC USE #NXPFTF i.MX 8 Series GPU Cores • Dual Core GPU Up to 4 displays Accelerated ARM Cores • 16 Vec4 Shaders Vision Cortex-A53 | Cortex-A72 8 • Up to 128 GFLOPS • 64 execution units 8QuadMax 8 • Tessellation/Geometry total pixels Shaders Pin Compatibility Pin • Dual Core GPU Up to 4 displays Accelerated • 8 Vec4 Shaders Vision 4 • Up to 64 GFLOPS • 32 execution units 4 total pixels 8QuadPlus • Tessellation/Geometry Compatibility Software Shaders • Dual Core GPU Up to 4 displays Accelerated • 8 Vec4 Shaders Vision 4 • Up to 64 GFLOPS 4 • 32 execution units 8Quad • Tessellation/Geometry total pixels Shaders • Single Core GPU Up to 2 displays Accelerated • 8 Vec4 Shaders 2x 1080p total Vision • Up to 64 GFLOPS pixels Compatibility Pin 8 • 32 execution units 8Dual8Solo • Tessellation/Geometry Shaders • Single Core GPU Up to 2 displays Accelerated • 4 Vec4 Shaders 2x 1080p total Vision • Up to 32 GFLOPS pixels 4 • 16 execution units 8DualLite8Solo • Tessellation/Geometry Shaders 4 PUBLIC USE #NXPFTF i.MX 8 Series – The Doubles GPU Cores • Dual Core GPU Up to 4 displays Accelerated • 16 Vec4 Shaders Vision -

GPU-Based Deep Learning Inference

Whitepaper GPU-Based Deep Learning Inference: A Performance and Power Analysis November 2015 1 Contents Abstract ......................................................................................................................................................... 3 Introduction .................................................................................................................................................. 3 Inference versus Training .............................................................................................................................. 4 GPUs Excel at Neural Network Inference ..................................................................................................... 5 Inference Optimizations in Caffe and cuDNN 4 ........................................................................................ 5 Experimental Setup and Testing Methodology ........................................................................................ 7 Inference on Small and Large GPUs .......................................................................................................... 8 Conclusion ................................................................................................................................................... 10 References .................................................................................................................................................. 10 2 Abstract Deep learning methods are revolutionizing various areas of machine perception. On a