DXOMARK Objective Video Quality Measurements


Recommended publications


Breaking Down the “Cosine Fourth Power Law”

By Ronian Siew, inopticalsolutions.com

Why are the corners of the field of view in the image captured by a camera lens usually darker than the center? For one thing, camera lenses by design often introduce “vignetting” into the image, which is the deliberate clipping of rays at the corners of the field of view in order to cut away excessive lens aberrations. But, it is also known that corner areas in an image can get dark even without vignetting, due in part to the so-called “cosine fourth power law.” [1] According to this “law,” when a lens projects the image of a uniform source onto a screen, in the absence of vignetting, the illumination flux density (i.e., the optical power per unit area) across the screen from the center to the edge varies according to the fourth power of the cosine of the angle between the optic axis and the oblique ray striking the screen. Actually, optical designers know this “law” does not apply generally to all lens conditions. [2–10] Fundamental principles of optical radiative flux transfer in lens systems allow one to tune the illumination distribution across the image by varying lens design characteristics. In this article, we take a tour into the fascinating physics governing the illumination of images in lens systems.

Relative Illumination In Lens Systems

In lens design, one characterizes the illumination distribution across the screen where the image resides in terms of a quantity known as the lens’ relative illumination: the ratio of the irradiance (i.e., the power per unit area) at any off-axis position of the image to the irradiance at the center of the image.
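As a rough numerical illustration of the cosine-fourth approximation described above, the following Python sketch tabulates the predicted relative illumination at a few field angles. The function name and the sample angles are illustrative assumptions, not taken from the article, and, as the excerpt itself stresses, real lenses can deviate substantially from this idealized curve.

```python
import math


def cos4_relative_illumination(field_angle_deg: float) -> float:
    """Relative illumination predicted by the idealized cosine-fourth approximation.

    field_angle_deg is the angle between the optic axis and the oblique ray
    striking the image plane. Vignetting and lens-specific effects are ignored.
    """
    return math.cos(math.radians(field_angle_deg)) ** 4


if __name__ == "__main__":
    # Hypothetical sample angles, chosen only to show the fall-off trend.
    for angle in (0, 10, 20, 30, 40):
        ratio = cos4_relative_illumination(angle)
        print(f"{angle:2d} deg -> relative illumination {ratio:.3f}")
```

Under this approximation the on-axis value is 1 by construction, and the ratio drops quickly as the field angle grows.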

Denver Cmc Photography Section Newsletter

MARCH 2018
DENVER CMC PHOTOGRAPHY SECTION NEWSLETTER

Wednesday, March 14: CONNIE RUDD, Photography with a Purpose

2018 Monthly Meetings
2nd Wednesday of the month, 7:00 p.m.
AMC, 710 10th St. #200, Golden, CO
$20 Annual Dues

Meeting WEDNESDAY, March 14, 7:00 p.m.

March Meeting
Join us Wednesday, March 14, from 7:00 to 9:00 p.m. for our meeting. CONNIE RUDD will present Photography with a Purpose: Conservation Photography that not only inspires, but can also tip the balance in favor of the protection of public lands.

For our meeting on March 14, each member may submit two images from National Parks anywhere in the country. Please submit images to Janice Bennett, [email protected] by Tuesday, March 13. Please see the next page for more information about Connie Rudd.

Steering Committee
• Frank Burzynski, CMC Liaison, [email protected]
• Jao van de Lagemaat, Education Coordinator, [email protected]
• Janice Bennett, Newsletter and Communication Coordinator, [email protected]
• Ron Hileman, Hike and Event Coordinator, [email protected]
• Selma Kristel, Presentation Coordinator, [email protected]
• Alex Clymer, Social Media Coordinator, [email protected]
• Mark Haugen, Facilities Coordinator, [email protected]
• CMC Photo Section Email, [email protected]

No Slide Title

December 2007 Updated 12/17/07 AT&T Mobility December ~ Washington Government WSCA – AT&T Mobility Device and Rate Plan Update for 2007 All Offers for Government Use ONLY On the attached pages you will find updates to the December WSCA pricing for Equipment and Rate Plans. Table of Contents Page 2: Voice Rate Plans Page 3: FREE Cellular Phones Page 4: Cellular Phones Page 5: BlackBerry Devices Page 6: SmartPhones (Microsoft, Nokia, Palm) Page 7: Push-to-Talk Devices Page 8: Aircards Page 9: Accessories Page 10: Nationwide Coverage Map December Highlights: ¾New Blackberry 8310 Curve! ~ Onboard GPS ~ Available in Titanium & Red! ¾ New Samsung Blackjack 2 – GPS ~ Available in Black & Red! ¾ Air Card Promotion: Sierra AC881 & Option GT Max FREE! *Certain devices may not be shown due to policy or otherwise WSCA Pricing. For more Information Contact: Prices and Promotions subject to change without notice Rob Holden All Offers for Government Use ONLY 425-580-7741 Master Price Agreement: T07-MST-069 [email protected] All plans receive WSCA 20% discount on monthly recurring service charges December 2007 December 2007 Updated 12/17/07 AT&T Mobility Oregon Government WSCA All plans receive 20% additional discount off of monthly recurring charges! AT&T Mobility Calling Plans REGIONAL Plan NATION Plans (Free Roaming and Long Distance Nationwide) Monthly Fee $9.99 (Rate Code ODNBRDS11) $39.99 $59.99 $79.99 $99.99 $149.99 Included mins 0 450 900 1,350 2,000 4,000 5000 N & W, Unlimited Nights & Weekends, Unlimited Mobile to 1000 Mobile to Mobile Unlim -

Completing a Photography Exhibit Data Tag

Current Data Tags are available at: https://unl.box.com/s/1ttnemphrd4szykl5t9xm1ofiezi86js

Camera Make & Model: Indicate the brand and model of the camera, such as Google Pixel 2, Nikon Coolpix B500, or Canon EOS Rebel T7.

Focus Type:
• Fixed Focus means the photographer is not able to adjust the focal point. These cameras tend to have a large depth of field. This might include basic disposable cameras.
• Auto Focus means the camera automatically adjusts the optics in the lens to bring the subject into focus. The camera typically selects what to focus on. The photographer may also be able to select the focal point, for example by using a touch screen, but the camera still adjusts the lens automatically. This might include digital cameras and mobile device cameras, such as phones and tablets.
• Manual Focus allows the photographer to adjust and control the lens’ focus by hand, usually by turning the focus ring.

Camera Type: Indicate whether the camera is digital or film.

(The following questions are for Unit 2 and 3 exhibitors only.)

Did you manually adjust the aperture, shutter speed, or ISO? Indicate whether you adjusted these settings to capture the photo.

Note: Regardless of whether you adjusted these settings manually, you must still identify the image’s specific F Stop, Shutter Speed, ISO, and Focal Length settings. “Auto” is not an acceptable answer. Digital cameras automatically record this information for each photo captured. This information, referred to as metadata, is attached to the image file and stays with it when, for example, the image is downloaded to a computer.

What Resolution Should Your Images Be?

The best way to determine the optimum resolution is to think about the final use of your images. For publication you’ll need the highest resolution, for desktop printing lower, and for web or classroom use, lower still. The following table is a general guide; detailed explanations follow.

| Use | Pixel Size | Resolution | Preferred File Format | Approx. File Size |
|---|---|---|---|---|
| Projected in class | About 1024 pixels wide for a horizontal image; or 768 pixels high for a vertical one | 102 DPI | JPEG | 300–600 K |
| Web site | About 400–600 pixels wide for a large image; 100–200 for a thumbnail image | 72 DPI | JPEG | 20–200 K |
| Printed in a book or art magazine | Multiply intended print size by resolution; e.g. an image to be printed as 6” W x 4” H would be 1800 x 1200 pixels. | 300 DPI | EPS or TIFF | 6–10 MB |
| Printed on a laserwriter | Multiply intended print size by resolution; e.g. an image to be printed as 6” W x 4” H would be 1200 x 800 pixels. | 200 DPI | EPS or TIFF | 2–3 MB |

Digital Camera Photos

Digital cameras have a range of preset resolutions which vary from camera to camera.

| Designation | Resolution | Max. Image size at 300 DPI | Printable size on a color printer |
|---|---|---|---|
| 4 Megapixels | 2272 x 1704 pixels | 7.5” x 5.7” | 12” x 9” |
| 3 Megapixels | 2048 x 1536 pixels | 6.8” x 5” | 11” x 8.5” |
| 2 Megapixels | 1600 x 1200 pixels | 5.3” x 4” | 6” x 4” |
| 1 Megapixel | 1024 x 768 pixels | 3.5” x 2.5” | 5” x 3 |

If you can, you generally want to shoot larger than you need, then sharpen the image and reduce its size in Photoshop.
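The "multiply intended print size by resolution" rule from the guide above can be written as a short Python sketch. The function names are illustrative assumptions rather than anything from the original guide; the example values are the 6” x 4” print at 300 and 200 DPI quoted in the text.

```python
def pixels_for_print(width_in: float, height_in: float, dpi: int) -> tuple[int, int]:
    """Pixel dimensions needed to print an image at the given size and resolution."""
    return round(width_in * dpi), round(height_in * dpi)


def print_size_inches(width_px: int, height_px: int, dpi: int) -> tuple[float, float]:
    """Largest print size (in inches) a given pixel count supports at the given resolution."""
    return width_px / dpi, height_px / dpi


if __name__ == "__main__":
    # The guide's example: a 6" W x 4" H print for a book (300 DPI) or a laser printer (200 DPI).
    print(pixels_for_print(6, 4, 300))
    print(pixels_for_print(6, 4, 200))
    # Going the other way: how large a 4-megapixel frame can be printed at 300 DPI.
    width, height = print_size_inches(2272, 1704, 300)
    print(f'{width:.1f}" x {height:.1f}"')
```

The second helper runs the same arithmetic in reverse, which is how the "Max. Image size at 300 DPI" figures for each camera resolution can be checked.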

RADA Sense Mobile Application End-User Licence Agreement

RADA Sense Mobile Application End-User Licence Agreement PLEASE READ THESE LICENCE TERMS CAREFULLY BY CONTINUING TO USE THIS APP YOU AGREE TO THESE TERMS WHICH WILL BIND YOU. IF YOU DO NOT AGREE TO THESE TERMS, PLEASE IMMEDIATELY DISCONTINUE USING THIS APP. WHO WE ARE AND WHAT THIS AGREEMENT DOES We Kohler Mira Limited of Cromwell Road, Cheltenham, GL52 5EP license you to use: • Rada Sense mobile application software, the data supplied with the software, (App) and any updates or supplements to it. • The service you connect to via the App and the content we provide to you through it (Service). as permitted in these terms. YOUR PRIVACY Under data protection legislation, we are required to provide you with certain information about who we are, how we process your personal data and for what purposes and your rights in relation to your personal data and how to exercise them. This information is provided in https://www.radacontrols.com/en/privacy/ and it is important that you read that information. Please be aware that internet transmissions are never completely private or secure and that any message or information you send using the App or any Service may be read or intercepted by others, even if there is a special notice that a particular transmission is encrypted. APPLE APP STORE’S TERMS ALSO APPLY The ways in which you can use the App and Documentation may also be controlled by the Apple App Store’s rules and policies https://www.apple.com/uk/legal/internet-services/itunes/uk/terms.html and Apple App Store’s rules and policies will apply instead of these terms where there are differences between the two. -

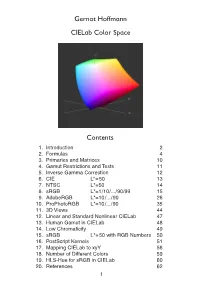

Cielab Color Space

Gernot Hoffmann

Contents
1. Introduction
2. Formulas
3. Primaries and Matrices
4. Gamut Restrictions and Tests
5. Inverse Gamma Correction
6. CIE L*=50
7. NTSC L*=50
8. sRGB L*=1/10/.../90/99
9. AdobeRGB L*=10/.../90
10. ProPhotoRGB L*=10/.../90
11. 3D Views
12. Linear and Standard Nonlinear CIELab
13. Human Gamut in CIELab
14. Low Chromaticity
15. sRGB L*=50 with RGB Numbers
16. PostScript Kernels
17. Mapping CIELab to xyY
18. Number of Different Colors
19. HLS-Hue for sRGB in CIELab
20. References

1.1 Introduction

CIE XYZ is an absolute color space (not device dependent). Each visible color has non-negative coordinates X,Y,Z. CIE xyY, the horseshoe diagram as shown below, is a perspective projection of XYZ coordinates onto a plane xy. The luminance is missing. CIELab is a nonlinear transformation of XYZ into coordinates L*,a*,b*. The gamut for any RGB color system is a triangle in the CIE xyY chromaticity diagram, here shown for the CIE primaries, the NTSC primaries, the Rec.709 primaries (which are also valid for sRGB and therefore for many PC monitors) and the non-physical working space ProPhotoRGB. The white points are individually defined for the color spaces. The CIELab color space was intended for equal perceptual differences for equal changes in the coordinates L*,a* and b*. Color differences deltaE are defined as Euclidean distances in CIELab. This document shows color charts in CIELab for several RGB color spaces.
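Since the excerpt defines the color difference deltaE as a Euclidean distance in CIELab, a minimal Python sketch of that distance may help. The function name and the two sample colors are hypothetical, chosen only for illustration; this is the plain Euclidean form the text describes, not any later weighted variant.

```python
import math


def delta_e(lab1: tuple[float, float, float], lab2: tuple[float, float, float]) -> float:
    """Euclidean distance between two colors given as (L*, a*, b*) triples."""
    return math.dist(lab1, lab2)


if __name__ == "__main__":
    # Two hypothetical colors with the same lightness L*=50 and different a*, b*.
    gray = (50.0, 0.0, 0.0)
    tinted = (50.0, 3.0, 4.0)
    print(delta_e(gray, tinted))
```

Because the two sample colors share the same L*, the distance here reduces to the length of the (a*, b*) offset.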

Sample Manuscript Showing Specifications and Style

Information capacity: a measure of potential image quality of a digital camera

Frédéric Cao, Frédéric Guichard, Hervé Hornung
DxO Labs, 3 rue Nationale, 92100 Boulogne Billancourt, FRANCE

ABSTRACT

The aim of the paper is to define an objective measurement for evaluating the performance of a digital camera. The challenge is to mix different flaws involving geometry (such as distortion or lateral chromatic aberrations), light (such as luminance and color shading), or statistical phenomena (such as noise). We introduce the concept of information capacity that accounts for all the main defects that can be observed in digital images, and that can be due either to the optics or to the sensor. The information capacity describes the potential of the camera to produce good images. In particular, digital processing can correct some flaws (like distortion). Our definition of information takes possible correction into account and the fact that processing can neither retrieve lost information nor create some. This paper extends some of our previous work where the information capacity was only defined for RAW sensors. The concept is extended for cameras with optical defects such as distortion, lateral and longitudinal chromatic aberration or lens shading.

Keywords: digital photography, image quality evaluation, optical aberration, information capacity, camera performance database

1. INTRODUCTION

The evaluation of a digital camera is a key factor for customers, whether they are vendors or final customers. It relies on many different factors, such as the presence or absence of certain functionalities, ergonomics, price, or image quality. Each separate criterion is itself quite complex to evaluate, and depends on many different factors. The case of image quality is a good illustration of this topic.

America's Best Deserve Our Best

Teachers and their Families America’s Best Deserve our best 25% Discount for Eligible Educators Certified/licensed K-12 classroom teachers/educators are eligible Existing customers qualify and it is for ALL the lines on your plan No Purchase Necessary Bring your Own devices to AT&T & get up to $500 in pre-paid Visa cards! Call - Text - Email Additional Promotions Authorized AT&T Retailer JP Stork *FREE Devices 720-635-6119 *FREE Wireless Charging [email protected] Pads w /3+ new phones AT&T April Promotional Pricing *No purchase necessary for 25% discount Eligible Devices: • Eligible Purchased Smartphones o iPhone XS 64GB ($900), 256GB ($1050), 512GB ($1250) o iPhone XR 64GB ($500), 128GB ($550) o iPhone 11 Pro 64GB ($900), 256GB ($1050), 512GB ($1250) o iPhone 11 Pro Max 64GB ($1000), 256GB ($1150), 512GB ($1350) o iPhone 12 mini 64GB ($700), 128GB ($750), 256GB ($850) o iPhone 12 64GB ($800), 128GB ($850), 256GB ($950) o iPhone 12 Pro 128GB ($1000), 256GB ($1100), 512GB ($1300) o iPhone 12 Pro Max 128GB ($1100), 256GB ($1200), 512GB ($1400) • Eligible Trade-in Smartphones: o To qualify for $700 credit, minimum Trade-In value must be $95 or higher after device condition questions have been answered o Eligible devices: ▪ Apple: 8, 8 Plus, X, XR, XS, XS Max, 11, 11 Pro, 11 Pro Max, 12, 12 mini, 12 Pro, 12 Pro Max ▪ Samsung: A71, A71 5G, Fold, Z Fold2 5G, Galaxy S9, Galaxy S9+, Galaxy S9+ Duos, Galaxy S10, Galaxy S10+, Galaxy S10 5G, Galaxy S10e, Galaxy S10 Lite, Galaxy S20, Galaxy S20 Ultra 5G, Galaxy S20+ 5G, Note9, Note10, Note10+, -

Seeing Like Your Camera ○ My List of Specific Videos I Recommend for Homework I.E

Accessing Lynda.com ● Free to Mason community ● Set your browser to lynda.gmu.edu ○ Log-in using your Mason ID and Password ● Playlists Seeing Like Your Camera ○ My list of specific videos I recommend for homework i.e. pre- and post-session viewing.. PART 2 - FALL 2016 ○ Clicking on the name of the video segment will bring you immediately to Lynda.com (or the login window) Stan Schretter ○ I recommend that you eventually watch the entire video class, since we will only use small segments of each video class [email protected] 1 2 Ways To Take This Course What Creates a Photograph ● Each class will cover on one or two topics in detail ● Light ○ Lynda.com videos cover a lot more material ○ I will email the video playlist and the my charts before each class ● Camera ● My Scale of Value ○ Maximum Benefit: Review Videos Before Class & Attend Lectures ● Composition & Practice after Each Class ○ Less Benefit: Do not look at the Videos; Attend Lectures and ● Camera Setup Practice after Each Class ○ Some Benefit: Look at Videos; Don’t attend Lectures ● Post Processing 3 4 This Course - “The Shot” This Course - “The Shot” ● Camera Setup ○ Exposure ● Light ■ “Proper” Light on the Sensor ■ Depth of Field ■ Stop or Show the Action ● Camera ○ Focus ○ Getting the Color Right ● Composition ■ White Balance ● Composition ● Camera Setup ○ Key Photographic Element(s) ○ Moving The Eye Through The Frame ■ Negative Space ● Post Processing ○ Perspective ○ Story 5 6 Outline of This Class Class Topics PART 1 - Summer 2016 PART 2 - Fall 2016 ● Topic 1 ○ Review of Part 1 ● Increasing Your Vision ● Brief Review of Part 1 ○ Shutter Speed, Aperture, ISO ○ Shutter Speed ● Seeing The Light ○ Composition ○ Aperture ○ Color, dynamic range, ● Topic 2 ○ ISO and White Balance histograms, backlighting, etc. -

Foveon FO18-50-F19 4.5 MP X3 Direct Image Sensor

® Foveon FO18-50-F19 4.5 MP X3 Direct Image Sensor Features The Foveon FO18-50-F19 is a 1/1.8-inch CMOS direct image sensor that incorporates breakthrough Foveon X3 technology. Foveon X3 direct image sensors Foveon X3® Technology • A stack of three pixels captures superior color capture full-measured color images through a unique stacked pixel sensor design. fidelity by measuring full color at every point By capturing full-measured color images, the need for color interpolation and in the captured image. artifact-reducing blur filters is eliminated. The Foveon FO18-50-F19 features the • Images have improved sharpness and immunity to color artifacts (moiré). powerful VPS (Variable Pixel Size) capability. VPS provides the on-chip capabil- • Foveon X3 technology directly converts light ity of grouping neighboring pixels together to form larger pixels that are optimal of all colors into useful signal information at every point in the captured image—no light for high frame rate, reduced noise, or dual mode still/video applications. Other absorbing filters are used to block out light. advanced features include: low fixed pattern noise, ultra-low power consumption, Variable Pixel Size (VPS) Capability and integrated digital control. • Neighboring pixels can be grouped together on-chip to obtain the effect of a larger pixel. • Enables flexible video capture at a variety of resolutions. • Enables higher ISO mode at lower resolutions. Analog Biases Row Row Digital Supplies • Reduces noise by combining pixels. Pixel Array Analog Supplies Readout Reset 1440 columns x 1088 rows x 3 layers On-Chip A/D Conversion Control Control • Integrated 12-bit A/D converter running at up to 40 MHz. -

Aperture, Exposure, and Equivalent Exposure Aperture

Aperture, Exposure, and Equivalent Exposure Aperture Also known as f-stop Aperture Controls opening’s size during exposure Another term for aperture: f-stop Controls Depth of Field Each full stop on the aperture (f-stop) either doubles or halves the amount of light let into the camera Light is halved this direction Light is doubled this direction The Camera/Eye Comparison Aperture = Camera body = Pupil Shutter = Eyeball Eyelashes Lens Iris diaphragm = Film = Iris Light sensitive retina Aperture and Depth of Field Depth of Field • The zone of sharpness variable by aperture, focal length, or subject distance f/22 f/8 f/4 f/2 Large Depth of Field Shot at f/22 Jacob Blade Shot at f/64 Ansel Adams Shallow Depth of Field Shot at f/4 Keely Nagel Shot at f/5.6 How is a darkroom test strip like a camera’s light meter? They both tell how much light is being allowed into an exposure and help you to pick the correct amount of light using your aperture and proper time (either timer or shutter speed) This is something called Equivalent Exposure Which will be explained now… What we will discuss • Exposure • Equivalent Exposure • Why is equivalent exposure important? Photography – Greek photo = light graphy = writing What is an exposure? Which one is properly exposed and what happened to the others? A B C Under Exposed A Over Exposed B Properly Exposed C Exposure • Combined effect of volume of light hitting the film or sensor and its duration. • Volume is controlled by the aperture (f-stop) • Duration (time) is controlled by the shutter speed Equivalent
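The slides above state that each full stop doubles or halves the light, and that exposure combines the volume of light (aperture) with its duration (shutter speed). A small Python sketch can make the idea of equivalent exposure concrete. It assumes the standard relation that exposure is proportional to shutter time divided by the square of the f-number, which is not spelled out in the slides; the function names and the sample settings are likewise illustrative.

```python
import math


def relative_exposure(f_number: float, shutter_s: float) -> float:
    """Relative exposure, assuming exposure is proportional to time / f_number**2."""
    return shutter_s / f_number ** 2


def stops_difference(setting_a: tuple[float, float], setting_b: tuple[float, float]) -> float:
    """Stops by which setting_a admits more light than setting_b (0.0 means equivalent).

    Each setting is an (f_number, shutter_seconds) pair.
    """
    return math.log2(relative_exposure(*setting_a) / relative_exposure(*setting_b))


if __name__ == "__main__":
    # Hypothetical settings: opening up two stops (f/8 -> f/4) while making
    # the shutter four times faster keeps the exposure the same.
    print(stops_difference((8.0, 1 / 60), (4.0, 1 / 240)))
    # Opening up one stop (f/8 -> f/5.6 nominal) at the same shutter speed.
    print(stops_difference((5.6, 1 / 60), (8.0, 1 / 60)))
```

Nominal f-numbers such as f/5.6 are rounded, so the second comparison comes out close to, but not exactly, one stop.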