A Bayesian Analysis of Early Dismissals in Cricket

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications


Life on a Wanderers Green Mamba Can Be Fun

Monday 28th September, 2009

It is all about technique and adapting to conditions...

by Trevor Chesterfield

There has always been something sporty about the Wanderers pitch. And history as well as tradition should have prepared Sri Lanka for this small fact of life.

So far, there has been vociferous grumbling, feet stamping and any number of misguided accusations over Sri Lanka’s capitulation to England at the Wanderers on Friday. The nation’s captain, Kumar Sangakkara, complained how batting on it was like the first day of a Test.

Even a couple of family members as well as some media yokels were sounding off and which, Yahaluweni, brought a smile as one knows The Wanderers well, Sri Lanka were fortunate to have reached 212.

In the only Test the island’s team has played at the famed Illovo venue in Corlett Drive, in early November 2002, they were dismissed for 192 and 130, losing

and this time of year, it is usually very dry, although The Wanderers is subject to heavy dew as well.

The surface can be just as diabolically tricky even in January. In 1998, De Villiers and the left-arm fast bowler Greg Smith reduced Gauteng to an embarrassing rout by the Northerns Titans. Checking a report written of that SuperSport game, it shows how De Villiers, well supported with some class left-arm bowling by Smith had, in a matter of 34 balls, reduced Gauteng to 12 for seven during a spell of 34 deliveries in their second innings and all the dismissed batsmen had played for their country at some stage in their careers.

Many months later, as a build up to the Millennium Test series between South Africa and England, it had for weeks been billed as the Donald and

won the toss, came as a surprise to those who know how dodgy the surface can be for the batsmen, especially if a bowler hits the right length.

Sarwan: WICB Nails Canards

Friday 22nd April 2011

Gayle claims forced to skip Pakistan series

CASTRIES (St.

The West Indies Cricket Board issued him the necessary No Objection Certificate (NOC), but issued a media release critical of Gayle’s choice. Gayle said the dispute predates the World Cup, claiming he was threatened with exclusion from the tournament for asking whether the tournament contract was approved by the West Indies Players’ Association. “I got a reply, copied to

Beckham and swimsuit-clad Vergara debut their first ever Pepsi commercial

She has the looks and the curvaceous body that could kill, and he is one of the most famous faces in the world. So it is no wonder that Pepsi chose Modern Family star Sophia Vergara and footballer David Beckham, 35, to team up and star in their latest advertising campaign.

In the 30-second advertisement, the 38-year-old Colombian suns herself in a low-cut electric one-piece swimsuit. She spots a young girl sipping a can of Pepsi and immediately craves the soft drink. Getting a glimpse of the long line at the refreshment booth, she takes to Twitter to craftily dupe the crowd.

Immediately beach-goers hurtle away from the drink stand in a bid to find the famous footballer, making way for the stunning brunette to purchase her much needed refreshment. Getting up from her lounge, she gives viewers a glimpse of her stunning behind

Photo caption: Sophia Vergara. Dangerous curves: In the 31 second advertisement, the 38-year-old Colombian suns herself in a low-cut electric one-piece swimsuit

STILL SAINTLY AFTER ALL THESE YEARS David Wilson on the Questionable Charms of Hansie Cronje

Equipped with a theatrical streak, Hansie Cronje could recite reams of Hamlet by heart and seemed to embody the Hamlet line that reads: “One may smile, and smile, and be a villain.”

Twenty years ago, the last time the World Cup was held in the UK, Cronje committed his first striking transgression when he donned an earpiece to hear tips from coach Bob Woolmer during his side’s match against India, in leafy Hove of all places.

Only one month later, just before the epic 1999 World Cup semi-final against Australia, Cronje was unabashed by the incident, according to an Electronic Telegraph report. What’s more, he said he was glad all-rounder Lance Klusener had got his first batting failure out the way – a generous remark, as the earpiece incident sank off the radar.

7 April 2000, in a bombshell move, Delhi police charged Cronje with fixing the results of South Africa’s one-day internationals against India the previous month. On 11 April, he was sacked as captain and promptly deserted by his sponsors. He had tarnished his country and the game.

“The damage done to South African sport is already immense, and the serious inquiry into the sordid details has not even begun. Many South Africans will have woken up this morning feeling an intensely personal hurt,” wrote Mike Selvey in the Guardian. Circling back, Selvey said that across South Africa, banners professing love for Hansie would be unfurled.

In a June 2000 Observer article,

Wisden Cricketers Almanack

FIVE CRICKETERS OF THE YEAR

The Five Cricketers of the Year represent a tradition that dates back in Wisden to 1889, making this the oldest individual award in cricket. The Five are picked by the editor, and the selection is based, primarily but not exclusively, on the players’ influence on the previous English season. No one can be chosen more than once. A list of past Cricketers of the Year appears on page 1508.

Hashim Amla

NEIL MANTHORP

Hashim Amla enjoyed one of the most productive tours of England ever seen. In all three formats he was prolific, top-scoring in eight of his 11 international innings. His triple-century in the First Test at The Oval was as career-defining as it was nation-defining: he was the first South African to reach the landmark. It was an epic, and the fact that it laid the platform for a famous series win marked it out for eternal fame. By the time he added another century, in the Third Test at Lord’s, he had edged past even Jacques Kallis as the wicket England craved most.

Amla produced yet another hundred in the one-day series, at Southampton, prompting coach Gary Kirsten to purr: “The pitch was extremely awkward, the bowling very good. To make 150 out of 287 rates it very highly, probably in the top three one-day innings for South Africa.” Accolades kept coming his way as the year progressed; by the end, he had scored 1,950 runs in all internationals, at an average of nearly 63.

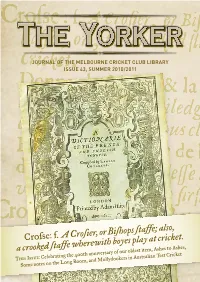

Issue 43: Summer 2010/11

Journal of the Melbourne Cricket Club Library, Issue 43, Summer 2010/2011

Cro∫se: f. A Cro∫ier, or Bi∫hops ∫taffe; also, a crooked ∫taffe wherewith boyes play at cricket.

This Issue: Celebrating the 400th anniversary of our oldest item, Ashes to Ashes, Some notes on the Long Room, and Mollydookers in Australian Test Cricket

Library News

“How do you celebrate a Quadricentennial?” With an exhibition celebrating four centuries of cricket in print

A range of articles in this edition of The Yorker complement the new exhibition commemorating the 400th anniversary of the publication of the oldest book in the MCC Library, Randle Cotgrave’s Dictionarie of the French and English tongues, published in London in 1611, the same year as the King James Bible and the premiere of Shakespeare’s last solo play, The Tempest.

The Dictionarie is a scarce book, but not especially rare.

• The famous Ashes obituaries published in Cricket, a weekly record of the game, and Sporting Times in 1882 and the verse pasted on to the Darnley Ashes Urn printed in Melbourne Punch in 1883.
• The large paper edition of W.G. Grace’s book that he presented to the Melbourne Cricket Club during his tour in

The new cataloguing team has swung into action. This year has seen a commitment

MCC Library visits MCC Library

From December 6, 2010 to February 4, 2011, staff in the MCC Library will be hosting a colleague from our reciprocal club in London, Neil Robinson, research officer at the Marylebone Cricket Club’s Arts and Library Department. This visit will be an important opportunity for both Neil’s professional development, as he observes the weekday and event day

Infographic AMA 2020

Laureus World Sports Academy Members Giacomo Agostini Rahul Dravid Chris Hoy Brian O’Driscoll Marcus Allen Morné du Plessis Miguel Indurain Gary Player Luciana Aymar Nawal El Moutawakel Michael Johnson Hugo Porta Franz Beckenbauer Missy Franklin Kip Keino Carles Puyol Boris Becker Luis Figo Franz Klammer Steve Redgrave Ian Botham Emerson Fittipaldi Lennox Lewis Vivian Richards Sergey Bubka Sean Fitzpatrick Tegla Loroupe Monica Seles Cafu Dawn Fraser Dan Marino Mark Spitz Fabian Cancellara Ryan Giggs Marvelous Marvin Hagler Sachin Tendulkar Bobby Charlton Raúl González Blanco Yao Ming Daley Thompson Sebastian Coe Tanni Grey-Thompson Edwin Moses Alberto Tomba Nadia Comaneci Ruud Gullit Li Na Francesco Totti Alessandro Del Piero Bryan Habana Robby Naish Steve Waugh Marcel Desailly Mika Hakkinen Martina Navratilova Katarina Witt Kapil Dev Tony Hawk Alexey Nemov Li Xiaopeng Mick Doohan Maria Höfl-Riesch Jack Nicklaus Deng Yaping David Douillet Mike Horn Lorena Ochoa Yang Yang Laureus Ambassadors Kurt Aeschbacher David de Rothschild Marcel Hug Garrett McNamara Pius Schwizer Cecil Afrika Jean de Villiers Benjamin Huggel Zanele Mdodana Andrii Shevchenko Ben Ainslie Deco Edith Hunkeler Sarah Meier Marcel Siem Josef Ajram Vicente del Bosque Juan Ignacio Sánchez Elana Meyer Gian Simmen Natascha Badmann Deshun Deysel Colin Jackson Meredith Yuvraj Singh Mansour Bahrami Lucas Di Grassi Butch James Michaels-Beerbaum Graeme Smith Robert Baker Daniel Dias Michael Jamieson Roger Milla Emma Snowsill Andy Barrow Valentina Diouf Marc Janko Aldo Montano Albert -

Croft Mu Apologis Lara

Wednesday 23rd January, 2008

by Anil Roberts

Former West Indies fast bowler Colin Croft has somehow been able to convince normally perceptive people at a reputable media house in T&T that his scattered thoughts, carelessly strewn on paper, are somehow worthy of publication. Over the last three weeks Mr Croft has contradicted himself as regularly as our local politicians.

It all began on April 7, 2005 with his unwarranted, stinging attack on Brian Lara's ability to lead the West Indies. In the veins of a true simpleton, his entire argument was based on the superficial statistics inherent to the win/loss column.

It is no secret that the West Indies, under Brian Lara's captaincy, has lost far more Test matches and ODIs than it has won. However, to use this simple fact to cast aspersions on Lara's character, leadership qualities and commitment is not only unfair but downright ridiculous.

The fact of the matter is that Lara has captained some of the worst teams ever assembled by the WICB. This, coupled with the vast improvements made by all opposing teams in the cricketing world during the same period, accounts for those statistics. For example, Lara captained a team which consisted of: Sherwin Campbell, Suruj Ragoonath, Lincoln Roberts and Dave Joseph as the top order against the might of Australia, with Glenn McGrath, Shane Warne, Gillespie, Mark Waugh, Steve Waugh, Mark Taylor and Ian Healy. Somehow, Brian managed a 2-2 tie in what has become known as "The Shape Up Or Ship Out series."

During his tenure, Lara has captained approximately 52 different players at different times.

During his tenure, Lara has captained mately 52 different players at differe Players of questionable competence, ab strength and character. Conversely Cliv privileged enough to only captain app 20 players during the period of his cap 1976 to 1985.

Antigua, Gordon Greenidge w selected as the Technical Dire of Bangladesh Cricket where helped them to qualify for the first-ever World Cup and laid foundation for attaining Test

P17 Layout 1

WEDNESDAY, FEBRUARY 8, 2017 SPORTS

Australia yet to work out how to stop Kohli: Lehmann

BRISBANE: Australia have yet to work out a plan to foil India captain Virat Kohli beyond bowling well and hoping for some “luck” against the in-form batsman, coach Darren Lehmann has said.

Kohli was man-of-the-series against England, plundering 655 runs off their bowlers at an average of 109.16 to comfort-

Kohli has scored six of his 15 centuries in his 12 matches against Australia, averaging 60.76 compared to his overall average of 50.10. Former Test batsman Lehmann, a member of that victorious Australian side, said his players had been watching videos of Kohli and his team mates for months but were yet to work out how to combat the

have to bowl enough good balls and that’s going to be the challenge for our spinners and for our quicks, challenging his defence and making sure he’s playing in the areas we want him to play.”

Michael Clarke’s Australia were whitewashed 4-0 during their last tour of India in 2013 where a breakdown in team discipline

years later, Lehmann said Steve Smith’s side have the bowlers to take 20 wickets against Kohli’s men.

“We’ve got spinners who can take 20 wickets and quicks who can reverse the ball,” he said. “So we’re not fearing getting the 20 wickets, we’ve just got to put enough scoreboard pressure on them.” That would mean a

bowlers, led by pacemen Glenn McGrath and Jason Gillespie, and spinner Shane Warne.

“The great thing with the Australian cricket team for years has been, backs to the wall brings the best out of players,” said Lehmann.

“Someone like Matthew Hayden will stand up or Damien Martyn will come out of nowhere and actually play well on a tour.

Roger Page Cricket Books

ROGER PAGE
DEALER IN NEW AND SECOND-HAND CRICKET BOOKS
10 EKARI COURT, YALLAMBIE, VICTORIA, 3085
TELEPHONE: (03) 9435 6332 FAX: (03) 9432 2050
EMAIL: [email protected] ABN 95 007 799 336

OCTOBER 2016 CATALOGUE

Unless otherwise stated, all books in good condition & bound in cloth boards. Books once sold cannot be returned or exchanged. G.S.T. of 10% to be added to all listed prices for purchases within Australia. Postage is charged on all orders. For parcels 1 - 2kgs. in weight, the following rates apply: within Victoria $14.00; to New South Wales & South Australia $16.00; to the Brisbane metropolitan area and to Tasmania $18.00; to other parts of Queensland $22; to Western Australia & the Northern Territory $24.00; to New Zealand $40; and to other overseas countries $50.00. Overseas remittances - bank drafts in Australian currency - should be made payable at the Commonwealth Bank, Greensborough, Victoria, 3088. Mastercard and Visa accepted. This List is a selection of current stock. Enquiries for other items are welcome. Cricket books and collections purchased.

A. ANNUALS AND PERIODICALS ($ ¢)

1. A.C.S International Cricket Year Books:
a. 1986 (1st edition) to 1995 inc. 20.00 ea
b. 2014, 2015, 2016 70.00 ea
2. Athletic News Cricket Annuals:
a. 1900, 1903 (fair condition), 1913, 1914, 1919 50.00 ea
b. 1922 to 1929 inc. 30.00 ea
c. 1930 to 1939 inc. 25.00 ea
3. Australian Cricket Digest (ed) Lawrie Colliver:
a. 2012-13, 2013-14, 2014-15 25.00 ea
b. 2015-2016 30.00 ea
4.

Extract Catalogue for Auction

Page:1 May 19, 2019

CRICKET - AUSTRALIA - 1949 Onwards

Lot 94: 1955-66 Melbourne Cricket Club membership badges complete run 1955-56 to 1965-66, all the scarce Country memberships. Very fine condition. (11) Est $A 120

Lot 95: 1960 'The Tied Test', Australia v West Indies, 1st Test at Brisbane, display comprising action picture signed by Wes Hall & Richie Benaud, limited edition 372/1000, window mounted, framed & glazed, overall 88x63cm. With CoA. Est $A 100

Lot 96: 1972-73 Jack Ryder Medal dinner menu for the inaugural medal presentation with 18 signatures including Jack Ryder, Don Bradman, Bill Ponsford, Lindsay Hassett and inaugural winner Ron Bird. Very scarce. Est $A 200

Lot 97: 1977 Centenary Test collection including Autograph Book with 254 signatures of current & former Test players including Harold Larwood, Percy Fender, Peter May, Clarrie Grimmett, Ray Lindwall, Richie Benaud, Bill Brown; entree cards (4), dinner menu, programme & book. Est $A 250

Lot 98: - Print 'Melbourne Cricket Club 1877' with c37 signatures including Harold Larwood, Denis Compton, Len Hutton, Ted Dexter & Ross Edwards; window mounted, framed & glazed, overall 75x58cm. Est $A 100

Lot 99: - Strip of 5 reserved seat tickets, one for each day of the Centenary Test, Australia v England at MCG on 12th-17th March, framed, overall 27x55cm. Plus another framed display with 9 reserved seat tickets. (2) Est $A 150

Lot 100: - 'Great Moments' poster, with signatures of captains Greg Chappell & Tony Greig, limited edition 49/550, framed & glazed, overall 112x86cm. Est $A 150

Page:2 www.abacusauctions.com.au May 19, 2019

CRICKET - AUSTRALIA - 1949 Onwards (continued)

Lot 101: 1977-86 Photographs noted Australian team photos (6) from 1977-1986; framed photos of Ray Bright (6) including press photo of Bright's 100th match for Victoria (becoming Victoria's most capped player, overtaking Bill Lawry's 99); artworks of cricketer & two-up player.

The Cricketer Annual Report & Year Book 2003-2004 Contents

The Western Cricketer Annual Report & Year Book 2003-2004

Contents

BOARD
Patron
Western Australian Cricket Association (Inc.) Board Structure
President’s Report / Board Attendance Register
Chief Executive’s Report

REPRESENTATIVE
Retravision Warriors ING Cup Winning Team
Feature Article – Paul Wilson ING Cup Final Report
Lilac Hill Report
Feature Article – Murray Goodwin and Kade Harvey
Season Review – Wayne Clark
Retravision Warriors at International Level
Feature Article – Justin Langer
Pura Cup Season Review
Pura Cup Averages
Pura Cup Scoreboards
Feature Article – Jo Angel
ING Cup Season Review

Heat on Skipper Over Breaking Covid Rules

SPORT WEDNESDAY DECEMBER 16 2020

Green light for debut

Young gun on track for Adelaide Test as Burns and Harris fight to open

BEN HORNE

CAMERON Green is set to make his debut against India in the first Test, with Joe Burns and Marcus Harris now fighting it out for the last place in Australia’s XI.

Matthew Wade is firming

the last seat on the bus.

Green will make his debut in Adelaide provided he passes final concussion tests, which at this point he is on track to do.

Langer hailed the emergence of the West Australian allrounder dubbed by Greg Chappell as Australia’s hottest

protocols and he gets through training and he’s feeling good (he will play),” said Langer.

“I saw him last night. He had a big smile on his face, he had another test this morning that we got good news on.

“He’s a terrific young bloke, he’s obviously an excellent tal-

his family.”

Langer was still backing Burns but said he would wait to watch the out of form Queenslander and Harris at training before a final call is made on who opens.

“I’ve maintained I’ve been privately and publicly backing Joey in the whole time.

we’ll have a look at him. We’ll get eyes on him today. We’ll see how he’s going. We’ll have a chat to him today and we’ll make our decision on who is going to open in the next day or so.”

The fact Green is set to

Photo caption: Cameron Green.