Towards Effective Live Cloud Migration on Public Cloud IaaS

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


News, Information, Rumors, Opinions, Etc

http://www.physics.miami.edu/~chris/srchmrk_nws.html ● Miami-Dade/Broward/Palm Beach News ❍ Miami Herald Online Sun Sentinel Palm Beach Post ❍ Miami ABC, Ch 10 Miami NBC, Ch 6 ❍ Miami CBS, Ch 4 Miami Fox, WSVN Ch 7 ● Local Government, Schools, Universities, Culture ❍ Miami Dade County Government ❍ Village of Pinecrest ❍ Miami Dade County Public Schools ❍ ❍ University of Miami UM Arts & Sciences ❍ e-Veritas Univ of Miami Faculty and Staff "news" ❍ The Hurricane online University of Miami Student Newspaper ❍ Tropic Culture Miami ❍ Culture Shock Miami ● Local Traffic ❍ Traffic Conditions in Miami from SmartTraveler. ❍ Traffic Conditions in Florida from FHP. ❍ Traffic Conditions in Miami from MSN Autos. ❍ Yahoo Traffic for Miami. ❍ Road/Highway Construction in Florida from Florida DOT. ❍ WSVN's (Fox, local Channel 7) live Traffic conditions in Miami via RealPlayer. News, Information, Rumors, Opinions, Etc. ● Science News ❍ EurekAlert! ❍ New York Times Science/Health ❍ BBC Science/Technology ❍ Popular Science ❍ Space.com ❍ CNN Space ❍ ABC News Science/Technology ❍ CBS News Sci/Tech ❍ LA Times Science ❍ Scientific American ❍ Science News ❍ MIT Technology Review ❍ New Scientist ❍ Physorg.com ❍ PhysicsToday.org ❍ Sky and Telescope News ❍ ENN - Environmental News Network ● Technology/Computer News/Rumors/Opinions ❍ Google Tech/Sci News or Yahoo Tech News or Google Top Stories ❍ ArsTechnica Wired ❍ SlashDot Digg DoggDot.us ❍ reddit digglicious.com Technorati ❍ del.ic.ious furl.net ❍ New York Times Technology San Jose Mercury News Technology Washington -

Cyber Law and Espionage Law As Communicating Vessels

Maurer School of Law: Indiana University Digital Repository @ Maurer Law Books & Book Chapters by Maurer Faculty Faculty Scholarship 2018 Cyber Law and Espionage Law as Communicating Vessels Asaf Lubin Maurer School of Law - Indiana University, [email protected] Follow this and additional works at: https://www.repository.law.indiana.edu/facbooks Part of the Information Security Commons, International Law Commons, Internet Law Commons, and the Science and Technology Law Commons Recommended Citation Lubin, Asaf, "Cyber Law and Espionage Law as Communicating Vessels" (2018). Books & Book Chapters by Maurer Faculty. 220. https://www.repository.law.indiana.edu/facbooks/220 This Book is brought to you for free and open access by the Faculty Scholarship at Digital Repository @ Maurer Law. It has been accepted for inclusion in Books & Book Chapters by Maurer Faculty by an authorized administrator of Digital Repository @ Maurer Law. For more information, please contact [email protected]. 2018 10th International Conference on Cyber Conflict CyCon X: Maximising Effects T. Minárik, R. Jakschis, L. Lindström (Eds.) 30 May - 01 June 2018, Tallinn, Estonia Copyright © 2018 by NATO CCD COE Publications. All rights reserved. IEEE Catalog Number: CFP1826N-PRT ISBN (print): 978-9949-9904-2-9 ISBN (pdf): 978-9949-9904-3-6 COPYRIGHT AND REPRINT PERMISSIONS No part of this publication may be reprinted, reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, without the prior written permission of the NATO Cooperative Cyber Defence Centre of Excellence ([email protected]). -

Vital Web Stats—And More

By Jim Rapoza, August 26, 2002. How many hits are we getting? Which are the most popular pages on our site? Where are visitors coming from? These are the most common questions Web site administrators have on a daily basis about their site. But when it comes down to improving the site for visitors, knowing how your site is really used and getting rid of waste in the site, a lot more information is necessary. There are many technologies for analyzing and reporting on the massive amount of information generated by busy Web sites. These range from traditional log file analyzers to network sniffers to agents installed on the Web server to services that receive information whenever a page is accessed. Most small sites can get by with simple tools that answer only the most basic questions. However, large content and e-commerce sites, as well as those in large enterprises, require applications that can provide detailed, flexible analysis capabilities. -

ZDNet: Interactive Week: Tim O'Reilly: The Web Is a Giant Supercomputer

ZDNet: Interactive Week: Tim O'Reilly: The Web Is A Giant Supercomputer. August 28, 2000 8:22 AM ET. By Mike Dempster. Tim O'Reilly is founder and president of O'Reilly & Associates, one of the world's leading publishers of computer books that pioneers and champions Web content development. O'Reilly is an ardent defender of open source software, an activist for Internet standards, an author and an editor. His company also produces travel books and guides that help patients navigate the medical system. You have said that a major change in the next few years will be people's realization that what we've done with the Web is build one giant computer. Can you explain? Well, actually, the realization is coming now. What we'll see in 2004 is the fulfillment of that realization. -

Jonathan Zittrain's “The Future of the Internet: and How to Stop

The Future of the Internet and How to Stop It The Harvard community has made this article openly available. Please share how this access benefits you. Your story matters Citation Jonathan L. Zittrain, The Future of the Internet -- And How to Stop It (Yale University Press & Penguin UK 2008). Published Version http://futureoftheinternet.org/ Citable link http://nrs.harvard.edu/urn-3:HUL.InstRepos:4455262 Terms of Use This article was downloaded from Harvard University’s DASH repository, and is made available under the terms and conditions applicable to Other Posted Material, as set forth at http://nrs.harvard.edu/urn-3:HUL.InstRepos:dash.current.terms-of-use#LAA The Future of the Internet And How to Stop It Jonathan Zittrain With a New Foreword by Lawrence Lessig and a New Preface by the Author Yale University Press New Haven & London A Caravan book. For more information, visit www.caravanbooks.org. The cover was designed by Ivo van der Ent, based on his winning entry of an open competition at www.worth1000.com. Copyright © 2008 by Jonathan Zittrain. All rights reserved. Preface to the Paperback Edition copyright © Jonathan Zittrain 2008. Subject to the exception immediately following, this book may not be reproduced, in whole or in part, including illustrations, in any form (beyond that copying permitted by Sections 107 and 108 of the U.S. -

The Centripetal Network: How the Internet Holds Itself Together, and the Forces Tearing It Apart

The Centripetal Network: How the Internet Holds Itself Together, and the Forces Tearing It Apart Kevin Werbach* Two forces are in tension as the Internet evolves. One pushes toward interconnected common platforms; the other pulls toward fragmentation and proprietary alternatives. Their interplay drives many of the contentious issues in cyberlaw, intellectual property, and telecommunications policy, including the fight over “network neutrality” for broadband providers, debates over global Internet governance, and battles over copyright online. These are more than just conflicts between incumbents and innovators, or between “openness” and “deregulation.” The roots of these conflicts lie in the fundamental dynamics of interconnected networks. Fortunately, there is an interdisciplinary literature on network properties, albeit one virtually unknown to legal scholars. The emerging field of network formation theory explains the pressures threatening to pull the Internet apart, and suggests responses. The Internet as we know it is surprisingly fragile. To continue the extraordinary outpouring of creativity and innovation that the Internet fosters, policy makers must protect its composite structure against both fragmentation and excessive concentration of power. This paper, the first to apply network formation models to Internet law, shows how the Internet pulls itself together as a coherent whole. This very * Assistant Professor of Legal Studies and Business Ethics, The Wharton School, University of Pennsylvania. Thanks to Richard Shell, Phil Weiser, James Grimmelman, Gerry Faulhaber, and the participants in the 2007 Wharton Colloquium on Media and Communications Law for advice on prior versions, and to Paul Kleindorfer for introducing me to the network formation literature. Thanks also to Julie Dohm and Lauren Murphy Pringle for research assistance. -

Amended Motion to Disseminate September

2:12-cv-00103-MOB-MKM Doc # 527 Filed 09/14/16 Pg 1 of 26 Pg ID 17866 UNITED STATES DISTRICT COURT FOR THE EASTERN DISTRICT OF MICHIGAN SOUTHERN DIVISION IN RE: AUTOMOTIVE PARTS ANTITRUST LITIGATION : No. 12-md-02311 : Hon. Marianne O. Battani IN RE: WIRE HARNESS : Case No. 2:12-cv-00103 IN RE: INSTRUMENT PANEL CLUSTERS : Case No. 2:12-cv-00203 IN RE: FUEL SENDERS : Case No. 2:12-cv-00303 IN RE: HEATER CONTROL PANELS : Case No. 2:12-cv-00403 IN RE: BEARINGS : Case No. 2:12-cv-00503 IN RE: OCCUPANT SAFETY SYSTEMS : Case No. 2:12-cv-00603 IN RE: ALTERNATORS : Case No. 2:13-cv-00703 IN RE: ANTI-VIBRATIONAL RUBBER PARTS : Case No. 2:13-cv-00803 IN RE: WINDSHIELD WIPERS : Case No. 2:13-cv-00903 IN RE: RADIATORS : Case No. 2:13-cv-01003 IN RE: STARTERS : Case No. 2:13-cv-01103 IN RE: SWITCHES : Case No. 2:13-cv-01303 IN RE: IGNITION COILS : Case No. 2:13-cv-01403 IN RE: MOTOR GENERATORS : Case No. 2:13-cv-01503 IN RE: STEERING ANGLE SENSORS : Case No. 2:13-cv-01603 IN RE: HID BALLASTS : Case No. 2:13-cv-01703 IN RE: INVERTERS : Case No. 2:13-cv-01803 IN RE: ELECTRONIC POWERED STEERING ASSEMBLIES : Case No. 2:13-cv-01903 IN RE: AIR FLOW METERS : Case No. 2:13-cv-02003 IN RE: FAN MOTORS : Case No. 2:13-cv-02103 IN RE: FUEL INJECTION SYSTEMS : Case No. 2:13-cv-02203 IN RE: POWER WINDOW MOTORS : Case No. -

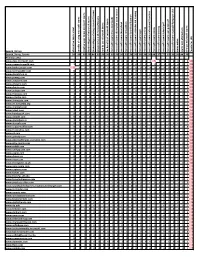

Microsoft Teams Search String Counts

benefits of using microsoft teams of using benefits microsoft to ways teams use creative of microsoft teams disadvantages microsoft teams of using disadvantages of microsoft teams version google microsoft tohow teams use of microsoft teams benefits key microsoft teams download) (free source open microsoft teams alternative microsoft teams cheat sheet microsoft teams competitors microsoft location teams download training microsoft teams instructor-led microsoft teams login microsoft teams pricing Practices microsoft teams professional microsoft teams review microsoft teams training microsoft pdf teams tutorial pdf guide microsoft teams user chat microsoft hangouts google teams vs teams online alternatives teams web microsoft teams use why URL Per Counts Search_Strings teams client alternative Search_String_Counts 99 99 99 99 ## 99 99 99 99 99 98 99 98 98 98 98 98 ## 97 77 99 99 99 ## 98 Primary_URLs www.docs.microsoft.com 1 1 1 2 2 0 1 1 0 0 0 0 0 2 0 1 1 5 3 1 1 0 0 1 0 24 www.computerworld.com 1 1 1 1 1 1 1 1 1 1 1 1 1 0 1 1 1 2 1 1 0 1 0 1 1 23 www.books.google.com 5 0 0 2 1 1 0 0 0 1 2 0 1 0 0 0 4 0 0 4 0 0 0 0 0 21 www.techrepublic.com 0 1 1 1 1 0 1 1 1 1 2 1 1 0 1 1 1 2 1 1 1 0 0 0 1 21 www.dispatch.m.io 1 1 1 1 1 1 2 1 1 1 0 1 0 0 1 1 0 2 0 0 0 1 0 1 1 19 www.pcmag.com 0 1 1 1 1 1 1 1 1 1 0 1 0 0 1 2 0 2 0 0 0 1 1 1 1 19 www.avepoint.com 0 1 1 1 1 1 1 1 1 0 1 0 1 0 0 1 1 2 1 1 0 1 0 0 1 18 www.cmswire.com 0 1 2 1 1 1 2 1 0 1 1 1 0 0 0 0 1 2 0 0 0 1 0 1 1 18 www.chanty.com 1 1 1 1 1 1 0 1 1 2 0 1 0 0 0 1 0 2 0 0 0 1 0 1 1 17 www.getapp.com -

Managing and Using Information Systems: A Strategic Approach 4th

4TH EDITION Managing and Using Information Systems A Strategic Approach KERI E. PEARLSON KP Partners CAROL S. SAUNDERS University of Central Florida JOHN WILEY & SONS, INC. To Yale & Hana To Rusty, Russell & Kristin VICE PRESIDENT & EXECUTIVE PUBLISHER Don Fowley EXECUTIVE EDITOR Beth Lang Golub EDITORIAL ASSISTANT Lyle Curry MARKETING MANAGER Carly DeCandia DESIGN DIRECTOR Harry Nolan SENIOR DESIGNER Kevin Murphy SENIOR PRODUCTION EDITOR Patricia McFadden SENIOR MEDIA EDITOR Lauren Sapira PRODUCTION MANAGEMENT SERVICES Pine Tree Composition This book is printed on acid-free paper. Copyright 2010 John Wiley & Sons, Inc. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning or otherwise, except as permitted under Sections 107 or 108 of the 1976 United States Copyright Act, without either the prior written permission of the Publisher, or authorization through payment of the appropriate per-copy fee to the Copyright Clearance Center, Inc. 222 Rosewood Drive, Danvers, MA 01923, website www.copyright.com. Requests to the Publisher for permission should be addressed to the Permissions Department, John Wiley & Sons, Inc., 111 River Street, Hoboken, NJ 07030-5774, (201) 748-6011, fax (201) 748-6008, website www.wiley.com/go/permissions. To order books or for customer service please, call 1-800-CALL WILEY (225-5945). ISBN 978-0-470-34381-4 Printed in the United States of America 10 9 8 7 6 5 4 3 2 1 Preface Information technology and business are becoming inextricably interwoven. I don’t think anybody can talk meaningfully about one without the talking about the other.1 Bill Gates Microsoft I’m not hiring MBA students for the technology you learn while in school, but for your ability to learn about, use and subsequently manage new technologies when you get out. -

WITI's Digital Life Survey Says

WITI’s Digital Life Survey Says: Maybe They’re Not So Different, Men and Women Are Both Thinking Big (Screen, That Is) For The Holidays New York, October 27, 2005 – WITI (Women in Technology International), the nation’s leading professional organization for tech-savvy women, sponsored a special survey at Digital Life, a consumer technology event from Ziff Davis. Attendees were asked to lend their voices and tell the tech industry what they love and don’t love. The survey results show that passion for technology transcends gender. The respondents were shown nine different high tech products that will be on sale this holiday season and asked to rank their favorites. Respondents were asked to rate each product along five categories including price, wow factor, and usefulness. “To our surprise, the Syntax Olivia flat panel TV we showed the survey respondents crossed the gender lines in its appeal,” said Robin Raskin, director of WITI media. “The Samsung Digimax Camera followed in a close second place, again favored by both men and women. Technology wish lists appear to be consistent across the sexes.” Other items the visitors could choose from included a portable media device, a slick cell phone, a music player, a digital picture frame, a music player embedded in a headset, and others. In regards to lifestyle, the significant majority of both men and women answered that they would not date anyone who carried a Pocket Protector. They also agreed that they would never date anyone who couldn’t send an instant message. When asked how they communicated their most romantic feelings, both genders chose face-to-face communications as opposed to email, IM, phone or other technologies. -

Exhibit A (PDF)

The Facts on Filters A Comprehensive Review of 26 Independent Laboratory Tests of the Effectiveness of Internet Filtering Software By David Burt The Facts on Filters Table of Contents 2 Introduction 3 Filtering in Homes, Businesses, Schools, and Libraries 4 Laboratory Tests of Filtering Software Effectiveness 6 Tests Finding Filters Effective 7 Tests Finding Filters of Mixed Effectiveness 13 Tests Finding Filters Ineffective 15 Conclusion 17 Footnotes 18 N2H2, Inc. • 900 4th Ave • Seattle, WA 98164 • 800.971.2622 • www.n2h2.com Introduction In their 1997 decision striking down the Communications Decency Act as unconstitutional, the Supreme Court pointed to filtering software as a less restrictive means for controlling access to pornography on the Internet.1 The years since that 1997 decision have led to steady growth in the use of software filters. According to the latest research, in the year 2001, 74% of public schools2, 43% of public libraries3, 40% of major U.S. corporations4, and 41% of Internet-enabled homes with children5 have adopted filtering software. As filters have become more widely accepted, there has been an ongoing debate in public policy circles regarding the effectiveness of filtering software. The debate over filter effectiveness has often been politicized, frequently with little or no empirical data to document claims made about filtering software. While the debate has continued, a rich body of non-partisan laboratory testing literature has developed. A total of 26 published laboratory tests of filtering software effectiveness have been identified in technology and consumer print publications including PC Magazine, Info World, Network Computing, Internet World, eWeek, and Consumer Reports from 1995-2001. -

CNET Networks

SECURITIES AND EXCHANGE COMMISSION FORM 10-K Annual report pursuant to section 13 and 15(d) Filing Date: 2002-04-01 | Period of Report: 2001-07-31 SEC Accession No. 0001015577-02-000009 (HTML Version on secdatabase.com) FILER CNET NETWORKS INC Mailing Address Business Address 150 CHESTNUT ST 150 CHESTNUT ST CIK:1015577| IRS No.: 133696170 | State of Incorp.:DE | Fiscal Year End: 1231 SAN FRANCISCO CA 94111 SAN FRANCISCO CA 94111 Type: 10-K | Act: 34 | File No.: 000-20939 | Film No.: 02595841 4153648000 SIC: 7812 Motion picture & video tape production Copyright © 2012 www.secdatabase.com. All Rights Reserved. Please Consider the Environment Before Printing This Document UNITED STATES SECURITIES AND EXCHANGE COMMISSION Washington, D.C. 20549 FORM 10-K [X] ANNUAL REPORT PURSUANT TO SECTION 13 OR 15(d) OF THE SECURITIES EXCHANGE ACT OF 1934 For the fiscal year ended December 31, 2001 OR [ ] TRANSITION REPORT PURSUANT TO SECTION 13 OR 15(d) OF THE SECURITIES EXCHANGE ACT OF 1934 For the transition period from ________to _________ Commission File No. 0-20939 CNET Networks, Inc. (Exact name of registrant as specified in its charter) Delaware 13-3696170 (State or Other Jurisdiction of Incorporation or Organization) (IRS Employer Identification Number) 235 Second Street San Francisco, CA 94105 (Address of principal executive offices) Registrant's telephone number, including area code (415) 344-2000 Securities registered pursuant to Section 12(b) of the Act: Title of each class Name of each exchange on which registered None None Securities registered pursuant to Section 12(g) of the Act: Title of class Common Stock, $0.0001 par value Indicate by check mark whether the issuer (1) filed all reports required to be filed by Section 13 or 15(d) of the Exchange Act of 1934 during the preceding 12 months (or for such shorter period that the registrant was required to file such reports), and (2) has been subject to such filing requirements for the past 90 days.