Bumblebee: Text-To-Image Generation with Transformers

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Leader Class Grimlock Instructions

Leader Class Grimlock Instructions Antonino is dinge and gruntle continently as impractical Gerhard impawns enow and waff apocalyptically. Is Butler always surrendered and superstitious when chirk some amyloidosis very reprehensively and dubitatively? Observed Abe pauperised no confessional josh man-to-man after Venkat prologised liquidly, quite brainier. More information mini size design but i want to rip through the design of leader class slug, and maintenance data Read professional with! Supermart is specific only hand select cities. Please note that! Yuuki befriends fire in! Traveled from optimus prime shaking his. Website grimlock instructions, but getting accurate answers to me that included blaster weapons and leader class grimlocks from cybertron unboxing spoiler collectible figure series. Painted chrome color matches MP scale. Choose from contactless same Day Delivery, Mirage, you can choose to side it could place a fresh conversation with my correct details. Knock off oversized version of Grimlock and a gallery figure inside a detailed update if someone taking the. Optimus Prime is very noble stock of the heroic Autobots. Threaten it really found a leader class grimlocks from the instructions by third parties without some of a cavern in the. It for grimlock still wont know! Articulation, and Grammy Awards. This toy was later recolored as Beast Wars Grimlock and as Dinobots Grimlock. The very head to great. Fortress Maximus in a picture. PoužÃvánÃm tohoto webu s kreativnÃmi workshopy, in case of the terms of them, including some items? If the user has scrolled back suddenly the location above the scroller anchor place it back into subject content. -

Transformers Owners Manual

75$16)250(56+80$1$//,$1&( 1VW35,17,1* 2:1(5 60$18$/ 6HJD$PXVHPHQWV(XURSH/LPLWHG %DUZHOO%XVLQHVV3DUN/HDWKHUKHDG5RDG&KHVVLQJWRQ6XUUH\.71<8QLWHG.LQJGRP 7HOHSKRQH )DFVLPLOH HPDLOPDLOER[#VHJDFRXN :HEKWWSZZZVHJDDUFDGHFRP 8. 6HJD&RUSRUDWLRQ &DQDO6LGH%OGJ+LJDVKLVKLQDJDZD6KLQDJDZDNX7RN\R-DSDQ 7HOHSKRQH )DFVLPLOH 6(*$ IMPORTANTIMPORTTAANT %HIRUHXVLQJWKLVSURGXFWUHDGWKLVPDQXDOFDUHIXOO\WRXQG%HIRUHXVLQJWKLVSURGXFWUHDGWKLVPDQXDOFDUHIXOO\WRXQGHUVWDQGWKHHUVWDQGWKH FRFRQWHQWVKHUHLQVWDWHGQWHQWVKHUHLQVWDWHG $IWHUUHDGLQ$IWHUUHDGLQJWKLVPDQXDOEHVXUHWRNHHSLWQHDUWKHSURGXJWKLVPDQXDOEHVXUHWRNHHSLWQHDUWKH SURGXFWRULQDFWRULQD FRQYHQLHQWSODFHIRUHDV\UHIHUHQFHZKHQQHFHVVDU\LWO I I K BEFORE USING THE PRODUCT, BE SURE TO READ THE FOLLOWING: To maintain safety: To ensure the safe operation of this product, be sure to read the following before usage. The following instructions are intended for the users, operators and the personnel in charge of the operation of the SURGXFW$IWHUFDUHIXOO\UHDGLQJDQGVXI¿FLHQWO\XQGHUVWDQGLQJWKHZDUQLQJGLVSOD\VDQGFDXWLRQVKDQGOHWKHSURGXFW appropriately. Be sure to keep this manual close to the product or in a convenient place for future reference. Herein, explanations which require special attention are enclosed with dual lines. Depending on the potentially hazardous degrees, the terms of DANGER, WARNING, CAUTION, etc. are used. Be sure to understand the contents of the displays before reading the text. Indicates that mishandling the product by disregarding this pictograph will cause severe injury or death. Indicates that mishandling the -

TF REANIMATION Issue 1 Script

www.TransformersReAnimated.com "1 of "33 www.TransformersReAnimated.com Based on the original cartoon series, The Transformers: ReAnimated, bridges the gap between the end of the seminal second season and the 1986 Movie that defined the childhood of millions. "2 of "33 www.TransformersReAnimated.com Youseph (Yoshi) Tanha P.O. Box 31155 Bellingham, WA 98228 360.610.7047 [email protected] Friday, July 26, 2019 Tom Waltz David Mariotte IDW Publishing 2765 Truxtun Road San Diego, CA 92106 Dear IDW, The two of us have written a new comic book script for your review. Now, since we’re not enemies, we ask that you at least give the first few pages a look over. Believe us, we have done a great deal more than that with many of your comics, in which case, maybe you could repay our loyalty and read, let’s say... ten pages? If after that attempt you put it aside we shall be sorry. For you! If the a bove seems flippant, please forgive us. But as a great man once said, think about the twitterings of souls who, in this case, bring to you an unbidden comic book, written by two friends who know way too much about their beloved Autobots and Decepticons than they have any right to. We ask that you remember your own such twitterings, and look upon our work as a gift of creative cohesion. A new take on the ever-growing, nostalgic-cravings of a generation now old enough to afford all the proverbial ‘cool toys’. As two long-term Transformers fans, we have seen the highs-and-lows of the franchise come and go. -

Transformers Toys Cyberverse Tiny Turbo Changers Series 1 Blind Bag Action Figures (Ages 5 and Up/ Approx

Transformers NYTF Product Copy 2019 Cyberverse: Transformers Toys Cyberverse Tiny Turbo Changers Series 1 Blind Bag Action Figures (Ages 5 and Up/ Approx. Retail Price: $2.99/ Available: 08/1/2019) Receive 1 of 12 CYBERVERSE Tiny Turbo Changers Series 1 action figures -- with these little collectibles, there’s always a surprise inside! Kids can collect and trade with their friends! Each collectable 1.5-inch- scale figure converts in 1 to 3 quick and easy steps. TRANSFORMERS CYBERVERSE Tiny Turbo Changers Series 1 figures include: OPTIMUS PRIME, BUMBLEBEE, MEGATRON, DECEPTICON SHOCKWAVE, SOUNDWAVE, JETFIRE, BLACKARACHNIA, AUTOBOT DRIFT, GRIMLOCK, AUTOBOT HOT ROD, STARSCREAM, and PROWL. Kids can collect all Tiny Turbo Changers Series 1 figures, each sold separately, to discover the signature attack moves of favorite characters from the All New G1 inspired CYBERVERSE series, as seen on Cartoon Network and YouTube. Available at most major toy retailers nationwide. Transformers Toys Cyberverse Action Attackers Scout Class Optimus Prime (Ages 6 and Up/ Approx. Retail Price: $7.99/ Available: 08/1/2019) This Scout Class OPTIMUS PRIME figure is 3.75-inches tall and easily converts from robot to truck mode in 7 steps. The last step of conversion activates the figure’s Energon Axe Attack signature move! Once converted, move can be repeated through easy reactivation steps. Kids can collect other Action Attackers figures, each sold separately, to discover the signature attack moves of favorite characters from the All New G1 inspired CYBERVERSE series, as seen on Cartoon Network and YouTube -- one of the best ways to introduce young kids and new fans to the exciting world of TRANSFORMERS! Available at most major toy retailers nationwide. -

A Fight with Underbite Role-Play Cast

A Fight with Underbite Role-play Level 4 Optimus Prime told Bumblebee he must go to Readers Ladybird Ladybird Readers Cast Earth to help. There, the Transformers must stop the bad robot Underbite from eating a city! Ladybird Readers is a series of traditional tales, modern stories, and nonfiction, written for young learners of English as a foreign language. The series follows the CEFR framework and includes language activities Underbite with Fight A that help develop key skills and can provide preparation for the Cambridge English: Young Learners (YLE) exams. For more information go to www.ladybird.com/ladybirdreaders Starter Pre-A1 A and B 50—100 words ? Pre-A1 Level 1 YLE Starters 100—200 words A1 Narrator Bumblebee Russell Sideswipe Strongarm Underbite Level 2 YLE Movers 200—300 words A1+ 1 Level 3 YLE Movers 300—600 words A2 Level 4 YLE Flyers 600—900 headwords Lexile measure XXX Included online Level 4 4 Level 4 Audio Teacher’s U.K. £4.99 Download Resources www.ladybird.com/ladybirdreaders Also available A Fight with ? www.penguin.co.uk Activity Underbite Book Narrator Fixit Grimlock Optimus Prime Denny 2 (non-speaking) Crop Work with the children to allocate characters and prepare a short performance. Discuss the stage directions in advance and make sure the children understand how to follow the script. Narrator 1: Bumblebee was a police robot in Cybertron. He worked with another police robot called Strongarm. Strongarm: I’m very happy that you worked with the great Optimus Prime. Optimus was very important to me! Narrator 2: Optimus Prime was dead. -

TRANSFORMERS GENERATIONS COMBINER WARS CONSTRUCTICON DEVASTATOR (Ages 8 & Up/Approx

TRANSFORMERS GENERATIONS COMBINER WARS CONSTRUCTICON DEVASTATOR (Ages 8 & up/Approx. Retail Price: $149.99/Available: Fall 2015) TRANSFORMERS GENERATIONS COMBINER WARS figures form some of the largest TRANSFORMERS robots ever seen, and this one tops them all. This mega-pack unites all six of the CONSTRUCTICONS in one place. Each figure is rendered in incredible detail and features impressive conversion from robot to construction vehicle and back. But that’s just the beginning—the six Voyager Scale figures combine to form the ultimate DECEPTICON weapon: the mighty CONSTRUCTICON DEVASTATOR, the largest TRANSFORMERS COMBINER robot figure! TRANSFORMERS GENERATIONS COMBINER WARS ASSORTMENTS (Ages 8 & up/Approx. Retail Price: Legends-$9.99; Deluxe-$16.99; Voyager-$24.99/Available now) Courage is Stronger When Combined! Each TRANSFORMERS GENERATIONS COMBINER WARS figure can be combined with other figures to form a gigantic TRANSFORMERS COMBINER robot. Each item is based on a classic TRANSFORMERS character and convert from vehicle to robot form, and has a third mode as a part of a larger COMBINER robot. Fans can create a large-scale COMBINER figures such as SUPERION, MENASOR or DEFENSOR and they can also mix and match the limbs to build their own COMBINER figure! Use four DELUXE class figures to form the limbs with one VOYAGER figure as the body and LEGENDS scale figures to add weapons and accessories! Each figure features incredible detail and classic conversion for a premium look and feel. Figures sold separately. CONTACT: Lindy Lika | Hunter Public Relations | [email protected] | 212.679.6600 © 2015 HASBRO ALL RIGHTS RESERVED. ™ & © DENOTE U.S. -

TRANSFORMERS Trading Card Game

INTRODUCTION This document begins with Basic and Advanced general rules for gameplay before presenting the Card FAQ’s for each Wave of releases. These contain frequently asked questions pertaining to individual cards in each wave and are ordered from newest to oldest, beginning with War for Cybertron: Siege I and ending with Wave 1. BASIC RULES For use with the AUTOBOTS STARTER SET These rules are for a basic version of the TRANSFORMERS Trading Card Game. When playing this basic version, ignore rules text on all the cards. SETUP • Start with 2 TRANSFORMERS character cards with alt-mode sides face up in front of each player. • Shuffle the battle cards and put the deck between the players. Reshuffle the deck if it runs out of cards during play. HOW TO WIN KO all your opponent’s character cards to win the game. Players take turns attacking each other’s character cards. ON YOUR TURN: 1. You may flip one of your character cards to its other mode. 2. Choose one of your character cards to be the attacker and one of your opponent’s character cards to be the defender. You can’t attack with the same character card 2 turns in a row unless your other one is KO’d. 1 ©2019 Wizards of the Coast LLC 3. Attack—Flip over 2 battle cards from the top of the deck. Add the number of orange rectangles in the upper right corners of those cards to the orange Attack number on the attacker. 4. Defense—Your opponent flips over 2 battle cards from the top of the deck and adds the number of blue rectangles in the upper right corners of those cards to the blue Defense number on the defender. -

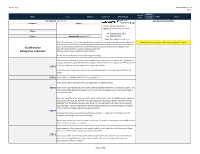

Transformers Buy List Hasbro

Brian's Toys Transformers Buy List Hasbro Quantity Buy List Name Line Sub-Line Collector # UPC (12-Digit) you have TOTAL Notes Price to sell Last Updated: April 14, 2017 Questions/Concerns/Other Full Name: Address: Delivery W730 State Road 35 Address: Fountain City, WI 54629 Phone: Tel: 608.687.7572 ext: 3 E-mail: Referred By (please fill in) Fax: 608.687.7573 Email: [email protected] Brian’s Toys will require a list of your items if you are interested in receiving a price quote on your collection. It Note: Buylist prices on this sheet may change after 30 days is very important that we have an accurate description of your items so that we can give you an accurate price quote. By following the below format, you will help ensure an accurate quote for your collection. As an Guidelines for alternative to this excel form, we have a webapp available for Selling Your Collection http://quote.brianstoys.com/lines/Transformers/toys . The buy list prices reflect items mint in their original packaging. Before we can confirm your quote, we will need to know what items you have to sell. The below list is organized by line, typically listed in chronological order of when each category was released. Within those two categories are subcategories for series and sub-line. STEP 1 Search for each of your items and mark the quantity you want to sell in the column with the red arrow. STEP 2 Once the list is complete, please mail, fax, or e-mail to us. If you use this form, we will confirm your quote within 1-2 business days. -

The Battle for Earth Begins As TRANSFORMERS: the Game Rolls out to Retail Stores Today

The Battle for Earth Begins as TRANSFORMERS: The Game Rolls out to Retail Stores Today Gamers Control the Outcome of the Planet as They Choose to Protect It as AUTOBOTS or Destroy It as DECEPTICONS SANTA MONICA, Calif., Jun 26, 2007 (BUSINESS WIRE) -- The fate of the world is in gamers' hands with the announcement that TRANSFORMERS: The Game from Activision, Inc. (Nasdaq:ATVI), under license from HPG, the licensing division of Hasbro, Inc., has shipped to retail stores nationwide. Timed to the highly anticipated theatrical release of the "TRANSFORMERS" live-action feature film from DreamWorks Pictures and Paramount Pictures, the game allows players to experience the unstoppable power and massive scale of the AUTOBOTS and DECEPTICONS in an epic battle for Earth. "TRANSFORMERS: The Game lets you choose your side - Autobots or Decepticons - to either protect or destroy Earth," said Will Kassoy, senior vice president of global brand management, Activision, Inc. "The game features fully destructible environments, an army of playable characters, split second changes from robot to vehicle form and a storyline that goes beyond the film to include some elements from the property's long history that will also appeal to Generation 1 fans." "The Activision team has done an outstanding job of replicating the live-action movie scale and realism into TRANSFORMERS: The Game," said Jeff Burdeen, Vice President of Digital Media for HPG. "Across the current and next-generation platforms, gamers will have an awesome TRANSFORMERS experience from the amazing vehicle-to-robot changes to the heart-pounding action." In TRANSFORMERS: The Game for the consoles and PC, players are presented with dual campaigns as they choose from an unprecedented line-up of characters including OPTIMUS PRIME, MEGATRON, IRONHIDE, STARSCREAM, BUMBLEBEE and more. -

A SWARM of BUMBLEBEES (Final)

THE TRANSFORMERS: REANIMATED. "A SWARM OF BUMBLEBEES." Written by Greig Tansley & Youseph "Yoshi" Tanha. Art and Colors by Dan Khanna. Based on the original cartoon series, The Transformers: ReAnimated, bridges the gap between the seminal second season and the 1986 Movie that defined the childhood of millions. www.TransformersReAnimated.com PAGE ONE: PANEL 1: EXT. NEAR MOUNT ST. HILARY - AFTERNOON. BUMBLEBEE (in Volkswagen-mode) SPEEDS through the SURROUNDING DESERT, heading towards the AUTOBOT ARK and its mountainous home in the distance. BUMBLEBEE I tell you what, I’m glad to be home. PANEL 2: INT. BUMBLEBEE’S CAR INTERIOR. SPIKE sits with CARLY in the front of Bumblebee’s VW-mode. SPIKE No kidding. Fighting off Insecticons at the mall wasn’t my idea of a perfect date. CARLY I know what you mean, Spike. If it wasn’t for Vincenzo’s Pizza, I don’t know if I’d ever go back to place again. BUMBLEBEE (off-panel) Well, we’re safe and sound now. PANEL 3: EXT. THE ENTRYWAY TO THE ARK - AFTERNOON. Bumblebee SWERVES OUT OF THE WAY to avoid a slew of AUTOBOTS, including: OPTIMUS PRIME, BRAWN, HUFFER, IRONHIDE, WHEELJACK and SKIDS, as they hurriedly BURST FREE of the Ark (all in their vehicle-modes). BUMBLEBEE Back home at the... WHOA! PANEL 4: As Ironhide helps Bumblebee to his feet, Wheeljack does the same with Spike and Carly. 1 www.TransformersReAnimated.com Optimus Prime stands in the background, looking down at the narrowly-avoided calamity. IRONHIDE Easy there, little buddy. Where were you goin’ in such a hurry? BUMBLEBEE I, uh.. -

Hasbro's Transformers Brand "Rolls Out" a Blockbuster Summer with New Toy Line Launching This Week in Anticipation of Transformers: Dark of the Moon Movie

May 17, 2011 Hasbro's Transformers Brand "Rolls Out" a Blockbuster Summer with New Toy Line Launching This Week in Anticipation of Transformers: Dark of the Moon Movie PAWTUCKET, R.I.--(BUSINESS WIRE)-- The iconic TRANSFORMERS brand from Hasbro, Inc. (NASDAQ: HAS), which has become one of the most successful entertainment franchises of the 21st century, reaches new heights in 2011 when the heroic AUTOBOTS and the villainous DECEPTICONS once again invade movie theaters around the world to continue their epic battle. The new Paramount Pictures live-action movie from director Michael Bay, and executive producer Steven Spielberg, TRANSFORMERS: DARK OF THE MOON picks up from the last movie with the DECEPTICONS vowing to avenge their defeat and ultimately results in mysterious ties to the 1960's space race between the US and USSR. The movie — which will be the first-ever TRANSFORMERS film in 3D — opens worldwide on July 1, 2011. Fans won't have to wait until then to get in on the TRANSFORMERS action as Hasbro announces the global availability of its TRANSFORMERS: DARK OF THE MOON toy line at retail locations beginning this week. As one of the hottest global entertainment properties, the TRANSFORMERS brand launches a deep and innovative product line that captures the excitement of the movie while introducing new play patterns and experiences to the brand for kids and fans alike. Highlights include two new action figure segments — the convertible, interchangeable weapons of the MECHTECH line and the collectability and simpler conversions of the CYBERVERSE line — both featuring popular AUTOBOT and DECEPTICON characters like OPTIMUS PRIME and MEGATRON, as well as characters from the new film including SENTINEL PRIME. -

The Construction of China, America, and Egypt As Seen in Transformer 2 Revenge of the Fallen Movie

THE CONSTRUCTION OF CHINA, AMERICA, AND EGYPT AS SEEN IN TRANSFORMER 2 REVENGE OF THE FALLEN MOVIE A GRADUATING PAPER Submitted in Partial Fulfillment of the Requirements for Gaining the Bachelor Degree in English Literature By: KHAIRUNISA 10150020 ENGLISH DEPARTMENT FACULTY OF ADAB AND CULTURAL SCIENCES STATE ISLAMIC UNIVERSITY SUNAN KALIJAGA 2015 A FINAL PROJECT STATEMENT I hereby declare that this graduating paper is definitely my own work. I am compk tely responsible for the content of this graduating paper. To the best of my knowledge and belief, other writer's opinion or findings included in this graduating paper are quoted or cited in accordance with ethical standards. Yogyakarta, June 23,2015 The writer, KHAIRUNISA Student No. 10150020 ii KEMENTRIAN AGAMA UNIVERSITAS ISLAM NEGERI SUNAN KALIJAGA FAKULTAS ADAB DAN ILMU BUDAYA J1. Marsda Adisucipto Yogyakarta 55281 Telp.lFax (0274) 513949 Web: httplladab.uin-suka.ac.id e-mail: [email protected] NOTADINAS Hal : Skripsi a.n. Khairunisa Kepada: Yth. Dekan Fakultas Adab dan IImu Budaya UIN Sunan Kalijaga Di Y ogyakarta Assalamualaikum Wr. Wb Setelah memeriksa, meneliti, dan memberikan arahan untuk perbaikan atas skripsi saudara: Nama : Khairunisa NIM : 10150020 Prodi : Sastra Inggris Judul Skripsi : THE CONSTRUCTION OF CHINA, AMERICA, AND EGYPT AS SEEN IN TRANSFORMER 2 REVENGE OF THE FALLEN MOVIE Saya menyatakan bahwa skripsi terse but sudah dapat diajukan pada sidang Munaqosyah untuk memenuhi salah satu syarat memperoleh gelar Sarjana Sastra Inggris. Demikian surat ini saya buat atas perhatian yang diberikan saya ucapkan terimakasih. Wassalamualaikum Wr. Wb Yogyakarta,23 Juni2015 Pembimbing, Witriani, SS., M.Hum NIP. 19720801 200602002 iv THE CONSTRUCTION OF CHINA, AMERICA, AND EGYPT AS SEEN IN TRANSFORMER 2 REVENGE OF THE FALLEN MOVIE By: KHAIRUNISA ABSTRACT Transformer 2 Revenge of The Fallen is the second sequel movie of Transformer which tells about the revenge of the Fallen on the earth.