Regression Shrinkage and Neural Models in Predicting the Results of 400-Metres Hurdles Races

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

The Weight Pentathlon Shall Be Included in the Team Events

EVAA TECHNICAL MANAGER WMA STADIA COMMITTEE MEMBER Dear athletes-Affiliates, At the general assembly in San Sebastian there will be several points raised regarding competition. As I am aware that many of the affiliates may not attend the assembly, I would appreciate your feedback on some of the points raised in the following series of possible proposals. Even if you will have members attending, it would be good for me to have some of your ideas on these things, so that, whether I am for or against them, I have some feedback from my region. Please mail me your comments and I will make a list for the meeting in August. Winston Thomas. [email protected] Possible Team medals in the Weight Pentathlon PROPOSAL The Weight Pentathlon shall be included in the team events. Team medals shall be awarded in the Weight Pentathlon. Awards will be for women and men *M35 upwards in 5-year age groups. Teams will consist of three scoring athletes. Their total scores will be added to determine the final points. Athletes will be able to score in a lower age class only where they have no team in their own age group and all the implements are of the same specifications. For a trial period of 1 championships teams shall pay a €6.00 entry fee. Teams will be free from this period as with other team events. *Note M35 should they be adopted by WMA/IAAF. Ruling to be added in THE COMPETITION Field Events 12.(6) When team competitions are included in Weight pentathlon, there shall be three team awards on the basis that each Affiliate is entitled to count one team (best three to score) in five year age groups, and their results shall be computed on the points gained. -

RESULTS 400 Metres Hurdles Women - Final

Doha (QAT) 27 September - 6 October 2019 RESULTS 400 Metres Hurdles Women - Final RECORDS RESULT NAME COUNTRY AGE VENUE DATE World Record WR 52.16 Dalilah MUHAMMAD USA 29 Doha 4 Oct 2019 Championships Record CR 52.16 Dalilah MUHAMMAD USA 29 Doha 4 Oct 2019 World Leading WL 52.16 Dalilah MUHAMMAD USA 29 Doha 4 Oct 2019 Area Record AR National Record NR Personal Best PB Season Best SB 4 October 2019 21:29 START TIME 26° C 61 % TEMPERATURE HUMIDITY PLACE NAME COUNTRY DATE of BIRTH LANE RESULT REACTION Fn 1 Dalilah MUHAMMAD USA 7 Feb 90 6 52.16 WR 0.200 2 Sydney MCLAUGHLIN USA 7 Aug 99 4 52.23 PB 0.161 3 Rushell CLAYTON JAM 18 Oct 92 5 53.74 PB 0.137 4 Lea SPRUNGER SUI 5 Mar 90 9 54.06 NR 0.199 5 Zuzana HEJNOVÁ CZE 19 Dec 86 8 54.23 0.141 6 Ashley SPENCER USA 8 Jun 93 2 54.45 (.444) 0.163 7 Anna RYZHYKOVA UKR 24 Nov 89 3 54.45 (.445) SB 0.173 8 Sage WATSON CAN 20 Jun 94 7 54.82 0.186 ALL-TIME TOP LIST SEASON TOP LIST RESULT NAME VENUE DATE RESULT NAME VENUE 2019 52.16 Dalilah MUHAMMAD (USA) Doha 4 Oct 19 52.16 Dalilah MUHAMMAD (USA) Doha 4 Oct 52.23 Sydney MCLAUGHLIN (USA) Doha 4 Oct 19 52.23 Sydney MCLAUGHLIN (USA) Doha 4 Oct 52.34 Yuliya PECHONKINA (RUS) Tula (Arsenal Stadium) 8 Aug 03 53.11 Ashley SPENCER (USA) Des Moines, IA (USA) 28 Jul 52.42 Melaine WALKER (JAM) Berlin (Olympiastadion) 20 Aug 09 53.73 Shamier LITTLE (USA) Lausanne (Pontaise) 5 Jul 52.47 Lashinda DEMUS (USA) Daegu (DS) 1 Sep 11 53.74 Rushell CLAYTON (JAM) Doha 4 Oct 52.61 Kim BATTEN (USA) Göteborg (Ullevi Stadium) 11 Aug 95 54.06 Lea SPRUNGER (SUI) Doha 4 Oct 52.62 Tonja -

Heel and Toe 2019/2020 Number 27

HEEL AND TOE ONLINE The official organ of the Victorian Race Walking Club 2019/2020 Number 27 Tuesday 31 March 2020 VRWC Preferred Supplier of Shoes, clothes and sporting accessories. Address: RUNNERS WORLD, 598 High Street, East Kew, Victoria (Melways 45 G4) Telephone: 03 9817 3503 Hours: Monday to Friday: 9:30am to 5:30pm Saturday: 9:00am to 3:00pm Website: http://www.runnersworld.com.au Facebook: http://www.facebook.com/pages/Runners-World/235649459888840 THE RACEWALKING YEAR IS PUT ON HOLD I start with a great little cartoon, published in marciadalmondo (http://www.marciadalmondo.com/eng/dettagli_news.aspx?id=3781). Fantastic work by the racewalking judges – show the red card to COVID-19! Ok, no races on which to report this week but lots of news about the ongoing implications of COVID-19. Firstly what’s happening on the local front • At the moment, the following two events are still scheduled, but obviously final decisions will be made closer to the day ◦ Aug 22 (Sat), 2020 Australian Cross Country Championships, Adelaide ◦ Aug 30 (Sun), 2020 Australian Roadwalk Championships (and RWA Carnival), Melbourne • However, Sporting Schools Australia has announced the cancellation of all sports carnivals this year and therefore will not be holding their cross country championships in Adelaide in August. I know that some walkers also compete in this meet. ◦ August 23 (Sun), 2020 Sporting Schools Australia Cross Country Championships, Adelaide (CANCELLED) • Athletics Australia announced last month that the Australian T&F Championships (Sydney, March) had been postponed. That has now been changed to a cancellation. Alas, no Australian T&F Championships this year. -

A Proposal to Change the Women's Hurdles Events by Sergio Guarda

VIEWPOINT © by IAAF 8:2; 23-26, 1993 A proposal to change the women's hurdles events by Sergio Guarda Etcheverry 1 Introduction The sprint hurdles race, more or less as we now know it, was 'invented' at Oxford University in 1864. The distance was 120 yards, with an approach and finish of 15 yards and a 10 yards spacing between 10 hurdles, 3 foot 6 inches in height. These measurements formed the basis for the event when it was included in the first modern Olympic Games, held at Athens in 1896. There, measurements became the metric equivalents: 110 metres distance, 10 hurdles 106.7cm in height and 9.14 metres apart, a distance from start line to first hurdle of 13.72 metres and from last hurdle to finish of 14.02 metres. The first gold medal for the Olympic event was won by Thomas Curtis (USA) with a time of 17 3/5 sec. From that date to the present, the rules of this event have not been modified despite the progress made in the construction of the hurdles, in the quality of the track surface, in the quality of the shoes, in the selection of the athletes and in the specific training methodology and planning. The 400 metres Hurdles for men was incorporated in the programme for the 1900 Olympic Games, held in Paris. The winner on this first occasion was Walter Tewksbury (USA) with a time of 57.6 sec. Sergio Guarda Etcheverry is a professor of physical education at the University of Santiago, Chile, and a member of the -

HEEL and TOE ONLINE the Official Organ of the Victorian Race Walking

HEEL AND TOE ONLINE The official organ of the Victorian Race Walking Club 2019/2020 Number 40 Tuesday 30 June 2020 VRWC Preferred Supplier of Shoes, clothes and sporting accessories. Address: RUNNERS WORLD, 598 High Street, East Kew, Victoria (Melways 45 G4) Telephone: 03 9817 3503 Hours: Monday to Friday: 9:30am to 5:30pm Saturday: 9:00am to 3:00pm Website: http://www.runnersworld.com.au Facebook: http://www.facebook.com/pages/Runners-World/235649459888840 VRWC COMPETITION RESTARTS THIS SATURDAY Here is the big news we have all been waiting for. Our VRWC winter roadwalking season will commence on Saturday afternoon at Middle Park. Club Secretary Terry Swan advises that the club committee met tonight (Tuesday) and has given the green light. There will be 3 Open races as follows VRWC Roadraces, Middle Park, Saturday 6th July 1:45pm 1km Roadwalk Open (no timelimit) 2.00pm 3km Roadwalk Open (no timelimit) 2.30pm 10km Roadwalk Open (timelimit 70 minutes) Each race will be capped at 20 walkers. Places will be allocated in order of entry. No exceptions can be made for late entries. $10 per race entry. Walkers can only walk in ONE race. Multiple race entries are not possible. Race entries close at 6PM Thursday. No entries will be allowed on the day. You can enter in one of two ways • Online entry via the VRWC web portal at http://vrwc.org.au/wp1/race-entries-2/race-entry-sat-04jul20/. We prefer payment by Credit Card or Paypal within the portal when you register. Ignore the fact that the portal says entries close at 10PM on Wednesday. -

YOUTH OLYMPIC GAMES BUENOS AIRES 2018 Quota Places By

APPENDIX 3 YOUTH OLYMPIC GAMES BUENOS AIRES 2018 02-Feb-18 Quota Places by Continent and Event Boys AFRICA AMERICAS ASIA EUROPE OCEANIA totals 100 Metres 5 12 8 6 1 32 200 Metres 3 5 4 11 1 24 400 Metres 5 8 5 5 1 24 800 Metres 8 5 2 8 1 24 1500 Metres 7 3 1 6 1 18 3000 Metres 6 1 5 5 1 18 110m Hurdles 2 8 3 7 2 22 400 Metres Hurdles 3 6 3 3 1 16 2000 Metres Steeplechase 6 2 2 7 1 18 High Jump 1 5 4 5 1 16 Pole Vault 1 1 3 10 1 16 Long Jump 1 6 2 6 1 16 Triple Jump 2 6 4 3 1 16 Shot Put 2 4 2 7 1 16 Discus Throw 1 4 2 8 1 16 Hammer Throw 1 2 2 10 1 16 Javelin Throw 2 3 2 8 1 16 10,000 Metres Race Walk 1 7 3 4 1 16 Totals 57 88 57 119 19 340 Girls AFRICA AMERICAS ASIA EUROPE OCEANIA Totals 100 Metres 3 14 1 13 1 32 200 Metres 1 7 4 11 1 24 400 Metres 3 7 2 11 1 24 800 Metres 5 3 1 14 1 24 1500 Metres 7 2 3 5 1 18 3000 Metres 8 2 3 4 1 18 100m Hurdles 1 8 2 10 1 22 400 Metres Hurdles 1 5 2 7 1 16 2000 Metres Steeplechase 3 3 2 9 1 18 High Jump 1 2 1 11 1 16 Pole Vault 1 3 1 10 1 16 Long Jump 1 5 1 8 1 16 Triple Jump 1 4 2 8 1 16 Shot Put 1 3 1 10 1 16 Discus Throw 2 4 1 8 1 16 Hammer Throw 1 3 1 10 1 16 Javelin Throw 1 5 2 7 1 16 5000 Metres Race Walk 2 5 2 6 1 16 Totals 43 85 32 162 18 340 EVENTS (38) Men’s Events (19) Women’s Events (19) Track Track 100m 100m 200m 200m 400m 400m 800m 800m 1500m* 1500m* 3000m* 3000m* 2000m Steeplechase* 2000m Steeplechase* 110m hurdles 100m hurdles 400m hurdles 400m hurdles 10’000m Race Walk 5’000m Race Walk Field Field High Jump High Jump Pole Vault Pole Vault Long Jump Long Jump Triple Jump Triple Jump Shot Put Shot Put Discus Throw Discus Throw Hammer Throw Hammer Throw Javelin Throw Javelin Throw Cross country* Cross country* Distance (4-6km) Distance (4-6km) Note*: All athletes entered in 1500m, 3000m, 2000m Steeple Chase to compete in Cross Country event. -

Track Stats Index

Index to Track Stats Volumes 31-46 (1993-2008) compiled by Keith Morbey & Stuart Mazdon (figures in brackets are page numbers, usually for small mentions which may not be in the contents page) Contents: AUTHORS; AREAS; CHAMPIONSHIPS and MEETINGS; EVENTS – Men; EVENTS – Women; MISCELLANEOUS; NATIONS; PEOPLE; RECORDS -

Athlete Typology and Training Strategy in the 400M Hurdles

Coaching Practice © by IAAF 27:1/2; 27-37, 2012 Athlete Typology and Training Strategy in the 400m Hurdles by Janusz Iskra ABSTRACT Most coaches will find themselves with athletes who vary from any established ideal set of characteristics, making it necessary to develop an appropriate training strategy to maximise each individual athlete’s potential. For them, the most interesting analysis of training concerns groups of similar athletes - defined by body build, motor and coordinative (technical, rhythm) preparation, personality and the specifics of their sport careers - so that the relevant lessons and information can be applied in practice. In a previous study the author, who is a coach and scientist, created a typology of 400m hurdlers and found there were seven basic types of top performers in the event. The aim of this article is to systematically present the appropriate training means for the three types of 400m hurdlers seen most often in the early 21st century: “Special Endurance”, “Technical” and “Rhythm” and to show how these means are incorporated into microcycles for each of the main training periods as a guide for coaches who [...] AUTHOR Prof. Janusz Iskra is a scientist, sport methodologist and athletics coach specialised in hurdles events. He currently works in two Polish high schools: Technical University in Opole and University School of Physical Education in Katowice. Introduction Performance level in the 400m hurdles depends on the athlete’s genetic and physical pre-dispositions, particularly movement awareness and coordination, and the type of long-term (6-10 years) training work that is applied. The nature and specific characteristics of this discipline make it difficult to identify “pure” talent at the first (youth) stage of training and the best we can do is look for tall individuals with running speed, endurance, strength and good co-ordination. -

01.04.21 2021 Australian Track & Field Championships Sydney

01.04.21 2021 Australian Track & Field Championships Sydney Olympic Park Athletic Centre 12th - 19th April Day 1 - Monday 12th April 2:15 PM F Hammer Throw Under 20 Men FINAL 2:15 PM F High Jump Under 14 Men FINAL 2:30 PM T 100 metres Under 14 Men 1st Round 2:30 PM F Shot Put Under 16 Women FINAL 2:45 PM T 100 metres Under 14 Women 1st Round 3:05 PM T 100 metres Under 15 Women 1st Round 3:15 PM T 100 metres Under 15 Men 1st Round 3:30 PM T 100 metres Under 16 Men 1st Round 3:45 PM F High Jump Under 14 Women FINAL 3:50 PM T 100 metres Under 16 Women 1st Round 4:00 PM F Shot Put Under 16 Men FINAL 4:05 PM T 100 metres Under 20 Men 1st Round 4:20 PM T 100 metres Under 20 Women 1st Round 4:40 PM T 400 metres Under 14 Men 1st Round 4:52 PM T 400 metres Under 14 Women 1st Round 5:04 PM T 400 metres Under 15 Women 1st Round 5:16 PM T 400 metres Under 15 Men 1st Round 5:28 PM T 400 metres Under 16 Men 1st Round 5:30 PM F Shot Put Under 14 Women FINAL 5:40 PM T 400 metres Under 16 Women 1st Round 5:55 PM T 400 metres Under 20 Men 1st Round 6:00 PM F Hammer Throw Under 15 Women FINAL 6:00 PM F High Jump Under 16 Men FINAL 6:15 PM T 400 metres Under 20 Women 1st Round 6:30 PM T 100 metres Under 14 Men FINAL 6:30 PM F Pole Vault Under 20 Men FINAL 6:35 PM T 100 metres Under 14 Women FINAL 6:40 PM T 100 metres Under 15 Women FINAL 6:45 PM T 100 metres Under 15 Men FINAL 6:50 PM T 100 metres Under 16 Men FINAL 6:55 PM T 100 metres Under 16 Women FINAL 7:00 PM T 100 metres Under 20 Men FINAL 7:00 PM F Shot Put Under 14 Men FINAL 7:05 PM T 100 metres -

Velocity and Stride Parameters of World-Class 400-Meter Athletes Compared with Less Experienced Runners Christine Hanon, Bruno Gajer

Velocity and Stride Parameters of World-Class 400-Meter Athletes Compared With Less Experienced Runners Christine Hanon, Bruno Gajer To cite this version: Christine Hanon, Bruno Gajer. Velocity and Stride Parameters of World-Class 400-Meter Athletes Compared With Less Experienced Runners. Journal of Strength and Conditioning Research, Lippincott, Williams & Wilkins, 2009, 23 (2), pp.524-531. 10.1519/JSC.0b013e318194e071. hal-01623755 HAL Id: hal-01623755 https://hal-insep.archives-ouvertes.fr/hal-01623755 Submitted on 25 Oct 2017 HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. VELOCITY AND STRIDE PARAMETERS OF WORLD-CLASS 400-METER ATHLETES COMPARED WITH LESS EXPERIENCED RUNNERS CHRISTINE HANON (1) AND BRUNO GAJER (2) (1) Laboratory of Biomechanics and Physiology, French National Institute of Sport, Paris, France; and (2) French Athletic Federation, Paris, France ABSTRACT Hanon, C and Gajer, B. Velocity and stride parameters of world-class 400-meter athletes compared with less experienced runners. J Strength Cond Res 23(2): 524–531, 2009—The purpose of this study was to determine, based on the time [...] INTRODUCTION Because small differences in performance generally determine a competition outcome, information concerning the best way to expend the limited energetic sources available is of considerable interest. -

Track and Field Qualifying Standards

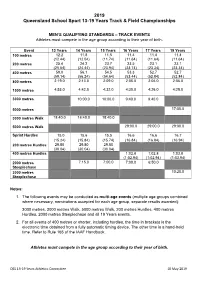

2019 Queensland School Sport 13-19 Years Track & Field Championships MEN’S QUALIFYING STANDARDS – TRACK EVENTS Athletes must compete in the age group according to their year of birth. Event 13 Years 14 Years 15 Years 16 Years 17 Years 19 Years 100 metres 12.2 11.8 11.5 11.4 11.4 11.4 (12.44) (12.04) (11.74) (11.64) (11.64) (11.64) 200 metres 25.4 24.2 23.7 23.5 23.1 23.1 (25.64) (24.44) (23.94) (23.74) (23.34) (23.34) 400 metres 59.0 56.1 54.5 53.3 52.7 52.7 (59.14) (56.24) (54.64) (53.44) (52.84) (52.84) 800 metres 2:19.0 2:13.0 2:09.0 2:06.0 2:06.0 2:06.0 1500 metres 4:53.0 4:42.0 4:32.0 4:30.0 4:26.0 4:28.0 3000 metres 10:00.0 10:00.0 9:40.0 9:40.0 5000 metres 17:00.0 3000 metres Walk 18:40.0 18:40.0 18:40.0 5000 metres Walk 29:00.0 29:00.0 29:00.0 Sprint Hurdles 15.0 15.6 15.5 16.6 16.6 16.7 (15.24) (15.84) (15.74) (16.84) (16.84) (16.94) 200 metres Hurdles 29.80 29.80 29.80 (30.04) (30.04) (30.04) 400 metres Hurdles 1:02.8 1:02.8 1:03.8 (1:02.94) (1:02.94) (1:03.94) 2000 metres 7:15.0 7:00.0 7:00.0 6:50.0 Steeplechase 3000 metres 10:20.0 Steeplechase Notes: 1. -

Athletics Monthly

June 2012 Athletics Monthly The Journal of the World Famous Scarborough Athletic Club Some of the 19 who competed so very successfully at the final YA League meeting at Whitley Bay. Massive points total, overwhelming win and the League Title achieved. Congratulations to the athletes, coaches and helpers. Full report included in this edition. Ed. Fixtures Lists. Road, Fell and Cross Country Sports Therapy Website. Injury Prevention Article on building stamina. Liz McColgan Athletics Biographies. Sue Louth Cartoon and Quote of the month Road rankings Press Reports From the Editor Welcome to the June Edition of the Club Magazine, and thank you to Sue Louth and Jenna Wheatman for their contribution. For the second consecutive month, I have highlighted the achievements of our younger athletes, who have now won their track and field league in some style. I hope that many of them will stay with us in their older years, and thus give a boost to the seniors, particularly in the senior track and field league, which so far this season has not been very successful. For what now remains of the Track and Field season, and in particular the English Schools championships, I hope that those concerned can stay injury free, and end the year on a high note. Mick Thompson 01723 864442 [email protected] Fixtures Club members have participated in almost all of the races listed. I can help with further information if required. Ed. Fell See also the Esk Valley Fell Race Club web site for further information, and a very large fixture list.