Introduction to Fuzzing

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

User Manual HOFA CD-Burn.DDP.Master (App) HOFA CD-Burn.DDP.Master PRO (App) V2.5.4 Content Introduction

User Manual: HOFA CD-Burn.DDP.Master (App), HOFA CD-Burn.DDP.Master PRO (App), V2.5.4. Content: Introduction; Quick Start; Installation; Activation; Evaluation version; Project window; Audio file import and formats; The Audio Editor; Audio Editor Tracks; Audio Editor Mode; Mode: Insert; Mode: Slide; Audio Clips; Zoom; Using Plugins ...

Download Media Player Codec Pack Version 4.1 Media Player Codec Pack

Media Player Codec Pack. Description: In Microsoft Windows 10 it is not possible to set all file associations using an installer. Microsoft chose to block changes to file associations with the introduction of their Zune players. Third-party codecs are also blocked in some instances, preventing some files from playing in the Zune players. A simple workaround is to switch playback of video and music files to Windows Media Player manually:

1. In the Start menu, click "Settings".
2. In the "Windows Settings" window, click "System".
3. On the "System" pane, click "Default apps".
4. On the "Choose default applications" pane, click "Films & TV" under "Video Player".
5. In the "Choose an application" pop-up menu, click "Windows Media Player" to set it as the default player for video files.

Footnote: The same method applies to file associations for music: in step 4, click "Groove Music" under "Media Player" instead of changing "Video Player".

Media Player Codec Pack Plus. Codecs explained: A codec is a piece of software on a device or computer capable of encoding and/or decoding video and/or audio data from files, streams and broadcasts. The word "codec" is a portmanteau of "compressor-decompressor". Compression types that you will be able to play include: x264 | x265 | h.265 | HEVC | 10bit x265 | 10bit x264 | AVCHD | AVC | DivX | XviD | MP4 | MPEG4 | MPEG2 and many more. File types you will be able to play include: .bdmv | .evo | .hevc | .mkv | .avi | .flv | .webm | .mp4 | .m4v | .m4a | .ts | .ogm | .ac3 | .dts | .alac | .flac | .ape | .aac | .ogg | .ofr | .mpc | .3gp and many more.

Aug2021 CBCS Bsc Computerscience

Choice Based Credit System: 140 credits for 3-year UG Honours. MAKAUT framework, w.e.f. academic year 2021 – 2022. Model curriculum for B.Sc. Computer Science (Hons.), CBCS, MAKAUT.

UG degree: B.Sc. Computer Science (Hons.), 140 credits. Courses by subject type across Semesters I to VI:
CC: C1, C2; C3, C4; C5, C6, C7; C8, C9, C10; C11, C12; C13, C14
DSE: DSE1, DSE2; DSE3, DSE4
GE: GE1; GE2; GE3; GE4
Capstone Project Evaluation
AECC: AECC 1; AECC 2
SEC: SEC 1; SEC 2
Per semester, I to VI: 4 (20); 4 (20); 5 (26); 5 (26); 4 (24); 4 (24)

Teaching-Learning-Assessment as per Bloom's Taxonomy fitment. Levels: L1 Remember; L2 Understand; L3 Apply; L4 Analyze; L5 Evaluate; L6 Create.
Courses, T&L and assessment levels: SEM 1, SEM 2, SEM 3, SEM 4, SEM 5, SEM 6. MOOCs: Beginner, Basic, Intermediate, Advanced.
Abbreviations: CC: Core Course; AECC: Ability Enhancement Compulsory Course; GE: Generic Elective Course; DSE: Discipline Specific Elective Course; SEC: Skill Enhancement Course.

B.Sc. Computer Science (Hons.) Curriculum Structure

1st Semester
Columns: Subject Type; Course Code; Course Name; Credit Points; Credit Distribution (L, P, T); Mode of Delivery (Offline, Online, Blended); Proposed MOOCs
CC; CC1-T; CS 101; Programming Fundamental – using C Language; 4; 4; yes
CC; CC1-P; CS 191; Programming using C; 2; 2; yes
CC; CC2-T; CS 102; Digital Electronics; 4; 4; yes
CC; CC2-P; CS 192; Digital Electronics Lab; 2; 2; yes
GE; GE1; Any one from GE Basket – 1 to 5; 6; yes
AECC; AECC 1; CS(HU-101); Soft Skills (English Communication); 2; 2; yes
Semester credits: 20

2nd Semester
Columns: as for the 1st Semester
CC; CC3-T; CS 201; Data Structures; 4; 4; yes
CC; CC3-P; CS 291; Data Structures Lab; 2; 2; yes
CC; CC4-T; CS 202; Computer Organization; 4; 4; yes
CC; CC4-P; CS 292; Computer Organization Lab; 2; 2; yes

The Kid3 Handbook

The Kid3 Handbook. Software development: Urs Fleisch.

Contents:
1 Introduction
2 Using Kid3: 2.1 Kid3 features; 2.2 Example Usage
3 Command Reference: 3.1 The GUI Elements (3.1.1 File List; 3.1.2 Edit Playlist; 3.1.3 Folder List; 3.1.4 File; 3.1.5 Tag 1; 3.1.6 Tag 2; 3.1.7 Tag 3; 3.1.8 Frame List; 3.1.9 Synchronized Lyrics and Event Timing Codes); 3.2 The File Menu; 3.3 The Edit Menu; 3.4 The Tools Menu; 3.5 The Settings Menu; 3.6 The Help Menu
4 kid3-cli: 4.1 Commands (4.1.1 Help; 4.1.2 Timeout; 4.1.3 Quit application; 4.1.4 Change folder; 4.1.5 Print the filename of the current folder; 4.1.6 Folder list; 4.1.7 Save the changed files; 4.1.8 Select file; 4.1.9 Select tag; 4.1.10 Get tag frame; 4.1.11 Set tag frame; 4.1.12 Revert; 4.1.13 Import from file; 4.1.14 Automatic import; 4.1.15 Download album cover artwork; 4.1.16 Export to file; 4.1.17 Create playlist; 4.1.18 Apply filename format; 4.1.19 Apply tag format; ...)

JSP with Javax.Script Languages

Seminar paper. BSF4ooRexx: JSP with javax.script Languages. Author: Nora Lengyel. Matriculation no: 1552636. Class title: Projektseminar aus Wirtschaftsinformatik (Schiseminar). Instructor: ao.Univ.Prof. Mag. Dr. Rony G. Flatscher. Term: Winter Term 2019/2020. Vienna University of Economics and Business.

Content:
1. Introduction
2. Tomcat: 2.1 Introduction to Tomcat; 2.2 The Installation of Tomcat (2.2.1 Environment Variables; 2.2.2 Tomcat Web Application Manager)
3. Cookie: 3.1 Introduction to Cookies; 3.2 Functioning of a Cookie ...

Ardour Export Redesign

Ardour Export Redesign. Thorsten Wilms [email protected] Revision 2, 2007-07-17.

Table of Contents:
1 Introduction
2 Insights From a Survey: 2.1 Export When?; 2.2 Channel Count; 2.3 Requested File Types; 2.4 Sample Formats and Rates in Use; 2.5 Wish List (2.5.1 More than one format at once; 2.5.2 Files per Track / Bus; 2.5.3 Optionally store timestamps); 2.6 General Problems
3 Feature Requests: 3.1 Multichannel; 3.2 Individual Files; 3.3 Realtime Export; 3.4 Range ad File Export History; 3.5 Running a Script; 3.6 Export Markers as Text
4 The Current Dialog: 4.1 Time Span Selection; 4.2 Ranges; 4.3 File vs Directory Selection; 4.4 Container Types; 4.5 Endianness; 4.6 Channel Count; 4.7 Mapping Channels; 4.8 CD Marker Files; 4.9 Trimming; 4.10 Filename Conflicts; 4.11 Peaks; 4.12 Blocking JACK; 4.13 Does it have to be a dialog?
5 Track Export
6 MIDI
7 Steps After Exporting: 7.1 Normalize; 7.2 Trim silence; 7.3 Encode; 7.4 Tag; 7.5 Upload; 7.6 Burn CD / DVD; 7.7 Backup / Archiving; 7.8 Authoring
8 Container Formats: 8.1 libsndfile, currently offered for Export; 8.2 libsndfile, also interesting; 8.3 libsndfile, rather exotic; 8.4 Interesting (8.4.1 BWF – Broadcast Wave Format; 8.4.2 Matroska); 8.5 Problematic; 8.6 Not of further interest; 8.7 Check (Todo)
9 Encodings: 9.1 Libsndfile supported; 9.2 Interesting; 9.3 Problematic; 9.4 Not of further interest
10 Container / Encoding Combinations
11 Elements: 11.1 Input; 11.2 Output
12 Specification: 12.1 Core; 12.2 Layout; 12.3 Presets; 12.4 Speed; 12.5 Time span; 12.6 CD Marker Files; 12.7 Mapping; 12.8 Processing; 12.9 Container and Encodings; 12.10 Target Folder; 12.11 Filenames; 12.12 Multiplication; 12.13 Left out
13 Credits
14 Todo

1 Introduction. The basic purpose of Ardour's export functionality is to create mixdowns of multitrack arrangements.

2 Insights From a Survey. I conducted a quick survey on the Linux Audio Users ...

Installation Manual

CX-20 Installation manual. ENABLING BRIGHT OUTCOMES. Barco NV, Beneluxpark 21, 8500 Kortrijk, Belgium. www.barco.com/en/support www.barco.com. Registered office: Barco NV, President Kennedypark 35, 8500 Kortrijk, Belgium. Copyright © All rights reserved. No part of this document may be copied, reproduced or translated. It shall not otherwise be recorded, transmitted or stored in a retrieval system without the prior written consent of Barco. Trademarks: Brand and product names mentioned in this manual may be trademarks, registered trademarks or copyrights of their respective holders. All brand and product names mentioned in this manual serve as comments or examples and are not to be understood as advertising for the products or their manufacturers. USB Type-C™ and USB-C™ are trademarks of USB Implementers Forum. HDMI Trademark Notice: The terms HDMI, HDMI High Definition Multimedia Interface, and the HDMI Logo are trademarks or registered trademarks of HDMI Licensing Administrator, Inc. Product Security Incident Response: As a global technology leader, Barco is committed to delivering secure solutions and services to our customers, while protecting Barco's intellectual property. When product security concerns are received, the product security incident response process will be triggered immediately. To address specific security concerns or to report security issues with Barco products, please inform us via the contact details mentioned on https://www.barco.com/psirt. To protect our customers, Barco does not publicly disclose or confirm security vulnerabilities until Barco has conducted an analysis of the product and issued fixes and/or mitigations. Patent protection: Please refer to www.barco.com/about-barco/legal/patents. Guarantee and Compensation: Barco provides a guarantee relating to perfect manufacturing as part of the legally stipulated terms of guarantee.

(A/V Codecs) REDCODE RAW (.R3D) ARRIRAW

What is a Codec? Codec is a portmanteau of either "Compressor-Decompressor" or "Coder-Decoder," which describes a device or program capable of performing transformations on a data stream or signal. Codecs encode a stream or signal for transmission, storage or encryption and decode it for viewing or editing. Codecs are often used in videoconferencing and streaming media solutions. A video codec converts analog video signals from a video camera into digital signals for transmission. It then converts the digital signals back to analog for display. An audio codec converts analog audio signals from a microphone into digital signals for transmission. It then converts the digital signals back to analog for playing. The raw encoded form of audio and video data is often called essence, to distinguish it from the metadata information that together make up the information content of the stream and any "wrapper" data that is then added to aid access to or improve the robustness of the stream. Most codecs are lossy, in order to get a reasonably small file size. There are lossless codecs as well, but for most purposes the almost imperceptible increase in quality is not worth the considerable increase in data size. The main exception is if the data will undergo more processing in the future, in which case the repeated lossy encoding would damage the eventual quality too much. Many multimedia data streams need to contain both audio and video data, and often some form of metadata that permits synchronization of the audio and video. Each of these three streams may be handled by different programs, processes, or hardware; but for the multimedia data stream to be useful in stored or transmitted form, they must be encapsulated together in a container format. -

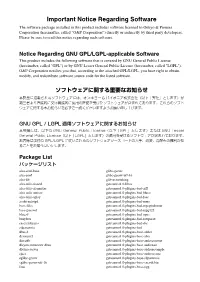

Important Notice Regarding Software

Important Notice Regarding Software. The software package installed in this product includes software licensed to Onkyo & Pioneer Corporation (hereinafter called "O&P Corporation") directly or indirectly by third-party developers. Please be sure to read this notice regarding such software.

Notice Regarding GNU GPL/LGPL-applicable Software. This product includes the following software that is covered by the GNU General Public License (hereinafter called "GPL") or by the GNU Lesser General Public License (hereinafter called "LGPL"). O&P Corporation notifies you that, according to the attached GPL/LGPL, you have the right to obtain, modify, and redistribute the software source code for the listed software.

Package List: alsa-conf-base, alsa-conf, alsa-lib, alsa-utils-alsactl, alsa-utils-alsamixer, alsa-utils-amixer, alsa-utils-aplay, avahi-autoipd, base-files, base-passwd, bluez5, busybox, glibc-gconv, glibc-gconv-utf-16, glib-networking, gstreamer1.0-libav, gstreamer1.0-plugins-bad-aiff, gstreamer1.0-plugins-bad-bluez, gstreamer1.0-plugins-bad-faac, gstreamer1.0-plugins-bad-mms, gstreamer1.0-plugins-bad-mpegtsdemux, gstreamer1.0-plugins-bad-mpg123, gstreamer1.0-plugins-bad-opus, gstreamer1.0-plugins-bad-rawparse

Command-Line Sound Editing Wednesday, December 7, 2016

21M.380 Music and Technology: Recording Techniques & Audio Production. Workshop: Command-line sound editing. Wednesday, December 7, 2016.

1 Student presentation (pa1)
2 Subject evaluation
3 Group picture
4 Why edit sound on the command line?

Figure 1. Graphical representation of sound

• We are used to editing sound graphically.
• But for many operations, we do not actually need to see the waveform!

4.1 Potential applications

4.2 Advantages
• No visual belief system (what you hear is what you hear)
• Faster (no need to load GUIs or waveforms)
• Efficient batch-processing (applying editing sequence to multiple files)
• Self-documenting (simply save an editing sequence to a script)
• Imaginative (might give you different ideas of what's possible)
• Way cooler (let's face it)

4.3 Software packages
On Debian-based GNU/Linux systems (e.g., Ubuntu), install any of the below packages via apt, e.g., sudo apt-get install mplayer.

Table 1. Command-line programs for playing, converting, and editing media files

Program           .deb package          Function
mplayer           mplayer               Play any media file
sndfile-info      sndfile-programs      Metadata retrieval
sndfile-convert   sndfile-programs      Bit depth conversion
sndfile-resample  samplerate-programs   Resampling
lame              lame                  MP3 encoder
flac              flac                  FLAC encoder
oggenc            vorbis-tools          Ogg Vorbis encoder
ffmpeg            ffmpeg                Media conversion tool
mencoder          mencoder              Media conversion tool
sox               sox                   Sound editor
ecasound          ecasound              Sound editor

4.4 Real-world ...

Neufuzz: Efficient Fuzzing with Deep Neural Network

Received January 15, 2019, accepted February 6, 2019, date of current version April 2, 2019. Digital Object Identifier 10.1109/ACCESS.2019.2903291. NeuFuzz: Efficient Fuzzing With Deep Neural Network. YUNCHAO WANG, ZEHUI WU, QIANG WEI, AND QINGXIAN WANG. China National Digital Switching System Engineering and Technological Research Center, Zhengzhou 450000, China. Corresponding author: Qiang Wei ([email protected]). This work was supported by National Key R&D Program of China under Grant 2017YFB0802901. ABSTRACT: Coverage-guided graybox fuzzing is one of the most popular and effective techniques for discovering vulnerabilities due to its high speed and scalability. However, the existing techniques generally focus on code coverage but not on vulnerable code. These techniques aim to cover as many paths as possible rather than to explore paths that are more likely to be vulnerable. When selecting the seeds to test, the existing fuzzers usually treat all seed inputs equally, ignoring the fact that paths exercised by different seed inputs are not equally vulnerable. This results in wasting time testing uninteresting paths rather than vulnerable paths, thus reducing the efficiency of vulnerability detection. In this paper, we present a solution, NeuFuzz, using a deep neural network to guide intelligent seed selection during graybox fuzzing to alleviate the aforementioned limitation. In particular, the deep neural network is used to learn the hidden vulnerability pattern from a large number of vulnerable and clean program paths to train a prediction model that classifies whether paths are vulnerable. The fuzzer then prioritizes seed inputs that are capable of covering paths likely to be vulnerable and assigns more mutation energy (i.e., the number of inputs to be generated) to these seeds.
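
The seed-prioritization step described in the NeuFuzz abstract above can be illustrated with a minimal Python sketch. This is not NeuFuzz's implementation: the `Seed` class, the `vulnerability_score` field (a stand-in for the neural network's prediction), the 0.5 threshold, and the energy constants are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Seed:
    data: bytes
    # Hypothetical: predicted probability in [0, 1] that the path
    # exercised by this seed is vulnerable (stand-in for a model output).
    vulnerability_score: float


def assign_energy(seed, base_energy=10, boost=4, threshold=0.5):
    """Return how many mutated inputs to generate from this seed.

    Seeds at or above the (assumed) threshold get boosted energy.
    """
    if seed.vulnerability_score >= threshold:
        return base_energy * boost
    return base_energy


def schedule(seeds):
    """Order seeds so likely-vulnerable ones come first, each with its energy."""
    ranked = sorted(seeds, key=lambda s: s.vulnerability_score, reverse=True)
    return [(s, assign_energy(s)) for s in ranked]


seeds = [Seed(b"AAAA", 0.1), Seed(b"%s%n", 0.9), Seed(b"\x00\xff", 0.5)]
plan = schedule(seeds)
for seed, energy in plan:
    print(seed.data, energy)
```

A fuzzer that treats all seeds equally would give every seed the same energy; here the two seeds scored at or above the threshold are scheduled first and receive four times the baseline.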
