Tied to the Representation of Numbers

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

MOAT004 1 Eprint Cs.DC/0306067 Computing in High Energy and Nuclear Physics, 24-28 March 2003, La Jolla, California Resources

Computing in High Energy and Nuclear Physics, 24-28 March 2003, La Jolla, California The AliEn system, status and perspectives P. Buncic Institut für Kernphysik, August-Euler-Str. 6, D-60486 Frankfurt, Germany and CERN, 1211, Geneva 23, Switzerland A. J. Peters, P. Saiz CERN, 1211, Geneva 23, Switzerland In preparation of the experiment at CERN's Large Hadron Collider (LHC), the ALICE collaboration has developed AliEn, a production environment that implements several components of the Grid paradigm needed to simulate, reconstruct and analyse data in a distributed way. Thanks to AliEn, the computing resources of a Virtual Organization can be seen and used as a single entity – any available node can execute jobs and access distributed datasets in a fully transparent way, wherever in the world a file or node might be. The system is built around Open Source components, uses the Web Services model and standard network protocols to implement the computing platform that is currently being used to produce and analyse Monte Carlo data at over 30 sites on four continents. Several other HEP experiments as well as medical projects (EU MammoGRID, INFN GP-CALMA) have expressed their interest in AliEn or some components of it. As progress is made in the definition of Grid standards and interoperability, our aim is to interface AliEn to emerging products from both Europe and the US. In particular, it is our intention to make AliEn services compatible with the Open Grid Services Architecture (OGSA). The aim of this paper is to present the current AliEn architecture and outline its future developments in the light of emerging standards. -

The What and Why of Whole Number Arithmetic: Foundational Ideas from History, Language and Societal Changes

Portland State University PDXScholar Mathematics and Statistics Faculty Publications and Presentations Fariborz Maseeh Department of Mathematics and Statistics 3-2018 The What and Why of Whole Number Arithmetic: Foundational Ideas from History, Language and Societal Changes Xu Hu Sun University of Macau Christine Chambris Université de Cergy-Pontoise Judy Sayers Stockholm University Man Keung Siu University of Hong Kong Jason Cooper Weizmann Institute of Science See next page for additional authors Follow this and additional works at: https://pdxscholar.library.pdx.edu/mth_fac Part of the Science and Mathematics Education Commons Let us know how access to this document benefits you. Citation Details Sun X.H. et al. (2018) The What and Why of Whole Number Arithmetic: Foundational Ideas from History, Language and Societal Changes. In: Bartolini Bussi M., Sun X. (eds) Building the Foundation: Whole Numbers in the Primary Grades. New ICMI Study Series. Springer, Cham This Book Chapter is brought to you for free and open access. It has been accepted for inclusion in Mathematics and Statistics Faculty Publications and Presentations by an authorized administrator of PDXScholar. Please contact us if we can make this document more accessible: [email protected].
Authors Xu Hu Sun, Christine Chambris, Judy Sayers, Man Keung Siu, Jason Cooper, Jean-Luc Dorier, Sarah Inés González de Lora Sued, Eva Thanheiser, Nadia Azrou, Lynn McGarvey, Catherine Houdement, and Lisser Rye Ejersbo This book chapter is available at PDXScholar: https://pdxscholar.library.pdx.edu/mth_fac/253 Chapter 5 The What and Why of Whole Number Arithmetic: Foundational Ideas from History, Language and Societal Changes Xu Hua Sun, Christine Chambris, Judy Sayers, Man Keung Siu, Jason Cooper, Jean-Luc Dorier, Sarah Inés González de Lora Sued, Eva Thanheiser, Nadia Azrou, Lynn McGarvey, Catherine Houdement, and Lisser Rye Ejersbo 5.1 Introduction Mathematics learning and teaching are deeply embedded in history, language and culture (e.g. -

Release 0.5.0 Will McGugan

PyFilesystem Documentation Release 0.5.0 Will McGugan Aug 09, 2017 Contents 1 Guide: 1.1 Introduction; 1.2 Getting Started; 1.3 Concepts; 1.4 Opening Filesystems; 1.5 Filesystem Interface; 1.6 Filesystems; 1.7 3rd-Party Filesystems; 1.8 Exposing FS objects; 1.9 Utility Modules; 1.10 Command Line Applications; 1.11 A Guide For Filesystem Implementers; 1.12 Release Notes. 2 Code Documentation: 2.1 fs.appdirfs; 2.2 fs.base; 2.3 fs.browsewin; 2.4 fs.contrib; 2.5 fs.errors; 2.6 fs.expose; 2.7 fs.filelike; 2.8 fs.ftpfs; 2.9 fs.httpfs; 2.10 fs.memoryfs -

Datatype Defining Rewrite Systems for Naturals and Integers

UvA-DARE (Digital Academic Repository) Datatype defining rewrite systems for naturals and integers Bergstra, J.A.; Ponse, A. DOI 10.23638/LMCS-17(1:17)2021 Publication date 2021 Document Version Final published version Published in Logical Methods in Computer Science License CC BY Link to publication Citation for published version (APA): Bergstra, J. A., & Ponse, A. (2021). Datatype defining rewrite systems for naturals and integers. Logical Methods in Computer Science, 17(1), [17]. https://doi.org/10.23638/LMCS-17(1:17)2021 General rights It is not permitted to download or to forward/distribute the text or part of it without the consent of the author(s) and/or copyright holder(s), other than for strictly personal, individual use, unless the work is under an open content license (like Creative Commons). Disclaimer/Complaints regulations If you believe that digital publication of certain material infringes any of your rights or (privacy) interests, please let the Library know, stating your reasons. In case of a legitimate complaint, the Library will make the material inaccessible and/or remove it from the website. Please Ask the Library: https://uba.uva.nl/en/contact, or a letter to: Library of the University of Amsterdam, Secretariat, Singel 425, 1012 WP Amsterdam, The Netherlands. You will be contacted as soon as possible. UvA-DARE is a service provided by the library of the University of Amsterdam (https://dare.uva.nl) Download date: 30 Sep 2021 Logical Methods in Computer Science Volume 17, Issue 1, 2021, pp. 17:1–17:31 Submitted Jan. 15, 2020 https://lmcs.episciences.org/ Published Feb. -

Experimental Methods and Instrumentation for Chemical Engineers

Experimental Methods and Instrumentation for Chemical Engineers Gregory S. Patience AMSTERDAM • BOSTON • HEIDELBERG • LONDON • NEW YORK • OXFORD • PARIS • SAN DIEGO • SAN FRANCISCO • SINGAPORE • SYDNEY • TOKYO Elsevier 225 Wyman Street, Waltham, MA 02451, USA The Boulevard, Langford Lane, Kidlington, Oxford OX5 1GB, UK Radarweg 29, PO Box 211, 1000 AE Amsterdam, The Netherlands First edition 2013 Copyright © 2013 Elsevier B.V. All rights reserved. No part of this publication may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording, or any information storage and retrieval system, without permission in writing from the publisher. Details on how to seek permission, further information about the Publisher’s permissions policies and our arrangements with organizations such as the Copyright Clearance Center and the Copyright Licensing Agency, can be found at our website: www.elsevier.com/permissions. This book and the individual contributions contained in it are protected under copyright by the Publisher (other than as may be noted herein). Notices Knowledge and best practice in this field are constantly changing. As new research and experience broaden our understanding, changes in research methods, professional practices, or medical treatment may become necessary. Practitioners and researchers must always rely on their own experience and knowledge in evaluating and using any information, methods, compounds, or experiments -

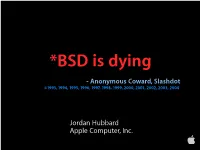

Jordan Hubbard Apple Computer, Inc. Oh Really?

*BSD is dying - Anonymous Coward, Slashdot ©1993, 1994, 1995, 1996, 1997, 1998, 1999, 2000, 2001, 2002, 2003, 2004 Jordan Hubbard Apple Computer, Inc. Oh really? Let’s look at some stats... [Chart: FreeBSD Users, 2.5 Million Server Installations (Netcraft), 1993 to 2004] [Chart: Mac OS X Users, 12 Million, Jul '01 to Oct '04] [Chart: Applications: FreeBSD ports, 1995 to 2004; data labels 209, 1,161, 2,723, 6,077, 9,662, 10,796] [Chart: Applications: Mac OS X Native, 12,000, Apr '01 to Oct '04] Since the arrival of Mac OS X, BSD has become the biggest desktop UNIX variant on the planet. Yes, even bigger than Linux. Take that, Anonymous Coward! Selective overview of Mac OS X Mac OS X Architecture Applications User Interface Application Frameworks Graphics and Media System Services OS Foundation Apple Confidential OS Foundation Usermode BSD Commands and Usermode User FileSystem Libraries Drivers Kernel BSD Kernel IOKit Driver FileSystem Network Families Process Management Drivers Mach Kernel VM Scheduling IPC Open Source “Darwin” base OS Foundation Usermode BSD Commands and Usermode User FileSystem Libraries Drivers Kernel BSD Kernel IOKit Driver FileSystem Network Families Process Management Drivers Mach Kernel VM Scheduling IPC BSD Kernel • FreeBSD 5.1 based (networking, vfs, filesystems, etc) • Unified Buffer Cache (different -

Creating User-Mode Device Drivers with a Proxy Galen C

Creating User-Mode Device Drivers with a Proxy Galen C. Hunt [email protected] Department of Computer Science University of Rochester Rochester, NY 14627-0226 Abstract Writing Windows NT device drivers can be a daunting task. Device drivers must be fully re-entrant, must use only limited resources and must be created with special development environments. Executing device drivers in user-mode offers significant coding advantages. User-mode device drivers have access to all user-mode libraries and applications. They can be developed using standard development tools and debugged on a single machine. Using the Proxy Driver to retrieve I/O requests from the kernel, user-mode drivers can export full device services to the kernel and applications. User-mode device drivers offer enormous flexibility for emulating devices and experimenting with new file systems. Experimental results show that in many cases, the overhead of moving to user-mode for … Writing device drivers is complicated because drivers operate as kernel-mode programs. Device drivers have limited access to other OS services and must be conscious of kernel paging demands. Debugging kernel-mode device drivers normally requires two machines: one for the driver and another for the debugger. Device drivers run in kernel mode to optimize system performance. Kernel-mode device drivers have full access to hardware resources and the data of user-mode programs. From kernel mode, device drivers can exploit operations such as DMA and page sharing to transfer data directly into application address spaces. Device drivers are placed in the kernel to minimize the number of times the CPU must cross the user/kernel boundary. -

File Organization and Management Com 214 Pdf

File organization and management com 214 pdf UNESCO-NIGERIA TECHNICAL - VOCATIONAL EDUCATION REVITALISATION PROJECT-PHASE II NATIONAL DIPLOMA IN COMPUTER TECHNOLOGY FILE ORGANIZATION AND MANAGEMENT YEAR II- SEMESTER I THEORY Version 1: December 2 2 Content Table WEEK 1 File Concepts... 6 Bit:... 7 Binary figure... 8 Representation... 9 Transmission... 9 Storage... 9 Storage unit... 9 Abbreviation and symbol More than one bit, trit, dontcare, what? RfC on trivial bits Alternative Words WEEK 2 WEEK 3 Identification and File File System Aspects of File Systems File Names Metadata Hierarchical File Systems Means Secure Access WEEK 6 Types of File Systems Disk File Systems File Systems File Systems Transactional Systems File Systems Network File Systems Special Purpose File Systems File Systems and Operating Systems Flat File Systems File Systems according to Unix-like Operating Systems File Systems according to Plan 9 from Bell Files under Microsoft Windows WEEK 7 File Storage Backup Files Purpose Storage Primary Storage Secondary Storage Third Storage Out Storage Features Storage Volatility Volatility UncertaintyAbility Availability Availability Performance Key Storage Technology Semiconductor Magnetic Paper Unusual Related Technology Connecting Network Connection Robotic Processing Robotic Processing File Processing Activity Technology Execution Program interrupts secure mode and memory control mode Virtual Memory Operating Systems Linux and UNIX Microsoft Windows Mac OS X Special File Systems Journalized File Systems Graphic User Interfaces History Mainframes Microcomputers Microsoft Windows Plan Unix and Unix-like operating systems Mac OS X Real-time Operating Systems Built-in Core Development Hobby Systems Pre-Emptification WEEK 1 THIS WEEK SPECIFIC LEARNING OUTCOMES To understand: The concept of the file in the computing concept, field, character, byte and bits in relation to File Concept Files In this section, we will deal with the concepts of the file and their relationship. -

Pyfilesystem Documentation Release 2.0.21

PyFilesystem Documentation Release 2.0.21 Will McGugan May 12, 2018 Contents 1 Introduction: 1.1 Installing. 2 Guide: 2.1 Why use PyFilesystem?; 2.2 Opening Filesystems; 2.3 Tree Printing; 2.4 Closing; 2.5 Directory Information; 2.6 Sub Directories; 2.7 Working with Files; 2.8 Walking; 2.9 Moving and Copying. 3 Concepts: 3.1 Paths; 3.2 System Paths; 3.3 Sandboxing; 3.4 Errors. 4 Resource Info: 4.1 Info Objects; 4.2 Namespaces; 4.3 Missing Namespaces; 4.4 Raw Info. 5 FS URLs: 5.1 Format; 5.2 URL Parameters; 5.3 Opening FS URLS. 6 Walking: 6.1 Walk -

The Evolution of File Systems

The Evolution of File Systems Thomas Rivera, Hitachi Data Systems Craig Harmer, April 2011 SNIA Legal Notice The material contained in this tutorial is copyrighted by the SNIA. Member companies and individuals may use this material in presentations and literature under the following conditions: Any slide or slides used must be reproduced without modification. The SNIA must be acknowledged as source of any material used in the body of any document containing material from these presentations. This presentation is a project of the SNIA Education Committee. Neither the Author nor the Presenter is an attorney and nothing in this presentation is intended to be nor should be construed as legal advice or opinion. If you need legal advice or legal opinion please contact an attorney. The information presented herein represents the Author's personal opinion and current understanding of the issues involved. The Author, the Presenter, and the SNIA do not assume any responsibility or liability for damages arising out of any reliance on or use of this information. NO WARRANTIES, EXPRESS OR IMPLIED. USE AT YOUR OWN RISK. The Evolution of File Systems © 2012 Storage Networking Industry Association. All Rights Reserved. Abstract The File Systems Evolution Over time additional file systems appeared focusing on specialized requirements such as: data sharing, remote file access, distributed file access, parallel files access, HPC, archiving, security, etc. Due to the dramatic growth of unstructured data, files as the basic units for data containers are morphing into file objects, providing more semantics and feature-rich capabilities for content processing. This presentation will: Categorize and explain the basic principles of currently available file system architectures (e.g. -

Parallel Branch-And-Bound Revisited for Solving Permutation Combinatorial Optimization Problems on Multi-Core Processors and Coprocessors Rudi Leroy

Parallel Branch-and-Bound revisited for solving permutation combinatorial optimization problems on multi-core processors and coprocessors Rudi Leroy To cite this version: Rudi Leroy. Parallel Branch-and-Bound revisited for solving permutation combinatorial optimization problems on multi-core processors and coprocessors. Distributed, Parallel, and Cluster Computing [cs.DC]. Université Lille 1, 2015. English. tel-01248563 HAL Id: tel-01248563 https://hal.inria.fr/tel-01248563 Submitted on 27 Dec 2015 HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Ecole Doctorale Sciences Pour l’Ingénieur Université Lille 1 Nord-de-France Centre de Recherche en Informatique, Signal et Automatique de Lille (UMR CNRS 9189) Centre de Recherche INRIA Lille Nord Europe Maison de la Simulation Thesis presented to obtain the degree of Doctor. Discipline: Computer Science. Parallel Branch-and-Bound revisited for solving permutation combinatorial optimization problems on multi-core processors and coprocessors. Defended by: Rudi Leroy, November 2012 - November 2015. Before the jury -

The File Systems Evolution

The File Systems Evolution Christian Bandulet Principal Engineer, Sun Microsystems SNIA Legal Notice The material contained in this tutorial is copyrighted by the SNIA. Member companies and individuals may use this material in presentations and literature under the following conditions: Any slide or slides used must be reproduced without modification. The SNIA must be acknowledged as source of any material used in the body of any document containing material from these presentations. This presentation is a project of the SNIA Education Committee. Neither the Author nor the Presenter is an attorney and nothing in this presentation is intended to be nor should be construed as legal advice or opinion. If you need legal advice or legal opinion please contact an attorney. The information presented herein represents the Author's personal opinion and current understanding of the issues involved. The Author, the Presenter, and the SNIA do not assume any responsibility or liability for damages arising out of any reliance on or use of this information. NO WARRANTIES, EXPRESS OR IMPLIED. USE AT YOUR OWN RISK. The File Systems Evolution © 2009 Storage Networking Industry Association. All Rights Reserved. Abstract The File Systems Evolution File Systems impose structure on the address space of one or more physical or virtual devices. Starting with local file systems, over time additional file systems appeared focusing on specialized requirements such as data sharing, remote file access, distributed file access, parallel files access, HPC, archiving, security, etc. Due to the dramatic growth of unstructured data, files as the basic units for data containers are morphing into file objects, providing more semantics and feature-rich capabilities for content processing.