The Evolution of File Systems

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

24 Bit 96 Khz Digital Audio Workstation Using High Performance Be Operating System on a Multiprocessor Intel Machine

24 bit 96 kHz Digital Audio Workstation using high performance Be Operating System on a multiprocessor Intel machine by: Michal Jurewicz - Mytek, Inc., New York, NY, USA Timothy Self - Be, Inc., Menlo Park, CA, USA ABSTRACT Digital Audio Workstation (DAW) has quickly established itself as the most important digital audio production tool. With the advent of high-resolution multi-channel audio formats and Internet audio exchange, the high performance and flawless operations of the desktop DAW have become a necessity. The authors explaining why current popular computer architectures are not suited to these new tasks, explore the possibilities of the new Be Operating System (BeOS)- specifically designed and optimized to handle digital audio and video. New features, unattainable with current operating systems, are discussed. 1. Introduction Ever increasing performance of computers has caused a gradual migration of the key audio production tools from hardware embodiments to the virtual world of computers. This trend will continue, propelled by bottom line economics and the appearance of new features such as network audio exchange. Although current computer hardware is up to the task, existing general purpose operating systems are the actual performance bottleneck . Designed over 10 years ago for general purpose computing, they fail to meet increasing demands for speed and file size. The new BeOS has been designed from ground up to handle high bandwidth digital audio and video in a modern multiprocessing and multitasking environment. The paper focuses solely on the use of commodity personal computers (IBM Compatibles and Apple) and their operating systems (Windows, MacOS, BeOS and Linux). Although number of specialized platforms such as SGI provides superior performance, they were omitted, as their presence in the current professional audio environment is minimal. -

Copy on Write Based File Systems Performance Analysis and Implementation

Copy On Write Based File Systems Performance Analysis And Implementation Sakis Kasampalis Kongens Lyngby 2010 IMM-MSC-2010-63 Technical University of Denmark Department Of Informatics Building 321, DK-2800 Kongens Lyngby, Denmark Phone +45 45253351, Fax +45 45882673 [email protected] www.imm.dtu.dk Abstract In this work I am focusing on Copy On Write based file systems. Copy On Write is used on modern file systems for providing (1) metadata and data consistency using transactional semantics, (2) cheap and instant backups using snapshots and clones. This thesis is divided into two main parts. The first part focuses on the design and performance of Copy On Write based file systems. Recent efforts aiming at creating a Copy On Write based file system are ZFS, Btrfs, ext3cow, Hammer, and LLFS. My work focuses only on ZFS and Btrfs, since they support the most advanced features. The main goals of ZFS and Btrfs are to offer a scalable, fault tolerant, and easy to administrate file system. I evaluate the performance and scalability of ZFS and Btrfs. The evaluation includes studying their design and testing their performance and scalability against a set of recommended file system benchmarks. Most computers are already based on multi-core and multiple processor architec- tures. Because of that, the need for using concurrent programming models has increased. Transactions can be very helpful for supporting concurrent program- ming models, which ensure that system updates are consistent. Unfortunately, the majority of operating systems and file systems either do not support trans- actions at all, or they simply do not expose them to the users. -

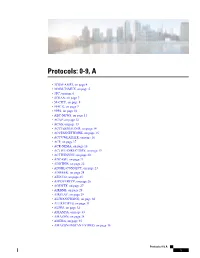

Protocols: 0-9, A

Protocols: 0-9, A • 3COM-AMP3, on page 4 • 3COM-TSMUX, on page 5 • 3PC, on page 6 • 4CHAN, on page 7 • 58-CITY, on page 8 • 914C G, on page 9 • 9PFS, on page 10 • ABC-NEWS, on page 11 • ACAP, on page 12 • ACAS, on page 13 • ACCESSBUILDER, on page 14 • ACCESSNETWORK, on page 15 • ACCUWEATHER, on page 16 • ACP, on page 17 • ACR-NEMA, on page 18 • ACTIVE-DIRECTORY, on page 19 • ACTIVESYNC, on page 20 • ADCASH, on page 21 • ADDTHIS, on page 22 • ADOBE-CONNECT, on page 23 • ADWEEK, on page 24 • AED-512, on page 25 • AFPOVERTCP, on page 26 • AGENTX, on page 27 • AIRBNB, on page 28 • AIRPLAY, on page 29 • ALIWANGWANG, on page 30 • ALLRECIPES, on page 31 • ALPES, on page 32 • AMANDA, on page 33 • AMAZON, on page 34 • AMEBA, on page 35 • AMAZON-INSTANT-VIDEO, on page 36 Protocols: 0-9, A 1 Protocols: 0-9, A • AMAZON-WEB-SERVICES, on page 37 • AMERICAN-EXPRESS, on page 38 • AMINET, on page 39 • AN, on page 40 • ANCESTRY-COM, on page 41 • ANDROID-UPDATES, on page 42 • ANET, on page 43 • ANSANOTIFY, on page 44 • ANSATRADER, on page 45 • ANY-HOST-INTERNAL, on page 46 • AODV, on page 47 • AOL-MESSENGER, on page 48 • AOL-MESSENGER-AUDIO, on page 49 • AOL-MESSENGER-FT, on page 50 • AOL-MESSENGER-VIDEO, on page 51 • AOL-PROTOCOL, on page 52 • APC-POWERCHUTE, on page 53 • APERTUS-LDP, on page 54 • APPLEJUICE, on page 55 • APPLE-APP-STORE, on page 56 • APPLE-IOS-UPDATES, on page 57 • APPLE-REMOTE-DESKTOP, on page 58 • APPLE-SERVICES, on page 59 • APPLE-TV-UPDATES, on page 60 • APPLEQTC, on page 61 • APPLEQTCSRVR, on page 62 • APPLIX, on page 63 • ARCISDMS, -

Early Experiences with Storage Area Networks and CXFS John Lynch

Early Experiences with Storage Area Networks and CXFS John Lynch Aerojet 6304 Spine Road Boulder CO 80516 Abstract This paper looks at the design, integration and application issues involved in deploying an early access, very large, and highly available storage area network. Covered are topics from filesystem failover, issues regarding numbers of nodes in a cluster, and using leading edge solutions to solve complex issues in a real-time data processing network. 1 Introduction SAN technology can be categorized in two distinct approaches. Both Aerojet designed and installed a highly approaches use the storage area network available, large scale Storage Area to provide access to multiple storage Network over spring of 2000. This devices at the same time by one or system due to it size and diversity is multiple hosts. The difference is how known to be one of a kind and is the storage devices are accessed. currently not offered by SGI, but would serve as a prototype system. The most common approach allows the hosts to access the storage devices across The project’s goal was to evaluate Fibre the storage area network but filesystems Channel and SAN technology for its are not shared. This allows either a benefits and applicability in a second- single host to stripe data across a greater generation, real-time data processing number of storage controllers, or to network. SAN technology seemed to be share storage controllers among several the technology of the future to replace systems. This essentially breaks up a the traditional SCSI solution. large storage system into smaller distinct pieces, but allows for the cost-sharing of The approach was to conduct and the most expensive component, the evaluation of SAN technology as a storage controller. -

CXFSTM Client-Only Guide for SGI® Infinitestorage

CXFSTM Client-Only Guide for SGI® InfiniteStorage 007–4507–016 COPYRIGHT © 2002–2008 SGI. All rights reserved; provided portions may be copyright in third parties, as indicated elsewhere herein. No permission is granted to copy, distribute, or create derivative works from the contents of this electronic documentation in any manner, in whole or in part, without the prior written permission of SGI. LIMITED RIGHTS LEGEND The software described in this document is "commercial computer software" provided with restricted rights (except as to included open/free source) as specified in the FAR 52.227-19 and/or the DFAR 227.7202, or successive sections. Use beyond license provisions is a violation of worldwide intellectual property laws, treaties and conventions. This document is provided with limited rights as defined in 52.227-14. TRADEMARKS AND ATTRIBUTIONS SGI, Altix, the SGI cube and the SGI logo are registered trademarks and CXFS, FailSafe, IRIS FailSafe, SGI ProPack, and Trusted IRIX are trademarks of SGI in the United States and/or other countries worldwide. Active Directory, Microsoft, Windows, and Windows NT are registered trademarks or trademarks of Microsoft Corporation in the United States and/or other countries. AIX and IBM are registered trademarks of IBM Corporation. Brocade and Silkworm are trademarks of Brocade Communication Systems, Inc. AMD, AMD Athlon, AMD Duron, and AMD Opteron are trademarks of Advanced Micro Devices, Inc. Apple, Mac, Mac OS, Power Mac, and Xserve are registered trademarks of Apple Computer, Inc. Disk Manager is a registered trademark of ONTRACK Data International, Inc. Engenio, LSI Logic, and SANshare are trademarks or registered trademarks of LSI Corporation. -

Vorlesung-Print.Pdf

1 Betriebssysteme Prof. Dipl.-Ing. Klaus Knopper (C) 2019 <[email protected]> Live GNU/Linux System Schwarz: Transparent,KNOPPIX CD−Hintergrundfarbe (silber) bei Zweifarbdruck, sonst schwarz. Vorlesung an der DHBW Karlsruhe im Sommersemester 2019 Organisatorisches + Vorlesung mit Ubungen¨ Betriebssysteme WWI17B2 jeweils Montags (einzelne Termine) in A369 + http://knopper.net/bs/ (spater¨ moodle) Folie 1 Kursziel µ Grundsatzlichen¨ Aufbau von Betriebssystemen in Theorie und Praxis kennen und verstehen, µ grundlegende Konzepte von Multitasking, Multiuser-Betrieb und Hardware-Unterstutzung¨ / Resource-Sharing erklaren¨ konnen,¨ µ Sicherheitsfragen und Risiken des Ubiquitous und Mobile Computing auf Betriebssystemebene analysieren, µ mit heterogenen Betriebssystemumgebungen und Virtua- lisierung arbeiten, Kompatibilitatsprobleme¨ erkennen und losen.¨ Folie 2 0 Themen (Top-Down) + Ubersicht¨ Betriebssysteme und Anwendungen, Unterschiede in Aufbau und Einsatz, Lizenzen, Distributionen, + GNU/Linux als OSS-Lernsystem fur¨ die Vorlesung, Tracing und Analyse des Bootvorgangs, + User Interface(s), + Dateisystem: VFS, reale Implementierungen, + Multitasking: Scheduler, Interrupts, Speicherverwaltung (VM), Prozessverwaltung (Timesharing), + Multiuser: Benutzerverwaltung, Rechtesystem, + Hardware-Unterstutzung:¨ Kernel und Module vs. Treiber“ - Kon- ” zept, + Kompatibilitat,¨ API-Emulation, Virtualisierung, Softwareentwick- lung. + Sicherheits-Aspekte von Betriebssystemen, Schadsoftware“ und ” forensische Analyse bei Kompromittierung oder Datenverlust. -

Persistent 9P Sessions for Plan 9

Persistent 9P Sessions for Plan 9 Gorka Guardiola, [email protected] Russ Cox, [email protected] Eric Van Hensbergen, [email protected] ABSTRACT Traditionally, Plan 9 [5] runs mainly on local networks, where lost connections are rare. As a result, most programs, including the kernel, do not bother to plan for their file server connections to fail. These programs must be restarted when a connection does fail. If the kernel’s connection to the root file server fails, the machine must be rebooted. This approach suffices only because lost connections are rare. Across long distance networks, where connection failures are more common, it becomes woefully inadequate. To address this problem, we wrote a program called recover, which proxies a 9P session on behalf of a client and takes care of redialing the remote server and reestablishing con- nection state as necessary, hiding network failures from the client. This paper presents the design and implementation of recover, along with performance benchmarks on Plan 9 and on Linux. 1. Introduction Plan 9 is a distributed system developed at Bell Labs [5]. Resources in Plan 9 are presented as synthetic file systems served to clients via 9P, a simple file protocol. Unlike file protocols such as NFS, 9P is stateful: per-connection state such as which files are opened by which clients is maintained by servers. Maintaining per-connection state allows 9P to be used for resources with sophisticated access control poli- cies, such as exclusive-use lock files and chat session multiplexers. It also makes servers easier to imple- ment, since they can forget about file ids once a connection is lost. -

Ebook - Informations About Operating Systems Version: August 15, 2006 | Download

eBook - Informations about Operating Systems Version: August 15, 2006 | Download: www.operating-system.org AIX Internet: AIX AmigaOS Internet: AmigaOS AtheOS Internet: AtheOS BeIA Internet: BeIA BeOS Internet: BeOS BSDi Internet: BSDi CP/M Internet: CP/M Darwin Internet: Darwin EPOC Internet: EPOC FreeBSD Internet: FreeBSD HP-UX Internet: HP-UX Hurd Internet: Hurd Inferno Internet: Inferno IRIX Internet: IRIX JavaOS Internet: JavaOS LFS Internet: LFS Linspire Internet: Linspire Linux Internet: Linux MacOS Internet: MacOS Minix Internet: Minix MorphOS Internet: MorphOS MS-DOS Internet: MS-DOS MVS Internet: MVS NetBSD Internet: NetBSD NetWare Internet: NetWare Newdeal Internet: Newdeal NEXTSTEP Internet: NEXTSTEP OpenBSD Internet: OpenBSD OS/2 Internet: OS/2 Further operating systems Internet: Further operating systems PalmOS Internet: PalmOS Plan9 Internet: Plan9 QNX Internet: QNX RiscOS Internet: RiscOS Solaris Internet: Solaris SuSE Linux Internet: SuSE Linux Unicos Internet: Unicos Unix Internet: Unix Unixware Internet: Unixware Windows 2000 Internet: Windows 2000 Windows 3.11 Internet: Windows 3.11 Windows 95 Internet: Windows 95 Windows 98 Internet: Windows 98 Windows CE Internet: Windows CE Windows Family Internet: Windows Family Windows ME Internet: Windows ME Seite 1 von 138 eBook - Informations about Operating Systems Version: August 15, 2006 | Download: www.operating-system.org Windows NT 3.1 Internet: Windows NT 3.1 Windows NT 4.0 Internet: Windows NT 4.0 Windows Server 2003 Internet: Windows Server 2003 Windows Vista Internet: Windows Vista Windows XP Internet: Windows XP Apple - Company Internet: Apple - Company AT&T - Company Internet: AT&T - Company Be Inc. - Company Internet: Be Inc. - Company BSD Family Internet: BSD Family Cray Inc. -

Globalfs: a Strongly Consistent Multi-Site File System

GlobalFS: A Strongly Consistent Multi-Site File System Leandro Pacheco Raluca Halalai Valerio Schiavoni University of Lugano University of Neuchatelˆ University of Neuchatelˆ Fernando Pedone Etienne Riviere` Pascal Felber University of Lugano University of Neuchatelˆ University of Neuchatelˆ Abstract consistency, availability, and tolerance to partitions. Our goal is to ensure strongly consistent file system operations This paper introduces GlobalFS, a POSIX-compliant despite node failures, at the price of possibly reduced geographically distributed file system. GlobalFS builds availability in the event of a network partition. Weak on two fundamental building blocks, an atomic multicast consistency is suitable for domain-specific applications group communication abstraction and multiple instances of where programmers can anticipate and provide resolution a single-site data store. We define four execution modes and methods for conflicts, or work with last-writer-wins show how all file system operations can be implemented resolution methods. Our rationale is that for general-purpose with these modes while ensuring strong consistency and services such as a file system, strong consistency is more tolerating failures. We describe the GlobalFS prototype in appropriate as it is both more intuitive for the users and detail and report on an extensive performance assessment. does not require human intervention in case of conflicts. We have deployed GlobalFS across all EC2 regions and Strong consistency requires ordering commands across show that the system scales geographically, providing replicas, which needs coordination among nodes at performance comparable to other state-of-the-art distributed geographically distributed sites (i.e., regions). Designing file systems for local commands and allowing for strongly strongly consistent distributed systems that provide good consistent operations over the whole system. -

Andrew File System (AFS) Google File System February 5, 2004

Advanced Topics in Computer Systems, CS262B Prof Eric A. Brewer Andrew File System (AFS) Google File System February 5, 2004 I. AFS Goal: large-scale campus wide file system (5000 nodes) o must be scalable, limit work of core servers o good performance o meet FS consistency requirements (?) o managable system admin (despite scale) 400 users in the “prototype” -- a great reality check (makes the conclusions meaningful) o most applications work w/o relinking or recompiling Clients: o user-level process, Venus, that handles local caching, + FS interposition to catch all requests o interaction with servers only on file open/close (implies whole-file caching) o always check cache copy on open() (in prototype) Vice (servers): o Server core is trusted; called “Vice” o servers have one process per active client o shared data among processes only via file system (!) o lock process serializes and manages all lock/unlock requests o read-only replication of namespace (centralized updates with slow propagation) o prototype supported about 20 active clients per server, goal was >50 Revised client cache: o keep data cache on disk, metadata cache in memory o still whole file caching, changes written back only on close o directory updates are write through, but cached locally for reads o instead of check on open(), assume valid unless you get an invalidation callback (server must invalidate all copies before committing an update) o allows name translation to be local (since you can now avoid round-trip for each step of the path) Revised servers: 1 o move -

High Velocity Kernel File Systems with Bento

High Velocity Kernel File Systems with Bento Samantha Miller, Kaiyuan Zhang, Mengqi Chen, and Ryan Jennings, University of Washington; Ang Chen, Rice University; Danyang Zhuo, Duke University; Thomas Anderson, University of Washington https://www.usenix.org/conference/fast21/presentation/miller This paper is included in the Proceedings of the 19th USENIX Conference on File and Storage Technologies. February 23–25, 2021 978-1-939133-20-5 Open access to the Proceedings of the 19th USENIX Conference on File and Storage Technologies is sponsored by USENIX. High Velocity Kernel File Systems with Bento Samantha Miller Kaiyuan Zhang Mengqi Chen Ryan Jennings Ang Chen‡ Danyang Zhuo† Thomas Anderson University of Washington †Duke University ‡Rice University Abstract kernel-level debuggers and kernel testing frameworks makes this worse. The restricted and different kernel programming High development velocity is critical for modern systems. environment also limits the number of trained developers. This is especially true for Linux file systems which are seeing Finally, upgrading a kernel module requires either rebooting increased pressure from new storage devices and new demands the machine or restarting the relevant module, either way on storage systems. However, high velocity Linux kernel rendering the machine unavailable during the upgrade. In the development is challenging due to the ease of introducing cloud setting, this forces kernel upgrades to be batched to meet bugs, the difficulty of testing and debugging, and the lack of cloud-level availability goals. support for redeployment without service disruption. Existing Slow development cycles are a particular problem for file approaches to high-velocity development of file systems for systems. -

A Survey of Distributed File Systems

A Survey of Distributed File Systems M. Satyanarayanan Department of Computer Science Carnegie Mellon University February 1989 Abstract Abstract This paper is a survey of the current state of the art in the design and implementation of distributed file systems. It consists of four major parts: an overview of background material, case studies of a number of contemporary file systems, identification of key design techniques, and an examination of current research issues. The systems surveyed are Sun NFS, Apollo Domain, Andrew, IBM AIX DS, AT&T RFS, and Sprite. The coverage of background material includes a taxonomy of file system issues, a brief history of distributed file systems, and a summary of empirical research on file properties. A comprehensive bibliography forms an important of the paper. Copyright (C) 1988,1989 M. Satyanarayanan The author was supported in the writing of this paper by the National Science Foundation (Contract No. CCR-8657907), Defense Advanced Research Projects Agency (Order No. 4976, Contract F33615-84-K-1520) and the IBM Corporation (Faculty Development Award). The views and conclusions in this document are those of the author and do not represent the official policies of the funding agencies or Carnegie Mellon University. 1 1. Introduction The sharing of data in distributed systems is already common and will become pervasive as these systems grow in scale and importance. Each user in a distributed system is potentially a creator as well as a consumer of data. A user may wish to make his actions contingent upon information from a remote site, or may wish to update remote information.