Quantum Machine Learning Algorithms: Read the Fine Print

Recommended publications

Quantum Computing Overview

Quantum Computing at Microsoft. Stephen Jordan.
• As of November 2018: 60 entries, 392 references.
• Major new primitives discovered only every few years.
Simulation is a killer app for quantum computing with a solid theoretical foundation: Chemistry, Materials, Nuclear and Particle Physics. (Images: David Parker, U. Birmingham; CERN.) Many problems are out of reach even for exascale supercomputers but doable on quantum computers.
Understanding biological nitrogen fixation: intractable on classical supercomputers, but a 200-qubit quantum computer will let us understand it.
Finding the ground state of ferredoxin (Fe₂S₂): classical algorithm, INTRACTABLE; quantum algorithm 2012, ~3000 YEARS; quantum algorithm 2015, ~1 DAY. Research on quantum algorithms and software is essential!
Quantum algorithm in high level language (Q#) → Compiler → Machine level instructions.
Microsoft Quantum Development Kit: quantum programming language; Visual Studio integration and debugging; local and cloud quantum simulation; extensive libraries, samples, and documentation.
Developing quantum applications: 1. Find quantum algorithm with quantum speedup. 2. Confirm quantum speedup after implementing all oracles. 3. Optimize code until runtime is short enough. 4. Embed into specific hardware estimate runtime.
Simulating Quantum Field Theories: Classically. Feynman diagrams: break down at strong coupling or high precision. Lattice methods: good for binding energies. Sign problem: real time dynamics, high fermion density. (Images: Encyclopedia of Physics; R. Babich et al.) There’s room for exponential -

Simulating Quantum Field Theory with a Quantum Computer

Simulating quantum field theory with a quantum computer. John Preskill, Lattice 2018, 28 July 2018.
This talk has two parts: (1) Near-term prospects for quantum computing. (2) Opportunities in quantum simulation of quantum field theory.
Exascale digital computers will advance our knowledge of QCD, but some challenges will remain, especially concerning real-time evolution and properties of nuclear matter and quark-gluon plasma at nonzero temperature and chemical potential. Digital computers may never be able to address these (and other) problems; quantum computers will solve them eventually, though I’m not sure when. The physics payoff may still be far away, but today’s research can hasten the arrival of a new era in which quantum simulation fuels progress in fundamental physics.
Frontiers of Physics. Short distance: Higgs boson, neutrino masses, supersymmetry, quantum gravity, string theory. Long distance: large scale structure, cosmic microwave background, dark matter, dark energy, gravitational waves. Complexity: “More is different”, many-body entanglement, phases of quantum matter, quantum computing, quantum spacetime.
A quantum computer can simulate efficiently any physical process that occurs in Nature. (Maybe. We don’t actually know for sure.) Pictured: particle collision, molecular chemistry, entangled electrons, superconductor, black hole, early universe.
Two fundamental ideas: (1) Quantum complexity: why we think quantum computing is powerful. (2) Quantum error correction: why we think quantum computing is scalable.
A complete description of a typical quantum state of just 300 qubits requires more bits than the number of atoms in the visible universe.
Why we think quantum computing is powerful: We know examples of problems that can be solved efficiently by a quantum computer, where we believe the problems are hard for classical computers. -
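The 300-qubit claim in the excerpt above can be checked with a little arithmetic. The sketch below is not taken from the talk: it assumes the commonly quoted rough figure of 10^80 atoms in the visible universe, and the names `amplitude_count` and `ATOMS_IN_VISIBLE_UNIVERSE` are illustrative only.

```python
# A general n-qubit pure state is described by 2**n complex amplitudes.
def amplitude_count(n_qubits: int) -> int:
    """Number of complex amplitudes in a general n-qubit state vector."""
    return 2 ** n_qubits


# Assumption: a rough, commonly quoted order-of-magnitude estimate.
ATOMS_IN_VISIBLE_UNIVERSE = 10 ** 80

count = amplitude_count(300)

# Even before deciding how many bits each amplitude needs, the number of
# amplitudes alone already exceeds the assumed atom count.
print(f"2**300 has {len(str(count))} decimal digits")
print("exceeds atom estimate:", count > ATOMS_IN_VISIBLE_UNIVERSE)
```

Python integers have arbitrary precision, so the comparison is exact rather than a floating-point approximation.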

(CST Part II) Lecture 14: Fault Tolerant Quantum Computing

Quantum Computing (CST Part II) Lecture 14: Fault Tolerant Quantum Computing. “The history of the universe is, in effect, a huge and ongoing quantum computation. The universe is a quantum computer.” (Seth Lloyd)
Resources for this lecture: Nielsen and Chuang p474-495 covers the material of this lecture.
Why we need fault tolerance: Classical computers perform complicated operations where bits are repeatedly “combined” in computations, therefore if an error occurs, it could in principle propagate to a huge number of other bits. Fortunately, in modern digital computers errors are so phenomenally unlikely that we can forget about this possibility for all practical purposes. Errors do, however, occur in telecommunications systems, but as the purpose of these is the simple transmittal of some information, it suffices to perform error correction on the final received data. In a sense, quantum computing is the worst of both of these worlds: errors do occur with significant frequency, and if uncorrected they will propagate, rendering the computation useless. Thus the solution is that we must correct errors as we go along.
Fault tolerant quantum computing set-up: For fault tolerant quantum computing, we use encoded qubits, rather than physical qubits. For example we may use the 7-qubit Steane code to represent each logical qubit in the computation. We use fault tolerant quantum gates, which are defined such that a single error in the fault tolerant gate propagates to at most one error in each encoded block of qubits. By a “block of qubits”, we mean (for example) each block of 7 physical qubits that represents a logical qubit using the Steane code. -

Quantum Clustering Algorithms

Quantum Clustering Algorithms. Esma Aïmeur [email protected], Gilles Brassard [email protected], Sébastien Gambs [email protected]. Université de Montréal, Département d’informatique et de recherche opérationnelle, C.P. 6128, Succursale Centre-Ville, Montréal (Québec), H3C 3J7 Canada.
Abstract: By the term “quantization”, we refer to the process of using quantum mechanics in order to improve a classical algorithm, usually by making it go faster. In this paper, we initiate the idea of quantizing clustering algorithms by using variations on a celebrated quantum algorithm due to Grover. After having introduced this novel approach to unsupervised learning, we illustrate it with a quantized version of three standard algorithms: divisive clustering, k-medians and an algorithm for the construction of a neighbourhood graph. We obtain a significant speedup compared to the classical approach.
1. Multidisciplinary by nature, Quantum Information Processing (QIP) is at the crossroads of computer science, mathematics, physics and engineering. It concerns the implications of quantum mechanics for information processing purposes (Nielsen & Chuang, 2000). Quantum information is very different from its classical counterpart: it cannot be measured reliably and it is disturbed by observation, but it can exist in a superposition of classical states. Classical and quantum information can be used together to realize wonders that are out of reach of classical information processing alone, such as being able to factorize efficiently large numbers, with dramatic cryptographic consequences (Shor, 1997), search in a unstructured database with a quadratic speedup compared to the best possible classical algorithms (Grover, 1997) and allow two people to communicate in perfect secrecy under the nose of an -
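To give a feel for the quadratic speedup attributed to Grover's algorithm in the excerpt above, the sketch below compares rough query counts for unstructured search over N items. It is not from the paper: it assumes a single marked item and uses the standard textbook estimate of about (π/4)·√N Grover iterations; the function names are hypothetical.

```python
import math


def classical_queries_worst_case(n_items: int) -> int:
    """A classical scan of an unstructured list inspects up to N entries."""
    return n_items


def grover_iterations(n_items: int) -> int:
    """Textbook estimate of Grover iterations for one marked item.

    Assumption: exactly one marked item, iteration count floor(pi/4 * sqrt(N)).
    """
    return math.floor(math.pi / 4 * math.sqrt(n_items))


for n in (10**2, 10**4, 10**6):
    print(n, classical_queries_worst_case(n), grover_iterations(n))
```

The gap grows with the square root of N, which is a quadratic (not exponential) improvement.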

Quantum Machine Learning: Benefits and Practical Examples

Quantum Machine Learning: Benefits and Practical Examples. Frank Phillipson1[0000-0003-4580-7521] 1 TNO, Anna van Buerenplein 1, 2595 DA Den Haag, The Netherlands [email protected] Abstract. A quantum computer that is useful in practice is expected to be developed in the next few years. An important application is expected to be machine learning, where benefits are expected on run time, capacity and learning efficiency. In this paper, these benefits are presented and for each benefit an example application is presented. A quantum hybrid Helmholtz machine uses quantum sampling to improve run time, a quantum Hopfield neural network shows an improved capacity and a variational quantum circuit based neural network is expected to deliver a higher learning efficiency. Keywords: Quantum Machine Learning, Quantum Computing, Near Future Quantum Applications. 1 Introduction Quantum computers make use of quantum-mechanical phenomena, such as superposition and entanglement, to perform operations on data [1]. Where classical computers require the data to be encoded into binary digits (bits), each of which is always in one of two definite states (0 or 1), quantum computation uses quantum bits, which can be in superpositions of states. These computers would theoretically be able to solve certain problems much more quickly than any classical computer that uses even the best currently known algorithms. Examples are integer factorization using Shor's algorithm or the simulation of quantum many-body systems. This benefit is also called ‘quantum supremacy’ [2], which only recently has been claimed for the first time [3]. There are two different quantum computing paradigms. -

COVID-19 Detection on IBM Quantum Computer with Classical-Quantum Transfer Learning

medRxiv preprint doi: https://doi.org/10.1101/2020.11.07.20227306; this version posted November 10, 2020. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted medRxiv a license to display the preprint in perpetuity. It is made available under a CC-BY-NC-ND 4.0 International license. Turk J Elec Eng & Comp Sci, © TÜBİTAK, doi:10.3906/elk- COVID-19 detection on IBM quantum computer with classical-quantum transfer learning. Erdi ACAR1*, İhsan YILMAZ2. 1Department of Computer Engineering, Institute of Science, Çanakkale Onsekiz Mart University, Çanakkale, Turkey. 2Department of Computer Engineering, Faculty of Engineering, Çanakkale Onsekiz Mart University, Çanakkale, Turkey. Received: .201 Accepted/Published Online: .201 Final Version: ..201 Abstract: Diagnosing the infected patient as soon as possible in the coronavirus 2019 (COVID-19) outbreak, which has been declared a pandemic by the World Health Organization (WHO), is extremely important. Experts recommend CT imaging as a diagnostic tool because of the weak points of the nucleic acid amplification test (NAAT). In this study, the detection of COVID-19 from CT images, which give the most accurate response in a short time, was investigated in the classical computer and firstly in quantum computers. Using the quantum transfer learning method, we experimentally perform COVID-19 detection in different quantum real processors (IBMQx2, IBMQ-London and IBMQ-Rome) of IBM, as well as in different simulators (Pennylane, Qiskit-Aer and Cirq). By using a small number of data sets such as 126 COVID-19 and 100 Normal CT images, we obtained a positive or negative classification of COVID-19 with 90% success in classical computers, while we achieved a high success rate of 94-100% in quantum computers. -

Quantum Inductive Learning and Quantum Logic Synthesis

Portland State University PDXScholar Dissertations and Theses Dissertations and Theses 2009 Quantum Inductive Learning and Quantum Logic Synthesis Martin Lukac Portland State University Follow this and additional works at: https://pdxscholar.library.pdx.edu/open_access_etds Part of the Electrical and Computer Engineering Commons Let us know how access to this document benefits you. Recommended Citation Lukac, Martin, "Quantum Inductive Learning and Quantum Logic Synthesis" (2009). Dissertations and Theses. Paper 2319. https://doi.org/10.15760/etd.2316 This Dissertation is brought to you for free and open access. It has been accepted for inclusion in Dissertations and Theses by an authorized administrator of PDXScholar. For more information, please contact [email protected]. QUANTUM INDUCTIVE LEARNING AND QUANTUM LOGIC SYNTHESIS by MARTIN LUKAC A dissertation submitted in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY in ELECTRICAL AND COMPUTER ENGINEERING. Portland State University 2009 DISSERTATION APPROVAL The abstract and dissertation of Martin Lukac for the Doctor of Philosophy in Electrical and Computer Engineering were presented January 9, 2009, and accepted by the dissertation committee and the doctoral program. COMMITTEE APPROVALS: Irek Perkowski, Chair; Garrison Greenwood; George Lendaris; 5artM; Steven Bleiler, Representative of the Office of Graduate Studies. DOCTORAL PROGRAM APPROVAL: Malgorza /ska-Jeske, Director, Electrical Computer Engineering Ph.D. Program. ABSTRACT An abstract of the dissertation of Martin Lukac for the Doctor of Philosophy in Electrical and Computer Engineering presented January 9, 2009. Title: Quantum Inductive Learning and Quantum Logic Synthesis. Since Quantum Computer is almost realizable on large scale and Quantum Technology is one of the main solutions to the Moore Limit, Quantum Logic Synthesis (QLS) has become a required theory and tool for designing Quantum Logic Circuits. -

Nearest Centroid Classification on a Trapped Ion Quantum Computer

www.nature.com/npjqi ARTICLE OPEN Nearest centroid classification on a trapped ion quantum computer. Sonika Johri1 ✉, Shantanu Debnath1, Avinash Mocherla2,3,4, Alexandros SINGK2,3,5, Anupam Prakash2,3, Jungsang Kim1 and Iordanis Kerenidis2,3,6.
Quantum machine learning has seen considerable theoretical and practical developments in recent years and has become a promising area for finding real world applications of quantum computers. In pursuit of this goal, here we combine state-of-the-art algorithms and quantum hardware to provide an experimental demonstration of a quantum machine learning application with provable guarantees for its performance and efficiency. In particular, we design a quantum Nearest Centroid classifier, using techniques for efficiently loading classical data into quantum states and performing distance estimations, and experimentally demonstrate it on a 11-qubit trapped-ion quantum machine, matching the accuracy of classical nearest centroid classifiers for the MNIST handwritten digits dataset and achieving up to 100% accuracy for 8-dimensional synthetic data. npj Quantum Information (2021) 7:122 ; https://doi.org/10.1038/s41534-021-00456-5
INTRODUCTION Quantum technologies promise to revolutionize the future of information and communication, in the form of quantum computing devices able to communicate and process massive amounts of data both efficiently and securely using quantum resources. Tremendous progress is continuously being made both [...] Thus, one might hope that noisy quantum computers are inherently better suited for machine learning computations than for other types of problems that need precise computations like factoring or search problems. However, there are significant challenges to be overcome to make QML practical. -
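For readers unfamiliar with the classical baseline mentioned in the excerpt above, here is a minimal sketch of a classical nearest centroid classifier. It is not the authors' quantum procedure and involves no data loading into quantum states; the function names `fit_centroids` and `predict` and the toy data are hypothetical.

```python
import math


def fit_centroids(points, labels):
    """Return a dict mapping each label to the mean of its training points."""
    sums, counts = {}, {}
    for point, label in zip(points, labels):
        acc = sums.setdefault(label, [0.0] * len(point))
        for i, value in enumerate(point):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def predict(point, centroids):
    """Assign the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(point, centroids[label]))


# Toy two-class example (made-up data, for illustration only).
train_points = [[0, 0], [0, 1], [5, 5], [6, 5]]
train_labels = ["a", "a", "b", "b"]
centroids = fit_centroids(train_points, train_labels)
print(predict([1, 1], centroids), predict([5, 4], centroids))
```

The distance computation inside `predict` is the step that the excerpt describes replacing with quantum distance estimation.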

An Ecological Perspective on the Future of Computer Trading

The Future of Computer Trading in Financial Markets - Foresight Driver Review – DR 6. An ecological perspective on the future of computer trading.
Contents
2. The role of computers in modern markets
2.1 Computerization and trading
    Execution
    Investment research
    Clearing and settlement
2.2 A coarse preliminary taxonomy of computer trading
    Execution algos
    Algorithmic trading
    High Frequency Trading / Latency arbitrage
    Algorithmic market making
3. Ecology and evolution as key -

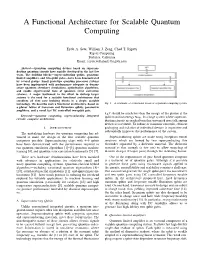

A Functional Architecture for Scalable Quantum Computing

A Functional Architecture for Scalable Quantum Computing. Eyob A. Sete, William J. Zeng, Chad T. Rigetti. Rigetti Computing, Berkeley, California. Email: feyob,will,[email protected]
Abstract: Quantum computing devices based on superconducting quantum circuits have rapidly developed in the last few years. The building blocks (superconducting qubits, quantum-limited amplifiers, and two-qubit gates) have been demonstrated by several groups. Small prototype quantum processor systems have been implemented with performance adequate to demonstrate quantum chemistry simulations, optimization algorithms, and enable experimental tests of quantum error correction schemes. A major bottleneck in the effort to develop larger systems is the need for a scalable functional architecture that combines all the core building blocks in a single, scalable technology. We describe such a functional architecture, based on a planar lattice of transmon and fluxonium qubits, parametric amplifiers, and a novel fast DC controlled two-qubit gate.
Keywords: quantum computing, superconducting integrated circuits, computer architecture.
I. INTRODUCTION The underlying hardware for quantum computing has advanced to make the design of the first scalable quantum computers possible. Superconducting chips with 4–9 qubits have been demonstrated with the performance required to [...]
Fig. 1. A schematic of a functional layout of a quantum computing system.
[...] k_B T should be much less than the energy of the photon at the qubit transition energy ħω_01. In a large system where superconducting circuits are packed together, unwanted crosstalk among devices is inevitable. To reduce or minimize crosstalk, efficient packaging and isolation of individual devices is imperative and substantially improves the performance of the system. Superconducting qubits are made using Josephson tunnel junctions which are formed by two superconducting thin electrodes separated by a dielectric material. -

Quantum Algorithms

An Introduction to Quantum Algorithms. Emma Strubell. COS498 – Chawathe, Spring 2011.
Contents
1 What are quantum algorithms?
    1.1 Background
    1.2 Caveats
2 Mathematical representation
    2.1 Fundamental differences
    2.2 Hilbert spaces and Dirac notation
    2.3 The qubit
    2.4 Quantum registers
    2.5 Quantum logic gates
    2.6 Computational complexity
3 Grover's Algorithm
    3.1 Quantum search
    3.2 Grover's algorithm: How it works
    3.3 Grover's algorithm: Worked example
4 Simon's Algorithm
    4.1 Black-box period finding
    4.2 Simon's algorithm: How it works
    4.3 Simon's Algorithm: Worked example
5 Conclusion
References
1 What are quantum algorithms? 1.1 Background The idea of a quantum computer was first proposed in 1981 by Nobel laureate Richard Feynman, who pointed out that accurately and efficiently simulating quantum mechanical systems would be impossible on a classical computer, but that a new kind of machine, a computer itself “built of quantum mechanical elements which obey quantum mechanical laws” [1], might one day perform efficient simulations of quantum systems. Classical computers are inherently unable to simulate such a system using sub-exponential time and space complexity due to the exponential growth of the amount of data required to completely represent a quantum system. Quantum computers, on the other hand, exploit the unique, non-classical properties of the quantum systems from which they are built, allowing them to process exponentially large quantities of information in only polynomial time. -

SECURING CLOUDS – THE QUANTUM WAY

SECURING CLOUDS – THE QUANTUM WAY. Marmik Pandya, Department of Information Assurance, Northeastern University, Boston, USA. 1 Introduction Quantum mechanics is the study of the small particles that make up the universe – for instance, atoms et al. At such a microscopic level, the laws of classical mechanics fail to explain most of the observed phenomena. At this scale, the quantum properties exhibited by particles become quite noticeable. Before we move forward, a basic question arises: what are quantum properties? To answer this question, we'll look at the basis of quantum mechanics – Heisenberg's Uncertainty Principle. The uncertainty principle states that the more precisely the position is determined, the less precisely the momentum is known in this instant, and vice versa. For instance, if you measure the position of an electron revolving around the nucleus of an atom, you cannot accurately measure its velocity. If you measure the electron's velocity, you cannot accurately determine its position. Equation for Heisenberg's uncertainty principle: $$\sigma_x \sigma_p \ge \frac{\hbar}{2}$$ where σx is the standard deviation of position, σp is the standard deviation of momentum and ħ is the reduced Planck constant, h / 2π. In a practical scenario, this principle is applied to photons. Photons – the smallest measure of light – can exist in all of their possible states at once, and they don’t have any mass. This means that whatever direction a photon can spin in -- say, diagonally, vertically and horizontally -- it does all at once. This is exactly the same as if you constantly moved east, west, north, south, and up-and-down at the same time.