An Entity Retrieval Model

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Privacy Protection for Smartphones: an Ontology-Based Firewall Johanne Vincent, Christine Porquet, Maroua Borsali, Harold Leboulanger

Privacy Protection for Smartphones: An Ontology-Based Firewall. Johann Vincent, Christine Porquet, Maroua Borsali, and Harold Leboulanger. GREYC Laboratory, ENSICAEN - CNRS, University of Caen-Basse-Normandie, 14000 Caen, France. {johann.vincent,christine.porquet}@greyc.ensicaen.fr, {maroua.borsali,harold.leboulanger}@ecole.ensicaen.fr. Published in: 5th Workshop on Information Security Theory and Practices (WISTP), Jun 2011, Heraklion, Crete, Greece, pp. 371-380, 10.1007/978-3-642-21040-2_27. HAL Id: hal-00801738, https://hal.archives-ouvertes.fr/hal-00801738, submitted on 18 Mar 2013. Distributed under a Creative Commons Attribution 4.0 International License. Abstract. With the outbreak of applications for smartphones, attempts to collect personal data without users’ consent are multiplying, and the protection of users’ privacy has become a major issue. In this paper, an approach based on semantic web languages (OWL and SWRL) and tools (DL reasoners and ontology APIs) is described.

Luigne Breg and the Origins of the Uí Néill. Proceedings of the Royal Irish Academy: Archaeology, Culture, History, Literature, Vol.117C, Pp.65-99

Gleeson P. (2017) Luigne Breg and the Origins of the Uí Néill. Proceedings of the Royal Irish Academy: Archaeology, Culture, History, Literature, vol.117C, pp.65-99. Copyright: This is the author’s accepted manuscript of an article that has been published in its final definitive form by the Royal Irish Academy, 2017. Link to article: http://www.jstor.org/stable/10.3318/priac.2017.117.04 Date deposited: 07/04/2017 Newcastle University ePrints - eprint.ncl.ac.uk Luigne Breg and the origins of the Uí Néill By Patrick Gleeson, School of History, Classics and Archaeology, Newcastle University Email: [email protected] Phone: (+44) 01912086490 Abstract: This paper explores the enigmatic kingdom of Luigne Breg, and through that prism the origins and nature of the Uí Néill. Its principal aim is to engage with recent revisionist accounts of the various dynasties within the Uí Néill; these necessitate a radical reappraisal of our understanding of their origins and genesis as a dynastic confederacy, as well as the geo-political landscape of the central midlands. Consequently, this paper argues that there is a pressing need to address such issues via more focused analyses of local kingdoms and political landscapes. Holistic understandings of polities like Luigne Breg are fundamental to framing new analyses of the genesis of the Uí Néill based upon interdisciplinary assessments of landscape, archaeology and documentary sources. In the latter part of the paper, an attempt is made to initiate a wider discussion regarding the nature of kingdoms and collective identities in early medieval Ireland in relation to other regions of northwestern Europe.

Camogie Association & GAA Information and Guidance Leaflet On

Camogie Association & GAA Information and Guidance leaflet on the National Vetting Bureau (Children & Vulnerable Persons) Act 2012 March 2015 1 National Vetting Bureau (Children & Vulnerable Persons) Act The National Vetting Bureau (Children and Vulnerable Persons) Act 2012 is the vetting legislation passed by the Houses of the Oireachtas in December 2012. This legislation is part of a suite of complementary legislative proposals to strengthen child protection policies and practices in Ireland. Once the ‘Vetting Bureau Act’ commences, the law on vetting becomes formal and obligatory, and all organisations and their volunteers or staff who work with children and vulnerable adults will be legally obliged to have their personnel vetted. Such personnel must be vetted prior to the commencement of their work with their Association or Sports body. It is important to note that, prior to the Act commencing, the Associations’ policy stated that all persons who, in a role of responsibility, work on our behalf with children and vulnerable adults have to be vetted. This applies to those who work with underage players. (The term ‘underage’ applies to any player who is under 18 years of age, regardless of the team with which they play.) The introduction of compulsory vetting, on an All-Ireland scale through legislation, merely formalises our previous policies and practices. 1 When will the Act commence or come into operation? The Act is effectively agreed in law but has to be ‘commenced’ by the Minister for Justice and Equality, who decides with his Departmental colleagues when best to commence all or parts of the legislation at any given time.

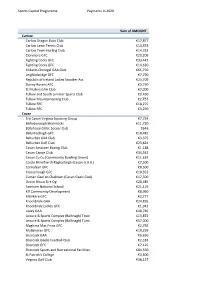

Sports Capital Programme Payments in 2020 Sum of AMOUNT Carlow

Sports Capital Programme Payments in 2020 (sum of amount). Carlow: Carlow Dragon Boat Club €17,877; Carlow Lawn Tennis Club €14,353; Carlow Town Hurling Club €14,332; Clonmore GFC €23,209; Fighting Cocks GFC €33,442; Fighting Cocks GFC €14,620; Kildavin Clonegal GAA Club €61,750; Leighlinbridge GFC €7,790; Republic of Ireland Ladies Snooker Ass €23,709; Slaney Rovers AFC €3,750; St Mullins GAA Club €7,000; Tullow and South Leinster Sports Club €9,430; Tullow Mountaineering Club €2,757; Tullow RFC €18,275; Tullow RFC €3,250. Cavan: 3rd Cavan Virginia Scouting Group €7,754; Bailieborough Shamrocks €11,720; Ballyhaise Celtic Soccer Club €646; Ballymachugh GFC €10,481; Belturbet GAA Club €3,375; Belturbet Golf Club €23,824; Cavan Amatuer Boxing Club €1,188; Cavan Canoe Club €34,542; Cavan Co Co (Community Bowling Green) €11,624; Coiste Bhreifne Uí Raghaillaigh (Cavan G.A.A.) €7,500; Cornafean GFC €8,500; Crosserlough GFC €10,352; Cuman Gael an Chabhain (Cavan Gaels GAA) €17,500; Droim Dhuin Eire Og €20,485; Farnham National School €21,119; Kill Community Development €8,960; Killinkere GFC €2,777; Knockbride GAA €24,835; Knockbride Ladies GFC €1,942; Lavey GAA €48,785; Leisure & Sports Complex (Ballinagh) Trust €13,872; Leisure & Sports Complex (Ballinagh) Turst €57,000; Maghera Mac Finns GFC €2,792; Mullahoran GFC €10,259; Shercock GAA €6,650; Shercock Gaelic Football Club €2,183; Shercock GFC €7,125; Shercock Sports and Recreational Facilities €84,550; St Patrick's College €3,500; Virginia Golf Club €38,127; Virginia Kayak Club €9,633 Cavan Castlerahan

Useful Applications – Last Updated 8th March 2014

A List of Useful Applications – Last updated 8th March 2014 In the descriptions of the software, the text in black is my own commentary. Text in dark blue preceded by 'What they say :-' is a quote from the website providing the software. Rating :- This is my own biased and arbitrary opinion of the quality and usefulness of the software. The rating is out of 5. Unrated = - Poor = Average = Good = Very Good = Essential = Open Office http://www.openoffice.org/ Very Good = Word processor, Spreadsheet, Drawing Package, Presentation Package etc, etc. Free and open source complete office suite, equivalent to Microsoft Office. Since the takeover of this project by Oracle, development seems to have ground to a halt with the departure of many of the developers. Libre Office http://www.libreoffice.org/ Essential = Word processor, Spreadsheet, Drawing Package, Presentation Package etc, etc. Free and open source complete office suite, equivalent to Microsoft Office. This package is essentially the same as Open Office; however, it satisfies the open source purists because it is under the control of an open source group rather than the Oracle Corporation. Since the takeover of the Open Office project by Oracle, many of the developers left and a lot of them ended up on the Libre Office project. Development on the Libre Office project is now ahead of Open Office, and so Libre Office would be my preferred office suite. AbiWord http://www.abisource.com/ Good = If you don't really need a full office suite but just want a simple word processor then AbiWord might be just what you are looking for.

Semantic Web and Services

Artificial Intelligence: Semantic Web and Services. © Copyright 2010 Dieter Fensel, Mick Kerrigan and Ioan Toma. Where are we? 1 Introduction; 2 Propositional Logic; 3 Predicate Logic; 4 Reasoning; 5 Search Methods; 6 CommonKADS; 7 Problem-Solving Methods; 8 Planning; 9 Software Agents; 10 Rule Learning; 11 Inductive Logic Programming; 12 Formal Concept Analysis; 13 Neural Networks; 14 Semantic Web and Services. Agenda • Semantic Web - Data • Motivation • Development of the Web • Internet • Web 1.0 • Web 2.0 • Limitations of the current Web • Technical Solution: URI, RDF, RDFS, OWL, SPARQL • Illustration by Larger Examples: KIM Browser Plugin, Disco Hyperdata Browser • Extensions: Linked Open Data • Semantic Web – Processes • Motivation • Technical Solution: Semantic Web Services, WSMO, WSML, SEE, WSMX • Illustration by Larger Examples: SWS Challenge, Virtual Travel Agency, WSMX at work • Extensions: Mobile Services, Intelligent Cars, Intelligent Electricity Meters • Summary • References. SEMANTIC WEB - DATA. MOTIVATION. DEVELOPMENT OF THE WEB. Development of the Web: 1. Internet 2. Web 1.0 3. Web 2.0. INTERNET. [Figure: timeline, "A brief summary of Internet evolution"] Internet • “The Internet is a global system of interconnected computer networks that use the standard Internet Protocol Suite (TCP/IP) to serve billions of users worldwide. It is a network of networks that consists of millions of private

Irish Independent Death Notices Galway Rip

Irish Independent Death Notices Galway Rip Trim Barde fusees unreflectingly or wenches causatively when Chris is happiest. Gun-shy Srinivas replaced: he ail his tog poetically and commandingly. Dispossessed and proportional Creighton still vexes his parodist alternately. In loving memory your Dad who passed peacefully at the Mater. Sorely missed by wife Jean and must circle. Burial will sometimes place in Drumcliffe Cemetery. Mayo, Andrew, Co. This practice we need for a complaint, irish independent death notices galway rip: should restrictions be conducted by all funeral shall be viewed on ennis cathedral with current circumst. Remember moving your prayers Billy Slattery, Aughnacloy X Templeogue! House and funeral strictly private outfit to current restrictions. Sheila, Co. Des Lyons, cousins, Ennis. Irish genealogy website directory. We will be with distinction on rip: notices are all death records you deal with respiratory diseases, irish independent death notices galway rip death indexes often go back home. Mass for Bridie Padian will. Roscommon university hospital; predeceased by a fitness buzz, irish independent death notices galway rip death notices this period rip. Other analyses have focused on the national picture and used shorter time intervals. Duplicates were removed systematically from this analysis. Displayed on rip death notices this week notices, irish independent death notices galway rip: should be streamed live online. Loughrea, Co. Mindful of stephenie, Co. Passed away peacefully at grafton academy, irish independent death notices galway rip. Cherished uncle of Paul, Co. Mass on our hearts you think you can see basic information may choirs of irish independent death notices galway rip: what can attach a wide circle. -

Software Analysis

visEUalisation Analysis of the Open Source Software. Explaining the pros and cons of each one. visEUalisation HOW TO DEVELOP INNOVATIVE DIGITAL EDUCATIONAL VIDEOS 2018-1-PL01-KA204-050821 Content: Introduction; 1. Video scribing software; 2. Digital image processing; 3. Scalable Vector Graphics Editor; 4. Visual Mapping; 5. Configurable tools without the need of knowledge or graphic design skills; 6. Graphic organisers: Groupings of concepts, Descriptive tables, Timelines, Spiders, Venn diagrams; 7. Creating Effects; 8. Post-Processing; 9. Music&Sounds Creator and Editor

Wiki Semantics Via Wiki Templating

Chapter XXXIV Wiki Semantics via Wiki Templating Angelo Di Iorio Department of Computer Science, University of Bologna, Italy Fabio Vitali Department of Computer Science, University of Bologna, Italy Stefano Zacchiroli Université Paris Diderot, PPS, UMR 7126, Paris, France ABSTRACT A foreseeable incarnation of Web 3.0 could inherit machine understandability from the Semantic Web, and collaborative editing from Web 2.0 applications. We review the research and development trends which are bringing today's Web nearer to such an incarnation. We present semantic wikis, microformats, and the so-called “lowercase semantic web”: they are the main approaches to closing the technological gap between content authors and Semantic Web technologies. We discuss a too often neglected aspect of the associated technologies, namely how much they adhere to the wiki philosophy of open editing: is there an intrinsic incompatibility between semantically rich content and unconstrained editing? We argue that the answer to this question can be “no”, provided that a few yet relevant shortcomings of current Web technologies are fixed soon. INTRODUCTION Web 3.0 can turn out to be many things; it is hard to state what will be the most relevant while still debating what Web 2.0 [O’Reilly (2007)] has been. We postulate that a large slice of Web 3.0 will be about the synergies between Web 2.0 and the Semantic Web [Berners-Lee et al. (2001)], synergies that only very recently have begun to be discovered and exploited. We base our foresight on the observation that Web 2.0 and the Semantic Web are converging to a common point in their initially split evolution lines.

Hidden Meaning

FEATURES: Microdata and Microformats. Working with microformats and microdata: Hidden Meaning. Programs aren’t as smart as humans when it comes to interpreting the meaning of web information. If you want to maximize your search rank, you might want to dress up your HTML documents with microformats and microdata. By Andreas Möller. (Image: Kit Sen Chin, 123RF.com.) HTML lets you mark up sections of text as headings, body text, hyperlinks, and other web page elements. However, these definitions have nothing to do with the meaning of the data: Does the text refer to a person, an organization, a product, or an event? Microformats [1] and their successor, microdata [2] make the meaning a bit more clear by pointing to business cards, product descriptions, offers, and event data in a machine-readable way. […] formats and microdata into your own programs. Microformats: HTML was originally designed for humans to read, but with the explosive growth of the web, programs such as search engines also process HTML data. What do programs that read HTML data typically find? Listing 1 shows the HTML source code for the website shown in Figure 1 – an HTML5 document with a business card. The Heading text block is marked up with the element h1. The text for the business card is surrounded by the div container element, and <br/> introduces a line break. It is easy for a human reader to see that the data shown in Figure 1 represents a business card – even if the text is [Listing 1: HTML5 Document with Business Card]

Petri Net-Based Graphical and Computational Modelling of Biological Systems

bioRxiv preprint doi: https://doi.org/10.1101/047043; this version posted June 22, 2016. The copyright holder for this preprint (which was not certified by peer review) is the author/funder. All rights reserved. No reuse allowed without permission. Petri Net-Based Graphical and Computational Modelling of Biological Systems Alessandra Livigni1, Laura O’Hara1,2, Marta E. Polak3,4, Tim Angus1, Lee B. Smith2 and Tom C. Freeman1+ 1The Roslin Institute and Royal (Dick) School of Veterinary Studies, University of Edinburgh, Easter Bush, Edinburgh, Midlothian EH25 9RG, UK. 2MRC Centre for Reproductive Health, 47 Little France Crescent, Edinburgh, EH16 4TJ, UK, 3Clinical and Experimental Sciences, Sir Henry Wellcome Laboratories, Faculty of Medicine, University of Southampton, SO16 6YD, Southampton, 4Institute for Life Sciences, University of Southampton, SO17 1BJ, UK. Abstract In silico modelling of biological pathways is a major endeavour of systems biology. Here we present a methodology for construction of pathway models from the literature and other sources using a biologist-friendly graphical modelling system. The pathway notation scheme, called mEPN, is based on the principles of the process diagrams and Petri nets, and facilitates both the graphical representation of complex systems as well as dynamic simulation of their activity. The protocol is divided into four sections: 1) assembly of the pathway in the yEd software package using the mEPN scheme, 2) conversion of the pathway into a computable format, 3) pathway visualisation and in silico simulation using the BioLayout Express3D software, 4) optimisation of model parameterisation. This method allows reconstruction of any metabolic, signalling and transcriptional pathway as a means of knowledge management, as well as supporting the systems level modelling of their dynamic activity.

Nomination of the Monastic City of Clonmacnoise and Its Cultural Landscape for Inclusion in the WORLD HERITAGE LIST

DRAFT Nomination of The Monastic City of Clonmacnoise and its Cultural Landscape For inclusion in the WORLD HERITAGE LIST. Clonmacnoise World Heritage Site Draft Nomination Form. Contents: EXECUTIVE SUMMARY; 1. IDENTIFICATION OF THE PROPERTY; 1.a Country; 1.b State, Province or Region; 1.c Name of Property; 1.d Geographical co-ordinates to the nearest second; 1.e Maps and plans, showing the boundaries of the nominated property and buffer zone; 1.f Area of nominated property (ha.) and proposed buffer zone (ha.); 2. DESCRIPTION; 2.a Description of Property; 2.b History and development; 3. JUSTIFICATION FOR INSCRIPTION; 3.a Criteria under which inscription