Analysis and Digital Implementation of the Talk Box Effect

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Download Download

Florian Heesch Voicing the Technological Body Some Musicological Reflections on Combinations of Voice and Technology in Popular Music ABSTRACT The article deals with interrelations of voice, body and technology in popular music from a musicological perspective. It is an attempt to outline a systematic approach to the history of music technology with regard to aesthetic aspects, taking the iden- tity of the singing subject as a main point of departure for a hermeneutic reading of popular song. Although the argumentation is based largely on musicological research, it is also inspired by the notion of presentness as developed by theologian and media scholar Walter Ong. The variety of the relationships between voice, body, and technology with regard to musical representations of identity, in particular gender and race, is systematized alongside the following cagories: (1) the “absence of the body,” that starts with the establishment of phonography; (2) “amplified presence,” as a signifier for uses of the microphone to enhance low sounds in certain manners; and (3) “hybridity,” including vocal identities that blend human body sounds and technological processing, where- by special focus is laid on uses of the vocoder and similar technologies. KEYWORDS recorded popular song, gender in music, hybrid identities, race in music, presence/ absence, disembodied voices BIOGRAPHY Dr. Florian Heesch is professor of popular music and gender studies at the University of Siegen, Germany. He holds a PhD in musicology from the University of Gothenburg, Sweden. He published several books and articles on music and Norse mythology, mu- sic and gender and on diverse aspects of heavy metal studies. -

User's Manual

USER’S MANUAL G-Force GUITAR EFFECTS PROCESSOR IMPORTANT SAFETY INSTRUCTIONS The lightning flash with an arrowhead symbol The exclamation point within an equilateral triangle within an equilateral triangle, is intended to alert is intended to alert the user to the presence of the user to the presence of uninsulated "dan- important operating and maintenance (servicing) gerous voltage" within the product's enclosure that may instructions in the literature accompanying the product. be of sufficient magnitude to constitute a risk of electric shock to persons. 1 Read these instructions. Warning! 2 Keep these instructions. • To reduce the risk of fire or electrical shock, do not 3 Heed all warnings. expose this equipment to dripping or splashing and 4 Follow all instructions. ensure that no objects filled with liquids, such as vases, 5 Do not use this apparatus near water. are placed on the equipment. 6 Clean only with dry cloth. • This apparatus must be earthed. 7 Do not block any ventilation openings. Install in • Use a three wire grounding type line cord like the one accordance with the manufacturer's instructions. supplied with the product. 8 Do not install near any heat sources such • Be advised that different operating voltages require the as radiators, heat registers, stoves, or other use of different types of line cord and attachment plugs. apparatus (including amplifiers) that produce heat. • Check the voltage in your area and use the 9 Do not defeat the safety purpose of the polarized correct type. See table below: or grounding-type plug. A polarized plug has two blades with one wider than the other. -

In the Studio: the Role of Recording Techniques in Rock Music (2006)

21 In the Studio: The Role of Recording Techniques in Rock Music (2006) John Covach I want this record to be perfect. Meticulously perfect. Steely Dan-perfect. -Dave Grohl, commencing work on the Foo Fighters 2002 record One by One When we speak of popular music, we should speak not of songs but rather; of recordings, which are created in the studio by musicians, engineers and producers who aim not only to capture good performances, but more, to create aesthetic objects. (Zak 200 I, xvi-xvii) In this "interlude" Jon Covach, Professor of Music at the Eastman School of Music, provides a clear introduction to the basic elements of recorded sound: ambience, which includes reverb and echo; equalization; and stereo placement He also describes a particularly useful means of visualizing and analyzing recordings. The student might begin by becoming sensitive to the three dimensions of height (frequency range), width (stereo placement) and depth (ambience), and from there go on to con sider other special effects. One way to analyze the music, then, is to work backward from the final product, to listen carefully and imagine how it was created by the engineer and producer. To illustrate this process, Covach provides analyses .of two songs created by famous producers in different eras: Steely Dan's "Josie" and Phil Spector's "Da Doo Ron Ron:' Records, tapes, and CDs are central to the history of rock music, and since the mid 1990s, digital downloading and file sharing have also become significant factors in how music gets from the artists to listeners. Live performance is also important, and some groups-such as the Grateful Dead, the Allman Brothers Band, and more recently Phish and Widespread Panic-have been more oriented toward performances that change from night to night than with authoritative versions of tunes that are produced in a recording studio. -

Dnafx Git Multi Effects Unit User Manual

DNAfx GiT multi effects unit user manual Musikhaus Thomann Thomann GmbH Hans-Thomann-Straße 1 96138 Burgebrach Germany Telephone: +49 (0) 9546 9223-0 E-mail: [email protected] Internet: www.thomann.de 27.11.2019, ID: 478040 Table of contents Table of contents 1 General information................................................................................................................................. 5 1.1 Further information........................................................................................................................... 6 1.2 Notational conventions.................................................................................................................... 7 1.3 Symbols and signal words............................................................................................................... 8 2 Safety instructions.................................................................................................................................. 10 3 Features....................................................................................................................................................... 14 4 Installation.................................................................................................................................................. 15 5 Connections and operating elements........................................................................................... 25 6 Operating................................................................................................................................................... -

Robotic Voice Effects

Robotic voice effects From Wikipedia, the free encyclopedia Source : http://en.wikipedia.org/wiki/Robotic_voice_effects "Robot voices" became a recurring element in popular music starting in the late twentieth century, and several methods of producing variations on this effect have arisen. Though the vocoder is by far the best-known, the following other pieces of music technology are often confused with it: Sonovox This was an early version of the talk box used to create the voice of the piano in the Sparky's Magic Piano series from 1947. It was used as the voice of many musical instruments in Rusty in Orchestraville. It was used as the voice of Casey the Train in Dumbo and The Reluctant Dragon[citation needed]. Radio jingle companies PAMS and JAM Creative Productions also used the sonovox in many stations ID's they produced. Talk box The talk box guitar effect was invented by Doug Forbes and popularized by Peter Frampton. In the talk box effect, amplified sound is actually fed via a tube into the performer's mouth and is then shaped by the performer's lip, tongue, and mouth movements before being picked up by a microphone. In contrast, the vocoder effect is produced entirely electronically. The background riff from "Livin' on a Prayer" by Bon Jovi is a well-known example. "California Love" by 2Pac and Roger Troutman is a more recent recording featuring a talk box fed with a synthesizer instead of guitar. Steven Drozd of the The Flaming Lips used the talk box on parts of the groups eleventh album, At War with the Mystics, to imitate some of Wayne Coyne's repeated lyrics in the "Yeah Yeah Yeah Song". -

Modify Your Wah Pedal by James Haskin on June 2, 2008

Home Sign Up! Browse Community Submit All Art Craft Food Games Green Home Kids Life Music Offbeat Outdoors Pets Photo Ride Science Tech Modify Your Wah Pedal by James Haskin on June 2, 2008 Table of Contents Modify Your Wah Pedal . 1 Intro: Modify Your Wah Pedal . 2 Step 1: Research . 2 Step 2: True Bypass . 4 Step 3: Volume Boost . 7 Step 4: Wah-Volume Pedal . 8 Step 5: Vocal . 9 Step 6: Midrange . 10 Related Instructables . 11 Comments . 11 http://www.instructables.com/id/Modify-Your-Wah-Pedal/ Author:James Haskin author's website [email protected] Intro: Modify Your Wah Pedal After making my talk box I started surfing the web for other guitar related things I could do myself. I found loads of info on the Dunlop GCB-95 wah pedal. This Instructable will walk you through all of the modifications I made to my wah pedal... *True Bypass Mod *Volume Boost Mod *Wah-Volume Pedal Mod *Vocal Mod *Midrange Mod After having completed all of these mod and a few others I recommend doing them one at a time and testing in between mods so if you don't like something, you can easily reverse it. Your gonna need some basic soldering tools... -good soldering iron (mines a Weller WESD51D I got for christmas) -solder -wire cutter/stripper -helping hand (a must!!) also the to wire anything to the board itself you will need 24AWG wire Image Notes 1. Stole for my DS-1 I'm working on when I finish THAT instructable I'll change this to a funk colored chicken head knob :] Step 1: Research I think this is a big step in all of my projects. -

Computer Mediated Music Production: a Study of Abstraction and Activity

Computer mediated music production: A study of abstraction and activity by Matthew Duignan A thesis for the degree of Doctor of Philosophy in Computer Science. Victoria University of Wellington 2008 Abstract Human Computer Interaction research has a unique challenge in under- standing the activity systems of creative professionals, and designing the user-interfaces to support their work. In these activities, the user is involved in the process of building and editing complex digital artefacts through a process of continued refinement, as is seen in computer aided architecture, design, animation, movie-making, 3D modelling, interactive media (such as shockwave-flash), as well as audio and music production. This thesis exam- ines the ways in which abstraction mechanisms present in music production systems interplay with producers’ activity through a collective case study of seventeen professional producers. From the basis of detailed observations and interviews we examine common abstractions provided by the ubiqui- tous multitrack-mixing metaphor and present design implications for future systems. ii Acknowledgements I would like to thank my supervisors Robert Biddle and James Noble for their endless hours of guidance and feedback during this process, and most of all for allowing me to choose such a fun project. Michael Norris and Lissa Meridan from the Victoria University music department were also invaluable for their comments and expertise. I would also like to thank Alan Blackwell for taking the time to discuss my work and provide valuable advice. I am indebted to all of my participants for the great deal of time they selflessly offered, and the deep insights they shared into their professional world. -

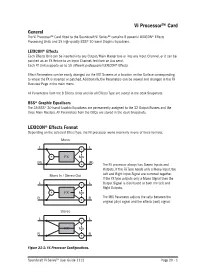

Vi Processor™ Card

Vi Processor™ Card General The Vi Processor™ Card fitted to the Soundcraft Vi Series™ contains 8 powerful LEXICON® Effects Processing Units and 35 high-quality BSS® 30-band Graphic Equalisers. LEXICON® Effects Each Effects Unit can be inserted into any Output/Main Master bus or into any Input Channel, or it can be patched as an FX Return to an Input Channel, fed from an Aux send. Each FX Unit supports up to 30 different professional LEXICON® Effects. Effect Parameters can be easily changed via the VST Screens at a location on the Surface corresponding to where the FX is inserted or patched. Additionally, the Parameters can be viewed and changed in the FX Overview Page in the main menu. All Parameters from the 8 Effects Units and for all Effects Type are stored in the desk Snapshots. BSS® Graphic Equalisers The 35 BSS® 30-band Graphic Equalizers are permanently assigned to the 32 Output Busses and the three Main Masters. All Parameters from the GEQs are stored in the desk Snapshots. LEXICON® Effects Format Depending on the selected Effect Type, the FX processor works internally in one of three formats: The FX processor always has Stereo Inputs and Outputs. If the FX Type needs only a Mono Input, the Left and Right Input Signal are summed together. If the FX Type outputs only a Mono Signal then the Output Signal is distributed to both the Left and Right Outputs. The MIX Parameter adjusts the ratio between the original (dry) signal and the effects (wet) signal. Figure 21-1: FX Processor Configurations. -

SRX PIANO II Software Synthesizer Owner's Manual

SRX PIANO II Software Synthesizer Owner’s Manual © 2020 Roland Corporation 01 Introduction For details on the settings for the DAW software that you’re using, refer to the DAW’s help or manuals. About Trademarks • VST is a trademark and software of Steinberg Media Technologies GmbH. • Roland is a registered trademark or trademark of Roland Corporation in the United States and/or other countries. • Company names and product names appearing in this document are registered trademarks or trademarks of their respective owners. 2 Contents Introduction . 2 EFFECTS Parameters . 34 Applying Effects . 34 Screen Structure . 4 Effect Settings . 34 Using SRX PIANO II . 5 Signal Flow and Parameters (ROUTING). 34 How to Edit Values . 5 Multi-Effect Settings (MFX) . 35 Initializing a value. 5 Chorus and Reverb Settings. 36 About the KEYBOARD button. 5 Effects List . 37 Overview of the SRX PIANO II . 6 Multi-Effects Parameters (MFX) . 37 How the SRX PIANO II is Organized. 6 About RATE and DELAY TIME . 38 How a Patch is Structured . 6 When Using 3D Effects . 38 How a Rhythm Set is Structured . 6 About the STEP RESET function . 38 Calculating the Number of Voices Being Used . 6 Chorus Parameters . 69 About the Effects . 7 Reverb Parameters . 69 Memory and Bank . 8 What is a Memory?. 8 Bank . 8 Changing to Other Bank. 8 Exporting the Bank . 8 Importing a Bank . 8 Creating/Deleting a Bank . 8 Renaming a Bank . 8 Category. 9 Memory . 9 Loading a Memory. 9 Saving the Memory . 9 Renaming the Memory. 9 Changing the Order of the Memories . -

M Audio Black Box Manual

Reloaded: with updated firmware and additional features. User Guide English Table Of Contents English . 3 Hello From Roger Linn . 3 Introduction . 3 What’s in the Box . 4 Minimum Computer System Requirements . 4 New Version 2 Firmware Inside . 4 Front Panel Connectors . 5 Rear Panel Connectors . 5 Top Panel . 6 Presets and Drumbeats . 8 Editing the Guitar Amp and Compression Settings . 9 Amp Descriptions . .10 Editing the Modulation and Filter Effects . .15 Modulation and Filter Effect Descriptions . .16 Editing the Delay and Reverb . 20 Editing the Utilities . 22 Assigning Momentary Foot Switch Pedal Settings . 25 Accessing the Shift Parameters . 26 Tuner Function . 27 How to Store and Name a Preset . 27 Initializing Black Box to Factory Settings . 27 Connecting your Hardware to the Computer . 28 Using the Black Box with the Computer . 29 Driver Installation . 30 Windows XP (SP2) . 30 Mac OS X . 32 Control Panels . 37 Effects & MIDI Page . 37 Latency Page (Windows Only) . 37 Presets & Firmware Page . 38 About Page . 39 Recording with a DAW (digital audio workstation) . 40 Troubleshooting . .41 Warranty . 42 Specifications . 43 MIDI Implementation Charts . 44 Channel Mode, System Common and System Real Time Messages . 44 Universal System Exclusive Messages . 46 System Exclusive Messages . 47 Data Structures . 53 7-Bit Data Packing . 55 © 2006 Avid Technology, Inc. All rights reserved. Product features, specifications, system requirements and availability are subject to change without notice. Avid, M-Audio and Black Box are either trademarks or registered trademarks of Avid Technology, Inc. All other trademarks contained herein are the property of their respective owners. IMPORTANT! Audio equipment should ALWAYS be powered up and down in a certain order. -

Dnafx Git Multi Effects Unit User Manual

DNAfx GiT multi effects unit user manual Musikhaus Thomann Thomann GmbH Hans-Thomann-Straße 1 96138 Burgebrach Germany Telephone: +49 (0) 9546 9223-0 E-mail: [email protected] Internet: www.thomann.de 10.06.2021, ID: 478040 Table of contents Table of contents 1 General information................................................................................................................................. 5 1.1 Further information........................................................................................................................... 6 1.2 Notational conventions.................................................................................................................... 7 1.3 Symbols and signal words............................................................................................................... 8 2 Safety instructions.................................................................................................................................. 10 3 Features....................................................................................................................................................... 12 4 Installation.................................................................................................................................................. 13 5 Connections and operating elements........................................................................................... 23 6 Operating................................................................................................................................................... -

Digital Guitar Amplifier and Effects Unit Shaun Caraway, Matt Evens, and Jan Nevarez Group 5, Senior Design Spring 2014

Digital Guitar Amplifier and Effects Unit Shaun Caraway, Matt Evens, and Jan Nevarez Group 5, Senior Design Spring 2014 Table of Contents 1. Executive Summary ....................................................................................... 1 2. Project Description ......................................................................................... 2 2.1. Project Motivation and Goals ................................................................... 2 2.2. Objectives ................................................................................................ 3 2.3. Project Requirements and Specifications ................................................ 3 3. Research ........................................................................................................ 4 3.1. Digital Signal Processing ......................................................................... 4 3.1.1. Requirements ................................................................................... 4 3.1.1.1. Sampling Rate ............................................................................... 4 3.1.1.2. Audio Word Size ............................................................................ 5 3.1.2. Processor Comparison ..................................................................... 5 3.1.3. Features ............................................................................................ 6 3.1.3.1. Programming Method .................................................................... 6 3.1.3.2. Real-Time OS ...............................................................................