Row-Store / Column-Store / Hybrid-Store

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

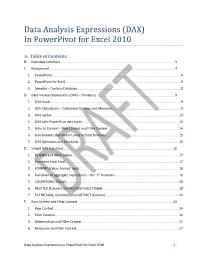

Data Analysis Expressions (DAX) in Powerpivot for Excel 2010

Data Analysis Expressions (DAX) In PowerPivot for Excel 2010 A. Table of Contents B. Executive Summary ............................................................................................................................... 3 C. Background ........................................................................................................................................... 4 1. PowerPivot ...............................................................................................................................................4 2. PowerPivot for Excel ................................................................................................................................5 3. Samples – Contoso Database ...................................................................................................................8 D. Data Analysis Expressions (DAX) – The Basics ...................................................................................... 9 1. DAX Goals .................................................................................................................................................9 2. DAX Calculations - Calculated Columns and Measures ...........................................................................9 3. DAX Syntax ............................................................................................................................................ 13 4. DAX uses PowerPivot data types ......................................................................................................... -

Rdbmss Why Use an RDBMS

RDBMSs • Relational Database Management Systems • A way of saving and accessing data on persistent (disk) storage. 51 - RDBMS CSC309 1 Why Use an RDBMS • Data Safety – data is immune to program crashes • Concurrent Access – atomic updates via transactions • Fault Tolerance – replicated dbs for instant failover on machine/disk crashes • Data Integrity – aids to keep data meaningful •Scalability – can handle small/large quantities of data in a uniform manner •Reporting – easy to write SQL programs to generate arbitrary reports 51 - RDBMS CSC309 2 1 Relational Model • First published by E.F. Codd in 1970 • A relational database consists of a collection of tables • A table consists of rows and columns • each row represents a record • each column represents an attribute of the records contained in the table 51 - RDBMS CSC309 3 RDBMS Technology • Client/Server Databases – Oracle, Sybase, MySQL, SQLServer • Personal Databases – Access • Embedded Databases –Pointbase 51 - RDBMS CSC309 4 2 Client/Server Databases client client client processes tcp/ip connections Server disk i/o server process 51 - RDBMS CSC309 5 Inside the Client Process client API application code tcp/ip db library connection to server 51 - RDBMS CSC309 6 3 Pointbase client API application code Pointbase lib. local file system 51 - RDBMS CSC309 7 Microsoft Access Access app Microsoft JET SQL DLL local file system 51 - RDBMS CSC309 8 4 APIs to RDBMSs • All are very similar • A collection of routines designed to – produce and send to the db engine an SQL statement • an original -

Microsoft Power BI DAX

Course Outline Microsoft Power BI DAX Duration: 1 day This course will cover the ability to use Data Analysis Expressions (DAX) language to perform powerful data manipulations within Power BI Desktop. On our Microsoft Power BI course, you will learn how easy is to import data into the Power BI Desktop and create powerful and dynamic visuals that bring your data to life. But what if you need to create your own calculated columns and measures; to have complete control over the calculations you need to perform on your data? DAX formulas provide this capability and many other important capabilities as well. Learning how to create effective DAX formulas will help you get the most out of your data. When you get the information you need, you can begin to solve real business problems that affect your bottom line. This is Business Intelligence, and DAX will help you get there. To get the most out of this course You should be a competent Microsoft Excel user. You don’t need any experience of using DAX but we recommend that you attend our 2-day Microsoft Power BI course prior to taking this course. What you will learn:- Importing Your Data and Creating the Data Model CALCULATE Function Overview of importing data into the Power BI Desktop Exploring the importance of the CALCULATE function. and creating the Data Model. Using complex filters within CALCULATE using FILTER. Using ALLSELECTED Function. Using DAX Syntax used by DAX. Time Intelligence Functions Understanding DAX Data Types. Why Time Intelligence Functions? Creating a Date Table. Creating Calculated Columns Finding Month to Date, Year To Date, Previous Month How to use DAX expressions in Calculated Columns. -

Column-Stores Vs. Row-Stores: How Different Are They Really?

Column-Stores vs. Row-Stores: How Different Are They Really? Daniel J. Abadi Samuel R. Madden Nabil Hachem Yale University MIT AvantGarde Consulting, LLC New Haven, CT, USA Cambridge, MA, USA Shrewsbury, MA, USA [email protected] [email protected] [email protected] ABSTRACT General Terms There has been a significant amount of excitement and recent work Experimentation, Performance, Measurement on column-oriented database systems (“column-stores”). These database systems have been shown to perform more than an or- Keywords der of magnitude better than traditional row-oriented database sys- tems (“row-stores”) on analytical workloads such as those found in C-Store, column-store, column-oriented DBMS, invisible join, com- data warehouses, decision support, and business intelligence appli- pression, tuple reconstruction, tuple materialization. cations. The elevator pitch behind this performance difference is straightforward: column-stores are more I/O efficient for read-only 1. INTRODUCTION queries since they only have to read from disk (or from memory) Recent years have seen the introduction of a number of column- those attributes accessed by a query. oriented database systems, including MonetDB [9, 10] and C-Store [22]. This simplistic view leads to the assumption that one can ob- The authors of these systems claim that their approach offers order- tain the performance benefits of a column-store using a row-store: of-magnitude gains on certain workloads, particularly on read-intensive either by vertically partitioning the schema, or by indexing every analytical processing workloads, such as those encountered in data column so that columns can be accessed independently. In this pa- warehouses. -

LATERAL LATERAL Before SQL:1999

Still using Windows 3.1? So why stick with SQL-92? @ModernSQL - https://modern-sql.com/ @MarkusWinand SQL:1999 LATERAL LATERAL Before SQL:1999 Select-list sub-queries must be scalar[0]: (an atomic quantity that can hold only one value at a time[1]) SELECT … , (SELECT column_1 FROM t1 WHERE t1.x = t2.y ) AS c FROM t2 … [0] Neglecting row values and other workarounds here; [1] https://en.wikipedia.org/wiki/Scalar LATERAL Before SQL:1999 Select-list sub-queries must be scalar[0]: (an atomic quantity that can hold only one value at a time[1]) SELECT … , (SELECT column_1 , column_2 FROM t1 ✗ WHERE t1.x = t2.y ) AS c More than FROM t2 one column? … ⇒Syntax error [0] Neglecting row values and other workarounds here; [1] https://en.wikipedia.org/wiki/Scalar LATERAL Before SQL:1999 Select-list sub-queries must be scalar[0]: (an atomic quantity that can hold only one value at a time[1]) SELECT … More than , (SELECT column_1 , column_2 one row? ⇒Runtime error! FROM t1 ✗ WHERE t1.x = t2.y } ) AS c More than FROM t2 one column? … ⇒Syntax error [0] Neglecting row values and other workarounds here; [1] https://en.wikipedia.org/wiki/Scalar LATERAL Since SQL:1999 Lateral derived queries can see table names defined before: SELECT * FROM t1 CROSS JOIN LATERAL (SELECT * FROM t2 WHERE t2.x = t1.x ) derived_table ON (true) LATERAL Since SQL:1999 Lateral derived queries can see table names defined before: SELECT * FROM t1 Valid due to CROSS JOIN LATERAL (SELECT * LATERAL FROM t2 keyword WHERE t2.x = t1.x ) derived_table ON (true) LATERAL Since SQL:1999 Lateral -

Columnar Storage in SQL Server 2012

Columnar Storage in SQL Server 2012 Per-Ake Larson Eric N. Hanson Susan L. Price [email protected] [email protected] [email protected] Abstract SQL Server 2012 introduces a new index type called a column store index and new query operators that efficiently process batches of rows at a time. These two features together greatly improve the performance of typical data warehouse queries, in some cases by two orders of magnitude. This paper outlines the design of column store indexes and batch-mode processing and summarizes the key benefits this technology provides to customers. It also highlights some early customer experiences and feedback and briefly discusses future enhancements for column store indexes. 1 Introduction SQL Server is a general-purpose database system that traditionally stores data in row format. To improve performance on data warehousing queries, SQL Server 2012 adds columnar storage and efficient batch-at-a- time processing to the system. Columnar storage is exposed as a new index type: a column store index. In other words, in SQL Server 2012 an index can be stored either row-wise in a B-tree or column-wise in a column store index. SQL Server column store indexes are “pure” column stores, not a hybrid, because different columns are stored on entirely separate pages. This improves I/O performance and makes more efficient use of memory. Column store indexes are fully integrated into the system. To improve performance of typical data warehous- ing queries, all a user needs to do is build a column store index on the fact tables in the data warehouse. -

The Relational Model

The Relational Model Read Text Chapter 3 Laks VS Lakshmanan; Based on Ramakrishnan & Gehrke, DB Management Systems Learning Goals given an ER model of an application, design a minimum number of correct tables that capture the information in it given an ER model with inheritance relations, weak entities and aggregations, design the right tables for it given a table design, create correct tables for this design in SQL, including primary and foreign key constraints compare different table designs for the same problem, identify errors and provide corrections Unit 3 2 Historical Perspective Introduced by Edgar Codd (IBM) in 1970 Most widely used model today. Vendors: IBM, Informix, Microsoft, Oracle, Sybase, etc. “Legacy systems” are usually hierarchical or network models (i.e., not relational) e.g., IMS, IDMS, … Unit 3 3 Historical Perspective Competitor: object-oriented model ObjectStore, Versant, Ontos A synthesis emerging: object-relational model o Informix Universal Server, UniSQL, O2, Oracle, DB2 Recent competitor: XML data model In all cases, relational systems have been extended to support additional features, e.g., objects, XML, text, images, … Unit 3 4 Main Characteristics of the Relational Model Exceedingly simple to understand All kinds of data abstracted and represented as a table Simple query language separate from application language Lots of bells and whistles to do complicated things Unit 3 5 Structure of Relational Databases Relational database: a set of relations Relation: made up of 2 parts: Schema : specifies name of relation, plus name and domain (type) of each field (or column or attribute). o e.g., Student (sid: string, name: string, address: string, phone: string, major: string). -

Redcap FAQ (PDF

REDCap FAQs General ¶ Q: How much experience with programming, networking and/or database construction is required to use REDCap? No programming, networking or database experience is needed to use REDCap. Simple design interfaces within REDCap handle all of these details automatically. It is recommended that once designed, you have a statistician review your project. It is important to consider the planned statistical analysis before collecting any data. A statistician can help assure that you are collecting the appropriate fields, in the appropriate format necessary to perform the needed analysis. Q: Can I still maintain a paper trail for my study, even if I use REDCap? You can use paper forms to collect data first and then enter into REDCap. All REDCap data collection instruments can also be downloaded and printed with data entered as a universal PDF file. Q: Can I transition data collected in other applications (ex: MS Access or Excel) into REDCap? It depends on the project design and application you are transitioning from. For example, there are a few options to get metadata out of MS Access to facilitate the creation of a REDCap data dictionary: For Access 2003 or earlier, there is a third-party software (CSD Tools) that can export field names, types, and descriptions to MS Excel. You can also extract this information yourself using MS Access. Table names can be queried from the hidden system table "MSysObjects", and a table's metadata can be accessed in VBA using the Fields collection of a DAO Recordset, ADO Recordset, or DAO TableDef. The extracted metadata won't give you a complete REDCap data dictionary, but at least it's a start. -

Translation of ER-Diagram Into Relational Schema

TranslationTranslation ofof ERER --diagramdiagram intointo RelationalRelational SchemaSchema Dr. Sunnie S. Chung CIS430/530 LearningLearning ObjectivesObjectives Define each of the following database terms Relation Primary key Foreign key Referential integrity Field Data type Null value Discuss the role of designing databases in the analysis and design of an information system Learn how to transform an entity-relationship (ER) 9.29.2 Diagram into an equivalent set of well-structured relations 2 3 9.49.4 4 5 ProcessProcess ofof DatabaseDatabase DesignDesign • Logical Design – Based upon the conceptual data model – Four key steps 1. Develop a logical data model for each known user interface for the application using normalization principles. 2. Combine normalized data requirements from all user interfaces into one consolidated logical database model 3. Translate the conceptual E-R data model for the application into normalized data requirements 4. Compare the consolidated logical database design with the 9.69.6 translated E-R model and produce one final logical database model for the application 6 9.79.7 7 RelationalRelational DatabaseDatabase ModelModel • Data represented as a set of related tables or relations • Relation – A named, two-dimensional table of data. Each relation consists of a set of named columns and an arbitrary number of unnamed rows – Properties • Entries in cells are simple • Entries in columns are from the same set of values • Each row is unique • The sequence of columns can be interchanged without changing the meaning or use of the relation • The rows may be interchanged or stored in any 9.89.8 sequence 8 RelationalRelational DatabaseDatabase ModelModel • Well-Structured Relation – A relation that contains a minimum amount of redundancy and allows users to insert, modify and delete the rows without errors or inconsistencies 9.99.9 9 TransformingTransforming EE --RR DiagramsDiagrams intointo RelationsRelations • It is useful to transform the conceptual data model into a set of normalized relations • Steps 1. -

Introduction to Data Types and Field Properties Table of Contents OVERVIEW

Introduction to Data Types and Field Properties Table of Contents OVERVIEW ........................................................................................................................................................ 2 WHEN TO USE WHICH DATA TYPE ........................................................................................................... 2 BASIC TYPES ...................................................................................................................................................... 2 NUMBER ............................................................................................................................................................. 3 DATA AND TIME ................................................................................................................................................ 4 YES/NO .............................................................................................................................................................. 4 OLE OBJECT ...................................................................................................................................................... 4 ADDITIONAL FIELD PROPERTIES ........................................................................................................................ 4 DATA TYPES IN RELATIONSHIPS AND JOINS ....................................................................................................... 5 REFERENCE FOR DATA TYPES ................................................................................................................. -

Creating Tables and Relationships

Access 2007 Creating Databases - Fundamentals Contents Database Design Objectives of database design 1 Process of database design 1 Creating a New Database.............................................................................................................. 3 Tables ............................................................................................................................................ 4 Creating a table in design view 4 Defining fields 4 Creating new fields 5 Modifying table design 6 The primary key 7 Indexes 8 Saving your table 9 Field properties 9 Calculated Field Properties (Access 2010 only) 13 Importing Data ............................................................................................................................. 14 Importing data from Excel 14 Lookup fields ................................................................................................................................ 16 Modifying the Data Design of a Table ........................................................................................20 Relationships ................................................................................................................................22 Creating relationships 23 Viewing or editing existing relationships 24 Referential integrity 24 Viewing Sub Datasheets 26 . Page 2 of 29 Database Design Time spent in designing a database is time very well spent. A well-designed database is the key to efficient management of data. You need to think about what information is needed and -

Access: Relationships Table Relationships in a Relational Database, Information About a Particular Subject Is Stored in Its

Access: Relationships Table Relationships In a relational database, information about a particular subject is stored in its own table. The purpose of this is so that you do not need to store redundant data. For example, if you have a database with information about students and classes you would want to store the information about classes separately so that you do not have to enter all the details about a particular class such as time, place, instructor, credits, etc. over again for every student enrolled in that class. A relationship works by matching data in key columns, usually columns with the same name in both tables. In most cases, the relationship matches the primary key from one table, which provides a unique identifier for each row, with the same type of field in the other table. The inclusion of this field in the second table is called a “foreign key”. A foreign key is one or more table fields (columns) that refer to the primary key field or fields in another table. After the tables are created, relationships are defined by linking the related data together through the field(s) they have in common. In the example below, ID# in the Faculty table is the primary key for that table. In the Contracts Table, PI is essentially the same field as ID#, containing the same type of information. PI is a foreign key in the Contracts Table. Note: Linking fields do not have to have the same name. They must be of the same data type and length for a relationship to be established, however.