Real Performance of FPGAs Tops GPUs in the Race to Accelerate AI

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

NVIDIA GEFORCE GT 630 Step up to Better Video, Photo, Game, and Web Performance

NVIDIA GEFORCE GT 630: Step up to better video, photo, game, and web performance. Every PC deserves dedicated graphics. The NVIDIA® GeForce® GT 630 graphics card delivers a premium multimedia experience and reliable gaming, every time. Insist on NVIDIA dedicated graphics for the faster, more immersive performance your customers want. “Sixty percent of integrated graphics owners want dedicated graphics in their next PC” [1]. Deliver more performance, and fun, with amazing HD video, photo, web, and gaming. Accelerate performance by up to 5x over today’s integrated graphics solutions [2] and provide additional dedicated memory. Give customers the freedom to connect their PC to any 3D-enabled TV for a rich, cinematic Blu-ray 3D experience in their homes [4]. GEFORCE GT 630 TECHNICAL SPECIFICATIONS: CUDA cores: 96; graphics clock: 810 MHz; processor clock: 1620 MHz; memory interface: 128 bit; frame buffer: 512/1024 MB GDDR5 or 1024 MB DDR3; Microsoft DirectX: 11; Microsoft DirectCompute: yes; Blu-ray 3D [4]: yes; TrueHD and DTS-HD audio bitstreaming: yes; NVIDIA PhysX™-ready: yes; max. analog / digital resolution: 2048x1536 (analog) / 2560x1600 (digital); display connectors: Dual Link DVI, HDMI, VGA. BEST-IN-CLASS PERFORMANCE. NVIDIA GEFORCE GT 630 | SELLSHEET | MAY 12. Features and benefits: HD VIDEOS: Get stunning picture clarity, smooth video, accurate color, and precise image scaling for movies and video with NVIDIA PureVideo® HD technology. WEB ACCELERATION: Enjoy a 2x faster web experience with the latest generation of GPU-accelerated web browsers (Internet Explorer, Google Chrome, and Firefox) [5]. PHOTO EDITING: Perfect and share your photos in half the time with popular apps like Adobe® Photoshop® and Nik Silver EFX Pro 2 [5].

Mac Labs. More Creativity

Mac labs. More creativity. More engagement. More learning. Every Mac comes ready for 21st-century learning. Creativity and innovation are critical skills in the modern workplace, which makes them critical skills to teach today’s students. Apple offers comprehensive lab solutions for your school or university that will creatively engage students and motivate them to take an interest in their own learning. The iLife ’09 suite of applications comes standard on every Mac, so students can immediately begin creating podcasts, editing videos, composing music, producing photo essays and more. Mac or PC? Have both. Now one lab really can solve all your computing needs. Every Mac is powered by an Intel processor and features Mac OS X – the world’s most advanced operating system. Mac OS X comes with an amazing dual-boot feature called Boot Camp that lets students run Windows XP or Vista natively ... Mac OS X Server creates the ultimate lab environment. Mac OS X Server includes simplified tools for creating wikis and blogs, so students and staff can set up and manage them with little or no help from IT. The Spotlight Server feature makes it easy for students collaborating on projects to find and view content stored anywhere on the network. Of course, access controls are built in, so they only get the search results they’re authorised to see. Mac OS X Server also allows for real-time streaming of audio and video content, which lets everyone get right to work instead of waiting for downloads – saving major storage across your network. Launch careers from your Mac lab. As an Apple Authorised Training Centre for Education, your school can build a bridge between students and real-world careers.

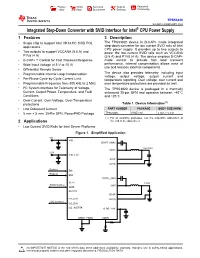

Integrated Step-Down Converter with SVID Interface for Intel® CPU

TPS53820, SLUSE33 – FEBRUARY 2020. Integrated Step-Down Converter with SVID Interface for Intel® CPU Power Supply. 1 Features: • Single chip to support Intel VR13.HC SVID POL applications • Two outputs to support VCCANA (5.5 A) and P1V8 (4 A) • D-CAP+™ Control for Fast Transient Response • Wide Input Voltage (4.5 V to 15 V) • Differential Remote Sense • Programmable Internal Loop Compensation • Per-Phase Cycle-by-Cycle Current Limit • Programmable Frequency from 800 kHz to 2 MHz • I2C System Interface for Telemetry of Voltage, Current, Output Power, Temperature, and Fault Conditions • Over-Current, Over-Voltage, Over-Temperature protections • Low Quiescent Current • 5 mm × 5 mm, 35-Pin QFN, PowerPAD Package. 2 Applications ... 3 Description: The TPS53820 device is D-CAP+ mode integrated step-down converter for low current SVID rails of Intel CPU power supply. It provides up to two outputs to power the low current SVID rails such as VCCANA (5.5 A) and P1V8 (4 A). The device employs D-CAP+ mode control to provide fast load transient performance. Internal compensation allows ease of use and reduces external components. The device also provides telemetry, including input voltage, output voltage, output current and temperature reporting. Over voltage, over current and over temperature protections are provided as well. The TPS53820 device is packaged in a thermally enhanced 35-pin QFN and operates between –40°C and 125°C. Table 1. Device Information (1): part number TPS53820; package RWZ (35); body size (nom) 5 mm × 5 mm. (1) For all available packages, see the orderable addendum at the end of the data sheet.

Data Sheet: Quadro GV100

REINVENTING THE WORKSTATION WITH REAL-TIME RAY TRACING AND AI: NVIDIA QUADRO GV100. The Power To Accelerate AI-Enhanced Workflows. The NVIDIA® Quadro® GV100 reinvents the workstation to meet the demands of AI-enhanced design and visualization workflows. It’s powered by NVIDIA Volta, delivering extreme memory capacity, scalability, and performance that designers, architects, and scientists need to create, build, and solve the impossible. Supercharge Rendering with AI: > Work with full fidelity, massive datasets > Enjoy fluid visual interactivity with AI-accelerated denoising. Bring Optimal Designs to Market Faster: > Work with higher fidelity CAE simulation models > Explore more design options with faster solver performance. Enjoy Ultimate Immersive Experiences: > Work with complex, photoreal datasets in VR > Enjoy optimal NVIDIA Holodeck experience. Realize New Opportunities with AI ... FEATURES: > Four DisplayPort 1.4 Connectors [3] > DisplayPort with Audio > 3D Stereo Support with Stereo Connector [3] > NVIDIA GPUDirect™ Support > NVIDIA NVLink Support [1] > Quadro Sync II [4] Compatibility > NVIDIA nView® Desktop Management Software > HDCP 2.2 Support > NVIDIA Mosaic [5] > Dedicated hardware video encode and decode engines [6]. SPECIFICATIONS: GPU Memory: 32 GB HBM2; Memory Interface: 4096-bit; Memory Bandwidth: up to 870 GB/s; ECC: yes; NVIDIA CUDA Cores: 5,120; NVIDIA Tensor Cores: 640; Double-Precision Performance: 7.4 TFLOPS; Single-Precision Performance: 14.8 TFLOPS; Tensor Performance: 118.5 TFLOPS; NVIDIA NVLink: connects 2 Quadro GV100 GPUs [2]; NVIDIA NVLink bandwidth: 200 GB/s.

Introduction to Intel® FPGA IP Cores

Introduction to Intel® FPGA IP Cores. Updated for Intel® Quartus® Prime Design Suite: 20.3. UG-01056 | 2020.11.09. Contents: 1. Introduction to Intel® FPGA IP Cores; 1.1. IP Catalog and Parameter Editor; 1.1.1. The Parameter Editor; 1.2. Installing and Licensing Intel FPGA IP Cores; 1.2.1. Intel FPGA IP Evaluation Mode; 1.2.2. Checking the IP License Status; 1.2.3. Intel FPGA IP Versioning; 1.2.4. Adding IP to IP Catalog; 1.3. Best Practices for Intel FPGA IP; 1.4. IP General Settings; 1.5. Generating IP Cores (Intel Quartus Prime Pro Edition); 1.5.1. IP Core Generation Output (Intel Quartus Prime Pro Edition); 1.5.2. Scripting IP Core Generation ...

Introducing the New 11th Gen Intel® Core™ Desktop Processors

Product Brief: 11th Gen Intel® Core™ Desktop Processors. Introducing the New 11th Gen Intel® Core™ Desktop Processors. The 11th Gen Intel® Core™ desktop processor family puts you in control of your compute experience. It features an innovative new architecture for reimagined performance, immersive display and graphics for incredible visuals, and a range of options and technologies for enhanced tuning. When these advances come together, you have everything you need for fast-paced professional work, elite gaming, inspired creativity, and extreme tuning. The 11th Gen Intel® Core™ desktop processor family gives you the power to perform, compete, excel, and power your greatest contributions. PERFORMANCE: Reimagined Performance. 11th Gen Intel® Core™ desktop processors are intelligently engineered to push the boundaries of performance. The new processor core architecture transforms hardware and software efficiency and takes advantage of Intel® Deep Learning Boost to accelerate AI performance. Key platform improvements include memory support up to DDR4-3200, up to 20 CPU PCIe 4.0 lanes [1], integrated USB 3.2 Gen 2x2 (20G), and Intel® Optane™ memory H20 with SSD support [2]. Together, these technologies bring the power and the intelligence you need to supercharge productivity, stay in the creative flow, and game at the highest level. Experience rich, stunning, seamless visuals with the high-performance graphics on 11th Gen Intel® Core™ desktop ...

Intel® Omni-Path Architecture (Intel® OPA) for Machine Learning

The Intel® Omni-Path Architecture (OPA) for Machine Learning. Sponsored by Intel. Srini Chari, Ph.D., MBA and M. R. Pamidi Ph.D., December 2017, www.cabotpartners.com. Executive Summary: Machine Learning (ML), a major component of Artificial Intelligence (AI), is rapidly evolving and significantly improving growth, profits and operational efficiencies in virtually every industry. This is being driven – in large part – by continuing improvements in High Performance Computing (HPC) systems and related innovations in software and algorithms to harness these HPC systems. However, there are several barriers to implementing Machine Learning (particularly Deep Learning – DL, a subset of ML) at scale: • It is hard for HPC systems to perform and scale to handle the massive growth of the volume, velocity and variety of data that must be processed. • Implementing DL requires deploying several technologies: applications, frameworks, libraries, development tools and reliable HPC processors, fabrics and storage. This is hard, laborious and very time-consuming. • Training followed by Inference are two separate ML steps. Training traditionally took days/weeks, whereas Inference was near real-time. Increasingly, to make more accurate inferences, faster re-Training on new data is required. So, Training must now be done in a few hours. This requires novel parallel computing methods and large-scale high-performance systems/fabrics. To help clients overcome these barriers and unleash AI/ML innovation, Intel provides a comprehensive ML solution stack with multiple technology options. Intel’s pioneering research in parallel ML algorithms and the Intel® Omni-Path Architecture (OPA) fabric minimize communications overhead and improve ML computational efficiency at scale.

Download GTX 970 Driver

GeForce Windows 10 Driver. NVIDIA has been working closely with Microsoft on the development of Windows 10 and DirectX 12. Coinciding with the arrival of Windows 10, this Game Ready driver includes the latest tweaks, bug fixes, and optimizations to ensure you have the best possible gaming experience. Game Ready: Best gaming experience for Windows 10. GeForce GTX TITAN X, GeForce GTX TITAN, GeForce GTX TITAN Black, GeForce GTX TITAN Z. GeForce 900 Series: GeForce GTX 980 Ti, GeForce GTX 980, GeForce GTX 970, GeForce GTX 960. GeForce 700 Series: GeForce GTX 780 Ti, GeForce GTX 780, GeForce GTX 770, GeForce GTX 760, GeForce GTX 760 Ti (OEM), GeForce GTX 750 Ti, GeForce GTX 750, GeForce GTX 745, GeForce GT 740, GeForce GT 730, GeForce GT 720, GeForce GT 710, GeForce GT 705.

Datasheet Quadro K600

ACCELERATE YOUR CREATIVITY: NVIDIA® QUADRO® K620. Accelerate your creativity with NVIDIA® Quadro®, the world’s most powerful workstation graphics. The NVIDIA Quadro K620 offers impressive power-efficient 3D application performance and capability. 2 GB of DDR3 GPU memory with fast bandwidth enables you to create large, complex 3D models, and a flexible single-slot and low-profile form factor makes it compatible with even the most space and power-constrained chassis. Plus, an all-new display engine drives up to four displays with DisplayPort 1.2 support for ultra-high resolutions like 3840x2160 @ 60 Hz with 30-bit color. Quadro cards are certified with a broad range of sophisticated professional applications, tested by leading workstation manufacturers, and backed by a global team of support specialists, giving you the peace of mind to focus on doing your best work. Whether you’re developing revolutionary products or telling spectacularly vivid visual stories, Quadro gives you the performance to do it brilliantly. FEATURES: > DisplayPort 1.2 Connector > DisplayPort with Audio > DVI-I Dual-Link Connector > VGA Support [1] > NVIDIA nView™ Desktop Management Software Compatibility > HDCP Support > NVIDIA Mosaic [2]. SPECIFICATIONS: GPU Memory: 2 GB DDR3; Memory Interface: 128-bit; Memory Bandwidth: 29.0 GB/s; NVIDIA CUDA® Cores: 384; System Interface: PCI Express 2.0 x16; Max Power Consumption: 45 W; Thermal Solution: Ultra-Quiet Active Fansink; Form Factor: 2.713” H × 6.3” L, Single Slot, Low Profile; Display Connectors: DVI-I DL + DP 1.2; Max Simultaneous Displays: 2 direct, 4 DP 1.2 Multi-Stream; Max DP 1.2 Resolution: 3840 × 2160 at 60 Hz; Max DVI-I DL Resolution: 2560 × 1600 at 60 Hz; Max DVI-I SL Resolution: 1920 × 1200 at 60 Hz; Max VGA Resolution: 2048 × 1536 at 85 Hz; Graphics APIs: Shader Model 5.0, OpenGL 4.5 [3], DirectX 11.2 [4], Vulkan 1.0 [3]; Compute APIs: CUDA, DirectCompute, OpenCL™. [1] Via supplied adapter/connector/bracket | [2] Windows 7, 8, 8.1 and Linux | [3] Product is based on a published Khronos Specification, and is expected to pass the Khronos Conformance Testing Process when available.

Intel® Arria® 10 Device Overview

Intel® Arria® 10 Device Overview. A10-OVERVIEW | 2020.10.20. Contents: Intel® Arria® 10 Device Overview; Key Advantages of Intel Arria 10 Devices; Summary of Intel Arria 10 Features; Intel Arria 10 Device Variants and Packages; Intel Arria 10 GX; Intel Arria 10 GT; Intel Arria 10 SX; I/O Vertical Migration for Intel Arria 10 Devices; Adaptive Logic Module; Variable-Precision DSP Block; Embedded Memory Blocks; Types of Embedded Memory; Embedded Memory Capacity in ...

NVIDIA AI-on-5G Computing Platform Adopted by Leading Service and Network Infrastructure Providers

NVIDIA AI-on-5G Computing Platform Adopted by Leading Service and Network Infrastructure Providers. Fujitsu, Google Cloud, Mavenir, Radisys and Wind River to Deliver Solutions for Smart Hospitals, Factories, Warehouses and Stores. GTC: NVIDIA today announced it is teaming with Fujitsu, Google Cloud, Mavenir, Radisys and Wind River to develop solutions for NVIDIA’s AI-on-5G platform, which will speed the creation of smart cities and factories, advanced hospitals and intelligent stores. Enterprises, mobile network operators and cloud service providers that deploy the platform will be able to handle both 5G and edge AI computing in a single, converged platform. The AI-on-5G platform leverages the NVIDIA Aerial™ software development kit with the NVIDIA BlueField®-2 A100, a converged card that combines GPUs and DPUs including NVIDIA’s “5T for 5G” solution. This creates high-performance 5G RAN and AI applications in an optimal platform to manage precision manufacturing robots, automated guided vehicles, drones, wireless cameras, self-checkout aisles and hundreds of other transformational projects. “In this era of continuous, accelerated computing, network operators are looking to take advantage of the security, low latency and ability to connect hundreds of devices on one node to deliver the widest range of services in ways that are cost-effective, flexible and efficient,” said Ronnie Vasishta, senior vice president of Telecom at NVIDIA. “With the support of key players in the 5G industry, we’re delivering on the promise of AI everywhere.” NVIDIA and several collaborators in this new AI-on-5G ecosystem are members of the O-RAN Alliance, which is developing standards for more intelligent, open, virtualized and fully interoperable mobile networks.

NVIDIA A100 Supercharges CFD with Altair and Google Cloud

NVIDIA A100 SUPERCHARGES CFD WITH ALTAIR AND GOOGLE CLOUD. Image courtesy of Altair. Ultra-Fast CFD powered by Altair, Google Cloud, and NVIDIA. The newest A2 VM family on Google Cloud Platform was designed to meet today’s most demanding applications. With up to 16 NVIDIA® A100 Tensor Core GPUs in a node, each offering up to 20X the compute performance of the previous generation and a whopping total of up to 640 GB of GPU memory, CFD simulations with Altair ultraFluidX™ were the ideal candidate to test the performance and benefits of the new normal. With ultraFluidX, highly resolved transient aerodynamics simulations can be performed on a single server. The Lattice Boltzmann Method is a perfect fit for the massively parallel architecture of NVIDIA GPUs, and sets the stage for unprecedented turnaround times. What used to be overnight runs on single nodes now becomes possible within working hours by utilizing state-of-the-art GPU-optimized algorithms, the new NVIDIA A100, and Google Cloud’s A2 VM family, while delivering the high ... TAILORED FOR YOU: > Get exactly as many GPUs as you need, for exactly as long as you need them, and pay just for that. FASTER OUT OF THE BOX: > The new Ampere GPU architecture on Google Cloud A2 instances delivers the unprecedented acceleration and flexibility to power the world’s highest-performing elastic data centers for AI, data analytics, and HPC applications. THINK THE UNTHINKABLE: > With a total of up to 312 TFLOPS FP32 peak performance in the same node linked with NVLink technology, with an aggregate bandwidth of 9.6 TB/s, new solutions to many tough challenges are within reach.