Introduction

Recommended publications

Non Photorealistic Rendering, by Dmytro Tkachuk (Computer Graphics Seminar)

Non-photorealistic rendering (NPR) is a process by which computer engineers animate and represent items in styles inspired by paintings, drawings, cartoons and other sources that do not feature photorealism. Usage of NPR: 1. Entertainment (cartoons, movies, games, illustrations). 2. Technical illustrations (architectural drawings, assemblies, exploded view diagrams). 3. Smart depiction systems. Cel shading, also called toon shading, is a 3D technique based on a specific shading method that recreates the look of traditional 2D animation cels by using flat colours to shade 3D objects in an unrealistic way. The term no longer refers only to a shading method: cel shading is now also known, more generally, as an artistic style for making 3D graphics look cartoonish through specifically coloured textures and outlines that simulate drawn lines. Cel shading, how it works. Shading: the cel shading effect is generated from the 3D object's normals. For each normal, the angle between its direction and the direction to the light is determined. The renderer calculates the cosine of that angle and applies a specific tone to the corresponding face or area. Consequently, when the angle between normal and light is zero, the tone is brightest; as the angle increases, the tone becomes darker. The different tones are flat and change without gradients, simulating the cel painting style. Depending on the style, the number of tones can be increased or decreased. Outline: sometimes, to achieve a cartoon look, computer graphics developers include black outlines simulating drawing strokes.
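The cosine-to-tone mapping described in this excerpt can be sketched in a few lines of Python. This is a minimal illustration, not the presenter's implementation: the function name `cel_tone`, the three example tone values and the equal-width banding rule are all assumptions made for the sketch.

```python
import math

def cel_tone(normal, to_light, tones=(0.2, 0.5, 1.0)):
    """Pick one flat tone from the cosine of the angle between normal and light.

    `tones` is a hypothetical palette ordered from darkest to brightest;
    changing its length changes the number of bands.
    """
    n_len = math.sqrt(sum(c * c for c in normal))
    l_len = math.sqrt(sum(c * c for c in to_light))
    # Cosine of the angle between the two directions (dot product of unit vectors).
    cos_angle = sum(a * b for a, b in zip(normal, to_light)) / (n_len * l_len)
    # Surfaces facing away from the light get the darkest tone.
    cos_angle = max(0.0, cos_angle)
    # Split [0, 1] into equal bands, one per tone, with no gradient inside a band.
    index = min(int(cos_angle * len(tones)), len(tones) - 1)
    return tones[index]
```

With these assumed values, a normal pointing straight at the light returns the brightest tone and a normal perpendicular to it returns the darkest; adding or removing entries in `tones` increases or decreases the number of flat bands.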

ECSE 4961/6961: Computer Vision and Graphics for Digital Arts, DVDs for the Week of September 24–28

What Dreams May Come (Sept 24): Start with Chapter 6 (“Painting Your Own Surroundings”), about 24 minutes in. The setting is that the Robin Williams character has died and finds out that heaven, for him, is to be inside one of his wife’s paintings. There are some interesting effects reminiscent of (although probably not technically related to) Hertzmann’s paper on painterly rendering. At about 25:30 there’s a shot with the camera pushing forward through flowers and plants. At about 28:00 there are some effects of sun-dappled water that capture a painterly feel. At 29:00 there’s a short clip of a bird flying through an expressionistic sky. You can stop watching around 32:00 (when the characters enter the house). Next, go to the extras and select the featurette. Skip forward to about 11:00 and watch through 13:30, when the director and effects artists discuss how the painterly effect was achieved and show some raw footage and the steps toward the final effect. Discuss similarities and differences to Hertzmann, extensions and inspirations, technical strengths and weaknesses, etc. A Scanner Darkly (Sept 24): The whole movie is probably worth watching, but in the interest of keeping the video part to 15-20 minutes, I suggest going directly to the extras section of the disc. Watch the Theatrical Trailer, which gives a pretty good overview of the animation style in different scenes. Then watch the extra titled “The Weight of the Line: Animation Tales”.

AIM Awards Level 3 Certificate in Creative and Digital Media (QCF), Qualification Specification V2 (Version 2 – May 2015, © AIM Awards 2014)

AIM Awards Level 3 Certificate in Creative and Digital Media (QCF), 601/3355/7. Qualification Specification V2, Version 2 – May 2015, © AIM Awards 2014. Contents: Section One – Qualification Overview; Section Two – Structure and Content; Section Three – Assessment and Quality Assurance; Section Four – Operational Guidance; Section Five – Appendices (Appendix 1 – AIM Awards Glossary of Assessment Terms; Appendix 2 – QCF Level Descriptors). Section One, Qualification Overview. Introduction: Welcome to the AIM Awards Qualification Specification. We want to make your experience of working with AIM Awards as pleasant as possible. AIM Awards is a national Awarding Organisation, offering a large number of Ofqual regulated qualifications at different levels and in a wide range of subject areas. Our qualifications are flexible enough to be delivered in a range of settings, from small providers to large colleges and in the workplace, both nationally and internationally. We pride ourselves on offering the best possible customer service, and are always on hand to help if you have any questions. Our organisational structure and business processes enable us to respond quickly to the needs of customers and to develop new products that meet their specific needs.

Art Styles in Computer Games and the Default Bias

University of Huddersfield Repository. Jarvis, Nathan. Photorealism versus Non-Photorealism: Art styles in computer games and the default bias. Original Citation: Jarvis, Nathan (2013) Photorealism versus Non-Photorealism: Art styles in computer games and the default bias. Masters thesis, University of Huddersfield. This version is available at http://eprints.hud.ac.uk/id/eprint/19756/ The University Repository is a digital collection of the research output of the University, available on Open Access. Copyright and Moral Rights for the items on this site are retained by the individual author and/or other copyright owners. Users may access full items free of charge; copies of full text items generally can be reproduced, displayed or performed and given to third parties in any format or medium for personal research or study, educational or not-for-profit purposes without prior permission or charge, provided: • The authors, title and full bibliographic details are credited in any copy; • A hyperlink and/or URL is included for the original metadata page; and • The content is not changed in any way. For more information, including our policy and submission procedure, please contact the Repository Team at: [email protected]. http://eprints.hud.ac.uk/ Master of Research (MRes) Thesis, Nathan Jarvis, U0859020010, 18/09/2013. Supervisor: Daryl Marples. Co-Supervisor: Duke Gledhill. Contents: 1.0.0 – CONTENTS. 2.0.0 – ABSTRACT. 2.1.0 – LITERATURE REVIEW. 2.2.0 – SUMMARY OF CHANGES (SEPTEMBER 2013).

The Uses of Animation 1

The Uses of Animation (from Aspects of Animation: Steps to Learn Animated Cartoons). ANIMATION: Animation is the process of making the illusion of motion and change by means of the rapid display of a sequence of static images that minimally differ from each other. The illusion, as in motion pictures in general, is thought to rely on the phi phenomenon. Animators are artists who specialize in the creation of animation. Animation can be recorded with either analogue media, a flip book, motion picture film, video tape, digital media, including formats with animated GIF, Flash animation and digital video. To display animation, a digital camera, computer, or projector is used, along with new technologies as they are produced. Animation creation methods include the traditional animation creation method and those involving stop motion animation of two and three-dimensional objects, paper cutouts, puppets and clay figures. Images are displayed in rapid succession, usually 24, 25, 30, or 60 frames per second. THE MOST COMMON USES OF ANIMATION. Cartoons: The most common use of animation, and perhaps the origin of it, is cartoons. Cartoons appear all the time on television and in the cinema and can be used for entertainment, advertising, presentations and many more applications that are limited only by the imagination of the designer. The most important factors in making cartoons on a computer are reusability and flexibility. The system that will actually do the animation needs to be such that all the actions that are going to be performed can be repeated easily, without much fuss on the part of the animator.

Enhanced Cartoon and Comic Rendering

EUROGRAPHICS 2006 / D. W. Fellner and C. Hansen, Short Papers. Enhanced Cartoon and Comic Rendering. Martin Spindler, Niklas Röber, Robert Döhring and Maic Masuch, Department of Simulation and Graphics, Otto-von-Guericke University of Magdeburg, Germany. Abstract: In this work we present an extension to common cel shading techniques, and describe four new cartoon-like rendering styles applicable for real-time implementations: stylistic shadows, double contour lines, soft cel shading, and pseudo edges. Our work was mainly motivated by the rich set of stylistic elements and expressional possibilities within the medium comic. In particular, we were inspired by Miller’s “Sin City” and McFarlane’s “Spawn”. We designed algorithms for these styles, developed a real-time implementation and integrated it into a regular 3D game engine. Categories and Subject Descriptors (according to ACM CCS): I.3.7 [Three-Dimensional Graphics and Realism]: Color, shading, shadowing, and texture. 1. Introduction: For some years, the field of non-photorealistic rendering (NPR) has also focused on the representation of comic-like computer graphics, being limited to typical characteristics of cel shading. The gap in the expressiveness of traditional comics over their adoption in computer applications still remains, because only little research has been done in reflecting, which artistic style is appropriate for a certain purpose, [...] many cases, these techniques are better in emphasizing the essential aspects of a concept, when compared to a photorealistic rendition. Another traditional technique, known as cartoon- or cel shading, has also found its way into the field of computer graphics [Dec96, LMHB00]. Since its origin is in comic books and cartoon movies, this technique is often used in entertaining environments, such as computer games [Ubi].

Trigger Happy: Videogames and the Entertainment Revolution

Trigger Happy: Videogames and the Entertainment Revolution, by Steven Poole. Contents: ACKNOWLEDGMENTS. 1 RESISTANCE IS FUTILE: Our virtual history; Pixel generation; Meme machines; The shock of the new. 2 THE ORIGIN OF SPECIES: Beginnings; Art types; Happiness is a warm gun; In my mind and in my car; Might as well jump; Sometimes you kick; Heaven in here; Two tribes; Running up that hill; It’s a kind of magic; We can work it out; Family fortunes. 3 UNREAL CITIES

Artistic Styles Used in Computer Games

Pixel Art: Pixel art is a style that uses the older-looking visuals found in retro games. Pixel art is used in games because it is cheaper and easier for indie companies to produce and because it is a throwback to the older generation of games. It is used in modern games like Fez, a pixel art game that is 3D, which wasn't an available option in older generations of gaming, as most pixel art games were 2D. Another example of a pixel art game is Minecraft, which uses pixel art because it best fits Minecraft's block theme and block mechanics. Minecraft was made by a relatively small independent studio, so the use of pixel art would have been cheaper and easier than using high resolution graphics. Pixel art also appeals to adults who would have played older games with pixel graphics, and to children, as the graphics are simple and not intimidating. Pixel art games are created in raster graphics software, for example Microsoft Paint, Deluxe Paint and RPG Maker.
Pros of pixel art:
● It can be relatively quick and easy to create, which is useful for quick projects or small companies.
● Pixel art has an indie feel about it, which can attract an audience.
● Pixel art is a throwback to the older generations of games, which can attract an older audience who grew up with games in that art style.
Cons of pixel art:
● It can make the game look cheap and boring to some, due to the simple graphics in an age of photorealistic games.

Ray Tracing + Cel Shading Renderer Philip Carr and Thomas Leing CS 174

Overview
Project Goals:
● Produce a rendering engine capable of the following:
  ○ CPU and GPU-accelerated (CUDA) ray tracing (non-OpenGL GPU implementation done for the CS 179 project)
  ○ CPU and GPU-accelerated (GLSL) cel shading
  ○ Water wave simulation
● Due to limited time, water wave simulation was attempted, but not completed
Introduction: Ray Tracing
● Form of photorealistic rendering
● Simulates the way in which light would naturally illuminate the scene, as perceived by the camera/viewer
● Send rays out from camera direction/position into scene
  ○ Each pixel corresponds to a ray sent into the scene in the direction of the pixel in NDC space
  ○ Detect intersection between ray and some location on an object
  ○ If intersection occurs, color of point determined using Phong lighting model
  ○ Shadows implemented by sending ray from intersection location to light source and determining whether this ray intersects another location of some object along the way
● KDTree data structure used to dramatically speed up ray-object intersections, especially when ray tracing with triangle meshes
  ○ KDTree spatially organizes triangle mesh for logarithmic-time speedup
Ray Tracing Examples
Nvidia RTX demos: https://www.awn.com/news/nvidia-unveils-quadro-rtx-worlds-first-ray-tracing-gpu and https://devblogs.nvidia.com/ray-tracing-games-nvidia-rtx/
Ray Tracing Algorithm Overview
● Produce a KDTree for every triangle mesh object in the scene
● Given XRES x YRES screen resolution,
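The shadow test mentioned in the ray tracing introduction above (send a ray from the intersection point toward the light and check whether anything blocks it) can be sketched as follows. This is only an illustration under stated assumptions: the slides work with triangle meshes and a KDTree, whereas this sketch uses spheres and a plain loop to stay short, and the names `ray_sphere_t` and `in_shadow` are hypothetical.

```python
import math

def ray_sphere_t(origin, direction, center, radius):
    """Smallest positive t with origin + t * direction on the sphere, else None.

    `direction` is assumed to be unit length, so the quadratic's leading
    coefficient is 1.
    """
    oc = [o - c for o, c in zip(origin, center)]
    b = 2.0 * sum(d * x for d, x in zip(direction, oc))
    c = sum(x * x for x in oc) - radius * radius
    disc = b * b - 4.0 * c
    if disc < 0.0:
        return None  # the ray misses the sphere entirely
    sqrt_disc = math.sqrt(disc)
    for t in ((-b - sqrt_disc) / 2.0, (-b + sqrt_disc) / 2.0):
        if t > 1e-6:  # small offset avoids re-hitting the surface we start on
            return t
    return None

def in_shadow(point, light_pos, spheres):
    """True if any sphere lies between `point` and the light position."""
    to_light = [l - p for l, p in zip(light_pos, point)]
    dist = math.sqrt(sum(x * x for x in to_light))
    direction = [x / dist for x in to_light]
    for center, radius in spheres:
        t = ray_sphere_t(point, direction, center, radius)
        # Only hits closer than the light itself block it.
        if t is not None and t < dist:
            return True
    return False
```

For example, `in_shadow((0, 0, 0), (0, 10, 0), [((0, 5, 0), 1.0)])` reports an occluder, while a sphere placed beyond the light does not count. A spatial structure such as the KDTree the slides describe would replace the linear loop over objects.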

Edexcel BTEC Levels 4 and 5 Higher Nationals Specification in Creative Media Production

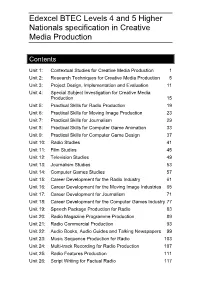

Contents
Unit 1: Contextual Studies for Creative Media Production
Unit 2: Research Techniques for Creative Media Production
Unit 3: Project Design, Implementation and Evaluation
Unit 4: Special Subject Investigation for Creative Media Production
Unit 5: Practical Skills for Radio Production
Unit 6: Practical Skills for Moving Image Production
Unit 7: Practical Skills for Journalism
Unit 8: Practical Skills for Computer Game Animation
Unit 9: Practical Skills for Computer Game Design
Unit 10: Radio Studies
Unit 11: Film Studies
Unit 12: Television Studies
Unit 13: Journalism Studies
Unit 14: Computer Games Studies
Unit 15: Career Development for the Radio Industry
Unit 16: Career Development for the Moving Image Industries
Unit 17: Career Development for Journalism
Unit 18: Career Development for the Computer Games Industry
Unit 19: Speech Package Production for Radio
Unit 20: Radio Magazine Programme Production
Unit 21: Radio Commercial Production
Unit 22: Audio Books, Audio Guides and Talking Newspapers
Unit 23: Music Sequence Production for Radio
Unit 24: Multi-track Recording for Radio Production
Unit 25: Radio Features Production
Unit 26: Script Writing for Factual Radio
Unit 27: Interview and Presentation Techniques for Radio
Unit 28: Producing Multi-platform Radio Programmes
Unit 29: Radio Studio Technology
Unit 30: Camera and Lighting Techniques for Moving

What Is Cel Shading?

A type of non-photorealistic rendering that makes 3D graphics appear flat by using a small number of shading colours rather than a gradient, tint or shade. The term ‘cel shading’ is popularly used to refer to the application of the ‘ink’ outlining process in animation and games, to imitate the style of comic books or cartoons. The style is often used in, but is not exclusive to, video games. It is also useful for rendering elements in 2D productions, for example in scenes that are difficult to animate by hand. Some animated series are produced entirely using cel shading, often for similar reasons. Technical info: Lighting values are calculated for each pixel and then quantized (a process that reduces the number of distinct colours used in an image) to a small number of shades to create a characteristic flat look, where shadows and highlights appear as blocks of colour rather than a smooth mix. A brief history: First appeared around the beginning of the 21st century. Named after cels (or celluloid): clear sheets of acetate painted on for use in traditional 2D animation. Cel shading has become synonymous with games, with Jet Set Radio, released in 2000 on the Sega Dreamcast, being one of the first to incorporate the style. There have been many more titles since, including The Legend of Zelda: The Wind Waker, Ōkami (incorporating the sumi-e style of ink painting) and the Borderlands series. How does it work? The characteristic black ‘ink’ and contour lines can be created in a variety of ways. A common way is to render a black outline slightly larger than the object itself, using inverted backface culling with the triangles and/or polygons drawn in black.

Toon Shading and Surface Patches

Computer Graphics, CSE 167, Lecture 14 (CSE 167, Winter 2018). Based on slides courtesy of Jurgen Schulze.
CSE 167: Computer graphics
• Toon shading
  – A non-photorealistic rendering method
• Surface patches
  – Generalization of curves
Toon shading
• Also called Cel Shading (“Cel” is short for “celluloid” sheets, on which animation was hand-drawn)
• Gives any 3D model a cartoon-style look
• Emphasizes silhouettes
• Discrete steps for diffuse shading, highlights
• Non-photorealistic rendering method (NPR)
• Programmable shaders allow real-time performance
Source: Wikipedia
Approach
[Figure: off-line toon shader and GLSL toon shader renderings]
• Start with regular 3D model
• Apply two rendering tricks
  – Silhouette edges
    • Emphasize pixels with normals perpendicular to viewing direction
  – Discretized shading
    • Conventional (smooth) lighting values calculated for each pixel, then mapped to a small number of discrete shades
Source: Wikipedia
Silhouette edges
• Silhouette edge detection
  – Compute dot product of viewing direction v and normal n
  – Use cutoff value for edge:
    • If edge < 0.01 draw black, else do not change pixel color
  – Use 1D texture to define edge ramp for smoother transition: uniform sampler1D edgeramp; e = texture1D(edgeramp, edge);
[Figure: edge ramp plot, edgeramp (0 to 1) against edge]
Discretized shading
• Compute diffuse and specular shading
• Discretize shading
  – Approaches
    • If then else tree comparing values against thresholds: if (diffuse < A) diffuse = 0.0; else if (diffuse < B) diffuse
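The silhouette edge test from the slides above (dot product of viewing direction and normal, compared against a cutoff of 0.01) can be sketched on the CPU in Python. This is a rough illustration rather than the lecture's shader: the helper names are hypothetical, clamping the dot product at zero is an assumption, and `edge_ramp` is a simple linear stand-in for the 1D texture lookup, with an assumed `width` parameter.

```python
import math

def _normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return [c / length for c in v]

def edge_value(view_dir, normal):
    """Dot product of unit view direction and unit normal, clamped at 0.

    `view_dir` is assumed to point from the surface toward the viewer.
    """
    v, n = _normalize(view_dir), _normalize(normal)
    return max(0.0, sum(a * b for a, b in zip(v, n)))

def silhouette_color(view_dir, normal, base_color, cutoff=0.01):
    """Draw black where the normal is nearly perpendicular to the view."""
    if edge_value(view_dir, normal) < cutoff:
        return (0.0, 0.0, 0.0)
    return base_color  # otherwise leave the pixel color unchanged

def edge_ramp(edge, width=0.1):
    """Linear ramp from 0 to 1 over [0, width]; a stand-in for the 1D texture."""
    return min(edge / width, 1.0)
```

Multiplying the pixel color by `edge_ramp(edge_value(v, n))` instead of using the hard cutoff gives a softer transition into the outline, which is the role the slides assign to the edge ramp texture.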