PA-RISC 8X00 Family of Microprocessors with Focus on PA-8700

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Computer Science 246 Computer Architecture Spring 2010 Harvard University

Computer Science 246 Computer Architecture Spring 2010 Harvard University Instructor: Prof. David Brooks [email protected] Dynamic Branch Prediction, Speculation, and Multiple Issue Computer Science 246 David Brooks Lecture Outline • Tomasulo’s Algorithm Review (3.1-3.3) • Pointer-Based Renaming (MIPS R10000) • Dynamic Branch Prediction (3.4) • Other Front-end Optimizations (3.5) – Branch Target Buffers/Return Address Stack Computer Science 246 David Brooks Tomasulo Review • Reservation Stations – Distribute RAW hazard detection – Renaming eliminates WAW hazards – Buffering values in Reservation Stations removes WARs – Tag match in CDB requires many associative compares • Common Data Bus – Achilles heal of Tomasulo – Multiple writebacks (multiple CDBs) expensive • Load/Store reordering – Load address compared with store address in store buffer Computer Science 246 David Brooks Tomasulo Organization From Mem FP Op FP Registers Queue Load Buffers Load1 Load2 Load3 Load4 Load5 Store Load6 Buffers Add1 Add2 Mult1 Add3 Mult2 Reservation To Mem Stations FP adders FP multipliers Common Data Bus (CDB) Tomasulo Review 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 LD F0, 0(R1) Iss M1 M2 M3 M4 M5 M6 M7 M8 Wb MUL F4, F0, F2 Iss Iss Iss Iss Iss Iss Iss Iss Iss Ex Ex Ex Ex Wb SD 0(R1), F0 Iss Iss Iss Iss Iss Iss Iss Iss Iss Iss Iss Iss Iss M1 M2 M3 Wb SUBI R1, R1, 8 Iss Ex Wb BNEZ R1, Loop Iss Ex Wb LD F0, 0(R1) Iss Iss Iss Iss M Wb MUL F4, F0, F2 Iss Iss Iss Iss Iss Ex Ex Ex Ex Wb SD 0(R1), F0 Iss Iss Iss Iss Iss Iss Iss Iss Iss M1 M2 -

Subchapter 2.4–Hp Server Rp5400 Series

Chapter 2 hp server rp5400 series Subchapter 2.4—hp server rp5400 series hp server rp5470 Table 2.4.1 HP Server rp5470 Specifications Server model number rp5470 Max. Network Interface Cards (cont.)–see supported I/O table Server product number A6144B ATM 155 Mb/s–MMF 10 Number of Processors 1-4 ATM 155 Mb/s–UTP5 10 Supported Processors ATM 622 Mb/s–MMF 10 PA-RISC PA-8700 Processor @ 650 and 750 MHz 802.5 Token Ring 4/16/100 Mb/s 10 Cache–Instr/data per CPU (KB) 750/1500 Dual port X.25/SDLC/FR 10 Floating Point Coprocessor included Yes Quad port X.25/FR 7 FDDI 10 Max. Additional Interface Cards–see supported I/O table 8 port Terminal Multiplexer 4 64 port Terminal Multiplexer 10 PA-RISC PA-8600 Processor @ 550 MHz Graphics/USB kit 1 kit (2 cards) Cache–Instr/data/CPU (KB) 512/1024 Public Key Cryptography 10 Floating Point Coprocessor included Yes HyperFabric 7 Electrical Characteristics TPM estimate (4 CPUs) 34,500 AC Input power 100-240V 50/60 Hz SPECweb99 (4 CPUs) 3,750 Hotswap Power supplies 2 included, 3rd for N+1 Redundant AC power inputs 2 required, 3rd for N+1 Min. memory 256 MB Current requirements at 200V 6.5 A (shared across inputs) Max. memory capacity 16 GB Typical Power dissipation (watts) 1008 W Internal Disks Maximum Power dissipation (watts) 1 1360 W Max. disk mechanisms 4 Power factor at full load .98 Max. disk capacity 292 GB kW rating for UPS loading1 1.3 Standard Integrated I/O Maximum Heat dissipation (BTUs/hour) 1 4380 - (3000 typical) Ultra2 SCSI–LVD Yes Site Preparation 10/100Base-T (RJ-45 connector) Yes Site planning and installation included No RS-232 serial ports (multiplexed from DB-25 port) 3 Depth (mm/inches) 774 mm/30.5 Web Console (including 10Base-T port) Yes Width (mm/inches) 482 mm/19 I/O buses and slots Rack Height (EIA/mm/inches) 7 EIA/311/12.25 Total PCI Slots (supports 66/33 MHz×64/32 bits) 10 Deskside Height (mm/inches) 368 mm/14.5 2 Hot-Plug Twin-Turbo (500 MB/s) and 6 Hot-Plug Turbo slots (250 MB/s) Weight (kg/lbs) Max. -

Analysis of GPGPU Programs for Data-Race and Barrier Divergence

Analysis of GPGPU Programs for Data-race and Barrier Divergence Santonu Sarkar1, Prateek Kandelwal2, Soumyadip Bandyopadhyay3 and Holger Giese3 1ABB Corporate Research, India 2MathWorks, India 3Hasso Plattner Institute fur¨ Digital Engineering gGmbH, Germany Keywords: Verification, SMT Solver, CUDA, GPGPU, Data Races, Barrier Divergence. Abstract: Todays business and scientific applications have a high computing demand due to the increasing data size and the demand for responsiveness. Many such applications have a high degree of parallelism and GPGPUs emerge as a fit candidate for the demand. GPGPUs can offer an extremely high degree of data parallelism owing to its architecture that has many computing cores. However, unless the programs written to exploit the architecture are correct, the potential gain in performance cannot be achieved. In this paper, we focus on the two important properties of the programs written for GPGPUs, namely i) the data-race conditions and ii) the barrier divergence. We present a technique to identify the existence of these properties in a CUDA program using a static property verification method. The proposed approach can be utilized in tandem with normal application development process to help the programmer to remove the bugs that can have an impact on the performance and improve the safety of a CUDA program. 1 INTRODUCTION ans that the program will never end-up in an errone- ous state, or will never stop functioning in an arbitrary With the order of magnitude increase in computing manner, is a well-known and critical property that an demand in the business and scientific applications, de- operational system should exhibit (Lamport, 1977). -

OS and Compiler Considerations in the Design of the IA-64 Architecture

OS and Compiler Considerations in the Design of the IA-64 Architecture Rumi Zahir (Intel Corporation) Dale Morris, Jonathan Ross (Hewlett-Packard Company) Drew Hess (Lucasfilm Ltd.) This is an electronic reproduction of “OS and Compiler Considerations in the Design of the IA-64 Architecture” originally published in ASPLOS-IX (the Ninth International Conference on Architectural Support for Programming Languages and Operating Systems) held in Cambridge, MA in November 2000. Copyright © A.C.M. 2000 1-58113-317-0/00/0011...$5.00 Permission to make digital or hard copies of part or all of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full cita- tion on the first page. Copyrights for components of this work owned by others than ACM must be honored. Abstracting with credit is permitted. To copy otherwise, to republish, to post on servers, or to redistribute to lists, requires prior specific permis- sion and/or a fee. Request permissions from Publications Dept, ACM Inc., fax +1 (212) 869-0481, or [email protected]. ASPLOS 2000 Cambridge, MA Nov. 12-15 , 2000 212 OS and Compiler Considerations in the Design of the IA-64 Architecture Rumi Zahir Jonathan Ross Dale Morris Drew Hess Intel Corporation Hewlett-Packard Company Lucas Digital Ltd. 2200 Mission College Blvd. 19447 Pruneridge Ave. P.O. Box 2459 Santa Clara, CA 95054 Cupertino, CA 95014 San Rafael, CA 94912 [email protected] [email protected] [email protected] [email protected] ABSTRACT system collaborate to deliver higher-performance systems. -

Selective Eager Execution on the Polypath Architecture

Selective Eager Execution on the PolyPath Architecture Artur Klauser, Abhijit Paithankar, Dirk Grunwald [klauser,pvega,grunwald]@cs.colorado.edu University of Colorado, Department of Computer Science Boulder, CO 80309-0430 Abstract average misprediction latency is the sum of the architected latency (pipeline depth) and a variable latency, which depends on data de- Control-flow misprediction penalties are a major impediment pendencies of the branch instruction. The overall cycles lost due to high performance in wide-issue superscalar processors. In this to branch mispredictions are the product of the total number of paper we present Selective Eager Execution (SEE), an execution mispredictions incurred during program execution and the average model to overcome mis-speculation penalties by executing both misprediction latency. Thus, reduction of either component helps paths after diffident branches. We present the micro-architecture decrease performance loss due to control mis-speculation. of the PolyPath processor, which is an extension of an aggressive In this paper we propose Selective Eager Execution (SEE) and superscalar, out-of-order architecture. The PolyPath architecture the PolyPath architecture model, which help to overcome branch uses a novel instruction tagging and register renaming mechanism misprediction latency. SEE recognizes the fact that some branches to execute instructions from multiple paths simultaneously in the are predicted more accurately than others, where it draws from re- same processor pipeline, while retaining maximum resource avail- search in branch confidence estimation [4, 6]. SEE behaves like ability for single-path code sequences. a normal monopath speculative execution architecture for highly Results of our execution-driven, pipeline-level simulations predictable branches; it predicts the most likely successor path of a show that SEE can improve performance by as much as 36% for branch, and evaluates instructions only along this path. -

Igpu: Exception Support and Speculative Execution on Gpus

Appears in the 39th International Symposium on Computer Architecture, 2012 iGPU: Exception Support and Speculative Execution on GPUs Jaikrishnan Menon, Marc de Kruijf, Karthikeyan Sankaralingam Department of Computer Sciences University of Wisconsin-Madison {menon, dekruijf, karu}@cs.wisc.edu Abstract the evolution of traditional CPUs to illuminate why this pro- gression appears natural and imminent. Since the introduction of fully programmable vertex Just as CPU programmers were forced to explicitly man- shader hardware, GPU computing has made tremendous age CPU memories in the days before virtual memory, for advances. Exception support and speculative execution are almost a decade, GPU programmers directly and explicitly the next steps to expand the scope and improve the usabil- managed the GPU memory hierarchy. The recent release ity of GPUs. However, traditional mechanisms to support of NVIDIA’s Fermi architecture and AMD’s Fusion archi- exceptions and speculative execution are highly intrusive to tecture, however, has brought GPUs to an inflection point: GPU hardware design. This paper builds on two related in- both architectures implement a unified address space that sights to provide a unified lightweight mechanism for sup- eliminates the need for explicit memory movement to and porting exceptions and speculation on GPUs. from GPU memory structures. Yet, without demand pag- First, we observe that GPU programs can be broken into ing, something taken for granted in the CPU space, pro- code regions that contain little or no live register state at grammers must still explicitly reason about available mem- their entry point. We then also recognize that it is simple to ory. The drawbacks of exposing physical memory size to generate these regions in such a way that they are idempo- programmers are well known. -

Evolving GPU Machine Code

Journal of Machine Learning Research 16 (2015) 673-712 Submitted 11/12; Revised 7/14; Published 4/15 Evolving GPU Machine Code Cleomar Pereira da Silva [email protected] Department of Electrical Engineering Pontifical Catholic University of Rio de Janeiro (PUC-Rio) Rio de Janeiro, RJ 22451-900, Brazil Department of Education Development Federal Institute of Education, Science and Technology - Catarinense (IFC) Videira, SC 89560-000, Brazil Douglas Mota Dias [email protected] Department of Electrical Engineering Pontifical Catholic University of Rio de Janeiro (PUC-Rio) Rio de Janeiro, RJ 22451-900, Brazil Cristiana Bentes [email protected] Department of Systems Engineering State University of Rio de Janeiro (UERJ) Rio de Janeiro, RJ 20550-013, Brazil Marco Aur´elioCavalcanti Pacheco [email protected] Department of Electrical Engineering Pontifical Catholic University of Rio de Janeiro (PUC-Rio) Rio de Janeiro, RJ 22451-900, Brazil Leandro Fontoura Cupertino [email protected] Toulouse Institute of Computer Science Research (IRIT) University of Toulouse 118 Route de Narbonne F-31062 Toulouse Cedex 9, France Editor: Una-May O'Reilly Abstract Parallel Graphics Processing Unit (GPU) implementations of GP have appeared in the lit- erature using three main methodologies: (i) compilation, which generates the individuals in GPU code and requires compilation; (ii) pseudo-assembly, which generates the individuals in an intermediary assembly code and also requires compilation; and (iii) interpretation, which interprets the codes. This paper proposes a new methodology that uses the concepts of quantum computing and directly handles the GPU machine code instructions. Our methodology utilizes a probabilistic representation of an individual to improve the global search capability. -

A Survey of Published Attacks on Intel

1 A Survey of Published Attacks on Intel SGX Alexander Nilsson∗y, Pegah Nikbakht Bideh∗, Joakim Brorsson∗zx falexander.nilsson,pegah.nikbakht_bideh,[email protected] ∗Lund University, Department of Electrical and Information Technology, Sweden yAdvenica AB, Sweden zCombitech AB, Sweden xHyker Security AB, Sweden Abstract—Intel Software Guard Extensions (SGX) provides a Unfortunately, a relatively large number of flaws and attacks trusted execution environment (TEE) to run code and operate against SGX have been published by researchers over the last sensitive data. SGX provides runtime hardware protection where few years. both code and data are protected even if other code components are malicious. However, recently many attacks targeting SGX have been identified and introduced that can thwart the hardware A. Contribution defence provided by SGX. In this paper we present a survey of all attacks specifically targeting Intel SGX that are known In this paper, we present the first comprehensive review to the authors, to date. We categorized the attacks based on that includes all known attacks specific to SGX, including their implementation details into 7 different categories. We also controlled channel attacks, cache-attacks, speculative execu- look into the available defence mechanisms against identified tion attacks, branch prediction attacks, rogue data cache loads, attacks and categorize the available types of mitigations for each presented attack. microarchitectural data sampling and software-based fault in- jection attacks. For most of the presented attacks, there are countermeasures and mitigations that have been deployed as microcode patches by Intel or that can be employed by the I. INTRODUCTION application developer herself to make the attack more difficult (or impossible) to exploit. -

Readingsample

Embedded Robotics Mobile Robot Design and Applications with Embedded Systems Bearbeitet von Thomas Bräunl Neuausgabe 2008. Taschenbuch. xiv, 546 S. Paperback ISBN 978 3 540 70533 8 Format (B x L): 17 x 24,4 cm Gewicht: 1940 g Weitere Fachgebiete > Technik > Elektronik > Robotik Zu Inhaltsverzeichnis schnell und portofrei erhältlich bei Die Online-Fachbuchhandlung beck-shop.de ist spezialisiert auf Fachbücher, insbesondere Recht, Steuern und Wirtschaft. Im Sortiment finden Sie alle Medien (Bücher, Zeitschriften, CDs, eBooks, etc.) aller Verlage. Ergänzt wird das Programm durch Services wie Neuerscheinungsdienst oder Zusammenstellungen von Büchern zu Sonderpreisen. Der Shop führt mehr als 8 Millionen Produkte. CENTRAL PROCESSING UNIT . he CPU (central processing unit) is the heart of every embedded system and every personal computer. It comprises the ALU (arithmetic logic unit), responsible for the number crunching, and the CU (control unit), responsible for instruction sequencing and branching. Modern microprocessors and microcontrollers provide on a single chip the CPU and a varying degree of additional components, such as counters, timing coprocessors, watchdogs, SRAM (static RAM), and Flash-ROM (electrically erasable ROM). Hardware can be described on several different levels, from low-level tran- sistor-level to high-level hardware description languages (HDLs). The so- called register-transfer level is somewhat in-between, describing CPU compo- nents and their interaction on a relatively high level. We will use this level in this chapter to introduce gradually more complex components, which we will then use to construct a complete CPU. With the simulation system Retro [Chansavat Bräunl 1999], [Bräunl 2000], we will be able to actually program, run, and test our CPUs. -

MT-088: Analog Switches and Multiplexers Basics

MT-088 TUTORIAL Analog Switches and Multiplexers Basics INTRODUCTION Solid-state analog switches and multiplexers have become an essential component in the design of electronic systems which require the ability to control and select a specified transmission path for an analog signal. These devices are used in a wide variety of applications including multi- channel data acquisition systems, process control, instrumentation, video systems, etc. Switches and multiplexers of the late 1960s were designed with discrete MOSFET devices and were manufactured in small PC boards or modules. With the development of CMOS processes (yielding good PMOS and NMOS transistors on the same substrate), switches and multiplexers rapidly gravitated to integrated circuit form in the mid-1970s, with product introductions such as the Analog Devices' popular AD7500-series (introduced in 1973). A dielectrically-isolated family of these parts introduced in 1976 allowed input overvoltages of ± 25 V (beyond the supply rails) and was insensitive to latch-up. These early CMOS switches and multiplexers were typically designed to handle signal levels up to ±10 V while operating on ±15-V supplies. In 1979, Analog Devices introduced the popular ADG200-series of switches and multiplexers, and in 1988 the ADG201-series was introduced which was fabricated on a proprietary linear-compatible CMOS process (LC2MOS). These devices allowed input signals to ±15 V when operating on ±15-V supplies. A large number of switches and multiplexers were introduced in the 1980s and 1990s, with the trend toward lower on-resistance, faster switching, lower supply voltages, lower cost, lower power, and smaller surface-mount packages. Today, analog switches and multiplexers are available in a wide variety of configurations, options, etc., to suit nearly all applications. -

Processor-103

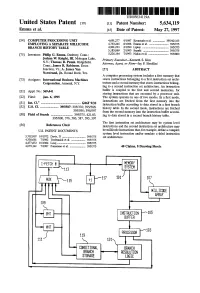

US005634119A United States Patent 19 11 Patent Number: 5,634,119 Emma et al. 45 Date of Patent: May 27, 1997 54) COMPUTER PROCESSING UNIT 4,691.277 9/1987 Kronstadt et al. ................. 395/421.03 EMPLOYING ASEPARATE MDLLCODE 4,763,245 8/1988 Emma et al. ........................... 395/375 BRANCH HISTORY TABLE 4,901.233 2/1990 Liptay ...... ... 395/375 5,185,869 2/1993 Suzuki ................................... 395/375 75) Inventors: Philip G. Emma, Danbury, Conn.; 5,226,164 7/1993 Nadas et al. ............................ 395/800 Joshua W. Knight, III, Mohegan Lake, Primary Examiner-Kenneth S. Kim N.Y.; Thomas R. Puzak. Ridgefield, Attorney, Agent, or Firm-Jay P. Sbrollini Conn.; James R. Robinson, Essex Junction, Vt.; A. James Wan 57 ABSTRACT Norstrand, Jr., Round Rock, Tex. A computer processing system includes a first memory that 73 Assignee: International Business Machines stores instructions belonging to a first instruction set archi Corporation, Armonk, N.Y. tecture and a second memory that stores instructions belong ing to a second instruction set architecture. An instruction (21) Appl. No.: 369,441 buffer is coupled to the first and second memories, for storing instructions that are executed by a processor unit. 22 Fied: Jan. 6, 1995 The system operates in one of two modes. In a first mode, instructions are fetched from the first memory into the 51 Int, C. m. G06F 9/32 instruction buffer according to data stored in a first branch 52 U.S. Cl. ....................... 395/587; 395/376; 395/586; history table. In the second mode, instructions are fetched 395/595; 395/597 from the second memory into the instruction buffer accord 58 Field of Search ........................... -

A Remotely Accessible Network Processor-Based Router for Network Experimentation

A Remotely Accessible Network Processor-Based Router for Network Experimentation Charlie Wiseman, Jonathan Turner, Michela Becchi, Patrick Crowley, John DeHart, Mart Haitjema, Shakir James, Fred Kuhns, Jing Lu, Jyoti Parwatikar, Ritun Patney, Michael Wilson, Ken Wong, David Zar Department of Computer Science and Engineering Washington University in St. Louis fcgw1,jst,mbecchi,crowley,jdd,mah5,scj1,fredk,jl1,jp,ritun,mlw2,kenw,[email protected] ABSTRACT Keywords Over the last decade, programmable Network Processors Programmable routers, network testbeds, network proces- (NPs) have become widely used in Internet routers and other sors network components. NPs enable rapid development of com- plex packet processing functions as well as rapid response to 1. INTRODUCTION changing requirements. In the network research community, the use of NPs has been limited by the challenges associ- Multi-core Network Processors (NPs) have emerged as a ated with learning to program these devices and with using core technology for modern network components. This has them for substantial research projects. This paper reports been driven primarily by the industry's need for more flexi- on an extension to the Open Network Laboratory testbed ble implementation technologies that are capable of support- that seeks to reduce these \barriers to entry" by providing ing complex packet processing functions such as packet clas- a complete and highly configurable NP-based router that sification, deep packet inspection, virtual networking and users can access remotely and use for network experiments. traffic engineering. Equally important is the need for sys- The base router includes support for IP route lookup and tems that can be extended during their deployment cycle to general packet filtering, as well as a flexible queueing sub- meet new service requirements.