Coprocessors: Failures and Successes

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Intel® IA-64 Architecture Software Developer's Manual

Intel® IA-64 Architecture Software Developer’s Manual Volume 1: IA-64 Application Architecture Revision 1.1 July 2000 Document Number: 245317-002 THIS DOCUMENT IS PROVIDED “AS IS” WITH NO WARRANTIES WHATSOEVER, INCLUDING ANY WARRANTY OF MERCHANTABILITY, NONINFRINGEMENT, FITNESS FOR ANY PARTICULAR PURPOSE, OR ANY WARRANTY OTHERWISE ARISING OUT OF ANY PROPOSAL, SPECIFICATION OR SAMPLE. Information in this document is provided in connection with Intel products. No license, express or implied, by estoppel or otherwise, to any intellectual property rights is granted by this document. Except as provided in Intel's Terms and Conditions of Sale for such products, Intel assumes no liability whatsoever, and Intel disclaims any express or implied warranty, relating to sale and/or use of Intel products including liability or warranties relating to fitness for a particular purpose, merchantability, or infringement of any patent, copyright or other intellectual property right. Intel products are not intended for use in medical, life saving, or life sustaining applications. Intel may make changes to specifications and product descriptions at any time, without notice. Designers must not rely on the absence or characteristics of any features or instructions marked "reserved" or "undefined." Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them. Intel® IA-64 processors may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. Copies of documents which have an order number and are referenced in this document, or other Intel literature, may be obtained by calling 1-800- 548-4725, or by visiting Intel’s website at http://developer.intel.com/design/litcentr. -

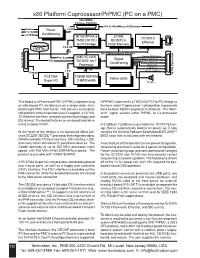

X86 Platform Coprocessor/Prpmc (PC on a PMC)

x86 Platform Coprocessor/PrPMC (PC on a PMC) 32b/33MHz PCI bus PN1/PN2 +5V to Kbd/Mouse/USB power Vcore Power +3.3V +2.5v Conditioning +3.3VIO 1K100 FPGA & 512KB 10/100TX TMS2250 PCI BIOS/PLA Ethernet RJ45 Compact to PCI bridge Flash Memory PLA I/O Flash site 8 32b/33MHz Internal PCI bus Analog SVGA Video Pwr Seq AMD SC2200 Signal COM 1 (RXD/TXD only) IDE "GEODE (tm)" Conditioning COM 2 (RXD/TXD only) Processor USB Port 1 Rear I/O PN4 I/O Rear 64 USB Port 2 LPC Keyboard/Mouse Floppy 36 Pin 36 Pin Connector PC87364 128MB SDRAM Status LEDs Super I/O (16MWx64b) PC Spkr This board is a Processor PMC (PrPMC) implementing A PrPMC implements a TMS2250 PCI-to-PCI bridge to an x86-based PC architecture on a single-wide, stan- the host, and a “Coprocessor” cofniguration implements dard height PMC form factor. This delivers a complete back-to-back 16550 compatible COM ports. The “Mon- x86 platform with comprehensive I/O support, a 10/100- arch” signal selects either PrPMC or Co-processor TX Ethernet interface, and disk access (both floppy and mode. IDE drives). The board features an on-board hard drive using Compact Flash. A 512Kbyte FLASH memory holds the 1K100 PLA im- age that is automatically loaded on power up. It also At the heart of the design is an Advanced Micro De- contains the General Software Embedded BIOS 2000™ vices SC2200 GEODE™ processor that integrates video, BIOS code that is included with each board. -

Convey Overview

THE WORLD’S FIRST HYBRID-CORE COMPUTER. CONVEY HYBRID-CORE COMPUTING Hybrid-core Computing Convey HC-1 High Performance of application- specific hardware Heterogenous solutions • can be much more efficient Performance/ • still hard to program Programmability and Power efficiency deployment ease of an x86 server Application Multicore solutions • don’t always scale well • parallel programming is hard Low Difficult Ease of Deployment Easy 1/22/2010 3 Hybrid-Core Computing Application-Specific Personalities Applications • Extend the x86 instruction set • Implement key operations in Convey Compilers hardware Life Sciences x86-64 ISA Custom ISA CAE Custom Financial Oil & Gas Shared Virtual Memory Cache-coherent, shared memory • Both ISAs address common memory *ISA: Instruction Set Architecture 7/12/2010 4 HC-1 Hardware PCI I/O FPGA FPGA Intel Personalities Chipset FPGA FPGA 8 GB/s 80 GB/s Memory Memory Cache Coherent, Shared Virtual Memory 1/22/2010 5 Using Personalities C/C++ Fortran • Personalities are user specifies reloadable personality at instruction sets Convey Software compile time Development Suite • Compiler instruction generates x86 descriptions and coprocessor instructions from Hybrid-Core Executable P ANSI standard x86-64 and Coprocessor Personalities C/C++ & Fortran Instructions • Executable can run on x86 nodes FPGA Convey HC-1 or Convey Hybrid- bitfiles Core nodes Intel x86 Coprocessor personality loaded at runtime by OS 1/22/2010 6 SYSTEM ARCHITECTURE HC-1 Architecture “Commodity” Intel Server Convey FPGA-based coprocessor Direct -

インテル® Parallel Studio XE 2020 リリースノート

インテル® Parallel Studio XE 2020 2019 年 12 月 5 日 内容 1 概要 ..................................................................................................................................................... 2 2 製品の内容 ........................................................................................................................................ 3 2.1 インテルが提供するデバッグ・ソリューションの追加情報 ..................................................... 5 2.2 Microsoft* Visual Studio* Shell の提供終了 ........................................................................ 5 2.3 インテル® Software Manager .................................................................................................... 5 2.4 サポートするバージョンとサポートしないバージョン ............................................................ 5 3 新機能 ................................................................................................................................................ 6 3.1 インテル® Xeon Phi™ 製品ファミリーのアップデート ......................................................... 12 4 動作環境 .......................................................................................................................................... 13 4.1 プロセッサーの要件 ...................................................................................................................... 13 4.2 ディスク空き容量の要件 .............................................................................................................. 13 4.3 オペレーティング・システムの要件 ............................................................................................. 13 -

Appendix D an Alternative to RISC: the Intel 80X86

D.1 Introduction D-2 D.2 80x86 Registers and Data Addressing Modes D-3 D.3 80x86 Integer Operations D-6 D.4 80x86 Floating-Point Operations D-10 D.5 80x86 Instruction Encoding D-12 D.6 Putting It All Together: Measurements of Instruction Set Usage D-14 D.7 Concluding Remarks D-20 D.8 Historical Perspective and References D-21 D An Alternative to RISC: The Intel 80x86 The x86 isn’t all that complex—it just doesn’t make a lot of sense. Mike Johnson Leader of 80x86 Design at AMD, Microprocessor Report (1994) © 2003 Elsevier Science (USA). All rights reserved. D-2 I Appendix D An Alternative to RISC: The Intel 80x86 D.1 Introduction MIPS was the vision of a single architect. The pieces of this architecture fit nicely together and the whole architecture can be described succinctly. Such is not the case of the 80x86: It is the product of several independent groups who evolved the architecture over 20 years, adding new features to the original instruction set as you might add clothing to a packed bag. Here are important 80x86 milestones: I 1978—The Intel 8086 architecture was announced as an assembly language– compatible extension of the then-successful Intel 8080, an 8-bit microproces- sor. The 8086 is a 16-bit architecture, with all internal registers 16 bits wide. Whereas the 8080 was a straightforward accumulator machine, the 8086 extended the architecture with additional registers. Because nearly every reg- ister has a dedicated use, the 8086 falls somewhere between an accumulator machine and a general-purpose register machine, and can fairly be called an extended accumulator machine. -

Introduction to Intel Xeon Phi Programming Models

Introduction to Intel Xeon Phi programming models F.Affinito F. Salvadore SCAI - CINECA Part I Introduction to the Intel Xeon Phi architecture Trends: transistors Trends: clock rates Trends: cores and threads Trends: summarizing... The number of transistors increases The power consumption must not increase The density cannot increase on a single chip Solution : Increase the number of cores GP-GPU and Intel Xeon Phi.. Coupled to the CPU To accelerate highly parallel kernels, facing with the Amdahl Law What is Intel Xeon Phi? 7100 / 5100 / 3100 Series available 5110P: Intel Xeon Phi clock: 1053 MHz 60 cores in-order ~ 1 TFlops/s DP peak performance (2 Tflops SP) 4 hardware threads per core 8 GB DDR5 memory 512-bit SIMD vectors (32 registers) Fully-coherent L1 and L2 caches PCIe bus (rev. 2.0) Max Memory bandwidth (theoretical) 320 GB/s Max TDP: 225 W MIC vs GPU naïve comparison The comparison is naïve System K20s 5110P # cores 2496 60 (*4) Memory size 5 GB 8 GB Peak performance 3.52 TFlops 2 TFlops (SP) Peak performance 1.17 TFlops 1 TFlops (DP) Clock rate 0.706 GHz 1.053 GHz Memory bandwidth 208 GB/s (ECC off) 320 GB/s Terminology MIC = Many Integrated Cores is the name of the architecture Xeon Phi = Commercial name of the Intel product based on the MIC architecture Knight's corner, Knight's landing, Knight's ferry are development names of MIC architectures We will often refer to the CPU as HOST and Xeon Phi as DEVICE Is it an accelerator? YES: It can be used to “accelerate” hot-spots of the code that are highly parallel and computationally extensive In this sense, it works alongside the CPU It can be used as an accelerator using the “offload” programming model An important bottleneck is represented by the communication between host and device (through PCIe) Under this respect, it is very similar to a GPU Is it an accelerator? / 2 NOT ONLY: the Intel Xeon Phi can behave as a many-core X86 node. -

Exploiting Free Silicon for Energy-Efficient Computing Directly

Exploiting Free Silicon for Energy-Efficient Computing Directly in NAND Flash-based Solid-State Storage Systems Peng Li Kevin Gomez David J. Lilja Seagate Technology Seagate Technology University of Minnesota, Twin Cities Shakopee, MN, 55379 Shakopee, MN, 55379 Minneapolis, MN, 55455 [email protected] [email protected] [email protected] Abstract—Energy consumption is a fundamental issue in today’s data A well-known solution to the memory wall issue is moving centers as data continue growing dramatically. How to process these computing closer to the data. For example, Gokhale et al [2] data in an energy-efficient way becomes more and more important. proposed a processor-in-memory (PIM) chip by adding a processor Prior work had proposed several methods to build an energy-efficient system. The basic idea is to attack the memory wall issue (i.e., the into the main memory for computing. Riedel et al [9] proposed performance gap between CPUs and main memory) by moving com- an active disk by using the processor inside the hard disk drive puting closer to the data. However, these methods have not been widely (HDD) for computing. With the evolution of other non-volatile adopted due to high cost and limited performance improvements. In memories (NVMs), such as phase-change memory (PCM) and spin- this paper, we propose the storage processing unit (SPU) which adds computing power into NAND flash memories at standard solid-state transfer torque (STT)-RAM, researchers also proposed to use these drive (SSD) cost. By pre-processing the data using the SPU, the data NVMs as the main memory for data-intensive applications [10] to that needs to be transferred to host CPUs for further processing improve the system energy-efficiency. -

Introduction to High Performance Computing

Introduction to High Performance Computing Shaohao Chen Research Computing Services (RCS) Boston University Outline • What is HPC? Why computer cluster? • Basic structure of a computer cluster • Computer performance and the top 500 list • HPC for scientific research and parallel computing • National-wide HPC resources: XSEDE • BU SCC and RCS tutorials What is HPC? • High Performance Computing (HPC) refers to the practice of aggregating computing power in order to solve large problems in science, engineering, or business. • Purpose of HPC: accelerates computer programs, and thus accelerates work process. • Computer cluster: A set of connected computers that work together. They can be viewed as a single system. • Similar terminologies: supercomputing, parallel computing. • Parallel computing: many computations are carried out simultaneously, typically computed on a computer cluster. • Related terminologies: grid computing, cloud computing. Computing power of a single CPU chip • Moore‘s law is the observation that the computing power of CPU doubles approximately every two years. • Nowadays the multi-core technique is the key to keep up with Moore's law. Why computer cluster? • Drawbacks of increasing CPU clock frequency: --- Electric power consumption is proportional to the cubic of CPU clock frequency (ν3). --- Generates more heat. • A drawback of increasing the number of cores within one CPU chip: --- Difficult for heat dissipation. • Computer cluster: connect many computers with high- speed networks. • Currently computer cluster is the best solution to scale up computer power. • Consequently software/programs need to be designed in the manner of parallel computing. Basic structure of a computer cluster • Cluster – a collection of many computers/nodes. • Rack – a closet to hold a bunch of nodes. -

CUDA What Is GPGPU

What is GPGPU ? • General Purpose computation using GPU in applications other than 3D graphics CUDA – GPU accelerates critical path of application • Data parallel algorithms leverage GPU attributes – Large data arrays, streaming throughput Slides by David Kirk – Fine-grain SIMD parallelism – Low-latency floating point (FP) computation • Applications – see //GPGPU.org – Game effects (FX) physics, image processing – Physical modeling, computational engineering, matrix algebra, convolution, correlation, sorting Previous GPGPU Constraints CUDA • Dealing with graphics API per thread per Shader Input Registers • “Compute Unified Device Architecture” – Working with the corner cases per Context • General purpose programming model of the graphics API Fragment Program Texture – User kicks off batches of threads on the GPU • Addressing modes Constants – GPU = dedicated super-threaded, massively data parallel co-processor – Limited texture size/dimension Temp Registers • Targeted software stack – Compute oriented drivers, language, and tools Output Registers • Shader capabilities • Driver for loading computation programs into GPU FB Memory – Limited outputs – Standalone Driver - Optimized for computation • Instruction sets – Interface designed for compute - graphics free API – Data sharing with OpenGL buffer objects – Lack of Integer & bit ops – Guaranteed maximum download & readback speeds • Communication limited – Explicit GPU memory management – Between pixels – Scatter a[i] = p 1 Parallel Computing on a GPU Extended C • Declspecs • NVIDIA GPU Computing Architecture – global, device, shared, __device__ float filter[N]; – Via a separate HW interface local, constant __global__ void convolve (float *image) { – In laptops, desktops, workstations, servers GeForce 8800 __shared__ float region[M]; ... • Keywords • 8-series GPUs deliver 50 to 200 GFLOPS region[threadIdx] = image[i]; on compiled parallel C applications – threadIdx, blockIdx • Intrinsics __syncthreads() – __syncthreads .. -

Accelerators for HP Proliant Servers Enable Scalable and Efficient High-Performance Computing

Family data sheet Accelerators for HP ProLiant servers Enable scalable and efficient high-performance computing November 2014 Family data sheet | Accelerators for HP ProLiant servers HP high-performance computing has made it possible to accelerate innovation at any scale. But traditional CPU technology is no longer capable of sufficiently scaling performance to address the skyrocketing demand for compute resources. HP high-performance computing solutions are built on HP ProLiant servers using industry-leading accelerators to dramatically increase performance with lower power requirements. Innovation is the foundation for success What is hybrid computing? Accelerators are revolutionizing high performance computing A hybrid computing model is one where High-performance computing (HPC) is being used to address many of modern society’s biggest accelerators (known as GPUs or coprocessors) challenges, such as designing new vaccines and genetically engineering drugs to fight diseases, work together with CPUs to perform computing finding and extracting precious oil and gas resources, improving financial instruments, and tasks. designing more fuel efficient engines. As parallel processors, accelerators can split computations into hundreds or thousands of This rapid pace of innovation has created an insatiable demand for compute power. At the same pieces and calculate them simultaneously. time, multiple strict requirements are placed on system performance, power consumption, size, response, reliability, portability, and design time. Modern HPC systems are rapidly evolving, Offloading the most compute-intensive portions of already reaching petaflop and targeting exaflop performance. applications to accelerators dramatically increases both application performance and computational All of these challenges lead to a common set of requirements: a need for more computing efficiency. -

Quick-Reference Guide to Optimization with Intel® Compilers

Quick Reference Guide to Optimization with Intel® C++ and Fortran Compilers v19.1 For IA-32 processors, Intel® 64 processors, Intel® Xeon Phi™ processors and compatible non-Intel processors. Contents Application Performance .............................................................................................................................. 2 General Optimization Options and Reports ** ............................................................................................. 3 Parallel Performance ** ................................................................................................................................ 4 Recommended Processor-Specific Optimization Options ** ....................................................................... 5 Optimizing for the Intel® Xeon Phi™ x200 product family ............................................................................ 6 Interprocedural Optimization (IPO) and Profile-Guided Optimization (PGO) Options ................................ 7 Fine-Tuning (All Processors) ** ..................................................................................................................... 8 Floating-Point Arithmetic Options .............................................................................................................. 10 Processor Code Name With Instruction Set Extension Name Synonym .................................................... 11 Frequently Used Processor Names in Compiler Options ........................................................................... -

Broadwell Skylake Next Gen* NEW Intel NEW Intel NEW Intel Microarchitecture Microarchitecture Microarchitecture

15 лет доступности IOTG is extending the product availability for IOTG roadmap products from a minimum of 7 years to a minimum of 15 years when both processor and chipset are on 22nm and newer process technologies. - Xeon Scalable (w/ chipsets) - E3-12xx/15xx v5 and later (w/ chipsets) - 6th gen Core and later (w/ chipsets) - Bay Trail (E3800) and later products (Braswell, N3xxx) - Atom C2xxx (Rangeley) and later - Не включает в себя Xeon-D (7 лет) и E5-26xx v4 (7 лет) 2 IOTG Product Availability Life-Cycle 15 year product availability will start with the following products: Product Discontinuance • Intel® Xeon® Processor Scalable Family codenamed Skylake-SP and later with associated chipsets Notification (PDN)† • Intel® Xeon® E3-12xx/15xx v5 series (Skylake) and later with associated chipsets • 6th Gen Intel® Core™ processor family (Skylake) and later (includes Intel® Pentium® and Celeron® processors) with PDNs will typically be issued no later associated chipsets than 13.5 years after component • Intel Pentium processor N3700 (Braswell) and later and Intel Celeron processors N3xxx (Braswell) and J1900/N2xxx family introduction date. PDNs are (Bay Trail) and later published at https://qdms.intel.com/ • Intel® Atom® processor C2xxx (Rangeley) and E3800 family (Bay Trail) and late Last 7 year product availability Time Last Last Order Ship Last 15 year product availability Time Last Last Order Ship L-1 L L+1 L+2 L+3 L+4 L+5 L+6 L+7 L+8 L+9 L+10 L+11 L+12 L+13 L+14 L+15 Years Introduction of component family † Intel may support this extended manufacturing using reasonably Last Time Order/Ship Periods Component family introduction dates are feasible means deemed by Intel to be appropriate.