Managing Vulnerabilities in Machine Learning and Artificial Intelligence Systems, Featuring Allen Householder, Jonathan Spring, and Nathan VanHoudnos

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

Congressional Record—Senate S2006

S2006 CONGRESSIONAL RECORD — SENATE March 24, 2020 well as the efficacy—how well does it The reason we are here tonight is Those 1,700 restaurants and bars, in work?—over the course of several that we are both upset that people are this bill, if we pass it, can go to a cred- weeks. still talking about adding to the bill 48 it union or a bank. You can borrow We have some other amazing, mir- hours after having been on the 5-yard money to pay your employees up to acle drugs. They are called monoclonal line. We may be on the 1-yard line, but, $8,000. You can borrow money to pay antibodies. That is the technical term apparently, there are 20 people on de- the rent, pay yourself as the owner, for them. When you take that drug, it fense when we need to get the ball in and we will forgive it and make it a provides protection against the the end zone. grant for 6 or 8 weeks. That will change coronavirus so that you don’t even get I am just begging of my colleagues everybody’s life all over South Caro- the COVID–19 disease. who have worked very hard—and I am lina. Here is the challenge, Senator GRA- not being critical as much as I am It is time to change people’s lives. It HAM. We have to be operating on par- being insistent—because enough is is time to stop negotiating. It is time allel paths right now to make sure we enough. -

Rafm 10-22-2015

S. Hrg. 114–139 IMPROVING PAY FLEXIBILITIES IN THE FEDERAL WORKFORCE HEARING BEFORE THE SUBCOMMITTEE ON REGULATORY AFFAIRS AND FEDERAL MANAGEMENT OF THE COMMITTEE ON HOMELAND SECURITY AND GOVERNMENTAL AFFAIRS UNITED STATES SENATE ONE HUNDRED FOURTEENTH CONGRESS FIRST SESSION OCTOBER 22, 2015 Available via http://www.fdsys.gov Printed for the use of the Committee on Homeland Security and Governmental Affairs ( U.S. GOVERNMENT PUBLISHING OFFICE 97–881 PDF WASHINGTON : 2016 For sale by the Superintendent of Documents, U.S. Government Publishing Office Internet: bookstore.gpo.gov Phone: toll free (866) 512–1800; DC area (202) 512–1800 Fax: (202) 512–2104 Mail: Stop IDCC, Washington, DC 20402–0001 COMMITTEE ON HOMELAND SECURITY AND GOVERNMENTAL AFFAIRS RON JOHNSON, Wisconsin, Chairman JOHN MCCAIN, Arizona THOMAS R. CARPER, Delaware ROB PORTMAN, Ohio CLAIRE MCCASKILL, Missouri RAND PAUL, Kentucky JON TESTER, Montana JAMES LANKFORD, Oklahoma TAMMY BALDWIN, Wisconsin MICHAEL B. ENZI, Wyoming HEIDI HEITKAMP, North Dakota KELLY AYOTTE, New Hampshire CORY A. BOOKER, New Jersey JONI ERNST, Iowa GARY C. PETERS, Michigan BEN SASSE, Nebraska KEITH B. ASHDOWN, Staff Director GABRIELLE A. BATKIN, Minority Staff Director JOHN P. KILVINGTON, Minority Deputy Staff Director LAURA W. KILBRIDE, Chief Clerk SUBCOMMITTEE ON REGULATORY AFFAIRS AND FEDERAL MANAGEMENT JAMES LANKFORD, Oklahoma, Chairman JOHN MCCAIN, Arizona HEIDI HEITKAMP, North Dakota ROB PORTMAN, Ohio JON TESTER, Montana MICHAEL B. ENZI, Wyoming CORY A. BOOKER, New Jersey JONI ERNST, Iowa GARY C. PETERS, Michigan BEN SASSE, Nebraska JOHN CUADERESS, Staff Director ERIC BURSCH, Minority Staff Director RACHEL NITSCHE, Chief Clerk (II) C O N T E N T S Opening statement: Page Senator Lankford ............................................................................................. -

1 1 2 3 4 5 6 National Governors Association 7 Opening Session 8 9 Growing State Economy 10 11

1 1 2 3 4 5 6 NATIONAL GOVERNORS ASSOCIATION 7 OPENING SESSION 8 9 GROWING STATE ECONOMY 10 11 12 Virginia Ballroom 13 Williamsburg Lodge Conference Center 14 310 South England Street 15 Williamsburg, Virginia 16 July 13, 2012 17 18 19 20 21 ------------------------------------- 22 TAYLOE ASSOCIATES, INC. 23 Registered Professional Reporters 24 Telephone: (757) 461-1984 25 Norfolk, Virginia 2 1 PARTICIPANTS: 2 GOVERNOR DAVE HEINEMAN, NEBRASKA, CHAIR 3 GOVERNOR JACK MARKELL, DELAWARE, VICE CHAIR 4 5 6 GUEST: 7 JIM COLLINS, AUTHOR 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 3 1 P R O C E E D I N G S 2 CHAIRMAN HEINEMAN: Good afternoon, 3 governors and distinguished guests. I call to order 4 the 104th Annual Meeting of the National Governors 5 Association. 6 We have a full agenda for the next two 7 and a half days. Following this session, the 8 Education and Workforce Committee will discuss the 9 reauthorization of the Elementary and Secondary 10 Education Act, with Secretary of Education Arne Duncan 11 and former secretary Margaret Spellings. All 12 governors are encouraged to attend, and it will be in 13 this room here. 14 Saturday's business agenda begins with 15 the concurrent meetings of the Economic Development 16 Commerce Committee and the Health and Human Services 17 Committee. 18 We will then have a governors-only lunch 19 and business session followed by the meetings of the 20 Natural Resources Committee and the Special Committee 21 on Homeland Security and Public Safety. -

Rembrandt Remembers – 80 Years of Small Town Life

Rembrandt School Song Purple and white, we’re fighting for you, We’ll fight for all things that you can do, Basketball, baseball, any old game, We’ll stand beside you just the same, And when our colors go by We’ll shout for you, Rembrandt High And we'll stand and cheer and shout We’re loyal to Rembrandt High, Rah! Rah! Rah! School colors: Purple and White Nickname: Raiders and Raiderettes Rembrandt Remembers: 80 Years of Small-Town Life Compiled and Edited by Helene Ducas Viall and Betty Foval Hoskins Des Moines, Iowa and Harrisonburg, Virginia Copyright © 2002 by Helene Ducas Viall and Betty Foval Hoskins All rights reserved. iii Table of Contents I. Introduction . v Notes on Editing . vi Acknowledgements . vi II. Graduates 1920s: Clifford Green (p. 1), Hilda Hegna Odor (p. 2), Catherine Grigsby Kestel (p. 4), Genevieve Rystad Boese (p. 5), Waldo Pingel (p. 6) 1930s: Orva Kaasa Goodman (p. 8), Alvin Mosbo (p. 9), Marjorie Whitaker Pritchard (p. 11), Nancy Bork Lind (p. 12), Rosella Kidman Avansino (p. 13), Clayton Olson (p. 14), Agnes Rystad Enderson (p. 16), Alice Haroldson Halverson (p. 16), Evelyn Junkermeier Benna (p. 18), Edith Grodahl Bates (p. 24), Agnes Lerud Peteler (p. 26), Arlene Burwell Cannoy (p. 28 ), Catherine Pingel Sokol (p. 29), Loren Green (p. 30), Phyllis Johnson Gring (p. 34), Ken Hadenfeldt (p. 35), Lloyd Pressel (p. 38), Harry Edwall (p. 40), Lois Ann Johnson Mathison (p. 42), Marv Erichsen (p. 43), Ruth Hill Shankel (p. 45), Wes Wallace (p. 46) 1940s: Clement Kevane (p. 48), Delores Lady Risvold (p. -

LET's GO FLYING Book, at the Perfect Landing Restaurant, Centennial Airport Near Denver, Colorado

Cover:Layout 1 12/1/09 11:02 AM Page 1 LET’S GO LET’S FLYING G O FLYING Educational Programs Directorate & Drug Demand Reduction Program Civil Air Patrol National Headquarters 105 South Hansell Street Maxwell Air Force Base, Ala. 36112 Let's Go Fly Chapter 1:Layout 1 12/15/09 7:33 AM Page i CIVIL AIR PATROL DIRECTOR, EDUCATIONAL PROGRAMS DIRECTORATE Mr. James L. Mallett CHIEF, DRUG DEMAND REDUCTION PROGRAM Mr. Michael Simpkins CHIEF, AEROSPACE EDUCATION Dr. Jeff Montgomery AUTHOR & PROJECT DIRECTOR Dr. Ben Millspaugh EDITORIAL TEAM Ms. Gretchen Clayson; Ms. Susan Mallett; Lt. Col. Bruce Hulley, CAP; Ms. Crystal Bloemen; Lt. Col. Barbara Gentry, CAP; Major Kaye Ebelt, CAP; Capt. Russell Grell, CAP; and Mr. Dekker Zimmerman AVIATION CAREER SUPPORT TEAM Maj. Gen. Tandy Bozeman, USAF Ret.; Lt. Col. Ron Gendron, USAF; Lt. Col. Patrick Hanlon, USAF; Capt. Billy Mitchell, Capt. Randy Trujillo; and Capt. Rick Vigil LAYOUT & DESIGN Ms. Barb Pribulick PHOTOGRAPHY Mr. Adam Wright; Mr. Alex McMahon and Mr. Frank E. Mills PRINTING Wells Printing Montgomery, AL 2nd Edition Let's Go Fly Chapter 1:Layout 1 12/15/09 7:33 AM Page ii Contents Part One — Introduction to the world of aviation — your first flight on a commercial airliner . 1 Part Two — So you want to learn to fly — this is your introduction into actual flight training . 23 Part Three — Special programs for your aviation interest . 67 Part Four Fun things you can do with an interest in aviation . 91 Part Five Getting your “ticket” & passing the medical . 119 Part Six Interviews with aviation professionals . -

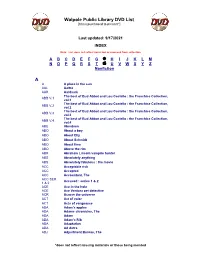

Walpole Public Library DVD List A

Walpole Public Library DVD List [Items purchased to present*] Last updated: 9/17/2021 INDEX Note: List does not reflect items lost or removed from collection A B C D E F G H I J K L M N O P Q R S T U V W X Y Z Nonfiction A A A place in the sun AAL Aaltra AAR Aardvark The best of Bud Abbot and Lou Costello : the Franchise Collection, ABB V.1 vol.1 The best of Bud Abbot and Lou Costello : the Franchise Collection, ABB V.2 vol.2 The best of Bud Abbot and Lou Costello : the Franchise Collection, ABB V.3 vol.3 The best of Bud Abbot and Lou Costello : the Franchise Collection, ABB V.4 vol.4 ABE Aberdeen ABO About a boy ABO About Elly ABO About Schmidt ABO About time ABO Above the rim ABR Abraham Lincoln vampire hunter ABS Absolutely anything ABS Absolutely fabulous : the movie ACC Acceptable risk ACC Accepted ACC Accountant, The ACC SER. Accused : series 1 & 2 1 & 2 ACE Ace in the hole ACE Ace Ventura pet detective ACR Across the universe ACT Act of valor ACT Acts of vengeance ADA Adam's apples ADA Adams chronicles, The ADA Adam ADA Adam’s Rib ADA Adaptation ADA Ad Astra ADJ Adjustment Bureau, The *does not reflect missing materials or those being mended Walpole Public Library DVD List [Items purchased to present*] ADM Admission ADO Adopt a highway ADR Adrift ADU Adult world ADV Adventure of Sherlock Holmes’ smarter brother, The ADV The adventures of Baron Munchausen ADV Adverse AEO Aeon Flux AFF SEAS.1 Affair, The : season 1 AFF SEAS.2 Affair, The : season 2 AFF SEAS.3 Affair, The : season 3 AFF SEAS.4 Affair, The : season 4 AFF SEAS.5 Affair, -

Television Academy

Television Academy 2014 Primetime Emmy Awards Ballot Outstanding Directing For A Comedy Series For a single episode of a comedy series. Emmy(s) to director(s). VOTE FOR NO MORE THAN FIVE achievements in this category that you have seen and feel are worthy of nomination. (More than five votes in this category will void all votes in this category.) 001 About A Boy Pilot February 22, 2014 Will Freeman is single, unemployed and loving it. But when Fiona, a needy, single mom and her oddly charming 11-year-old son, Marcus, move in next door, his perfect life is about to hit a major snag. Jon Favreau, Director 002 About A Boy About A Rib Chute May 20, 2014 Will is completely heartbroken when Sam receives a job opportunity she can’t refuse in New York, prompting Fiona and Marcus to try their best to comfort him. With her absence weighing on his mind, Will turns to Andy for his sage advice in figuring out how to best move forward. Lawrence Trilling, Directed by 003 About A Boy About A Slopmaster April 15, 2014 Will throws an afternoon margarita party; Fiona runs a school project for Marcus' class; Marcus learns a hard lesson about the value of money. Jeffrey L. Melman, Directed by 004 Alpha House In The Saddle January 10, 2014 When another senator dies unexpectedly, Gil John is asked to organize the funeral arrangements. Louis wins the Nevada primary but Robert has to face off in a Pennsylvania debate to cool the competition. Clark Johnson, Directed by 1 Television Academy 2014 Primetime Emmy Awards Ballot Outstanding Directing For A Comedy Series For a single episode of a comedy series. -

Department of Homeland Security Appropriations for Fiscal Year 2013

S. HRG. 112–838 DEPARTMENT OF HOMELAND SECURITY APPROPRIATIONS FOR FISCAL YEAR 2013 HEARINGS BEFORE A SUBCOMMITTEE OF THE COMMITTEE ON APPROPRIATIONS UNITED STATES SENATE ONE HUNDRED TWELFTH CONGRESS SECOND SESSION ON H.R. 5855/S. 3216 AN ACT MAKING APPROPRIATIONS FOR THE DEPARTMENT OF HOME- LAND SECURITY FOR THE FISCAL YEAR ENDING SEPTEMBER 30, 2013, AND FOR OTHER PURPOSES Department of Homeland Security Nondepartmental Witnesses Printed for the use of the Committee on Appropriations ( Available via the World Wide Web: http://www.gpo.gov/fdsys/browse/ committee.action?chamber=senate&committee=appropriations U.S. GOVERNMENT PRINTING OFFICE 72–317 PDF WASHINGTON : 2013 For sale by the Superintendent of Documents, U.S. Government Printing Office Internet: bookstore.gpo.gov Phone: toll free (866) 512–1800; DC area (202) 512–1800 Fax: (202) 512–2104 Mail: Stop IDCC, Washington, DC 20402–0001 COMMITTEE ON APPROPRIATIONS DANIEL K. INOUYE, Hawaii, Chairman PATRICK J. LEAHY, Vermont THAD COCHRAN, Mississippi TOM HARKIN, Iowa MITCH MCCONNELL, Kentucky BARBARA A. MIKULSKI, Maryland RICHARD C. SHELBY, Alabama HERB KOHL, Wisconsin KAY BAILEY HUTCHISON, Texas PATTY MURRAY, Washington SUSAN COLLINS, Maine DIANNE FEINSTEIN, California LISA MURKOWSKI, Alaska RICHARD J. DURBIN, Illinois LINDSEY GRAHAM, South Carolina TIM JOHNSON, South Dakota MARK KIRK, Illinois MARY L. LANDRIEU, Louisiana DANIEL COATS, Indiana JACK REED, Rhode Island ROY BLUNT, Missouri FRANK R. LAUTENBERG, New Jersey JERRY MORAN, Kansas BEN NELSON, Nebraska JOHN HOEVEN, North Dakota MARK PRYOR, Arkansas RON JOHNSON, Wisconsin JON TESTER, Montana SHERROD BROWN, Ohio CHARLES J. HOUY, Staff Director BRUCE EVANS, Minority Staff Director SUBCOMMITTEE ON THE DEPARTMENT OF HOMELAND SECURITY MARY L. -

Tax Credits Issued

TAX CREDITS ISSUED TOTAL APPLICANT CREDIT PRODUCTION NAME APPLICANT ADDRESS Curious Holdings LLC dba Curious Pictures Dan Zanes Show Pilot 440 Lafayette Street, 6th floor New York, N.Y. 10003 New York New York Productions, Inc. Good Shepherd 10 East Lee Street, Suite 2705 Baltimore, MD 21202 Forever the Film LLC Never Forever 180 Varick Street, Ste. 1002 New York, N.Y. 10014 c/o Trevanna Post, Inc. 161 Avenue of the Americas, #902 New Pretty Good Productions, Inc Win Win York, N.Y. 10013 Prime Film Production, LLC Prime 10850 Wilshire Blvd.,6th floor, Los Angeles, CA. 90024 The Ten Film, LLC Ten 6 East 39th Street, New York, N.Y. 10016 Fast Lane Productions Inc, dba FILMAURO Christmas (Natale) in NY 9623 Charleville Blvd. Beverly Hills, CA 90212 Girl in the Park, LLC Girl in the Park 9242 Beverly Blvd., Suite 200 Beverly Hills, CA 90210 57th & Irving The Cake Eaters LLC Cake Eaters 19 W 21st Street, Suite 706 New York, N.Y. 10010 c/o River Road Entertainment 60 South 6th Street, Suite 4050 FURTHEFILM, LLC Fur Minneapolis, MN 55402 Freedomland Productions, Inc. Freedomland 2900 West Olympic Blvd. 2nd floor Santa Monica, CA 90404 Griffin & Phoenix Productions LLC Griffin and Phoenix 9420 Wilshire Blvd., Suite 250 Beverly Hills, CA 90212 Reason For Leaving LP Wreck, The 601 W. 26th St. Suite 1255, New York, N.Y. 10001 Eric Nadler Transbeman 10 Jay Street, #204, Brooklyn, New York 11201 Gregory Lamberson Slime City Massacre 1824 Kensington Avenue, Cheektowaga, N.Y. 14215 Whitest Kids You Know Season 4; Whitest Kids You Know Season 3; Whitest Kids You L.C Incorporated $987,780 Know Season 5 c/o GRF LLP, 360 Hamilton Ave, #100 White Plains, NY 10601 Persona Films Inc. -

Cyber Security—2010 Hearings Committee On

S. Hrg. 111–1103 CYBER SECURITY—2010 HEARINGS BEFORE THE COMMITTEE ON HOMELAND SECURITY AND GOVERNMENTAL AFFAIRS UNITED STATES SENATE ONE HUNDRED ELEVENTH CONGRESS SECOND SESSION JUNE 15, 2010 PROTECTING CYBERSPACE AS A NATIONAL ASSET: COMPREHENSIVE LEGISLATION FOR THE 21ST CENTURY NOVEMBER 17, 2010 SECURING CRITICAL INFRASTRUCTURE IN THE AGE OF STUXNET Available via the World Wide Web: http://www.fdsys.gov/ Printed for the use of the Committee on Homeland Security and Governmental Affairs ( U.S. GOVERNMENT PRINTING OFFICE 58–034 PDF WASHINGTON : 2011 For sale by the Superintendent of Documents, U.S. Government Printing Office Internet: bookstore.gpo.gov Phone: toll free (866) 512–1800; DC area (202) 512–1800 Fax: (202) 512–2104 Mail: Stop IDCC, Washington, DC 20402–0001 VerDate Nov 24 2008 14:00 Nov 14, 2011 Jkt 058034 PO 00000 Frm 00001 Fmt 5011 Sfmt 5011 P:\DOCS\58034.TXT SAFFAIRS PsN: PAT COMMITTEE ON HOMELAND SECURITY AND GOVERNMENTAL AFFAIRS JOSEPH I. LIEBERMAN, Connecticut, Chairman CARL LEVIN, Michigan SUSAN M. COLLINS, Maine DANIEL K. AKAKA, Hawaii TOM COBURN, Oklahoma THOMAS R. CARPER, Delaware SCOTT P. BROWN, Massachusetts MARK L. PRYOR, Arkansas JOHN MCCAIN, Arizona MARY L. LANDRIEU, Louisiana GEORGE V. VOINOVICH, Ohio CLAIRE MCCASKILL, Missouri JOHN ENSIGN, Nevada JON TESTER, Montana LINDSEY GRAHAM, South Carolina ROLAND W. BURRIS, Illinois EDWARD E. KAUFMAN, Delaware * CHRISTOPHER A. COONS, Delaware * MICHAEL L. ALEXANDER, Staff Director DEBORAH P. PARKINSON, Senior Professional Staff Member ADAM R, SEDGEWICK, Professional Staff Member BRANDON L. MILHORN, Minority Staff Director and Chief Counsel ROBERT L. STRAYER, Minority Director of Homeland Security Affairs DEVIN F. -

Gone to Ground Brian Blair [email protected]

University of Missouri, St. Louis IRL @ UMSL Theses UMSL Graduate Works 11-27-2018 Gone To Ground Brian Blair [email protected] Follow this and additional works at: https://irl.umsl.edu/thesis Part of the Fiction Commons Recommended Citation Blair, Brian, "Gone To Ground" (2018). Theses. 341. https://irl.umsl.edu/thesis/341 This Thesis is brought to you for free and open access by the UMSL Graduate Works at IRL @ UMSL. It has been accepted for inclusion in Theses by an authorized administrator of IRL @ UMSL. For more information, please contact [email protected]. Gone to Ground: Stories Brian A. Blair B.A. English, University of Missouri-Columbia, 2002 A Thesis Submitted to The Graduate School at the University of Missouri-St. Louis in partial fulfillment of the requirements for the degree Master of Fine-Arts in Creative Writing December 2018 Advisory Committee John Dalton, MFA Chairperson Dr. Minsoo Kang, Ph.D. Mary Troy, MFA 1 Abstract Gone to Ground is a collection of short stories that explores the possibilities beyond the edge of the everyday. They are an attempt to peek beyond the imaginary boundaries we erect for ourselves in the name of danger or the unknown. Each story is an opportunity to see our own familiar humanity in others, no matter the accidents of fortune that separate us. Though the stories in Gone to Ground often touch the surreal or the magical, they are firmly rooted in what could be out there, on the other side of our walls, whether real or imagined. These are stories that resist telling the reader where they end, but where they may begin again. -

Transforming Government for the 21St Century

S. Hrg. 109–7 TRANSFORMING GOVERNMENT FOR THE 21ST CENTURY HEARING BEFORE THE COMMITTEE ON HOMELAND SECURITY AND GOVERNMENTAL AFFAIRS UNITED STATES SENATE ONE HUNDRED NINTH CONGRESS FIRST SESSION FEBRUARY 16, 2005 Printed for the use of the Committee on Homeland Security and Governmental Affairs ( U.S. GOVERNMENT PRINTING OFFICE 20–173 PDF WASHINGTON : 2005 For sale by the Superintendent of Documents, U.S. Government Printing Office Internet: bookstore.gpo.gov Phone: toll free (866) 512–1800; DC area (202) 512–1800 Fax: (202) 512–2250 Mail: Stop SSOP, Washington, DC 20402–0001 VerDate 0ct 09 2002 09:12 Apr 07, 2005 Jkt 020173 PO 00000 Frm 00001 Fmt 5011 Sfmt 5011 C:\DOCS\20173.TXT SAFFAIRS PsN: PHOGAN COMMITTEE ON HOMELAND SECURITY AND GOVERNMENTAL AFFAIRS SUSAN M. COLLINS, Maine, Chairman TED STEVENS, Alaska JOSEPH I. LIEBERMAN, Connecticut GEORGE V. VOINOVICH, Ohio CARL LEVIN, Michigan NORM COLEMAN, Minnesota DANIEL K. AKAKA, Hawaii TOM COBURN, Oklahoma THOMAS R. CARPER, Delaware LINCOLN D. CHAFEE, Rhode Island MARK DAYTON, Minnesota ROBERT F. BENNETT, Utah FRANK LAUTENBERG, New Jersey PETE V. DOMENICI, New Mexico MARK PRYOR, Arkansas JOHN W. WARNER, Virginia MICHAEL D. BOPP, Staff Director and Chief Counsel TOM ELDRIDGE, Senior Counsel DON BUMGARDNER, Professional Staff Member JOYCE A. RECHTSCHAFFEN, Minority Staff Director and Counsel DAVID BERICK, Minority Professional Staff Member AMY B. NEWHOUSE, Chief Clerk (II) VerDate 0ct 09 2002 09:12 Apr 07, 2005 Jkt 020173 PO 00000 Frm 00002 Fmt 5904 Sfmt 5904 C:\DOCS\20173.TXT SAFFAIRS PsN: PHOGAN C O N T E N T S Opening statement: Page Senator Collins ................................................................................................