Infrastructure and Primitives for Hardware Security in Integrated Circuits

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

ESC-470: ARM 9 Instruction Set Architecture with Performance

ARM 9 Instruction Set Architecture Introduction with Performance Perspective Joe-Ming Cheng, Ph.D. ARM-family processors are positioned among the leaders in key embedded applications. Many presentations and short lectures have already addressed the ARM’s applications and capabilities. In this introduction, we intend to discuss the uniqueness of the ARM instruction set from the performance perspective. This introduction also tries to follow the approaches established by two outstanding textbooks of David Patterson and John Hennessy [PetHen00] [HenPet02]. 1.0 ARM Instruction Set Architecture Processor instruction set architecture (ISA) choices have evolved from accumulator, stack, and register-to-memory to register-register (load-store) organization. ARM 9 ISA is a load-store machine. ARM 9 ISA takes advantage of its smaller set of registers (16 vs. many 32-register processors) to incorporate more direct controls and achieve high encoding density. ARM’s load or store multiple register instruction, for example, allows enlisting of all possible registers and conditional execution in one instruction. The Thumb mode instruction set is another example of how ARM ISA facilitates higher encoding density. Rather than compressing the code, Thumb-mode instructions are two 16-bit instructions packed in a 32-bit ARM-mode instruction space. The Thumb-mode instructions are a subset of ARM instructions. When executing in Thumb mode, a single 32-bit instruction fetch cycle effectively brings in two instructions. Thumb code reduces access bandwidth and code size, and improves the instruction cache hit rate. Another way ARM achieves cycle time reduction is by using Harvard architecture. The architecture facilitates independent data and instruction buses. -

RISC-V Geneology

RISC-V Geneology Tony Chen David A. Patterson Electrical Engineering and Computer Sciences University of California at Berkeley Technical Report No. UCB/EECS-2016-6 http://www.eecs.berkeley.edu/Pubs/TechRpts/2016/EECS-2016-6.html January 24, 2016 Copyright © 2016, by the author(s). All rights reserved. Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission. Introduction RISC-V is an open instruction set designed along RISC principles developed originally at UC Berkeley and is now set to become an open industry standard under the governance of the RISC-V Foundation (www.riscv.org). Since the instruction set architecture (ISA) is unrestricted, organizations can share implementations as well as open source compilers and operating systems. Designed for use in custom systems on a chip, RISC-V consists of a base set of instructions called RV32I along with optional extensions for multiply and divide (RV32M), atomic operations (RV32A), single-precision floating point (RV32F), and double-precision floating point (RV32D). The base and these four extensions are collectively called RV32G. This report discusses the historical precedents of RV32G. We look at 18 prior instruction set architectures, chosen primarily from earlier UC Berkeley RISC architectures and major proprietary RISC instruction sets. Among the 122 instructions in RV32G: ● 6 instructions do not have precedents among the selected instruction sets, ● 98 instructions of the 116 with precedents appear in at least three different instruction sets. -

A CAD Tool for Synthesizing Optimized Variants of Altera's Nios II Soft-Core Processor

A CAD Tool for Synthesizing Optimized Variants of Altera's Nios II Soft-Core Processor By Omar Al Rayahi A Thesis Submitted to the Faculty of Graduate Studies through Electrical and Computer Engineering in Partial Fulfillment of the Requirements for the Degree of Master of Applied Science at the University of Windsor Windsor, Ontario, Canada 2008 Library and Archives Canada, Published Heritage Branch, 395 Wellington Street, Ottawa ON K1A 0N4, Canada ISBN: 978-0-494-47050-3 NOTICE: The author has granted a non-exclusive license allowing Library and Archives Canada to reproduce, publish, archive, preserve, conserve, communicate to the public by telecommunication or on the Internet, loan, distribute and sell theses worldwide, for commercial or non-commercial purposes, in microform, paper, electronic and/or any other formats. The author retains copyright ownership and moral rights in this thesis. Neither the thesis nor substantial extracts from it may be printed or otherwise reproduced without the author's permission. -

Small Soft Core up Inventory ©2019 James Brakefield Opencore and Other Soft Core Processors Reverse-U16 A.T

tool pip _uP_all_soft opencores or style / data inst repor com LUTs blk F tool MIPS clks/ KIPS ven src #src fltg max max byte adr # start last secondary web status author FPGA top file chai e note worthy comments doc SOC date LUT? # inst # folder prmary link clone size size ter ents ALUT mults ram max ver /inst inst /LUT dor code files pt Hav'd dat inst adrs mod reg year revis link n len Small soft core uP Inventory ©2019 James Brakefield Opencore and other soft core processors reverse-u16 https://github.com/programmerby/ReVerSE-U16stable A.T. Z80 8 8 cylcone-4 James Brakefield11224 4 60 ## 14.7 0.33 4.0 X Y vhdl 29 zxpoly Y yes N N 64K 64K Y 2015 SOC project using T80, HDMI generatorretro Z80 based on T80 by Daniel Wallner copyblaze https://opencores.org/project,copyblazestable Abdallah ElIbrahimi picoBlaze 8 18 kintex-7-3 James Brakefieldmissing block622 ROM6 217 ## 14.7 0.33 2.0 57.5 IX vhdl 16 cp_copyblazeY asm N 256 2K Y 2011 2016 wishbone extras sap https://opencores.org/project,sapstable Ahmed Shahein accum 8 8 kintex-7-3 James Brakefieldno LUT RAM48 or block6 RAM 200 ## 14.7 0.10 4.0 104.2 X vhdl 15 mp_struct N 16 16 Y 5 2012 2017 https://shirishkoirala.blogspot.com/2017/01/sap-1simple-as-possible-1-computer.htmlSimple as Possible Computer from Malvinohttps://www.youtube.com/watch?v=prpyEFxZCMw & Brown "Digital computer electronics" blue https://opencores.org/project,bluestable Al Williams accum 16 16 spartan-3-5 James Brakefieldremoved clock1025 constraint4 63 ## 14.7 0.67 1.0 41.1 X verilog 16 topbox web N 4K 4K N 16 2 2009 -

Design of the RISC-V Instruction Set Architecture

Design of the RISC-V Instruction Set Architecture Andrew Waterman Electrical Engineering and Computer Sciences University of California at Berkeley Technical Report No. UCB/EECS-2016-1 http://www.eecs.berkeley.edu/Pubs/TechRpts/2016/EECS-2016-1.html January 3, 2016 Copyright © 2016, by the author(s). All rights reserved. Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission. Design of the RISC-V Instruction Set Architecture by Andrew Shell Waterman A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Computer Science in the Graduate Division of the University of California, Berkeley Committee in charge: Professor David Patterson, Chair Professor Krste Asanović Associate Professor Per-Olof Persson Spring 2016 Design of the RISC-V Instruction Set Architecture Copyright 2016 by Andrew Shell Waterman Abstract Design of the RISC-V Instruction Set Architecture by Andrew Shell Waterman Doctor of Philosophy in Computer Science University of California, Berkeley Professor David Patterson, Chair The hardware-software interface, embodied in the instruction set architecture (ISA), is arguably the most important interface in a computer system. Yet, in contrast to nearly all other interfaces in a modern computer system, all commercially popular ISAs are proprietary. -

A VHDL Model of a Superscalar Implementation of the DLX Instruction Set Architcture

Rochester Institute of Technology RIT Scholar Works Theses 10-1-1996 A VHDL model of a superscalar implementation of the DLX instruction set architcture Paul Ferno Follow this and additional works at: https://scholarworks.rit.edu/theses Recommended Citation Ferno, Paul, "A VHDL model of a superscalar implementation of the DLX instruction set architcture" (1996). Thesis. Rochester Institute of Technology. Accessed from This Thesis is brought to you for free and open access by RIT Scholar Works. It has been accepted for inclusion in Theses by an authorized administrator of RIT Scholar Works. For more information, please contact [email protected]. A VHDL MODEL OF A SUPERSCALAR IMPLEMENTATION OF THE DLX INSTRUCTION SET ARCHITECTURE by Paul A. Ferno A thesis submitted in partial fulfillment of the requirements for the degree of Masters of Science in Computer Engineering Department of Computer Engineering College of Engineering Rochester Institute of Technology Rochester, New York October, 1996 Approved by: _ Dr. Kevin Shank, Assistant Professor Dr. Tony Chang, Professor Dr. Roy Czernikowski, Dept. Head and Professor THESIS RELEASE PERMISSION FORM ROCHESTER INSTITUTE OF TECHNOLOGY COLLEGE OF ENGINEERING Title: A VHDL Model of a Superscalar Implementation of the DLX Instruction Set Architecture I, Paul A. Ferno, hereby grant permission to the Wallace Memorial Library to reproduce my thesis in whole or part. Signature: _ Date: _ Abstract The complexity of today's microprocessors demands that designers have an extensive knowledge of superscalar design techniques; this knowledge is difficult to acquire outside of a professional design team. Presently, there are a limited number of adequate resources available for the student, both in textual and model form. -

Open-Source 32-Bit RISC Soft-Core Processors

IOSR Journal of VLSI and Signal Processing (IOSR-JVSP) Volume 2, Issue 4 (May. – Jun. 2013), PP 43-46 e-ISSN: 2319 – 4200, p-ISSN No. : 2319 – 4197 www.iosrjournals.org Open-Source 32-Bit RISC Soft-Core Processors Rahul R.Balwaik, Shailja R.Nayak, Prof. Amutha Jeyakumar Department of Electrical Engineering, VJTI, Mumbai-19, INDIA Abstract: A soft-core processor built using a Field-Programmable Gate Array (FPGA)’s general-purpose logic represents an embedded processor commonly used for implementation. In a large number of applications, soft-core processors play a vital role due to their ease of use. Soft-core processors are more advantageous than their hard-core counterparts due to their reduced cost, flexibility, platform independence and greater immunity to obsolescence. This paper presents a survey of a considerable number of soft-core processors available from the open-source communities. Some real-world applications of these soft-core processors are also discussed, followed by a comparison of several of their features and characteristics. The increasing popularity of these soft-core processors will inevitably lead to more widespread usage in embedded system design. This is due to the number of significant advantages that soft-core processors hold over their hard-core counterparts. Keywords: Field-Programmable Gate Array (FPGA), Application-Specific Integrated Circuit (ASIC), open-source, soft-core processors. I. INTRODUCTION Field-Programmable Gate Arrays (FPGAs) have grown in capacity and performance, and are now one of the main implementation fabrics for designs, particularly where the products do not demand custom integrated circuits. In the recent past, due to the increased capacity and falling cost of FPGAs, relatively fast and high-density devices have become available to the general public. -

Computer Architecture Instruction Set Architecture

Computer Architecture Instruction Set Architecture Virendra Singh Associate Professor Computer Architecture and Dependable Systems Lab Department of Electrical Engineering Indian Institute of Technology Bombay http://www.ee.iitb.ac.in/~viren/ E-mail: [email protected] CS-683: Advanced Computer Architecture Lecture 2 (26 July 2013) CADSL What Are the Components of an ISA? • Sometimes known as the Programmer’s Model of the machine • Storage cells - General and special purpose registers in the CPU - Many general purpose cells of same size in memory - Storage associated with I/O devices • The machine instruction set - The instruction set is the entire repertoire of machine operations - Makes use of storage cells, formats, and results of the fetch/execute cycle - i.e., register transfers 26 July 2013 CS683@IITB 2 What Are the Components of an ISA? • The instruction format - Size and meaning of fields within the instruction • The nature of the fetch-execute cycle - Things that are done before the operation code is known Instruction • C Statement f = (g+h) - (i+j) - Assembly instructions add t0, g, h add t1, i, j sub f, t0, t1 • Opcode/mnemonic, operand, source/destination Why not Bigger Instructions? • Why not “f = (g+h) - (i+j)” as one instruction? • Church’s thesis: A very primitive computer can compute anything that a fancy computer can compute – you need only logical functions, read and write to memory, and data dependent decisions • Therefore, ISA selection is for practical reasons – Performance and cost not computability • Regularity tends to improve both – E.g., H/W to handle arbitrary number of operands is complex and slow, and UNNECESSARY What Must an Instruction Specify? (I) Data Flow • Which operation to perform add r0, r1, r3 – Ans: Op code: add, load, branch, etc. -

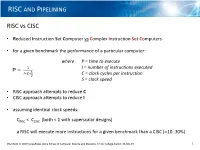

RISC + Pipelines.Pdf

RISC AND PIPELINING RISC vs CISC • Reduced Instruction Set Computer vs Complex Instruction Set Computers • for a given benchmark the performance of a particular computer: $P = \frac{1}{I \cdot C \cdot \frac{1}{S}}$ where P = performance (the reciprocal of the time to execute), I = number of instructions executed, C = clock cycles per instruction, S = clock speed • RISC approach attempts to reduce C • CISC approach attempts to reduce I • assuming identical clock speeds: $C_{RISC} < C_{CISC}$ [both < 1 with superscalar designs] a RISC will execute more instructions for a given benchmark than a CISC [10..30%] CSU34021 © 2019 [email protected] School of Computer Science and Statistics, Trinity College Dublin 31-Oct-19 1 RISC-I • history • RISC-1 designed by MSc students under the direction of David Patterson and Carlo H. Séquin at UC Berkeley • released in 1982 • first RISC now accepted to be the IBM 801 [1980], but design not made public at the time • John Cocke later won both the Turing award and the Presidential Medal of Science for his work on the 801 • RISC-1 similar to SPARC [Sun, Oracle] and DLX/MIPS [discussing its pipeline later] • http://www.eecs.berkeley.edu/Pubs/TechRpts/1982/CSD-82-106.pdf RISC-I Design Criteria For an effective single chip solution artificially placed the following design constraints: • execute one instruction per cycle [instructions must be simple to be executed in one clock cycle] • make all instructions the same size [simplifies instruction decoding] • access main memory with load and store instructions [load/store architecture] • ONLY one addressing mode [indexed] • limited support for high level languages [which means C and hence Unix] procedure calling, local variables, constants, … RISC-I architecture • 32 x 32 bit registers
r0 . -

A Thesis Entitled Design of a Hardware Security PUF Immune To

A Thesis Entitled Design of a Hardware Security PUF Immune to Machine Learning Attacks By Nitin K. Pundir Submitted to the Graduate Faculty as partial fulfillment of the requirements for the Master of Science Degree in Electrical Engineering ________________________________________ Dr. Mohammed Niamat, Committee Chair ________________________________________ Dr. Mansoor Alam, Committee Member ________________________________________ Dr. Hong Wang, Committee Member ________________________________________ Dr. Amanda Bryant-Friedrich, Dean College of Graduate Studies The University of Toledo December 2017 Copyright 2017, Nitin K. Pundir This document is copyrighted material. Under copyright law, no parts of this document may be reproduced without the expressed permission of the author. An Abstract of Design of a Hardware Security PUF Immune to Machine Learning Attacks By Nitin K. Pundir Submitted to the Graduate Faculty as partial fulfillment of the requirements for the Master of Science Degree in Electrical Engineering The University of Toledo December 2017 The technology and cyberspace sector is losing billions each year to hardware security threats. The incidents of usage of counterfeiting chips are doubling each year. The Electronic Resellers Association International (ERAI) reported that in the year 2011 more than 1300 counterfeits were reported. The incidents were double of what were reported in 2008. The report from Federal Contracts acknowledges the threats emanating from counterfeit chips and says it threatens the successful operations of US Weapon Systems. Meanwhile, electronic counterfeiting of chips continues to be a very profitable business on the dark web by crooked operatives. Physical Unclonable Functions (PUFs) are emerging as hardware security primitives to deal with security issues such as cloning, hacking, copying, and detection of Trojans. -

Chapter 13 the ISA of a Simplified

Chapter 13 The ISA of a simplified DLX In this chapter we describe a specification of a simple microprocessor called the simplified DLX. The specification is called an instruction set architecture (ISA). The ISA consists of objects and a machine language which is simply a list of instructions. The ISA describes the set of objects (memory and registers) and how they are manipulated by the machine language instructions. 13.1 Why use abstractions? The term architecture according to the Collins Dictionary means the art of planning, designing, and constructing buildings. Computer architecture refers to computers rather than buildings. Computers are rather complicated; even a very simple microprocessor is built from tens of thousands of gates and an operating system spans thousands of lines of code. To simplify things, people focus at a given time on certain aspects of computers and ignore other aspects. For example, the hardware designer ignores questions such as: which programs will be executed by the computer? The programmer, on the other hand, often does not even know exactly which type of computer will be executing the program she is writing. It is the task of the architect to be aware of different aspects so that the designed system meets certain price and performance goals. To facilitate focusing on certain aspects, abstractions are used. Several abstractions are used in computer systems. For example, the C programmer uses the abstraction of a computer that runs C programs, owns a private memory, and has access to various peripheral devices (such as a printer, a monitor, a keyboard, etc.). Supporting this abstraction requires software tools (e.g., editor, compiler, linker, loader, debugger). -

Is Hardware More Secure Than Software?

Is Hardware More Secure than Software? Lianying Zhao Carleton University David Lie University of Toronto Abstract—Computer hardware is usually perceived as more secure than software. However, recent trends lead us to reexamine this belief. We draw attention to the “firmwarization” of hardware and argue for revisiting the role of hardware and software in systems security. TRADITIONALLY, computer systems can be thought of as composed of two types of components: hardware and software. Hardware refers to the physical components that perform a fixed set of operations. Software, on the other hand, defines logical components, instantiated as data and instructions, which can specify arbitrary sequences of hardware operations, as well as inputs to those operations. A function can be implemented using various mixes of hardware and software components (or even entirely in hardware). The mix of hardware and software can have implications on certain properties of the function implementation (e.g., performance or security). Hardware Security. ... quick search on Google Scholar returns at least 1,750 academic papers with the title containing both “hardware” and “security” as of the time of writing. While this is a conservative under-approximation of the actual number of such papers (as it only checks the title), and regardless of whether these papers propose a larger role of hardware in security, or seek to examine the vulnerability of hardware to security attacks, the sheer number informally illustrates that the security community has a strong interest in the relationship between computer hardware and security. Another recent trend is the implementation of hardware mechanisms to address well-known