Diversity, Value and Limitations of the Journal Impact Factor and Alternative Metrics

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

On the Relation Between the WoS Impact Factor, the Eigenfactor, the SCImago Journal Rank, the Article Influence Score and the Journal h-Index

Ronald ROUSSEAU (KHBO, Dept. Industrial Sciences and Technology, Oostende, Belgium) and the STIMULATE 8 GROUP (Vrije Universiteit Brussel (VUB), Brussels, Belgium). The STIMULATE 8 Group consists of: Anne Sylvia ACHOM (Uganda), Helen Hagos BERHE (Ethiopia), Sangeeta Namdev DHAMDHERE (India), Alicia ESGUERRA (The Philippines), Nguyen Thi Ngoc HOAN (Vietnam), John KIYAGA (Uganda), Sheldon Miti MAPONGA (Zimbabwe), Yohannis MARTÍ-LAHERA (Cuba), Kelefa Tende MWANTIMWA (Tanzania), Marlon G. OMPOC (The Philippines), A.I.M. Jakaria RAHMAN (Bangladesh), Bahiru Shifaw YIMER (Ethiopia).

Abstract: Four alternatives to the journal Impact Factor (IF) indicator are compared to find out their similarities. Together with the IF, the SCImago Journal Rank indicator (SJR), the Eigenfactor™ score, the Article Influence™ score and the journal h-index of 77 journals from more than ten fields were collected. Results show that although those indicators are calculated with different methods and even use different databases, they are strongly correlated with the WoS IF and among each other. These findings corroborate results published by several colleagues and show the feasibility of using free alternatives to the Web of Science for evaluating scientific journals.

Keywords: WoS impact factor, Eigenfactor, SCImago Journal Rank (SJR), Article Influence Score, journal h-index, correlations

Introduction: STIMULATE stands for Scientific and Technological Information Management in Universities and Libraries: an Active Training Environment. It is an international training programme in information management, supported by the Flemish Interuniversity Council (VLIR), aiming at young scientists and professionals from developing countries.

QUALIS: The Journal Ranking System Undermining the Impact of Brazilian Science

bioRxiv preprint doi: https://doi.org/10.1101/2020.07.05.188425; this version posted July 6, 2020. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted bioRxiv a license to display the preprint in perpetuity. It is made available under a CC-BY-NC-ND 4.0 International license.

Rodolfo Jaffé, Instituto Tecnológico Vale, Belém-PA, Brazil.

Abstract: A journal ranking system called QUALIS was implemented in Brazil in 2009, intended to rank graduate programs from different subject areas and promote selected national journals. Since this system uses a complicated suite of criteria (differing among subject areas) to group journals into discrete categories, it could potentially create incentives to publish in low-impact journals ranked highly by QUALIS. Here I assess the influence of the QUALIS journal ranking system on the global impact of Brazilian science. Results reveal a steeper decrease in the number of citations per document since the implementation of this QUALIS system, compared to the top Latin American countries publishing more scientific articles. All the subject areas making up the QUALIS system showed some degree of bias, with social sciences being usually more biased than natural sciences. Lastly, the decrease in the number of citations over time proved steeper in a more biased area, suggesting a faster shift towards low-impact journals ranked highly by QUALIS.

Imad A. Moosa, Publish or Perish, 9781786434937. Downloaded from Elgar Online at 09/30/2021 02:19:42PM via free access.

Abbreviations and acronyms: AAAS: American Association for the Advancement of Science; ABDC: Australian Business Deans Council; ABS: Chartered Association of Business Schools; AER: American Economic Review; AERES: Agence d’Evaluation de la Recherche et de l’Enseignement Supérieur; AIDS: Acquired Immune Deficiency Syndrome; APA: American Psychology Association; ARC: Australian Research Council; ARCH: autoregressive conditional heteroscedasticity; AWCR: age-weighted citation rate; BARDsNET: Business Academic Research Directors’ Network; BIS: Bank for International Settlements; BMOP: bring money or perish; CEO: Chief Executive Officer; CIP: covered interest parity; CSSE: Computer Science and Software Engineering Conference; CTV: Canadian Television Network; CV: curriculum vitae; CWPA: Council of Writing Program Administrators; DOAJ: Directory of Open Access Journals; ECR: early-career researcher; EJ: Economic Journal; EL: Economics Letters; EMH: efficient market hypothesis; ERA: Excellence in Research for Australia; ESF: European Science Foundation; FNEGE: Foundation National pour l’Enseignement de la Gestion des Entreprises; FoR: field of research; GTS: Good Teaching Scale; HASS: humanities, arts and social sciences; HEC: Hautes Etudes Commerciales; HEFCE: Higher Education Funding Council for England; HP: Hodrick-Prescott; IEEE: Institute of Electrical and Electronic Engineers; IF: impact factor; IJED: International Journal of Exotic Dancing; IMF: International …

New Perspectives: Welcome to the First Research Trends Magazine, Which Accompanies Our 15th Issue of Research Trends Online

Research Trends magazine. New perspectives.

Welcome to the first Research Trends magazine, which accompanies our 15th issue of Research Trends online. Research Trends 15 is a special issue devoted to the role of bibliometric indicators in journal evaluation and research assessment.

Over the past 40 years, academic evaluation has changed radically, in both its objectives and methods. Bibliometrics has grown to meet emerging challenges, and new metrics are frequently introduced to measure different aspects of academic performance and improve upon earlier, pioneering work. It is becoming increasingly apparent that assessment and benchmarking are here to stay, and bibliometrics are an intrinsic aspect of today’s evaluation landscape, whether of journals, researchers or institutes.

This is not uncontroversial, and we have featured many critics of bibliometric analysis in general and of specific tools or their applications in past issues (please visit www.researchtrends.com). The bibliometric community acknowledges these shortcomings and is tirelessly campaigning for better understanding and careful use of the metrics they produce. Therefore, in this issue, we speak to three of the world’s leading researchers in this area, who all agree that bibliometric indicators should always be used with expertise and care, and that they should never completely supersede more traditional methods of evaluation, such as peer review. In fact, they all advocate a wider choice of more carefully calibrated metrics as the only way to ensure fair assessment.

If you would like to read any of our back issues or subscribe to our bi-monthly e-newsletter, please visit www.researchtrends.com

Kind regards, The Research Trends Editorial Board

Research Trends is a bi-monthly online newsletter providing objective, up-to-the-minute insights into scientific trends based on bibliometric analysis.

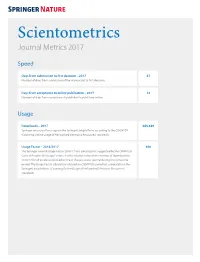

Scientometrics Journal Metrics 2017

Speed

Days from submission to first decision (2017): 47. Number of days from submission of the manuscript to first decision.

Days from acceptance to online publication (2017): 13. Number of days from acceptance at publisher to published online.

Usage

Downloads (2017): 688,649. Springer measures the usage on the SpringerLink platform according to the COUNTER (Counting Online Usage of NeTworked Electronic Resources) standards.

Usage Factor (2016/2017): 406. The Springer Journal Usage Factor 2016/17 was calculated as suggested by the COUNTER Code of Practice for Usage Factors. It is the median value of the number of downloads in 2016/17 for all articles published online in that particular journal during the same time period. The Usage Factor calculation is based on COUNTER-compliant usage data on the SpringerLink platform.

Impact

Impact Factor (2016): 2.147. Journal Impact Factors are published each summer by Thomson Reuters via Journal Citation Reports®. Impact Factors and ranking data are presented for the preceding calendar year.

5 Year Impact Factor (2016): 2.346. The 5-year journal Impact Factor is the average number of times that articles from the journal published in the past five years have been cited in the JCR year. It is calculated by dividing the number of citations in the JCR year by the total number of articles published in the five previous years.

SNIP (2016): 1.319. Source Normalized Impact per Paper (SNIP) measures contextual citation impact by weighting citations based on the total number of citations in a subject field.
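The two calculations described in this excerpt, the 5-year Impact Factor (citations in the JCR year divided by articles published in the five previous years) and the Usage Factor (median downloads per article), can be illustrated with a minimal Python sketch. The function names and all input numbers below are hypothetical and chosen only for illustration; they are not Springer's or Clarivate's actual data or code.

```python
from statistics import median


def windowed_impact_factor(citations_in_jcr_year: int, articles_in_window: int) -> float:
    """Citations received in the JCR year divided by the number of
    articles published in the preceding window (e.g. five years)."""
    if articles_in_window <= 0:
        raise ValueError("the window must contain at least one article")
    return citations_in_jcr_year / articles_in_window


def usage_factor(downloads_per_article: list[int]) -> float:
    """Median number of downloads per article, as the excerpt describes."""
    if not downloads_per_article:
        raise ValueError("at least one article is required")
    return median(downloads_per_article)


# Hypothetical inputs: 2,346 citations to 1,000 articles from the five previous years.
five_year_if = windowed_impact_factor(2346, 1000)

# Hypothetical per-article download counts for one journal.
uf = usage_factor([120, 406, 950, 80, 512])
```

Because the Usage Factor is a median, a single heavily downloaded article (950 in the hypothetical list) does not move it, whereas the Impact Factor is a mean and is sensitive to such outliers.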

SCImago, Eigenfactor Score, and H5 Index Journal Rank Indicator: A Study of Journals in the Area of Construction and Building Technologies

DESIDOC Journal of Library & Information Technology, Vol. 38, No. 4, July 2018, pp. 278-285, DOI: 10.14429/djlit.38.4.11503. 2018, DESIDOC.

Shakil Ahmad#, Md. Sohail$,*, Abu Waris#, Amir Elginaid%, and Isam Mohammed Abdel-Magid#. #Imam Abdulrahman bin Faisal University, Dammam, Kingdom of Saudi Arabia; $Fiji National University, Nasinu, Fiji Island; %Dar AlHandasah Consultants, Kingdom of Saudi Arabia.

ABSTRACT: The present work studies the role of bibliometric indicators and scientometric instruments for checking the quality of journals published in the area of construction and building technology. The journal quality review targeted comparisons between indicators of significance and merit from reputable databases. Likewise, the present work stressed the performance of well-cited journals and their informational correlations as predicted by the selected indicators' complex algorithms. The main research objective focused on a review of scientific journal quality indices that included: Journal Impact Factor (JIF), Eigenfactor Score (ES), SCImago Journal Rank indicator (SJR) and H5 index. Dependable construction and building technology journals were chosen from their category within Web of Science. JIFs and ESs were obtained from Journal Citation Reports and the SJR from the SCImago Journal and Country Rank website. Sixty-one construction and building technology journals were selected for this work, and their related data records and information documents were retrieved from their primary sites for the designated quality indicators (JIF, SJR, ES and H5). Correlations between indicators were elucidated by means of Pearson’s and Spearman’s statistical correlations produced by SPSS software.
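The excerpt reports Pearson's and Spearman's correlations computed in SPSS. As a rough illustration of what those two statistics measure, here is a minimal pure-Python sketch; it is not the authors' procedure, the function names are hypothetical, and the sample values are invented. Spearman's coefficient is computed as Pearson's coefficient applied to average ranks, which is the standard treatment of ties.

```python
from math import sqrt


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation of two equal-length samples."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("samples must have equal length of at least 2")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def average_ranks(values: list[float]) -> list[float]:
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def spearman(x: list[float], y: list[float]) -> float:
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Invented indicator values for four journals (not data from the study).
jif = [1.0, 2.0, 3.0, 4.0]
sjr = [1.0, 4.0, 9.0, 16.0]
```

With these invented values the two indicators order the journals identically, so `spearman(jif, sjr)` is 1 while `pearson(jif, sjr)` is slightly lower because the relationship is monotone but not linear. That distinction is one reason studies of journal indicators often report both coefficients.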

Journal Metric Indicators in More Detail

Contents: Introduction; Journal metric indicators in more detail; SNIP (Source Normalised Impact per Paper) for citation potential; SNIP – identifying titles in top journal percentiles; SNIP – identifying subject/disciplinary rankings; SJR (Scimago Journal and Country Rank) for citation potential; SJR – identifying subject/disciplinary rankings and quartiles; Journal Citation Reports (JCR): Journal Impact Factor (IF); Where to find Journal Impact Factors?; JCR – identifying subject rankings and journal quartiles; JCR – Immediacy Index; JCR – other key metric indicators.

Journal Metric Indicators. Version 1.0 VB

Introduction: Historically, journal citation metrics acted as an indicator of the overall influence of a journal and how it performed against other titles in the wider publishing world. Although functioning as a loose proxy for journal quality, these metrics do not account for variant citation behaviour in different disciplines. The most well-known of these traditional journal

A Review of Journal Citation Indicators and Ranking in Business and Management

Evaluating Journal Quality: A Review of Journal Citation Indicators and Ranking in Business and Management

John Mingers (corresponding author), Kent Business School, University of Kent, Canterbury CT7 2PE, UK, 01227 824008. Liying Yang, National Science Library, Chinese Academy of Sciences, 33 Beisihuan Xilu, Beijing 100190, China.

Abstract: Evaluating the quality of academic journals is becoming increasingly important within the context of research performance evaluation. Traditionally, journals have been ranked by peer review lists such as that of the Association of Business Schools (UK) or through their journal impact factor (JIF). However, several new indicators have been developed, such as the h-index, SJR, SNIP and the Eigenfactor, which take into account different factors and therefore have their own particular biases. In this paper we evaluate these metrics both theoretically and also through an empirical study of a large set of business and management journals. We show that even though the indicators appear highly correlated, in fact they lead to large differences in journal rankings. We contextualise our results in terms of the UK’s large-scale research assessment exercise (the RAE/REF) and particularly the ABS journal ranking list. We conclude that no one indicator is superior but that the h-index (which includes the productivity of a journal) and SNIP (which aims to normalize for field effects) may be the most effective at the moment.

Keywords: OR in scientometrics, ABS journal list, Eigenfactor, h-index, impact factor, journal indicators, journal ranking, normalisation, REF, SJR, SNIP

1. INTRODUCTION: The evaluation of research performance, whether at the level of individuals, departments, research groups or whole universities, is becoming ever more important, and the results of exercises such as the UK’s Research Excellence Framework (REF) have major consequences in terms of funding and individual academics’ careers.
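The abstract notes that the h-index "includes the productivity of a journal". The excerpt does not define the index, so the sketch below assumes the standard Hirsch definition: the largest h such that h of the items have at least h citations each. The function name and sample counts are hypothetical.

```python
def h_index(citation_counts: list[int]) -> int:
    """Largest h such that at least h items have h or more citations each
    (standard Hirsch definition, assumed here; not taken from the excerpt)."""
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for rank, citations in enumerate(ranked, start=1):
        if citations >= rank:
            h = rank
        else:
            break
    return h


# Invented citation counts for the papers of two journals.
small_journal = [25, 8, 5, 3, 3]
large_journal = [10, 8, 5, 4, 3, 3, 2, 1]
```

Under this definition a journal cannot reach an h-index higher than the number of papers it has published, which is one way productivity enters the indicator.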

Citation Based Comparative Analysis of Library Hi-Tech and Library Quarterly Journals Using SCImago Journal Rank

University of Nebraska - Lincoln, DigitalCommons@University of Nebraska - Lincoln. Library Philosophy and Practice (e-journal), Libraries at University of Nebraska-Lincoln, January 2020.

G, Stephen, "CITATION BASED COMPARATIVE ANALYSIS OF LIBRARY HI-TECH AND LIBRARY QUARTERLY JOURNALS USING SCIMAGO JOURNAL RANK" (2020). Library Philosophy and Practice (e-journal). 3692. https://digitalcommons.unl.edu/libphilprac/3692

Dr. G. Stephen, Assistant Librarian, NIELIT – Itanagar Centre, Arunachal Pradesh.

Abstract: Journal rankings are widely used in academic circles in evaluating the impact and quality of academic journals. The purpose of a journal ranking is to reflect the location of a journal within its field, the relative difficulty of being published in that journal, and the reputation associated with it. SCImago Journal Rank is a measure of the scientific impact of scholarly journals that accounts for both the number of citations received by a journal and the importance or reputation of the journals from which such citations come. This paper used citation-based analysis with the SCImago Journal Rank to compare the Library Quarterly and Library Hi-Tech journals, covering publications from 1999 onwards in the field of library and information science. The study reports the 2018 SJR ranking, h-index and best quartile for each journal: Library Hi-Tech had an SJR of 0.75, an h-index of 33 and a best quartile of Q1, while Library Quarterly had an SJR of 0.73, an h-index of 34 and a best quartile of Q1.

Research Evaluation Metrics

Gali Halevi, MLS, PhD. Chief Director – Mount Sinai Health System Libraries. Assistant Professor – Department of Medicine.

▶ Impact Factor (IF) = “a measure of the frequency with which an ‘average article’ in a journal has been cited in a particular year or period” (wokinfo.com/essays/impact-factor/)

$$\text{2005 IF of a journal} = \frac{\text{2005 cites to articles published in 2003-04}}{\text{number of articles published in 2003-04}}$$

Impact factor: In the early 1960s Irving H. Sher and Eugene Garfield created the journal impact factor to help select journals for the Science Citation Index… [Garfield] expected that “it would be used constructively while recognizing that in the wrong hands it might be abused”.

The problem(s) with the Impact Factor
▶ The distribution of citations is highly skewed
▶ Thomson Reuters calculates the Impact Factor
– Coverage has limitations
– Prone to errors
▶ Impact Factor was never meant to be used as a quality measurement for researchers.

And lately in the news…

Publish or Perish – 74 years later
▶ Tenure, promotions and funding are still highly influenced by:
– Number of publications
– Publishing in high impact journals
– Number of citations
▶ Decades of research have shown that these measures are highly flawed, mainly because:
– Databases are selective
– They do not accurately capture interdisciplinary research and science that becomes more specialized

Is there anything else out there?

SJR: SCImago Journal Rank Indicator. SCImago Journal Rank (SJR) is a prestige metric based on the idea that 'all citations are not created equal'. SJR is a measure of scientific influence of scholarly journals. It accounts for both the number of citations received by a journal and the importance or prestige of the journals where such citations come from.
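The slides list a highly skewed citation distribution as a problem with the Impact Factor. A small sketch with invented citation counts shows why: the Impact Factor is a mean, and a single heavily cited article can pull it far above what a typical article receives. The function name and the data are hypothetical.

```python
from statistics import mean, median


def skew_summary(citations: list[int]) -> dict[str, float]:
    """Compare the mean (what an Impact Factor-style average reports)
    with the median and the share of articles cited less than the mean."""
    avg = mean(citations)
    below = sum(1 for c in citations if c < avg)
    return {
        "mean": avg,
        "median": median(citations),
        "share_below_mean": below / len(citations),
    }


# Invented counts: nine modestly cited articles and one outlier.
citations = [0, 0, 1, 1, 2, 2, 3, 4, 7, 180]
summary = skew_summary(citations)
```

In this invented sample the mean is 20 citations per article while the median is 2, and nine of the ten articles sit below the mean, so the journal-level average says little about any individual article or its author.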

Research Metrics Quick Reference

Research metrics quick reference

Metrics illuminate the impact of your research outputs. Promotion and tenure committees, funders, advisors, research team leaders and potential collaborators are all interested in information about impact. But where to start? Your library can advise you on metrics that can help you to: decide where to publish; update your online profile; enrich a promotion & tenure portfolio; apply/report to funders; benchmark a collection of research outputs (for team leaders).

Metrics listed across these goals: CiteScore; SJR: SCImago Journal Rank; SNIP: Source Normalized Impact per Paper; Journal Impact Factor; h-index; Percentile benchmark; Usage; Captures; Mentions; Social media; Citations; Journal metrics (e.g., CiteScore); Field-Weighted Citation Impact; h-index (if in the same field); Field-Weighted Download Impact.

Legend: Document*, Author, Journal, PlumX metrics.

Citation count: # of citations accrued since publication. A simple measure of attention for an article, journal or researcher. As with all citation-based measures, it is important to be aware of citation practices. Citation counts can include measures of societal impact, such as patent, policy and clinical citations.

Document count: # of items published by an individual or group of individuals. A researcher using document count should also provide a list of document titles with links. If authors use an ORCID iD (a persistent scholarly identifier), they can draw on numerous sources for document count including Scopus, ResearcherID, …

Field-Weighted Citation Impact (FWCI): # of citations received by a document divided by the expected # of citations for similar documents. Similar documents are ones in the same discipline, of the same type (e.g., article, letter, review) and of the same age. An FWCI of 1 means that the output performs just as expected against the …
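The Field-Weighted Citation Impact described in this excerpt is a ratio of actual to expected citations. The sketch below illustrates that ratio under one stated assumption: the expected count is taken to be the mean citation count of a set of similar documents (same discipline, type and age). The excerpt does not say how the expected value is computed, and the function names and numbers are hypothetical.

```python
from statistics import mean


def expected_citations(similar_document_citations: list[int]) -> float:
    """Assumed baseline: the average citation count of similar documents."""
    if not similar_document_citations:
        raise ValueError("a comparison set of similar documents is required")
    return mean(similar_document_citations)


def fwci(citations_received: int, expected: float) -> float:
    """Citations received by a document divided by the expected citations."""
    if expected <= 0:
        raise ValueError("expected citations must be positive")
    return citations_received / expected


# Invented comparison set: three similar articles cited 4, 8 and 12 times.
baseline = expected_citations([4, 8, 12])
score = fwci(12, baseline)
```

Here the hypothetical document has 12 citations against an expected 8, giving a ratio of 1.5, which reads as 50% more citations than expected for similar documents; a ratio of 1 would match the expectation, as the excerpt states.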

A Scientometric and Economic Evaluation

Open Access Original Article. DOI: 10.7759/cureus.12069

Publishing in Hematology Journals: A Scientometric and Economic Evaluation

Gokhan Tazegul 1, Unal Atas 2, Turgay Ulas 3, Tayfur Toptas 4, Ozan Salim 2. 1. Internal Medicine, Ankara Polatlı Duatepe State Hospital, Ankara, TUR; 2. Hematology, Akdeniz University, Antalya, TUR; 3. Hematology, Near East University, Nicosia, CYP; 4. Hematology, Marmara University, Istanbul, TUR. Corresponding author: Gokhan Tazegul.

Abstract

Introduction: Herein, we aimed to compare the scientometric data of hematology journals, and compare the publication models, especially the scientometric data of journals with all-open access (OA) and hybrid-OA publication models.

Methods: Data were obtained from Scimago Journal & Country Rank and Clarivate Analytics InCites websites. Fifty-four journals indexed in Science Citation Index (SCI) and SCI-Expanded were evaluated. Bibliometric data and impact factor (IF), scientific journal rank (SJR), eigenfactor score (ES), and Hirsch (h)-index of the journals were obtained. United States dollar (USD) was used as the requested article publishing charge (APC). Statistics Package for the Social Sciences (SPSS, IBM Corp., Armonk, NY) version 23.0 was used for data analysis.

Results: As a publication model, hybrid-OA was the most common. One journal had subscription-only, and two journals had a free-OA model. Nine journals had a mandatory OA with the APC model and 42 journals used a hybrid model. The median OA fee was 3400 USD. Hybrid-OA journals had a significantly higher median h-index (72 vs. 40, p=0.03) compared to all-OA journals. Other scientometric indexes were similar. When APCs were compared, all-OA journals were median 900 USD lower than hybrid-OA journals (2490 vs.