A Review of Journal Citation Indicators and Ranking in Business and Management

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Journal Rankings Paper

NBER WORKING PAPER SERIES NEOPHILIA RANKING OF SCIENTIFIC JOURNALS Mikko Packalen Jay Bhattacharya Working Paper 21579 http://www.nber.org/papers/w21579 NATIONAL BUREAU OF ECONOMIC RESEARCH 1050 Massachusetts Avenue Cambridge, MA 02138 September 2015 We thank Bruce Weinberg, Vetla Torvik, Neil Smalheiser, Partha Bhattacharyya, Walter Schaeffer, Katy Borner, Robert Kaestner, Donna Ginther, Joel Blit and Joseph De Juan for comments. We also thank seminar participants at the University of Illinois at Chicago Institute of Government and Public Affairs, at the Research in Progress Seminar at Stanford Medical School, and at the National Bureau of Economic Research working group on Invention in an Aging Society for helpful feedback. Finally, we thank the National Institute of Aging for funding for this research through grant P01-AG039347. We are solely responsible for the content and errors in the paper. The views expressed herein are those of the authors and do not necessarily reflect the views of the National Bureau of Economic Research. NBER working papers are circulated for discussion and comment purposes. They have not been peer-reviewed or been subject to the review by the NBER Board of Directors that accompanies official NBER publications. © 2015 by Mikko Packalen and Jay Bhattacharya. All rights reserved. Short sections of text, not to exceed two paragraphs, may be quoted without explicit permission provided that full credit, including © notice, is given to the source. Neophilia Ranking of Scientific Journals Mikko Packalen and Jay Bhattacharya NBER Working Paper No. 21579 September 2015 JEL No. I1,O3,O31 ABSTRACT The ranking of scientific journals is important because of the signal it sends to scientists about what is considered most vital for scientific progress. -

On the Relation Between the Wos Impact Factor, the Eigenfactor, the Scimago Journal Rank, the Article Influence Score and the Journal H-Index

On the relation between the WoS impact factor, the Eigenfactor, the SCImago Journal Rank, the Article Influence Score and the journal h-index. Ronald ROUSSEAU (1) and the STIMULATE 8 GROUP (2). (1) KHBO, Dept. Industrial Sciences and Technology, Oostende, Belgium [email protected] (2) Vrije Universiteit Brussel (VUB), Brussels, Belgium. The STIMULATE 8 Group consists of: Anne Sylvia ACHOM (Uganda), Helen Hagos BERHE (Ethiopia), Sangeeta Namdev DHAMDHERE (India), Alicia ESGUERRA (The Philippines), Nguyen Thi Ngoc HOAN (Vietnam), John KIYAGA (Uganda), Sheldon Miti MAPONGA (Zimbabwe), Yohannis MARTÍ-LAHERA (Cuba), Kelefa Tende MWANTIMWA (Tanzania), Marlon G. OMPOC (The Philippines), A.I.M. Jakaria RAHMAN (Bangladesh), Bahiru Shifaw YIMER (Ethiopia). Abstract Four alternatives to the journal Impact Factor (IF) indicator are compared to find out their similarities. Together with the IF, the SCImago Journal Rank indicator (SJR), the Eigenfactor™ score, the Article Influence™ score and the journal h-index of 77 journals from more than ten fields were collected. Results show that although those indicators are calculated with different methods and even use different databases, they are strongly correlated with the WoS IF and among each other. These findings corroborate results published by several colleagues and show the feasibility of using free alternatives to the Web of Science for evaluating scientific journals. Keywords: WoS impact factor, Eigenfactor, SCImago Journal Rank (SJR), Article Influence Score, journal h-index, correlations Introduction STIMULATE stands for Scientific and Technological Information Management in Universities and Libraries: an Active Training Environment. It is an international training programme in information management, supported by the Flemish Interuniversity Council (VLIR), aiming at young scientists and professionals from developing countries. -

The Journal Ranking System Undermining the Impact of Brazilian Science

bioRxiv preprint doi: https://doi.org/10.1101/2020.07.05.188425; this version posted July 6, 2020. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted bioRxiv a license to display the preprint in perpetuity. It is made available under a CC-BY-NC-ND 4.0 International license. QUALIS: The journal ranking system undermining the impact of Brazilian science. Rodolfo Jaffé, Instituto Tecnológico Vale, Belém-PA, Brazil. Email: [email protected] Abstract A journal ranking system called QUALIS was implemented in Brazil in 2009, intended to rank graduate programs from different subject areas and promote selected national journals. Since this system uses a complicated suite of criteria (differing among subject areas) to group journals into discrete categories, it could potentially create incentives to publish in low-impact journals ranked highly by QUALIS. Here I assess the influence of the QUALIS journal ranking system on the global impact of Brazilian science. Results reveal a steeper decrease in the number of citations per document since the implementation of this QUALIS system, compared to the top Latin American countries publishing more scientific articles. All the subject areas making up the QUALIS system showed some degree of bias, with social sciences being usually more biased than natural sciences. Lastly, the decrease in the number of citations over time proved steeper in a more biased area, suggesting a faster shift towards low-impact journals ranked highly by QUALIS. -

Ranking Forestry Journals Using the H-Index

Ranking forestry journals using the h-index. Journal of Informetrics, in press. Jerome K Vanclay, Southern Cross University, PO Box 157, Lismore NSW 2480, Australia [email protected] Abstract An expert ranking of forestry journals was compared with journal impact factors and h-indices computed from the ISI Web of Science and internet-based data. Citations reported by Google Scholar offer an efficient way to rank all journals objectively, in a manner consistent with other indicators. This h-index exhibited a high correlation with the journal impact factor (r=0.92), but is not confined to journals selected by any particular commercial provider. A ranking of 180 forestry journals is presented, on the basis of this index. Keywords: Hirsch index, Research quality framework, Journal impact factor, journal ranking, forestry Introduction The Thomson Scientific (TS) Journal Impact Factor (JIF; Garfield, 1955) has been the dominant measure of journal impact, and is often used to rank journals and gauge relative importance, despite several recognised limitations (Hecht et al., 1998; Moed et al., 1999; van Leeuwen et al., 1999; Saha et al., 2003; Dong et al., 2005; Moed, 2005; Dellavalle et al., 2007). Other providers offer alternative journal rankings (e.g., Lim et al., 2007), but most deal with a small subset of the literature in any discipline. Hirsch’s h-index (Hirsch, 2005; van Raan, 2006; Bornmann & Daniel, 2007a) has been suggested as an alternative that is reliable, robust and easily computed (Braun et al., 2006; Chapron and Husté, 2006; Olden, 2007; Rousseau, 2007; Schubert and Glänzel, 2007; Vanclay, 2007; 2008). -
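
The excerpt calls the h-index "easily computed" without showing the computation. A minimal sketch of the standard definition follows: a journal has index h if h of its articles have at least h citations each. The function name and the citation counts are hypothetical illustrations, not data from the paper.

```python
def h_index(citations):
    """Return the largest h such that at least h items have >= h citations."""
    # Rank items from most cited to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank  # the item at this rank still has enough citations
        else:
            break     # every later item has fewer citations than its rank
    return h


# Hypothetical citation counts for the articles of one journal.
example_counts = [25, 8, 5, 3, 3, 1, 0]
print(h_index(example_counts))
```

Because only the ranked list of counts is needed, the same function works with citation counts from any source, which is in line with the excerpt's point that the index is not tied to a particular provider.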

Australian Business Deans Council 2019 Journal Quality List Review Final Report 6 December 2019

Australian Business Deans Council 2019 Journal Quality List Review Final Report 6 December 2019

About the Australian Business Deans Council: The Australian Business Deans Council (ABDC) is the peak body of Australian university business schools. Our 38 members graduate one-third of all Australian university students and more than half of the nation’s international tertiary students. ABDC’s mission is to make Australian business schools better, and to foster the national and global impact of Australian business education and research. ABDC does this by:
• Being the collective and collegial voice of university business schools
• Providing opportunities for members to share knowledge and best practice
• Creating and maintaining strong, collaborative relationships with affiliated national and international peak industry, higher education, professional and government bodies
• Engaging in strategic initiatives and activities that further ABDC’s mission.

Australian Business Deans Council Inc. UNSW Business School, Deans Unit, Level 6, West Lobby, College Road, Kensington, NSW, Australia 2052 T: +61 (0)2 6162 2970 E: [email protected]

Table of Contents: Acknowledgements; Background and Context; Method and Approach; Outcomes; Beyond the 2019 Review; Appendix 1 – Individual Panel Reports (Information Systems; Economics; Accounting; Finance including Actuarial Studies; Management, Commercial Services and Transport and Logistics (and Other, covering 1599); Marketing and Tourism; Business and Taxation Law); Appendix 2 – Terms -

Understanding Research Metrics INTRODUCTION Discover How to Monitor Your Journal’s Performance Through a Range of Research Metrics

Understanding research metrics

Introduction: Discover how to monitor your journal’s performance through a range of research metrics. This page will take you through the “basket of metrics”, from Impact Factor to usage, and help you identify the right research metrics for your journal.

What are research metrics? Research metrics are the fundamental tools used across the publishing industry to measure performance, both at journal- and author-level. For a long time, the only tool for assessing journal performance was the Impact Factor – more on that in a moment. Now there are a range of different research metrics available. This “basket of metrics” is growing every day, from the traditional Impact Factor to Altmetrics, h-index, and beyond. But what do they all mean? How is each metric calculated? Which research metrics are the most relevant to your journal? And how can you use these tools to monitor your journal’s performance? For a quick overview, download our simple guide to research metrics. Or keep reading for a more in-depth look at what’s in the “basket of metrics” and how to interpret it.

Citation-based metrics. Impact Factor: What is the Impact Factor? The Impact Factor is probably the most well-known metric for assessing journal performance. Designed to help librarians with collection management in the 1960s, it has since become a common proxy for journal quality. The Impact Factor is a simple research metric: it’s the average number of citations received by articles in a journal within a two-year window. -
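
The "average number of citations ... within a two-year window" can be made concrete with a short sketch. It assumes the usual formulation: citations received in a given year to items published in the preceding `window` years, divided by the number of items published in those years. The function name, parameter names, and all figures are hypothetical.

```python
def impact_factor(citations_by_pub_year, items_by_pub_year, census_year, window=2):
    """Citations received in census_year to items from the previous `window`
    years, divided by the number of items published in those years."""
    years = range(census_year - window, census_year)
    cites = sum(citations_by_pub_year.get(year, 0) for year in years)
    items = sum(items_by_pub_year.get(year, 0) for year in years)
    if items == 0:
        raise ValueError("no items published in the window")
    return cites / items


# Hypothetical journal: citations received during 2016, keyed by the
# publication year of the cited article, and articles published per year.
citations_in_2016 = {2014: 120, 2015: 90}
articles_published = {2014: 60, 2015: 45}
print(impact_factor(citations_in_2016, articles_published, census_year=2016))
```

Passing `window=5` gives the five-year variant of the same ratio.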

Download PDF (41.8

Abbreviations and acronyms (from Imad A. Moosa, Publish or Perish, 9781786434937)

AAAS: American Association for the Advancement of Science
ABDC: Australian Business Deans Council
ABS: Chartered Association of Business Schools
AER: American Economic Review
AERES: Agence d’Evaluation de la Recherche et de l’Enseignement Supérieur
AIDS: Acquired Immune Deficiency Syndrome
APA: American Psychology Association
ARC: Australian Research Council
ARCH: autoregressive conditional heteroscedasticity
AWCR: age-weighted citation rate
BARDsNET: Business Academic Research Directors’ Network
BIS: Bank for International Settlements
BMOP: bring money or perish
CEO: Chief Executive Officer
CIP: covered interest parity
CSSE: Computer Science and Software Engineering Conference
CTV: Canadian Television Network
CV: curriculum vitae
CWPA: Council of Writing Program Administrators
DOAJ: Directory of Open Access Journals
ECR: early-career researcher
EJ: Economic Journal
EL: Economics Letters
EMH: efficient market hypothesis
ERA: Excellence in Research for Australia
ESF: European Science Foundation
FNEGE: Foundation National pour l’Enseignement de la Gestion des Entreprises
FoR: field of research
GTS: Good Teaching Scale
HASS: humanities, arts and social sciences
HEC: Hautes Etudes Commerciales
HEFCE: Higher Education Funding Council for England
HP: Hodrick-Prescott
IEEE: Institute of Electrical and Electronic Engineers
IF: impact factor
IJED: International Journal of Exotic Dancing
IMF: International -

New Perspectives Welcome to the First Research Trends Magazine, Which Accompanies Our 15th Issue of Research Trends Online

Research Trends magazine: New perspectives

Welcome to the first Research Trends magazine, which accompanies our 15th issue of Research Trends online. Research Trends 15 is a special issue devoted to the role of bibliometric indicators in journal evaluation and research assessment. Over the past 40 years, academic evaluation has changed radically, in both its objectives and methods. Bibliometrics has grown to meet emerging challenges, and new metrics are frequently introduced to measure different aspects of academic performance and improve upon earlier, pioneering work.

It is becoming increasingly apparent that assessment and benchmarking are here to stay, and bibliometrics are an intrinsic aspect of today’s evaluation landscape, whether of journals, researchers or institutes. This is not uncontroversial, and we have featured many critics of bibliometric analysis in general and of specific tools or their applications in past issues (please visit www.researchtrends.com). The bibliometric community acknowledges these shortcomings and is tirelessly campaigning for better understanding and careful use of the metrics they produce.

Therefore, in this issue, we speak to three of the world’s leading researchers in this area, who all agree that bibliometric indicators should always be used with expertise and care, and that they should never completely supersede more traditional methods of evaluation, such as peer review. In fact, they all advocate a wider choice of more carefully calibrated metrics as the only way to ensure fair assessment.

If you would like to read any of our back issues or subscribe to our bi-monthly e-newsletter, please visit www.researchtrends.com

Kind regards, The Research Trends Editorial Board

Research Trends is a bi-monthly online newsletter providing objective, up-to-the-minute insights into scientific trends based on bibliometric analysis. -

Ten Good Reasons to Use the Eigenfactor Metrics in Our Opinion, There Are Enough Good Reasons to Use the Eigenfactor Method to Evaluate Journal Influence: 1

Ten good reasons to use the Eigenfactor™ metrics. Massimo Franceschet, Department of Mathematics and Computer Science, University of Udine, Via delle Scienze 206 – 33100 Udine, Italy [email protected] (Accepted for publication in Information Processing & Management. DOI: 10.1016/j.ipm.2010.01.001. Preprint submitted to Elsevier January 18, 2010.)

Abstract: The Eigenfactor score is a journal influence metric developed at the Department of Biology of the University of Washington and recently introduced in the Science and Social Science Journal Citation Reports maintained by Thomson Reuters. It provides a compelling measure of journal status with solid mathematical background, sound axiomatic foundation, intriguing stochastic interpretation, and many interesting relationships to other ranking measures. In this short contribution, we give ten reasons to motivate the use of the Eigenfactor method. Key words: Journal influence measures; Eigenfactor metrics; Impact Factor.

1. Introduction: The Eigenfactor metric is a measure of journal influence (Bergstrom, 2007; Bergstrom et al., 2008; West et al., 2010). Unlike traditional metrics, like the popular Impact Factor, the Eigenfactor method weights journal citations by the influence of the citing journals. As a result, a journal is influential if it is cited by other influential journals. The definition is clearly recursive in terms of influence and the computation of the Eigenfactor scores involves the search of a stationary distribution, which corresponds to the leading eigenvector of a perturbed citation matrix. The Eigenfactor method was initially developed by Jevin West, Ben Althouse, Martin Rosvall, and Carl Bergstrom at the University of Washington and Ted Bergstrom at the University of California Santa Barbara. -
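
The recursive idea in the introduction (a journal is influential if influential journals cite it) can be illustrated with a power iteration on a small citation matrix. This is a simplified, PageRank-style sketch and not the published Eigenfactor algorithm: the uniform teleportation, the damping value of 0.85, the handling of journals that cite nothing, and the three-journal matrix are all assumptions made for illustration.

```python
def influence_scores(citations, damping=0.85, iterations=200):
    """Approximate the stationary distribution of a perturbed citation matrix.

    citations[i][j] is the number of citations from journal j to journal i.
    """
    n = len(citations)
    # Total outgoing citations of each citing journal (column sums).
    col_sums = [sum(citations[i][j] for i in range(n)) for j in range(n)]
    scores = [1.0 / n] * n
    for _ in range(iterations):
        spread = [0.0] * n
        for j in range(n):
            if col_sums[j] == 0:
                # A journal that cites nothing passes its weight on uniformly.
                for i in range(n):
                    spread[i] += scores[j] / n
            else:
                # Otherwise its weight is split in proportion to its citations.
                for i in range(n):
                    spread[i] += scores[j] * citations[i][j] / col_sums[j]
        # The perturbation: mix in a uniform jump so the iteration converges.
        scores = [damping * value + (1.0 - damping) / n for value in spread]
    return scores


# Hypothetical journals A, B, C: B and C cite A, A cites B, nobody cites C.
example = [
    [0, 5, 3],  # citations received by A (from A, B, C)
    [4, 0, 0],  # citations received by B
    [0, 0, 0],  # citations received by C
]
for name, score in zip("ABC", influence_scores(example)):
    print(name, round(score, 3))
```

In this toy network A ends up ahead of B, and C, which receives no citations, keeps only the share contributed by the uniform jump.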

Positioning Open Access Journals in a LIS Journal Ranking

Positioning Open Access Journals in a LIS Journal Ranking. Jingfeng Xia. This research uses the h-index to rank the quality of library and information science journals between 2004 and 2008. Selected open access (OA) journals are included in the ranking to assess current OA development in support of scholarly communication. It is found that OA journals have gained momentum supporting high-quality research and publication, and some OA journals have been ranked as high as the best traditional print journals. The findings will help convince scholars to make more contributions to OA journal publications, and also encourage librarians and information professionals to make continuous efforts for library publishing.

According to the Budapest Open Access Initiatives, open access (OA) denotes “its free availability on the public internet, permitting any users to read, download, copy, distribute, print, search, or link to the full texts of these articles, crawl them for indexing, pass them as data to software, or use them for any other lawful purpose, without financial, legal, or technical barriers other than those inseparable from gaining access to the internet itself.”1 Therefore, an OA journal is referred to as one that is freely available online in full text.

[...] the complexity of publication behaviors, various approaches have been developed to assist in journal ranking, of which comparing the rates of citation using citation indexes to rate journals has been popularly practiced and recognized in most academic disciplines. ISI’s Journal Citation Reports (JCR) is among the most used rankings, which “offers a systematic, objective means to critically evaluate the world’s leading journals, with quantifiable, statistical information based on citation data.”4 Yet, citation-based journal rankings, such as JCR, have included few -

New Approaches to Ranking Economics Journals

05‐12 New Approaches to Ranking Economics Journals. Yolanda K. Kodrzycki and Pingkang Yu. Abstract: We develop a flexible, citations‐ and reference‐intensity‐adjusted ranking technique that allows a specified set of journals to be evaluated using a range of alternative criteria. We also distinguish between the influence of a journal and that of a journal article, with the latter concept arguably being more relevant for measuring research productivity. The list of top economics journals can (but does not necessarily) change noticeably when one examines citations in the social science and policy literatures, and when one measures citations on a per‐article basis. The changes in rankings are due to the broad interest in applied microeconomics and economic development, to differences in citation norms and in the relative importance assigned to theoretical and empirical contributions, and to the lack of a systematic effect of journal size on influence per article. We also find that economics is comparatively self‐contained but nevertheless draws knowledge from a range of other disciplines. JEL Classifications: A10, A12 Keywords: economics journals, social sciences journals, policy journals, finance journals, rankings, citations, research productivity, interdisciplinary communications. Yolanda Kodrzycki is a Senior Economist and Policy Advisor at the Federal Reserve Bank of Boston. Her email address is [email protected]. Pingkang Yu is a Ph.D. student at George Washington University. His email address is [email protected]. Much of the research for this paper was conducted while Yu was with the Federal Reserve Bank of Boston. This paper, which may be revised, is available on the web site of the Federal Reserve Bank of Boston at http://www.bos.frb.org/economic/wp/index.htm. -

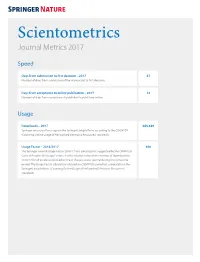

Scientometrics Journal Metrics 2017

Scientometrics Journal Metrics 2017

Speed
Days from submission to first decision – 2017: 47. Number of days from submission of the manuscript to first decision.
Days from acceptance to online publication – 2017: 13. Number of days from acceptance at publisher to published online.

Usage
Downloads – 2017: 688,649. Springer measures the usage on the SpringerLink platform according to the COUNTER (Counting Online Usage of NeTworked Electronic Resources) standards.
Usage Factor – 2016/2017: 406. The Springer Journal Usage Factor 2016/17 was calculated as suggested by the COUNTER Code of Practice for Usage Factors. It is the median value of the number of downloads in 2016/17 for all articles published online in that particular journal during the same time period. The Usage Factor calculation is based on COUNTER-compliant usage data on the SpringerLink platform.

Impact
Impact Factor – 2016: 2.147. Journal Impact Factors are published each summer by Thomson Reuters via Journal Citation Reports®. Impact Factors and ranking data are presented for the preceding calendar year.
5 Year Impact Factor – 2016: 2.346. The 5-year journal Impact Factor is the average number of times articles from the journal published in the past five years have been cited in the JCR year. It is calculated by dividing the number of citations in the JCR year by the total number of articles published in the five previous years.
SNIP – 2016: 1.319. Source Normalized Impact per Paper (SNIP) measures contextual citation impact by weighting citations based on the total number of citations in a subject field.
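
The Usage Factor above is described as a median rather than a mean. A minimal sketch of that calculation follows, using Python's standard `statistics.median`; the function name and the download counts are hypothetical and are not Springer data.

```python
from statistics import median


def usage_factor(downloads_per_article):
    """Median number of downloads across the articles in the period."""
    if not downloads_per_article:
        raise ValueError("at least one article is required")
    return median(downloads_per_article)


# Hypothetical download counts for five articles in one period.
example_downloads = [10, 40, 25, 300, 55]
print(usage_factor(example_downloads))
```

A median keeps one heavily downloaded article (300 in this example) from dominating the figure, which a mean would not.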