Deep Convolutional Neural Network for Hand Sign Language Recognition Using Model E

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications

-

SFG1325 V6 GOVERNMENT of the SPECIAL CAPITAL CITY REGION of JAKARTA PROVINCE WATER RESOURCES AGENCY Jalan Taman Jatibaru No

SFG1325 V6 GOVERNMENT OF THE SPECIAL CAPITAL CITY REGION OF JAKARTA PROVINCE WATER RESOURCES AGENCY Jalan Taman Jatibaru No. 1 Telephone (021) 3846608 – 3848435 Facsimile (021) 3850255 Website: www.sumberdayaair.jakarta.go.id E-mail: [email protected] J A K A R T A Postal Code: 10150 Public Disclosure Authorized LAND ACQUISITION AND RESETTLEMENT ACTION PLAN (LARAP) Public Disclosure Authorized LOCATION WEST BANJIR CANAL PLUIT SUB-DISTRICT, PENJARINGAN DISTRICT Public Disclosure Authorized NORTH JAKARTA Public Disclosure Authorized REVISION: APRIL 2017 LAND ACQUISITION AND RESETTLEMENT ACTION PLAN (LARAP) WEST BANJIR CANAL (WBC) Table of Contents I. INTRODUCTION ………………………………………………………………… 1 1.1. Background ………………………………………………………………. 1 1.2. General Description of Project Location ……………………………… 1 1.3. Project Activity Plan …………………………………………………….. 2 1.4. Potential Impacts of Project on Community…………………………….. 3 1.5. LARAP Preparation Objective ………………………………………….. 3 II. CHARACTERISTICS OF PEOPLE, LANDS AND POTENTIALLY STRUCTURES AFFECTED BY PROJECT ………………………………….. 4 2.1. Description of Affected Lands ………………………………… 4 2.2. Description of Affected Structures ………………………….. 5 2.3. Description of Potential Affected People ………………….. ………….. 6 III. IMPLEMENTATION PLAN FOR THE HANDLING OF PROJECT AFFECTED PEOPLE …………………………………………………….... 13 3.1. Policies of the Government of DKI Jakarta Province ……………… 13 3.2. Legal Analysis …………………………………………………………… 16 3.3. Institution …………………………………………………………………. 18 3.4. Monitoring and Evaluation ……………………………………………… 21 3.5. Complaint Handling ……………………………………………………… 22 3.6. Implementation of Handling of Project Affected People ………….. 22 i | P a g e LAND ACQUISITION AND RESETTLEMENT ACTION PLAN (LARAP) WEST BANJIR CANAL (WBC) LIST OF TABLES Table 1 Activity Plan …………………………………………………………….. 2 Table 2 Summary on Affected Lands …………………….……………………. 5 Table 3 Summary on Affected Structures ……………………………. 5 Table 4 Summary on Profile of Project Affected People ……………………. -

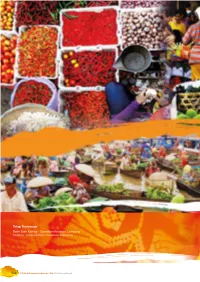

Corporate Data Financial Statements & Analysis Responsibility

Highlights Management Reports Company Profile Tetap Tersenyum Ratih Diah Kartika - Operation Kedaton, Lampung First Place - Traditional Market Photography Competition 556 PT Bank Danamon Indonesia, Tbk. 2014 Annual Report Management Discussion Operational Review Corporate Governance Corporate Social Corporate Data Financial Statements & Analysis Responsibility Corporate Data 2014 Annual Report PT Bank Danamon Indonesia, Tbk. 557 Highlights Management Reports Company Profile Products and Services DANAMON SIMPAN PINJAM TabunganKU Low cost cheap savings, without monthly Dana Pinter 50 (DP50) administration fee with low opening deposit and Financing facility (with collateral) for small and micro easily accessible by the public. scale entrepreneur (SME), merchant and individual, with a quick credit approval following the receipt Capital Solution (SM) of complete documents. The maximum financing Loan facility without collateral for small and micro provided shall be Rp100 million. entrepreneur (SME), merchant and individual with quick approval process for business expansion. Dana Pinter 200 (DP200) The maximum financing provided shall be Rp50 Loan facility (with collateral) for small and micro million. scale entrepreneur (SME), merchant and individual, with a quick credit approval following the receipt Special Capital Solution of complete documents. The maximum financing Loan facility without collateral for small and micro provided shall be Rp500 million. entrepreneur (SME), merchant and individual with quick approval process for business expansion with Current Account Loan (PRK) a condition of having a minimum loan history of 12 Loan for working capital needs for small and micro months. The maximum financing provided shall be scale entrepreneur (SME), merchant and individual Rp50 million. with a term of 1 year and may be extended. -

No. Kecamatan Kelurahan Jumlah Nilai Kepuasan 1 CENGKARENG RAWA BUAYA 434 92.40 KAPUK 376 87.15 DURI KOSAMBI 111 86.46 CENGKAREN

Nilai SKM dan Jumlah Responden Perkecamatan-Kelurahan untuk pelayanan Ketertiban Umum Tahun 2020 Nilai No. Kecamatan Kelurahan Jumlah Kepuasan 1 CENGKARENG RAWA BUAYA 434 92.40 KAPUK 376 87.15 DURI KOSAMBI 111 86.46 CENGKARENG BARAT 192 86.24 KEDAUNG KALIANGKE 196 86.00 CENGKARENG TIMUR 312 83.85 CENGKARENG Total 1621 87.63 2 GROGOL PETAMBURAN TANJUNG DUREN SELATAN 126 99.18 JELAMBAR BARU 354 89.30 TANJUNG DUREN UTARA 293 88.26 JELAMBAR 202 88.15 WIJAYA KUSUMA 370 88.06 GROGOL 189 87.29 TOMANG 358 86.75 GROGOL PETAMBURAN Total 1892 88.75 3 KALIDERES KAMAL 146 94.63 PEGADUNGAN 400 87.74 SEMANAN 324 87.32 TEGAL ALUR 362 86.51 KALIDERES 373 85.17 KALIDERES Total 1605 87.41 4 KEBON JERUK KEDOYA UTARA 108 93.54 KELAPA DUA 118 90.73 SUKABUMI UTARA 333 88.71 SUKABUMI SELATAN 292 86.81 KEDOYA SELATAN 318 86.40 KEBON JERUK 180 86.34 DURI KEPA 340 81.47 KEBON JERUK Total 1689 86.69 5 KEMBANGAN KEMBANGAN UTARA 139 94.70 KEMBANGAN SELATAN 117 92.47 SRENGSENG 124 90.23 JOGLO 164 88.77 MERUYA UTARA 208 88.43 MERUYA SELATAN 303 86.21 KEMBANGAN Total 1055 89.33 6 PALMERAH SLIPI 160 92.43 KOTA BAMBU SELATAN 405 91.02 JATI PULO 126 87.65 KOTA BAMBU UTARA 287 85.29 PAL MERAH 527 83.89 KEMANGGISAN 224 83.56 PALMERAH Total 1729 86.81 Nilai No. Kecamatan Kelurahan Jumlah Kepuasan 7 TAMANSARI GLODOK 105 90.77 TANGKI 112 89.88 KRUKUT 135 89.07 PINANGSIA 122 87.34 TAMAN SARI 126 87.06 KEAGUNGAN 233 86.97 MANGGA BESAR 234 86.67 MAHPAR 193 85.48 TAMANSARI Total 1260 87.53 8 TAMBORA DURI SELATAN 129 98.77 DURI UTARA 214 96.78 ROA MALAKA 110 93.03 TAMBORA 204 92.37 TANAH SEREAL 396 91.42 KALI ANYAR 382 87.94 KRENDANG 415 87.04 JEMBATAN LIMA 196 86.39 ANGKE 308 83.58 JEMBATAN BESI 220 82.50 PEKOJAN 239 81.00 TAMBORA Total 2813 88.39 Nilai SKM dan Jumlah Responden Perkecamatan-Kelurahan untuk pelayanan PPSU Tahun 2020 Nilai No. -

126C0e7fffda51c891e5cf453753

INFORMASI PEMBANGUNAN KOTA ADMINISTRASI JAKARTA BARAT TAHUN 2018 RAPBD SKPD/UKPD USULAN MUSRENBANG Tindak Lanjut Musrenbang KETERANGAN SKPD/UKPD No Kecamatan Kelurahan RW Masalah Lokasi Usulan Kegiatan Volume Satuan Anggaran Indikatif Kegiatan Anggaran Tujuan 1 Grogol Jelambar 1 jalan tidak ada trotoarnya, Jl Hadiah 1 sepanjang Blok D 14 Pembangunan trotoar 615 m2 273.367.500,0 SUKU DINAS BINA Pembangunan trotoar dan bangunan 273.367.500 Petamburan sehingga pejalan kaki tidak aman menghadap selatan, berbatasan MARGA KOTA - pelengkap jalan di Kota Adm. Jakarta dan nyaman dengan jalur rel KA (RW03)#615 m2# JAKBAR Barat 2 Grogol Jelambar 1 Trotoar dan got ditimbun oknum Jl Hadiah 2 dan Hadiah utama 5 di Pembangunan saluran 100 m 224.378.700,0 SUKU DINAS BINA Pembangunan/peningkatan jalan di 224.378.700 Petamburan sehingga tidak ada got dan samping sekolah Darma Jaya RT07 (tepi jalan) MARGA KOTA - Kota Adm. Jakarta Barat trotoar, padahal dulu ada. RW01 Kel. Jelambar Kec Grogol JAKBAR Petamburan Jakarta Barat#100 meter# 3 Grogol Jelambar 2 Jalanan rendah JL. Jelambar Uatama 3, RT 011 RW 02 , Perbaikan Jalan 192 m2 36.864.000,0 SUKU DINAS BINA Pemeliharaan jalan lingkungan dan 36.864.000 Petamburan Gang Rukun 3, Jelambar , Grogol Lingkungan/Orang dengan MARGA KOTA - orang di Kota Adm. Jakarta Barat Petamburan, Jakarta Barat#2# Beton JAKBAR 4 Grogol Jelambar 2 Jalanan Rendah Jl. Jelambar Utama 3, RT 011 RW 02, Perbaikan Jalan 218 m2 41.856.000,0 SUKU DINAS BINA Pemeliharaan jalan lingkungan dan 41.856.000 Petamburan #3# Lingkungan/Orang dengan MARGA KOTA - orang di Kota Adm. -

SD SMA Abimas Gustaviansyah 1701371044 SDN KRAMAT PELA

Sekolah Nama Kelompok NIM SD SMA SDN KRAMAT PELA 07 PG. Jl. Abimas 1701371044 Gandaria Tengah II Blok D No. 1, gustaviansyah Kebayoran Baru, SMA NEGERI 29 Jl. Kramat No.6, Abraham 1601277290 Kebayoran Lama SDN KEBON JERUK 18 PG. Adam Rivaldi 1601222341 Jl. Kemiri, Kebon Jeruk Adhitia Rizki 1701354076 Hermawan Putra SDN PONDOK LABU 04 PG. Adi Riswanto 1701316512 Jl. H. Saleh No. 37, Cilandak SDN KARET TENGSIN 14 PT. Jl. Karet Pasar Baru Adistiara Kasyfi 1701357973 Barat 1 No. 16, Tanah Abang SDN GROGOL SELATAN 13 PG. Adlan Awwala 1701311820 Jl. Komp. Hamkan Cidodol, Kebayoran Lama, Adrian Citra SDN SRENGSENG 05 PG. Jl. Raya 1701348883 Pradana Srengseng No. 56, Kembangan SDN CIPULIR 04 PT. Jl. SMK NEGERI 37 Jl. Pertanian III, Adrian Hartanto 1701331482 Ciledug Raya, Kebayoran Lama Pasar Minggu Agnes Wijaya 1701351181 Agung Damassaputra 1701325214 SDN JATI PULO 03 PG. Jl. SMA NEGERI 25 Jl. AM. Sangaji No. AGUS SALIM 1701313990 Semangka II No. 34, Palmerah 22-24, Gambir SDN TANJUNG DUREN SELATAN Agustinus Kevin SMK NEGERI 38 Jl. Kebon Sirih No. 1701312880 01 PG. Jl. Tanjung Duren Timur Pongoh 38, Gambir V/26, Grogol Petamburan, SDN PESANGGRAHAN 02 Ahmad Ilham PG. Jl. Raya Kodam Jaya Aulia 1601265233 Bintaro, Pesanggrahan, Ahmad Syifa SDN DURI KEPA 01 PG. Jl. Raya SMK NEGERI 60 Jl. Duri Raya No. 1701290034 Rahmatullah Duri Kepa, Kebon Jeruk 15, Kebon Jeruk SDN KEB. LAMA SELATAN 19 PG. JL. DHARMA PUTRA RAYA SMA NEGERI 109 Jl. Gardu No. 31, aiko olivia 1701339831 NO 51 KOMP.KOSTRAD, Jagakarsa Kebayoran Lama, Aileen Natalia SDN KELAPA DUA 01 PG. -

NO. NAMA SEKOLAH ALAMAT 1 SMP ABDI SISWA Jl. Patra Raya Tomang Raya No. 1, Kebon Jeruk 2 SMP ABDI SISWA II 1, Kebon Jeruk 3 SMP AD-DA'wah Jl

NO. NAMA SEKOLAH ALAMAT 1 SMP ABDI SISWA Jl. Patra Raya Tomang Raya No. 1, Kebon Jeruk 2 SMP ABDI SISWA II 1, Kebon Jeruk 3 SMP AD-DA'WAH Jl. Madrasah Tanah Koja Rt. 007/02, Cengkareng 4 SMP AL ABROR Jl. Srengseng, Kembangan 5 SMP AL AZHAR Jl. H. Sa'aba Raya Komp. Unilever, Kembangan 6 SMP AL BAYAN ISLAMIC SCHOOL Jl. Bazoka Raya No. 6, Kembangan 7 SMP AL CHASANAH Jl. Tg. Duren Barat III/1, Grogol Petamburan 8 SMP AL HAMIDIYAH Jl. Raya Kedoya Selatan No.50, Kebon Jeruk Jl. Daud No. 15 Sukabumi Utara Kebon Jeruk, 9 SMP AL HASANAH Kebon Jeruk 10 SMP AL HUDA Jl. Utama Raya No. 2, Cengkareng 11 SMP AL HUDA Jl. H. Alimun Kelapa Dua, Kebon Jeruk 12 SMP AL INAYAH JL. SMU 57, Kebon Jeruk 13 SMP AL KAMAL Jl. Raya Al Kamal No.2 Kedoya Selatan, Kebon Jeruk 14 SMP AL MU'MIN Jl. Kapuk Kamal, Kalideres 15 SMP AL MUKHLISH Jl. Asia Baru Duri Kepa No. 60, Kebon Jeruk Jl. Belimbing Raya Komp. Bojong Indah, 16 SMP AL MUKHLISIN Cengkareng 17 SMP AL QOMAR Jl. Kamal Raya No. 1, Kalideres 18 SMP AL WASHILAH Jl. Kembangan Baru No.20, Kembangan 19 SMP AL-ABASIYAH Jl. Kebun Kelapa, Kalideres 20 SMP AL-JIHAD Jl. Kapuk Kebun Jahe, Cengkareng 21 SMP AL-MANSHURIYAH Jl. Kembangan Baru No. 109, Kembangan 22 SMP AL-YAUMA Jl. Masjid Al Istiqomah, Cengkareng 23 SMP AMITAYUS Jl. Seni Budaya Raya I, Grogol Petamburan 24 SMP AR ROHMAN Jl. Benda Raya No. 07, Kalideres 25 SMP ASH SOLIHIN Jl. -

NO. NAMA INVESTOR ALAMAT1 ALAMAT2(LANJUTAN) L/A STATUS INVESTOR NAMA PEMEGANG REKENING JUMLAH PERSEN 1 Aditia Arta Kustiwan Jl

DAFTAR PEMEGANG SAHAM PT. MERCK SHARP DOHME PHARMA, Tbk PER TANGGAL 29 MARET 2018 NO. NAMA INVESTOR ALAMAT1 ALAMAT2(LANJUTAN) L/A STATUS INVESTOR NAMA PEMEGANG REKENING JUMLAH PERSEN 1 Aditia Arta Kustiwan Jl. Kembang Molek 1 Blok J3/9 L INDIVIDUAL - DOMESTIC NISP SEKURITAS, PT 123 0.00 2 ARVAN CARLO DJOHANSJAH JL KRAMAT SENTIONG NO 1 - 32 RT/RW 010/007 KEL.KRAMAT KEC.SENEN L INDIVIDUAL - DOMESTIC PT Anugerah Sekuritas Indonesia 300 0.01 3 DARWIN GUNAWAN Jl. Mangga Besar IV/27 Rt 4/2 Taman Sari Jakarta Barat L INDIVIDUAL - DOMESTIC PT OSO SEKURITAS INDONESIA 300 0.01 4 DJOHAN ALAMSYAH, DR JL.KRAMAT SENTIONG NO.I/32 RT/RW.010/007 KEL.KRAMAT KEC.SENEN L INDIVIDUAL - DOMESTIC PT Anugerah Sekuritas Indonesia 100 0.00 5 EDIJANTO JUSUF PT. IFARS PHARMACEUTICAL LABORATORIES JL. BALAI PUSTAKA TIMUR NO. 39 BLOK F/3 RAWAMANGUN L INDIVIDUAL - DOMESTIC PT DBS VICKERS SEKURITAS INDONESIA 2,500 0.07 6 GLOBAL INTER CAPITAL, PT Jl. Medan Merdeka Selatan No. 14 Gambir Jakarta Pusat L PERUSAHAAN TERBATAS NPWP DANAREKSA SEKURITAS, PT 50 0.00 7 Kustiwan Kamarga Jl Kembang Molek 1 BLK J3/9 Rt/Rw 010/003 Kel.Kembangan Selatan Kec.Kembangan L INDIVIDUAL - DOMESTIC PT UOB KAY HIAN SEKURITAS 141 0.00 8 LIAUW FOENG SENG BUKIT DURI PERMAI BLOK A NO. 1 L INDIVIDUAL - DOMESTIC PT MNC SEKURITAS 50 0.00 9 LIDIAWATI LINGGADIHARDJA JL. PULOMAS TIMUR B/11 RT. 011 RW. 011 KEL. KAYU PUTIH KEC. PULO GADUNG L INDIVIDUAL - DOMESTIC PT Mega Capital Sekuritas 550 0.02 10 Lusiana Pramana JELAMBAR BARU 2 NO.3A JAKARTA BARAT L INDIVIDUAL - DOMESTIC PT PRATAMA CAPITAL SEKURITAS 150 0.00 11 LYNN CHOEANNATA JL. -

DAFTAR PEMEGANG SAHAM PT Hotel Fitra Internasional Tbk Per 30 Juni 2020

DAFTAR PEMEGANG SAHAM PT Hotel Fitra Internasional Tbk Per 30 Juni 2020 Nama No Nama Alamat1 Alamat2 Propinsi L / A Status Pemegang Jumlah Saham % Rekening PT. KGI JL. TARUMANEGARA CIREUNDEU, CIPUTAT INDIVIDUAL - 1 WIENA HANIDA Banten L Sekuritas 37,102,400 6.18 B.1 RT. 005 RW. 004 TIMUR DOMESTIC Indonesia RT009/007 PT LOTUS HENDRA JL.PURI KENCANA BLK. Kel.KEMBANGAN INDIVIDUAL - 2 Jakarta L ANDALAN 35,000,000 5.83 SUTANTO M5 NO.3 SELATAN DOMESTIC SEKURITAS Kec.KEMBANGAN PT JASA RT 017/015 Kel. Jl. Kelapa Hibrida III INDIVIDUAL - UTAMA 3 Jon Fieris Pegangsaan Dua Kec. Jakarta L 15,000,000 2.50 RB-6/8 DOMESTIC CAPITAL Kelapa Gading SEKURITAS PT. KGI KOMP. BEA CUKAI BLK INDIVIDUAL - 4 SUSAEDI MUNIF SUKAPURA, CILINCING Jakarta L Sekuritas 12,467,900 2.08 R 5/2 RT. 014 RW. 007 DOMESTIC Indonesia PT VALBURY SUSANTY JL.JOMAS NO.21 A KEL.MERUYA UTARA INDIVIDUAL - 5 Jakarta L SEKURITAS 8,918,800 1.49 LUKMAN RT.007 RW.005 KEC.KEMBANGAN DOMESTIC INDONESIA JL.JERUK BALI V/34, PT KIWOOM INDIVIDUAL - 6 BUDIYANTO RT005/006, DURI KEPA, JAKARTA Jakarta L SEKURITAS 8,549,700 1.42 DOMESTIC KEBON JERUK INDONESIA RT/RW. 015/005 Kel. PT PANCA PERUM BUNGA LESTARI INDIVIDUAL - 7 NENI TRIBUANI KEDUNGARUM Kec. Jawa Barat L GLOBAL 8,250,000 1.37 BLOK B18 DOMESTIC KUNINGAN SEKURITAS PT FAC HENDRA JL. KALI CIPINANG, INDIVIDUAL - 8 Jakarta L SEKURITAS 7,961,500 1.33 TARIPAR RT.002/004 DOMESTIC INDONESIA BNI CAROLINA 002/011 ANCOL INDIVIDUAL - 9 JL PARANG TRITIS I/18 Jakarta L SEKURITAS, 7,829,200 1.30 KUSUMA PADEMANGAN DOMESTIC PT MUHAMAD TOMANG BANJIR KANAL INDIVIDUAL - PT MNC 10 Jakarta L 6,747,300 1.12 SUKRON RT9 RW14 DOMESTIC SEKURITAS PARAMITRA Gg. -

Part-D Formulation of Master Plan

PART-D FORMULATION OF MASTER PLAN The Project for Capacity Development of Wastewater Sector Through Reviewing the Wastewater Management Master Plan in DKI Jakarta PART-D FORMULATION OF MASTER PLAN D1 General Considerations D1.1 Improvement Targets Targeted items for improvement by the implementation of sewerage and sanitation projects to be proposed in the New M/P and the coverage ratios for each target are as follows in view of the implementation plan by the Ministry of Public Works and the view point of securing the water sources for water supply services in the future. Table SMR-D1-1 Improvement Targets and Development of Coverage Ratio Medium-term Short-term plan Long-term plan plan (2012 – 2020) (2031 – 2050) Improvement Target Unit (2021 – 2030) Y2012 Y2014 Y2020 Y2025 Y2030 Y2035 Y2040 Y2045 Y2050 1,000 Design Population 12,665 12,665 12,665 12,665 12,665 12,665 12,665 12,665 12,665 person Administration 1,000 10,035 10,361 11,284 11,994 12,665 12,665 12,665 12,665 12,665 Population person Facility Coverage % 2 7 20 30 40 50 65 75 80 Ratio*1 Service Cover 2 % 2 4 15 25 35 45 55 70 80 age Ratio* Wastewater 1,000m3/day 34 77 337 577 896 1,133 1,404 1,692 2,011 Flow*3 Served Off-site (sewerage) (sewerage) Off-site 1,000 Population for 168 387 1,685 2,884 4,478 5,775 7,130 8,572 10,166 person Off-site On-site Defecation % 85 96 85 75 65 55 45 30 20 Ratio CST Coverage % 83 81 64 47 32 20 11 4 0 Ratio MST Coverage % 2 15 21 28 32 34 33 28 20 Ratio Served Populati 1,000 4 8,567 9,974 9,599 9,110 8,188 6,890 5,535 4,093 2,500 on for On-site* -

Region Kabupaten Kecamatan Kelurahan Alamat Agen Agen Id Nama Agen Pic Agen Jaringan Kantor Jabo Inner Jakarta Barat Cengkareng

REGION KABUPATEN KECAMATAN KELURAHAN ALAMAT AGEN AGEN ID NAMA AGEN PIC AGEN JARINGAN KANTOR JABO INNER JAKARTA BARAT CENGKARENG CENGKARENG BARAT JL. MELATI IV NO. 14 RT/RW 010/001 KEL. CENGKARENG BARAT KEC. CENGKARENG 213BB0116P000002 WARUNG MAKAN DEVI DEVI WIDIASTUTI BTPN PURNABAKTI DAAN MOGOT JABO INNER JAKARTA BARAT CENGKARENG CENGKARENG BARAT JL. SLANTAN RT/RW 010/001 KEL. CENGKARENG BARAT KEC. CENGKARENG 213BB0116P000005 PRIMA ABADI LAUNDRY YUNIARTI KURNIAWATI BTPN PURNABAKTI DAAN MOGOT JABO INNER JAKARTA BARAT CENGKARENG CENGKARENG BARAT JL. CC I NO. 18 A RT/RW 005/004 KEL. CENGKARENG BARAT KEC. CENGKARENG 213BB0110P000056 KETUA RT IRFAIH IRFA'IH BTPN PURNABAKTI DAAN MOGOT JABO INNER JAKARTA BARAT CENGKARENG CENGKARENG BARAT JL. RAWA BENGKEL NO. 19 RT/RW 005/007 KEL. CENGKARENG BARAT KEC. CENGKARENG 213BB0109P000059 GALAXY CELL RUDY SANTOSO BTPN PURNABAKTI DAAN MOGOT JABO INNER JAKARTA BARAT CENGKARENG CENGKARENG BARAT JL. UTAMA RAYA NO. 47 RT/RW 009/004 KEL. CENGKARENG BARAT KEC. CENGKARENG 213BB0109P000061 RAFI CELL IRWAN SUSILO BTPN PURNABAKTI DAAN MOGOT JABO INNER JAKARTA BARAT CENGKARENG CENGKARENG BARAT JL. KAMAL RAYA FLAMBOYAN NO. 12 RT/RW 002/008 KEL. CENGKARENG BARAT KEC. CENGKARENG 213BB0116P000087 NEW HARPENS CELL SUHARDI BTPN PURNABAKTI DAAN MOGOT JABO INNER JAKARTA BARAT CENGKARENG CENGKARENG BARAT JL. JAYA RAYA RT/RW 001/009 NO. 52 KEL. CENGKARENG BARAT KEC. CENGKARENG 213BB0116P000093 TOKO SRI WIJAYA ABDUL RAHMAN BTPN PURNABAKTI DAAN MOGOT JABO INNER JAKARTA BARAT CENGKARENG CENGKARENG BARAT RUSUN DINAS KEBERSIHAN RT/RW 014/005 KEL. CENGKARENG BARAT KEC. CENGKARENG 213BB0109P000060 AHMAD YANI AHMAD YANI BTPN PURNABAKTI DAAN MOGOT JABO INNER JAKARTA BARAT CENGKARENG CENGKARENG BARAT RUSUN FLAMBOYAN ELT RT/RW 014/010 KEL. -

ELMEKON HOME PAGE 14 November 2010

Home ELMEKON, PT. Business Link Leading Technics for the 21st Century ELMEKON, PT. Vision & Values Facilities Social Responsibility Home Corporate Governance Established in 2000, ELMEKON, PT. is an Integrated Electrical, Mechanical, Trading, Transportation, Data Networking, Contracting & Installation Company. To oversee its multidiscipline activities, the Company has three divisions and four business segments Profile reaching all parts of Indonesia with its Jakarta head office. The Company’s products & services portfolio includes 6 business units and five departments in Indonesia. In 2006, the Company established a third business segment, called Support & Service, which is S.H.E. made of four business units offering value-add services for customers requiring installation, supply chain, IT and air conditioning. Human Capital CORPORATE Management Team This portal provides overview information regarding the Company. Please enter to read more about the Company, its history, its people and its business commitments. Career information may also be accessed through this portal. Inside ELMEKON COMMERCIAL This portal provides information and links to our products and services. Please enter to Job Opportunities contact our Customer Service. Products by Industry INVESTOR This portal provides an array of reports for the keen investor. From annual reports to audit reports, from investor newsletters to share price history, reports that are Mechanical & Electrical specifically published for our analysts and investors can be found through this portal. -

985Dc401ac078210d464099107

INFORMASI PEMBANGUNAN KOTA ADMINISTRASI JAKARTA BARAT TAHUN 2018 RAPBD SKPD/UKPD USULAN MUSRENBANG Tindak Lanjut Musrenbang KETERANGAN SKPD/UKPD No Kecamatan Kelurahan RW Masalah Lokasi Usulan Kegiatan Volume Satuan Anggaran Indikatif Kegiatan Anggaran Tujuan 1 Grogol Jelambar Baru 2 JALAN RUSAK SUDAH LAMA TIDAK DI JALAN JELAMBAR JAYA V RT 010 RT Perbaikan Jalan 300 m2 57.600.000,0 SUKU DINAS BINA Pemeliharaan jalan lingkungan dan orang 57.600.000 Petamburan PERBAIKI HUJAN JADI KUBANGAN 011 RW 02#panjang jalan 50 M# Lingkungan/Orang dengan MARGA KOTA - di Kota Adm. Jakarta Barat AIR Beton JAKBAR 2 Grogol Jelambar Baru 3 Saluran air tersendat dikarenakan Jl. Jelambar Selatan III RT. 03, 04, 05 Perbaikan/peningkatan 370 m 754.728.430,9 SUKU DINAS Pemeliharaan saluran tepi jalan, saluran 754.728.431 Petamburan saluraan kecil tidak sesuai dengan dan RW. 03 Kelurahan Jelambar Baru Saluran Tepi Jalan Lokal SUMBER DAYA penghubung dan kelengkapannya di volume air yang masuk di Jl. #370 Cm# (lebar jalan > 3m) AIR KOTA - wilayah Jakarta Barat Jelambar Selatan III RT. 03, RT 05, JAKBAR dan RT. 06 RW. 03 3 Grogol Jelambar Baru 4 Sanitasi saluran air tidak berfungsi Jalan Jelambar Selatan XIV Rt.010/04 , Perbaikan/peningkatan 80 m 163.184.525,6 SUKU DINAS Pemeliharaan saluran tepi jalan, saluran 163.184.526 Petamburan karena sudah menjadi gundukan Kelurahan Jelambar Baru , Kecamatan Saluran Tepi Jalan Lokal SUMBER DAYA penghubung dan kelengkapannya di tanah Grogol Petamburan , Jakarta Barat (lebar jalan > 3m) AIR KOTA - wilayah Jakarta Barat 11460#80 meter# JAKBAR 4 Grogol Jelambar Baru 5 saluran sepanjang Jl.