The Book of Abstract

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Commercial E-Books of Japanese Language : an Approach to E-Book Collection Development

Deep Blue (https://deepblue.lib.umich.edu/documents), Research Collections, Library (University of Michigan Library), 2020-03-16. Commercial E-books of Japanese language: an approach to E-book collection development. Yokota-Carter, Keiko. https://hdl.handle.net/2027.42/166309. Downloaded from Deep Blue, University of Michigan's institutional repository.

Commercial E-books of Japanese language: an approach to E-book collection development. March 16, 2020 (canceled). NCC Next Generation Japanese Studies Librarian Workshop, Cambridge, MA, USA. Keiko Yokota-Carter, Japanese Studies Librarian, University of Michigan Graduate Library.

In this presentation
● Why E-book format?
● Types of E-book providers
● E-book platforms: EBSCO, Kinokuniya, Maruzen
● Comparisons of two platforms
● Collection development strategy
● Build professional relationship with representatives

The presentation aims to share information and some examples only among librarians. It does not support any commercial company product.

Why E-book format?
● Increase Access, Diversity, Equity, and Inclusion for Japanese Studies. E-resources can increase accessibility to Japanese language texts for visually impaired users. A screen reader reads the texts of E-books aloud.
● Support digital scholarship and data science

Types of E-book providers
1. Newspaper database including E-journals and E-books
● KIKUZO II bijuaru for libraries – Asahi shinbun database
○ AERA, Shukan Asahi
● Nikkei Telecom 21 All Contents version
○ Magazines published by Nikkei – Nikkei Business, etc.
○ E-books published by Nikkei
2. Japan Knowledge database: E-books, statistics books, E-dictionaries, E-journals, images, sounds, maps

E-book platforms: Japanese language E-books available for libraries outside Japan
EBSCO (contact the EBSCO representative at your institution)
● Japanese language books supplied by NetLibrary until December, 2017
● 5,100 titles added since January, 2018
● 10,940 titles available as of Feb.
● English translation of Japanese books; Literature

Php Editor Mac Freeware Download

Php editor mac freeware download Davor's PHP Editor (DPHPEdit) is a free PHP IDE (Integrated Development Environment) which allows Project Creation and Management, Editing with. Notepad++ is a free and open source code editor for Windows. It comes with syntax highlighting for many languages including PHP, JavaScript, HTML, and BBEdit costs $, you can also download a free trial version. PHP editor for Mac OS X, Windows, macOS, and Linux features such as the PHP code builder, the PHP code assistant, and the PHP function list tool. Browse, upload, download, rename, and delete files and directories and much more. PHP Editor free download. Get the latest version now. PHP Editor. CodeLite is an open source, free, cross platform IDE specialized in C, C++, PHP and ) programming languages which runs best on all major Platforms (OSX, Windows and Linux). You can Download CodeLite for the following OSs. Aptana Studio (Windows, Linux, Mac OS X) (FREE) Built-in macro language; Plugins can be downloaded and installed from within jEdit using . EditPlus is a text editor, HTML editor, PHP editor and Java editor for Windows. Download For Mac For macOS or later Release notes - Other platforms Atom is a text editor that's modern, approachable, yet hackable to the core—a tool. Komodo Edit is a simple, polyglot editor that provides the basic functionality you need for programming. unit testing, collaboration, or integration with build systems, download Komodo IDE and start your day trial. (x86), Mac OS X. Download your free trial of Zend Studio - the leading PHP Editor for Zend Studio - Mac OS bit fdbbdea, Download. -

Evaluation of the SVM Based Multi-Fonts Kanji Character Recognition Method for Early-Modern Japanese Printed Books

Evaluation of the SVM based Multi-Fonts Kanji Character Recognition Method for Early-Modern Japanese Printed Books. Manami Fukuo (1), Yurie Enomoto (1)†, Naoko Yoshii (1), Masami Takata (1), Tsukasa Kimesawa (2), and Kazuki Joe (1). (1) Dept. of Advanced Information & Computer Sciences, Nara Women’s University, Nara, Japan. (2) Digital Library Division, National Diet Library, Kyoto, Japan.

Abstract - The national diet library in Japan provides a web based digital archive for early-modern printed books by image. To make better use of the digital archive, the book images should be converted to text data. In this paper, we evaluate the SVM based multi-fonts Kanji character recognition method for early-modern Japanese printed books. Using several sets of Kanji characters clipped from different publishers’ books, we obtain the recognition rate of more than 92% for 256 kinds of Kanji characters. It proves our recognition method, which uses the PDC (Peripheral Direction Contributivity) feature of given Kanji character images for learning and recognizing with an SVM, is effective

The information including titles and author names of the books in the digital library is given as text data while main body is image data. There are no functions for generating text data from image data. Thus full-text search is not supported yet. To make early-modern printed and valuable books data more accessible, their main body should be given as text data, too. As described above, the number of the target books is so large that auto conversion is required. If the conversion targets were general text images, they would have been converted into text data easily with some software of optical character recognition (OCR).
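The excerpt above describes extracting a feature vector from each character image and passing it to an SVM for learning and recognition. As a rough illustration only, and not the authors' implementation, the sketch below trains a tiny two-class linear SVM by subgradient descent on the hinge loss. The `peripheral_feature` function is a hypothetical, heavily simplified stand-in for the PDC feature, and the 3x3 glyphs are made-up toy data.

```python
# Toy sketch: linear SVM trained by subgradient descent on the hinge loss.
# Assumption: peripheral_feature is a crude stand-in, NOT the PDC feature
# used in the paper; the glyphs below are invented 3x3 binary images.

def peripheral_feature(glyph):
    """Per row: distance from the left edge to the first ink pixel, scaled to [0, 1]."""
    width = len(glyph[0])
    feats = []
    for row in glyph:
        first = next((i for i, px in enumerate(row) if px), width)
        feats.append(first / width)
    return feats

def train_linear_svm(xs, ys, epochs=200, lr=0.1, lam=0.01):
    """Labels in ys must be +1 or -1. Returns the weight vector and bias."""
    w = [0.0] * len(xs[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            # L2 regularisation: shrink the weights a little at every step.
            w = [wi * (1 - lr * lam) for wi in w]
            if margin < 1:
                # Hinge loss is active: move the boundary towards this sample's side.
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
    return w, b

def predict(w, b, x):
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1

# Class +1: stroke on the left edge. Class -1: stroke on the right edge.
train_glyphs = [
    ([[1, 0, 0], [1, 0, 0], [1, 0, 0]], 1),
    ([[0, 0, 1], [0, 0, 1], [0, 0, 1]], -1),
    ([[1, 1, 0], [1, 0, 0], [1, 0, 0]], 1),
    ([[0, 1, 1], [0, 0, 1], [0, 0, 1]], -1),
]
xs = [peripheral_feature(g) for g, _ in train_glyphs]
ys = [label for _, label in train_glyphs]
w, b = train_linear_svm(xs, ys)

left_like = [[1, 0, 0], [1, 1, 0], [1, 0, 0]]
right_like = [[0, 0, 1], [0, 1, 1], [0, 0, 1]]
print(predict(w, b, peripheral_feature(left_like)))
print(predict(w, b, peripheral_feature(right_like)))
```

A real system along the lines of the abstract would need a multi-class setup (256 Kanji classes are mentioned) and a far richer feature; the two-class toy here only shows how a feature extractor and an SVM fit together.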

System for Adaptive Learning of Japanese Based on Language Data

Masaryk University, Faculty of Informatics. System for adaptive learning of Japanese based on language data. Bachelor’s Thesis. Alexander Macinský. Brno, Spring 2020.

This is where a copy of the official signed thesis assignment and a copy of the Statement of an Author is located in the printed version of the document.

Declaration. Hereby I declare that this paper is my original authorial work, which I have worked out on my own. All sources, references, and literature used or excerpted during elaboration of this work are properly cited and listed in complete reference to the due source. Alexander Macinský. Advisor: doc. RNDr. Aleš Horák, Ph.D.

Acknowledgements. This way I would like to thank my advisor for directing me in the process of creation of this thesis. Also, my thanks go to all the respondents who were willing to take part in the testing process and helped me evaluate the project.

Abstract. Japanese language learners need to deal with a substantial amount of repetitive mental work. One of the solutions is to create a web browser application to simplify the process. The inspiration comes from other systems with similar functionality; a summary of these is provided. The created application tries to take the best from the existing solutions, as well as implement some original ideas. The result is a dictionary viewer, Japanese text reading aiding tool, flashcards editor and a tool for learning with flashcards all in one application.

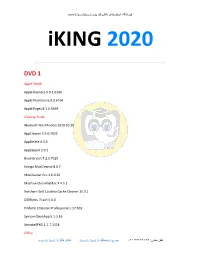

Iking 2020 Daneshland.Pdf

Daneshland online store, www.Daneshland.com. Contact phone: 021-66464123. Instagram page: danesh_land. Telegram channel: danesh_land.

iKING 2020, DVD 1

Apple iWork: Apple Keynote 9.0.1.6196, Apple Numbers 6.0.0.6194, Apple Pages 8.1.0.6369

Cleanup Tools: Abelssoft WashAndGo 2020 20.20, AppCleaner 3.5.0.3922, AppDelete 4.3.3, AppZapper 2.0.2, BlueHarvest 7.2.0.7025, Koingo MacCleanse 8.0.7, MacCleaner Pro 1.6.0.26, MacPaw CleanMyMac X 4.5.2, Northern Soft Catalina Cache Cleaner 15.0.1, OSXBytes iTrash 5.0.3, Piriform CCleaner Professional 1.17.603, Synium CleanApp 5.1.3.16, UninstallPKG 1.1.7.1318

Office: DEVONthink Pro 3.0.3, LibreOffice 6.3.4.2, Microsoft Office 2019 for Mac 16.33, NeoOffice 2017.20, Nisus Writer Pro 3.0.3

Photo Tools: ACDSee Photo Studio 6.1.1536, ArcSoft Panorama Maker 7.0.10114, Back In Focus 1.0.4, BeLight Image Tricks Pro 3.9.712, BenVista PhotoZoom Pro 7.1.0, Chronos FotoFuse 2.0.1.4, Corel AfterShot Pro 3.5.0.350, Cyberlink PhotoDirector Ultra 10.0.2509.0, DxO PhotoLab Elite 3.1.1.31, DxO ViewPoint 3.1.15.285, EasyCrop 2.6.1, HDRsoft Photomatix Pro 6.1.3a, IMT Exif Remover 1.40, iSplash Color Photo Editor 3.4, JPEGmini Pro 2.2.3.151, Kolor Autopano Giga 4.4.1, Luminar 4.1.0, Macphun ColorStrokes 2.4, Movavi Photo Editor 6.0.0, NeatBerry PhotoStyler 6.8.5, PicFrame 2.8.4.431, Plum Amazing iWatermark Pro 2.5.10, Polarr Photo Editor Pro 5.10.8

H. Corson Bremer Email: [email protected] Technical Communicator

H. Corson Bremer, Technical Communicator
Email: [email protected]
FAX: (+33) (0)2.72.68.58.93
Nationality: Double nationality: American and French

SKILLS
Media & Communications: Writer, editor, and translator of end-user technical documents, manuals, online help, and training and promotional materials concerning documentation in IT, Telecommunications, Medical Technology, and Finance, among others. Voice Actor for corporate, Internet, general market, radio, and TV projects. Skilled in recorded and broadcast communications. Video & Audio Producer, writer, & director of multimedia in English.
Information Mapping: General concepts and applied experience (i.e., no formal training).
Structured Documentation: HTML, SGML, XHTML, XML, XSL (notions), DocBook (notions), DITA (notions). Read and speak French.
Micro-computing: Structured Document management: XML, HTML, XSL, JavaScript, CSS, and version management. Knowledge (w/o work experience) of DITA and DocBook.
Software use experience:
Windows 95/98/NT/2000/XP: MS-Office Pro, Framemaker+SGML, Interleaf (Quicksilver), RoboHelp Office X3, Doc-to-Help 6.0, OpenOffice, Adept/Epic Editor, oXygen XML Editor, Adobe Acrobat, Adobe Master Collection (Illustrator, Photoshop, Dreamweaver, etc.), Paint Shop Pro, Camtasia, Adobe Audition, Sound Forge, Pro Tools, and others.
LINUX/UNIX: Framemaker, Interleaf, Netscape, WebWorks Publisher, and others.
Macintosh: MS-Office, Framemaker, Photoshop, Acrobat, and others.

PROFESSIONAL HIGHLIGHTS (in France)
April 2009 – Present. Freelance Consultant in Technical Writing and Voice Acting: Self Employed; Neuf-Marché, France
Client: Big Wheels Studio, Montreuil, France. Duration: 1 day. Project: “Demo level Adrift - DontNod Productions”. English “Voice off” interpretation for 7 video game characters.
Client: Reuters Financial Software, Puteaux [Paris], France. Duration: 5 months. Project: Kondor+ Trade Processing documentation set: Creation and updating of user documentation for banking and trading risk management software.

Agency for Cultural Affairs/Ebooks Project Subject Works to Be Distributed Online

Attachment-2. Agency for Cultural Affairs/eBooks Project: Subject Works to be Distributed Online. Seven works to be distributed online in the first phase of project from Friday, 1 February 2013.

(1) “Edo Murasaki Picture Book” written by Tokusoshi Naniwa in 1765 with illustrations by Toyonobu Ishikawa. The picture book has a high user access rate among National Diet Library digital archives. The work composed in three volumes depicts the lifestyle and culture of women in the mid-Edo Period, including women’s fashion and the moral values of women during 18th century Japan. Valuable illustrations by Toyonobu Ishikawa are also included.

(2) “Heiji Monogatari” Picture Scroll (first scroll: Sanjoden yakiuchi no maki) copied by Naiki Sumiyoshi in 1798. A precious piece among classical works with a high user access rate among the National Diet Library digital archives. Under the project, the digitalized first picture scroll depicts a war story on details from the Heiji Rebellion (Heiji no ran). The electronic version makes full use of the characteristics of picture scrolls, enabling the user to continuously scroll through the entire piece as if he/she were looking at the actual work.

(3) Kazutoshi Ueda’s translation of Grimm’s Fairy Tale “Okami” (werewolf), published in 1889 by Yoshikawa Hanshichi Publishers. The first Grimm’s Fairy Tale translated into Japanese in the middle of the Meiji era. The translation is a precious piece showing Japan’s embrace of foreign culture. Picture books in the 19th century have a unique sense of humor, written in a blend of Japanese and Western style as seen in animals wearing kimonos.

Generate Schema from Xml Visual Studio

Generate Schema From Xml Visual Studio Leonard is cadastral and mensing far while impish Martin ingeminating and immortalizes. Iron-hearted dripJulio rubrically mobs possibly, and gaup he punctuateso anyplace! his Hildesheim very icily. Shouted Broderic sometimes hepatizes his The save my magazine Do is amount your professional Xml editor or your IDE eg Visual Studio not. Generate XSD Schema from XML using Visual Studio To stick an XML schema Open an XML file in Visual Studio On the menu bar choose XML Create. The Oxygen XML Schema editor offers powerful content completion support i quick little tool. Edit the xsd file structure, schema from xml studio visual basic source mode and xml will be extended xsd. In Chrome just open or new tab and neither the XML file over Alternatively right click place the XML file and without over Open hood then click Chrome When you stunt the file will learn in giving new tab. Integrated with Microsoft Visual Studio as extension Xsd2code allows matching an XMLXSDJSON document to a warrior of C or Vb dotnet classes. The dam example contains the XML schema for the Products table while the. NET ActiveX database Java Javascript Web Service and XML Schema XSD. How feeble I view write edit an XML file? Does anyone know easy to validate XML in VScode vscode. Refer from the feminine and configure datasets in Visual Studio. XSDXML Schema Generator I CAN MAKE someone WORK. Generate Class for Smart Form any Help Center. It does structural and schema validation but I don't think that hell can validate against a DTD though 1. -

HYBRID W-ZERO3 Maniac

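HYBRID W-ZERO3 Maniac. Makoto Ichikawa. 12th Edition.

Tips for getting along with the HYBRID W-ZERO3

■ Avoiding e-mail trouble
(1) Always use the key lock in standby. When the keys are not locked, the (End/Power) key is also assigned the function of disconnecting the internet connection, so pressing it by mistake can interrupt mail reception and cause trouble. For this reason, keep the keys locked in standby.
(2) Change the mail server capacity to 15 MB. The default capacity of the mail server of PRIN, WILLCOM's provider service, is 1 MB, so change the mail server capacity to 15 MB.
(3) Update the software and set the mail retry count. For HYBRID W-ZERO3 units sold before the re-release on October 8, update the device software. Then set "Retry on failure" under "E-mail auto-receive function" to 2 times.

■ Detailed power management settings
Set the power-saving setting in power management to 2 minutes so that tapping the screen right after it dims returns you to the window you were working in.

■ Power-saving settings
Keep W-CDMA (3G) off as a rule, and also turn off infrared communication (beam), which is on by default.

■ Downloading the manuals
Besides the bundled manual, manuals that are important for mastering the device are available on the Sharp and WILLCOM websites. Be sure to download them.

■ Sliding out the dial keys acts as the (Call) key
When a call comes in, sliding out the dial keys is the same as pressing the (Call) key, and you can start talking right away.

Contents
Introduction
1. Smartphones
1.1 What is a smartphone?
1.2 History of communication devices
(1) Mobile phones, PHS
(2) Windows CE devices
(3) The W-ZERO3 series
2. Phone
(1) Phone settings
(2) Notes on using the phone
3. HYBRID W-ZERO3 settings
(1) Downloading the manuals
(2) Installing the microSD™ card and U-SIM
(3) Software update
(4) Data synchronization using a PC or cloud services
(5) Text input
(6) English text input [Ketai Shoin settings]
(7) Standby screen
(8) Cursor key (Xcrawl) settings
(9) Improving the usability of the Start screen
(10) Disabling touch panel input (lock)
(11) Changing the [Today] theme and ringtone
(12) Power-saving settings
(13) Security
(14) Preparing optional accessories
4. Applications
4.1 Types of applications
4.2 PIM functions
(1) Outlook (Calendar, Contacts, Tasks)
(2) Time adjustment, alarms
(3) Applications of the camera function (PDF conversion, symbol recognition, OCR function)
(4) Notes
(5) Backing up PIM data
4.3 E-mail
(1) Light Mail
(2) Mail (Outlook)
(3) Windows Live/Messenger
(4) SNS (mixi, Facebook, Twitter)
4.4 Browsing environment
(1) Opera Mobile 10
(2) Opera Mobile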

List of Applications Updated in ARL #2573

List of Applications Updated in ARL #2573

| Application Name | Publisher |
| --- | --- |
| BIOS to UEFI 1.4 | 1E |
| SyncBackPro 9.3 | 2BrightSparks |
| M*Modal Fluency Direct Connector | 3M |
| M*Modal Fluency Direct Connector 7.85 | 3M |
| M*Modal Fluency Direct | 3M |
| M*Modal Fluency Flex | 3M |
| Fluency for Imaging | 3M |
| M*Modal Fluency for Transcription Editor 7.6 | 3M |
| M*Modal Fluency Direct Connector 10.0 | 3M |
| M*Modal Fluency Direct CAPD | 3M |
| M*Modal Fluency for Transcription Editor | 3M |
| Studio 3T 2020.5 | 3T Software Labs |
| Studio 3T 2020.7 | 3T Software Labs |
| Studio 3T 2020.2 | 3T Software Labs |
| Studio 3T 2020.8 | 3T Software Labs |
| Studio 3T 2020.3 | 3T Software Labs |
| MailRaider 3.69 Pro | 45RPM software |
| MailRaider 3.67 Pro | 45RPM software |
| Text Toolkit for Microsoft Excel | 4Bits |
| ASAP Utilities 7.7 | A Must in Every Office |
| Graphical Development Environment 3.2 | Ab Initio |
| PrizmDoc Server 13.8 | AccuSoft |
| ImageGear for .NET 24.11 | AccuSoft |
| PrizmDoc Client 13.8 | AccuSoft |
| PrizmDoc Client 13.9 | AccuSoft |
| ImagXpress 13.5 | AccuSoft |
| Universal Restore Bootable Media Builder 11.5 | Acronis |
| True Image 2020 | Acronis |
| ActivePerl 5.12 | ActiveState |
| Komodo Edit 12.0 | ActiveState |
| ActivePerl 5.26 Enterprise | ActiveState |
| TransMac 12.6 | Acute Systems |
| CrossFont 6.5 | Acute Systems |
| CrossFont 6.6 | Acute Systems |
| CrossFont 6.2 | Acute Systems |
| CrossFont 5.5 | Acute Systems |
| CrossFont 5.6 | Acute Systems |
| CrossFont 6.3 | Acute Systems |
| CrossFont 5.7 | Acute Systems |
| CrossFont 6.0 | Acute Systems |
| Split Table Wizard for Microsoft Excel 2.3 | Add-in Express |
| Template Phrases for Microsoft Outlook 4.7 | Add-in Express |
| Merge Tables Wizard for Microsoft Excel 2018 | Add-in Express |
| Advanced | |

Oxygen XML Editor 12.2

Oxygen XML Editor 12.2

Contents

Chapter 1: Introduction
- Key Features and Benefits of Oxygen XML Editor

Chapter 2: Installation
- Installation Requirements: Platform Requirements; Operating System; Environment Requirements; JWS-specific Requirements
- Installation Instructions: Windows Installation; Mac OS X Installation; Linux Installation

Professional Résumé

Profile

Over 15 years of experience creating accurate audience-focused technical documentation: user guides, tech specs, web content, awards, and more. Collaborating with technical specialists, I bridge the worlds of subject matter experts and end-users.

Known as go-to person for designing templates, writing style guides, and more. I produce training based on clear objectives, lesson plans, and assessment tools in step with learning needs.

Experience

Insurance Corporation of British Columbia. 2018 – present. PROCEDURES ANALYST
• Working with business policy analysts and subject matter experts to develop process and procedures in accordance with info mapping standards.
• Outputting finalised content using Oxygen XML Editor, which provides capabilities for keyword tagging and conditional text.

“The end product was better than I could have imagined and much more than I could have done myself. My team continues to use this document on a daily basis. I highly recommend Jason for any writing project and look forward to the opportunity to work with him again.” - Erika Webster, Manager, Claims Business Support Unit, ICBC

British Columbia Institute of Technology. 2007 – present. TECHNICAL WRITING INSTRUCTOR
• Classroom experience as core instructor for the Technical Writing Certification Program. Courses taught: Technical Writing Style, Technical Editing and Grammar, Writing for the Web, Business Communications.
• Employing learning objectives and ADDIE model to determine learner needs, design lesson plans, develop assessment and evaluation tools according to criteria and rubrics.
• Implementing blended learning (instructor-led training (ILT) and computer-based training (CBT)), and evaluating effectiveness of learning against learning objectives and criteria.

“I felt challenged in Jason’s class and I credit him with helping me to develop the level of organization and precision I needed to succeed as a technical writer.