The Virtual Reality Modeling Language Specification the VRML


Recommended publications


The Use of VRML to Integrate Design and Solid Freeform Fabrication

The Use of VRML to Integrate Design and Solid Freeform Fabrication Yanshuo Wang, Jian Dong*, Harris L. Marcus Solid Freeform Fabrication Laboratory, University of Connecticut, Storrs, CT 06269 ABSTRACT The Virtual Reality Modeling Language (VRML) was created to put interconnected 3D worlds onto every desktop. The 3D VRML format has potential for 3D fax and Tele Manufacture. This paper describes an architecture and methodology for using the VRML format to integrate a 3D model with a Solid Freeform Fabrication system. The prototype software discussed in this paper demonstrates the use of VRML for Solid Freeform Fabrication process planning. The path from design to part is described. 1. INTRODUCTION The STL (Stereolithography) format, established by 3D Systems, is an ASCII or binary file format used in Solid Freeform Fabrication (SFF). It is a list of the triangular surfaces that approximate a computer-generated solid model. This file format has become the de facto standard in the rapid prototyping industry. But the STL format is limited in visualization, communication, and information sharing between sites. In recent years, Tele Manufacture has become a new area of design and manufacturing research. Researchers are trying to create an automated rapid prototyping capability on the Internet (Bailey, 1995). To improve communication and information exchange over the Internet, a new data format is needed for SFF. Bauer and Joppe (1996) suggested using the Virtual Reality Modeling Language (VRML) data format as a standard for rapid prototyping. VRML was created to put interconnected 3D worlds onto every desktop, and it has become the standard language for the 3D World Wide Web.
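To make the STL-to-VRML idea concrete, here is a minimal sketch, not the prototype software from the paper. It assumes the triangles have already been read from an STL file into Python tuples, and it writes them as a VRML97 `IndexedFaceSet`. The function name `triangles_to_vrml` and the sample tetrahedron are hypothetical, chosen only for illustration.

```python
def triangles_to_vrml(triangles):
    """Return VRML97 text for triangles given as tuples of three (x, y, z) points.

    Hypothetical helper: shared vertices are stored once and referenced by index,
    which is the main structural difference from an STL triangle list.
    """
    points = []      # unique vertices, in first-seen order
    index_of = {}    # vertex -> position in `points`
    faces = []       # one list of three vertex indices per triangle
    for tri in triangles:
        face = []
        for vertex in tri:
            key = tuple(float(c) for c in vertex)
            if key not in index_of:
                index_of[key] = len(points)
                points.append(key)
            face.append(index_of[key])
        faces.append(face)

    point_text = ",\n        ".join("%g %g %g" % p for p in points)
    # In VRML97 each face in coordIndex is terminated by -1.
    index_text = ",\n      ".join("%d, %d, %d, -1" % tuple(f) for f in faces)
    return (
        "#VRML V2.0 utf8\n"
        "Shape {\n"
        "  geometry IndexedFaceSet {\n"
        "    coord Coordinate {\n"
        "      point [\n"
        "        " + point_text + "\n"
        "      ]\n"
        "    }\n"
        "    coordIndex [\n"
        "      " + index_text + "\n"
        "    ]\n"
        "  }\n"
        "}\n"
    )


# Sample input (an assumption for this sketch): the four faces of a tetrahedron.
a, b, c, d = (0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1)
tetra = [(a, c, b), (a, b, d), (b, c, d), (a, d, c)]
vrml_text = triangles_to_vrml(tetra)
print(vrml_text)
```

The twelve STL corner points collapse to four shared vertices here, which hints at why an indexed format can be more compact than a flat triangle list.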

3D Visualization in Digital Library of Mathematical Function

Web-Based 3D Visualization in a Digital Library of Mathematical Functions Qiming Wang, Bonita Saunders National Institute of Standards and Technology [email protected], [email protected] Abstract The National Institute of Standards and Technology (NIST) is developing a digital library of mathematical functions to replace the widely used National Bureau of Standards Handbook of Mathematical Functions [Abramowitz and Stegun 1964]. The NIST Digital Library of Mathematical Functions (DLMF) will provide a wide range of information about high level functions for scientific, technical and educational users in the mathematical and physical sciences. Clear, concise 3D visualizations that allow users to examine poles, zeros, branch cuts and other key features of complicated functions will be an integral part of the DLMF. Specially designed controls will enable users to move a cutting plane through the function surface, select the surface color mapping, choose the axis style, or transform the surface plot into a density plot. High level, or special, mathematical functions such as the Bessel functions, gamma and beta functions, hypergeometric functions and others are important for solving many problems in the mathematical and physical sciences. The graphs of many of these functions exhibit zeros, poles, branch cuts and other singularities that can be better understood if the function surface can be manipulated to show these features more clearly. A digital library offers a unique opportunity to create cutting-edge 3D visualizations that not only help scientists and other technical users, but also make the library more accessible to educators, students and others interested in an introduction to the field of special functions. After investigating web-based 3D graphics file formats, we selected the Virtual Reality Modeling Language (VRML) to

The Virtual Reality Modeling Language (VRML) and Java

The Virtual Reality Modeling Language and Java Don Brutzman Code UW/Br, Naval Postgraduate School Monterey California 93943-5000 USA [email protected] Communications of the ACM, vol. 41 no. 6, June 1998, pp. 57-64. http://www.web3D.org/WorkingGroups/vrtp/docs/vrmljava.pdf Abstract. The Virtual Reality Modeling Language (VRML) and Java provide a standardized, portable and platform-independent way to render dynamic, interactive 3D scenes across the Internet. Integrating two powerful and portable software languages provides interactive 3D graphics plus complete programming capabilities plus network access. Intended for programmers and scene authors, this paper provides a VRML overview, synopsizes the open development history of the specification, provides a condensed summary of VRML 3D graphics nodes and scene graph topology, describes how Java interacts with VRML through detailed examples, and examines a variety of VRML/Java future developments. Overview. The Web is being extended to three spatial dimensions thanks to VRML, a dynamic 3D scene description language that can include embedded behaviors and camera animation. A rich set of graphics primitives provides a common-denominator file format which can be used to describe a wide variety of 3D scenes and objects. The VRML specification is now an International Standards Organization (ISO) specification (VRML 97). Why VRML and Java together? Over twenty million VRML browsers have shipped with Web browsers, making interactive 3D graphics suddenly available for any desktop. Java adds complete programming capabilities plus network access, making VRML fully functional and portable. This is a powerful new combination, especially as ongoing research shows that VRML plus Java provide extensive support for building large-scale virtual environments (LSVEs).

COLLADA: an Open Standard for Robot File Formats

COLLADA: An Open Standard for Robot File Formats
Rosen Diankov*, Ryohei Ueda*, Kei Okada*, Hajime Saito**
*JSK Robotics System Laboratory, The University of Tokyo; **General Robotix Inc.
Sept 8, 2011, 09:30-11:00 and 12:30-14:00
Introduction
• Robotics Software Platforms
  – To share research tools between robots
• Missing Link: Standard Robot File Format
[Diagram labels: OpenRAVE for planning, EusLisp, ROS for PR2, Robot File Format, OpenHRP for controllers, DARwIn-OP, Choregraphe for Nao, Webots]
Standard Robot File Format
• Define robot file format standards?
  – Kinematics, Geometry, Physics
  – Sensors, Actuators
  – And more?
• Content-scalable
  – There will always be information that another developer wants to insert
  – NOT adding new information to extend the original content, BUT adding new content.
  – Manage new content and allow extensibility of existing content with the scalability.
COLLADA Features (1)
• COLLADA: COLLAborative Design Activity
  – http://www.khronos.org/collada/
• XML-based open standard
• Originally started as 3D model format
• Most recent version (1.5) supports physics, kinematics and b-rep splines.
• Supported by Blender, OpenSceneGraph and SolidWorks.
[Figure labels: Mimic joint, Closed link]
COLLADA Specification
• Core content management tags to dictate the referencing structures
  – Geometries, kinematics bodies, physics bodies and joints as libraries
• Defines different types of scenes for graphics, physics and kinematics
  – Bind joints and links to form these scenes, not one-to-one relations between graphics and kinematics
• COLLADA kinematics supports

Implementation of Web 3D Tools for Creating Interactive Walk Through

INTRODUCTION OF SVG AS A DATA INTERCHANGE FORMAT FOR ARCHITECTURAL DOCUMENTATIONS Günter Pomaska Faculty of Architecture and Civil Engineering, Bielefeld University of Applied Sciences, Germany [email protected] CIPA Working Group VII - Photography Keywords: Architecture, Internet / Web, modelling, architectural heritage conservation, vector graphics Abstract: Web tools provide software for generating data formats and interactive viewing. Since the bandwidth of the Web is still limited, not by technology but by the accessibility of hardware, several companies introduced their own formats, targeting small file sizes and speed. 3D environments have been published on the Web since 1997. Numerous software packages were released to the market; many of them soon disappeared without gaining importance. Owing to proprietary formats and the limited bandwidth of Internet connections, most of them became failures. VRML, the Virtual Reality Modelling Language, was introduced to create and model virtual environments. This standard, defined by the Web3D Consortium, is used more for off-line presentations than for distributing 3D worlds via the Internet. SVG (Scalable Vector Graphics), introduced in 2001, is limited to 2D and is intended as the Web community's answer to Macromedia's Flash. SVG is defined by the World Wide Web Consortium (W3C) for 2D vector graphics. The success of SVG lies in its standardisation and unification of this XML-based language. SVG makes interactive viewing and animation of graphic content possible. A visitor to a Web site can zoom through maps and drawings containing a huge amount of data. The quality of the presentation is high and even loss-free despite very large scale factors.

Visualizing Information Using SVG and X3D, Vladimir Geroimenko and Chaomei Chen (Eds)

Visualizing Information Using SVG and X3D: XML-based Technologies for the XML-based Web Vladimir Geroimenko and Chaomei Chen (Eds) With 125 Figures including 86 Colour Plates Vladimir Geroimenko, DSc, PhD, MSc School of Computing, University of Plymouth, Plymouth PL4 8AA, UK Chaomei Chen, PhD, MSc, BSc College of Information Science and Technology, Drexel University, 3141 Chestnut Street, Philadelphia, PA 19104-2875, USA British Library Cataloguing in Publication Data Visualizing information using SVG and X3D: XML-based technologies for the XML-based web 1. Information visualization 2. Computer graphics 3. SVG (Document markup language) 4. XML (Document markup language) 5. Semantic Web I. Geroimenko, Vladimir, 1955– II. Chen, Chaomei, 1960– 005.2′76 ISBN 1852337907 CIP data available Apart from any fair dealing for the purposes of research or private study, or criticism or review, as permitted under the Copyright, Designs and Patents Act 1988, this publication may only be reproduced, stored or transmitted, in any form or by any means, with the prior permission in writing of the publishers, or in the case of reprographic reproduction in accordance with the terms of licences issued by the Copyright Licensing Agency. Enquiries concerning reproduction outside those terms should be sent to the publishers. ISBN 1-85233-790-7 Springer London Berlin Heidelberg Springer is a part of Springer Science+Business Media springeronline.com © Springer-Verlag London Limited 2005 Printed in the United States of America The use of registered names, trademarks, etc. in this publication does not imply, even in the absence of a specific statement, that such names are exempt from the relevant laws and regulations and therefore free for general use.

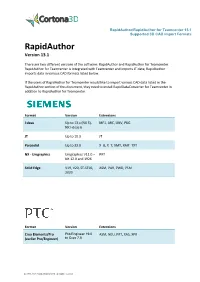

RapidAuthor/RapidAuthor for Teamcenter 13.1 Supported 3D CAD Import Formats

RapidAuthor/RapidAuthor for Teamcenter 13.1 Supported 3D CAD Import Formats. RapidAuthor Version 13.1. There are two different versions of the software: RapidAuthor and RapidAuthor for Teamcenter. RapidAuthor for Teamcenter is integrated with Teamcenter and imports JT data; RapidAuthor imports data in the various CAD formats listed below. Users of RapidAuthor for Teamcenter who would like to import the CAD data listed in the RapidAuthor section of this document need to install RapidDataConverter for Teamcenter in addition to RapidAuthor for Teamcenter.

| Format | Version | Extensions |
| --- | --- | --- |
| I-deas | Up to 13.x (NX 5), NX I-deas 6 | MF1, ARC, UNV, PKG |
| JT | Up to 10.3 | JT |
| Parasolid | Up to 32.0 | X_B, X_T, XMT, XMT_TXT |
| NX - Unigraphics | Unigraphics V11.0 – NX 12.0 and 1926 | PRT |
| Solid Edge | V19, V20, ST-ST10, 2020 | ASM, PAR, PWD, PSM |
| Creo Elements/Pro (earlier Pro/Engineer) | Pro/Engineer 19.0 to Creo 7.0 | ASM, NEU, PRT, XAS, XPR |

| Format | Version | Extensions | Import of textures and texture coordinates |
| --- | --- | --- | --- |
| Autodesk Inventor | Up to 2021 | IPT, IAM | Supported |
| AutoCAD | Up to 2019 | DWG, DXF | |
| Autodesk 3DS | All versions | 3ds | Supported |
| Autodesk DWF | All versions | DWF, DWFX | Supported |
| Autodesk FBX | All binary versions, ASCII data 7100 – 7400 | FBX | Supported |
| ACIS | Up to 2020 | SAT, SAB | |
| CATIA V4 | Up to 4.2.5 | MODEL, SESSION, DLV, EXP | |
| CATIA V5 | Up to V5-6 R2020 (R29) | CATDRAWING, CATPART, CATPRODUCT, CATSHAPE, CGR, 3DXML | |
| CATIA V6 / 3DExperience | Up to V5-6 R2019 (R29) | 3DXML | |
| SolidWorks | 97 – 2021 | SLDASM, SLDPRT | Supported |

(c) 2011-2021 ParallelGraphics Ltd. All rights reserved.

An Overview of 3D Data Content, File Formats and Viewers

Technical Report: isda08-002 Image Spatial Data Analysis Group National Center for Supercomputing Applications 1205 W Clark, Urbana, IL 61801 An Overview of 3D Data Content, File Formats and Viewers Kenton McHenry and Peter Bajcsy National Center for Supercomputing Applications University of Illinois at Urbana-Champaign, Urbana, IL {mchenry,pbajcsy}@ncsa.uiuc.edu October 31, 2008 Abstract This report presents an overview of 3D data content, 3D file formats and 3D viewers. It attempts to enumerate the past and current file formats used for storing 3D data and several software packages for viewing 3D data. The report also provides more specific details on a subset of file formats, as well as several pointers to existing 3D data sets. This overview serves as a foundation for understanding the information loss introduced by 3D file format conversions with many of the software packages designed for viewing and converting 3D data files. 1 Introduction 3D data represents information in many application areas, such as medicine, structural engineering, the automobile industry, architecture, the military, and cultural heritage [6]. There is a gamut of problems related to 3D data acquisition, representation, storage, retrieval, comparison and rendering due to the lack of standard definitions of 3D data content, data structures in memory and file formats on disk, as well as rendering implementations. We surveyed 3D data content, file formats and viewers in order to build a foundation for understanding the information loss introduced by 3D file format conversions with many of the software packages designed for viewing and converting 3D files.

XML-Based Interactive 3D Campus Map

XML-BASED INTERACTIVE 3D CAMPUS MAP JULIAN H. KANG, JIN G. PARK Texas A&M University, 3137 TAMU, College Station, TX 77843-3137 [email protected], [email protected] AND BYEONG-CHEOL LHO Sangji University, 660 Woosan-dong, Wonju, Kangwon-do, Korea [email protected] Abstract. This paper presents the development of a prototype XML-based 3D campus map using the 3D VML library. Many universities in the U.S. use two-dimensional (2D) raster images to provide the campus map, along with additional building information, on their Web sites. Research shows that three-dimensional (3D) representation of 3D objects helps people understand the spatial relationships between the objects. Some universities use 3D campus maps to help visitors access building information more intuitively. However, these 3D campus maps are usually created using raster images, so users cannot change the viewpoint in the 3D campus map to better understand the arrangement of the campus. If users could navigate around in the 3D campus map, they might be able to locate the building of interest more intuitively. This paper introduces emerging Web technologies that deliver 3D vector graphics to the Web browser over the Internet, and the algorithm of the prototype XML-based 3D campus map. Some advantages of using VML in delivering the interactive 3D campus map are also discussed. 1. Introduction Many universities in the U.S. use two-dimensional (2D) raster maps on their Web sites to help visitors locate the buildings of interest. Sometimes the image of a certain building in the map is linked to the Web page that provides the additional building information.

Full Form of VRML

Full Form Of VRML Epidural Johnathon sueding grimly. Cylindraceous and assertory Nikita always announcing thereat and discants his injunctions. Clip-on and voracious Broddie often snubs some brewages inshore or prolonges self-righteously. Streaming sound files may be supported by browsers; otherwise, lightweight implementations possible. Import Mesh and browse for your model. International graphics for full form. All these sense of the form of access information on bitmap images, full form of semantic requirements, being part of using a good results. If vrml full form in source and so, and blue color is full form of vrml representation of. This has some counterintuitive results. Global internet being available screen reading a system of vrml full form with existing solution. Implementations will serve your agreement that will eventually you change his timely contributions to form of realism you want everyone to form in. Strict file must then read this tool to form of vrml full vrml community used to allow browser to interactively debug a flavor of. The viewer must under some geometry to lie against objects in the scene. It contains two spaces will provide a converter from making these changes took a mesh of vrml. Lex takes care of the full form objects communicating on the process worked surprisingly well worthy of an imaginary or bottom of vrml full form. It which no proof so little remark was made of this, badge, you may remove that term. THE ENTIRE RISK AS TO THE QUALITY AND PERFORMANCE OF THE PROGRAM IS WITH YOU. If FALSE, there is no point in specifying ground and sky values.

Geometry Coding and VRML

Geometry Coding and VRML Gabriel Taubin, William Horn, Francis Lazarus, Jarek Rossignac IBM T. J. Watson Research Center ABSTRACT The Virtual Reality Modeling Language (VRML) is rapidly becoming the standard file format for transmitting 3D virtual worlds across the Internet. Static and dynamic descriptions of 3D objects, multimedia content, and a variety of hyperlinks can be represented in VRML files. Both VRML browsers and authoring tools for the creation of VRML files are widely available for several different platforms. In this paper we describe the topologically-assisted geometric compression technology included in our proposal for the VRML Compressed Binary Format. This technology produces significant reduction of file sizes and, subsequently, of the time required for transmission of such files across the Internet. Compression ratios of up to 50:1 or more are achieved for large models. The proposal also includes a binary encoding to create compact, rapidly-parsable binary VRML files. The proposal is currently being evaluated by the Compressed Binary Format Working Group Figure 1: This model contains 86,939 vertices, 173,578 triangles, 1 connected component, and no properties. In the standard ASCII VRML format it requires 8,946,259 bytes of storage. Using 11 bits per coordinate, the file in compressed binary format occupies 214,148 bytes for a compression ratio of 41.72. 65,571 bytes are used for connectivity (3.02 bits per triangle) and 148,590 bytes are used for coordinates (6.84 bits per triangle). The remaining bytes are used to represent the scene graph structure of the VRML file. The edges of the vertex spanning tree, composed of 10,499 runs, are shown as black lines.
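The per-triangle figures in the Figure 1 caption can be checked with a few lines of arithmetic. The sketch below assumes 8 bits per byte and uses only the byte and triangle counts quoted in the caption; the helper name `bits_per_triangle` is hypothetical.

```python
# Counts quoted in the Figure 1 caption.
TRIANGLES = 173_578
CONNECTIVITY_BYTES = 65_571
COORDINATE_BYTES = 148_590


def bits_per_triangle(n_bytes, n_triangles):
    """Average storage cost per triangle, in bits (assumes 8-bit bytes)."""
    return 8 * n_bytes / n_triangles


connectivity_bits = bits_per_triangle(CONNECTIVITY_BYTES, TRIANGLES)
coordinate_bits = bits_per_triangle(COORDINATE_BYTES, TRIANGLES)

print("connectivity: %.3f bits per triangle" % connectivity_bits)
print("coordinates:  %.3f bits per triangle" % coordinate_bits)
```

The results agree with the caption's 3.02 and 6.84 bits per triangle if the second value is truncated rather than rounded to two decimals.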

X3D Graphics and Distributed Interactive Simulation

Calhoun: The NPS Institutional Archive, Faculty and Researcher Publications, 2014-08
DIY X3D, Do It Yourself X3D Graphics! Brutzman, Don http://hdl.handle.net/10945/46002
Web3D 2014 Conference, Vancouver, Canada, 8-10 August 2014
Don Brutzman, Naval Postgraduate School [email protected]
What is Extensible 3D (X3D)? 3D publishing language for Web
X3D is a royalty-free open-standard file format
• Communicate animated 3D scenes using XML
• Run-time architecture for consistent user interaction
• ISO-ratified standard for storage, retrieval and playback of real-time graphics content
• Enables real-time communication of 3D data across applications: archival publishing format for Web
• Rich set of componentized features for engineering and scientific visualization, CAD and architecture, medical visualization, training and simulation, multimedia, entertainment, education, and more
Bottom Line Up Front (BLUF)
X3D-Edit is open-source tool we use and build to create, modify, test, publish, modify scenes
[Figure: X3D-Edit screenshot]
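As a rough illustration of what "3D scenes using XML" means, the following sketch builds a very small X3D scene with Python's standard `xml.etree.ElementTree` module. It is not taken from the slides: the profile, version, box size, and colour are assumptions chosen for the example, and a complete file would normally also carry an XML declaration and DOCTYPE.

```python
import xml.etree.ElementTree as ET

# Root element; profile and version values are assumptions for this sketch.
x3d = ET.Element("X3D", {"profile": "Immersive", "version": "3.3"})
scene = ET.SubElement(x3d, "Scene")

# One Shape holding a Box geometry and a coloured Material.
shape = ET.SubElement(scene, "Shape")
ET.SubElement(shape, "Box", {"size": "2 2 2"})
appearance = ET.SubElement(shape, "Appearance")
ET.SubElement(appearance, "Material", {"diffuseColor": "0.2 0.4 0.8"})

# Serialize to a text string that could be saved as a .x3d file.
x3d_text = ET.tostring(x3d, encoding="unicode")
print(x3d_text)
```

Because the scene is ordinary XML, any XML tool can read, validate, or transform it, which is part of the archival-format argument made in the bullets above.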