Journal Rankings in Sociology

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

ENERGY DARWINISM the Evolution of the Energy Industry

ENERGY DARWINISM: The Evolution of the Energy Industry. Citi GPS: Global Perspectives & Solutions, October 2013. Jason Channell, Heath R Jansen, Alastair R Syme, Sofia Savvantidou, Edward L Morse, Anthony Yuen. Citi is one of the world’s largest financial institutions, operating in all major established and emerging markets. Across these world markets, our employees conduct an ongoing multi-disciplinary global conversation – accessing information, analyzing data, developing insights, and formulating advice for our clients. As our premier thought-leadership product, Citi GPS is designed to help our clients navigate the global economy’s most demanding challenges, identify future themes and trends, and help our clients profit in a fast-changing and interconnected world. Citi GPS accesses the best elements of our global conversation and harvests the thought leadership of a wide range of senior professionals across our firm. This is not a research report and does not constitute advice on investments or a solicitation to buy or sell any financial instrument. For more information on Citi GPS, please visit www.citi.com/citigps. Jason Channell is a Director and Global Head of Citi's Alternative Energy and Cleantech equity research team. Throughout his career Jason's research has spanned the energy spectrum of utilities, oil & gas, and alternative energy. He has worked for both buy and sell-side firms, including Goldman Sachs and Fidelity Investments, and has been highly ranked in the Institutional Investor, Extel and Starmine external surveys. His knowledge has led to significant interaction with regulators and policymakers, most notably presenting to members of the US Senate Energy and Finance committees, and to United Nations think-tanks.

Data Policies of Highly-Ranked Social Science

Data policies of highly-ranked social science journals. Mercè Crosas, Julian Gautier, Sebastian Karcher, Dessi Kirilova, Gerard Otalora, Abigail Schwartz. Abstract: By encouraging and requiring that authors share their data in order to publish articles, scholarly journals have become an important actor in the movement to improve the openness of data and the reproducibility of research. But how many social science journals encourage or mandate that authors share the data supporting their research findings? How does the share of journal data policies vary by discipline? What influences these journals’ decisions to adopt such policies and instructions? And what do those policies and instructions look like? We discuss the results of our analysis of the instructions and policies of 291 highly-ranked journals publishing social science research, where we studied the contents of journal data policies and instructions across 14 variables, such as when and how authors are asked to share their data, and what role journal ranking and age play in the existence and quality of data policies and instructions. We also compare our results to the results of other studies that have analyzed the policies of social science journals, although differences in the journals chosen and how each study defines what constitutes a data policy limit this comparison. We conclude that a little more than half of the journals in our study have data policies. A greater share of the economics journals have data policies and mandate sharing, followed by political science/international relations and psychology journals. Finally, we use our findings to make several recommendations: Policies should include the terms “data,” “dataset” or more specific terms that make it clear what to make available; policies should include the benefits of data sharing; journals, publishers, and associations need to collaborate more to clarify data policies; and policies should explicitly ask for qualitative data.

Constructing an Emerging Field of Sociology Eddie Hartmann, Potsdam University

DOI: 10.4119/UNIBI/ijcv.623. IJCV: Vol. 11/2017. Violence: Constructing an Emerging Field of Sociology. Eddie Hartmann, Potsdam University. The IJCV provides a forum for scientific exchange and public dissemination of up-to-date scientific knowledge on conflict and violence. The IJCV is independent, peer reviewed, open access, and included in the Social Sciences Citation Index (SSCI) as well as other relevant databases (e.g., SCOPUS, EBSCO, ProQuest, DNB). The topics on which we concentrate, conflict and violence, have always been central to various disciplines. Consequently, the journal encompasses contributions from a wide range of disciplines, including criminology, economics, education, ethnology, history, political science, psychology, social anthropology, sociology, the study of religions, and urban studies. All articles are gathered in yearly volumes, identified by a DOI with article-wise pagination. For more information please visit www.ijcv.org. Author Information: Eddie Hartmann, Potsdam University, [email protected]. Suggested Citation: APA: Hartmann, E. (2017). Violence: Constructing an Emerging Field of Sociology. International Journal of Conflict and Violence, 11, 1-9. doi: 10.4119/UNIBI/ijcv.623. Harvard: Hartmann, Eddie. 2017. Violence: Constructing an Emerging Field of Sociology. International Journal of Conflict and Violence 11:1-9. doi: 10.4119/UNIBI/ijcv.623. This work is licensed under the Creative Commons Attribution-NoDerivatives License. ISSN: 1864–1385. Recent research in the social sciences has explicitly addressed the challenge of bringing violence back into the center of attention.

Justice in the Global Work Life: the Right to Know, To

Pathways to Sustainability: Evolution or Revolution?* Nicholas A. Ashford, Massachusetts Institute of Technology, Cambridge, MA 02139, USA. Introduction: The purpose of this chapter is to delve more deeply into the processes and determinants of technological, organisational, and social innovation and to discuss their implications for selecting instruments and policies to stimulate the kinds of innovation necessary for the transformation of industrial societies into sustainable ones. Sustainable development must be seen as a broad concept, incorporating concerns for the economy, the environment, and employment. All three are driven or affected by both technological innovation [Schumpeter, 1939] and globalised trade [Ekins et al., 1994; Diwan et al., 1997]. They are also in a fragile balance, are inter-related, and need to be addressed together in a coherent and mutually reinforcing way [Ashford, 2001]. Here we will argue for the attainment of ‘triple sustainability’ – improvements in competitiveness (or productiveness) and long-term dynamic efficiency, social cohesion (work/employment), and environment (including resource productivity, environmental pollution, and climate disruption). The figure below depicts the salient features and determinants of sustainability. They are, in turn, influenced by both public and private-sector initiatives and policies. [Figure: salient features and determinants of sustainability. Recoverable labels: Toxic Pollution, Resource Depletion & Climate Change; Environment; Effects of environmental policies on employment, and health & safety; Trade and environment; Investment and environment.]

Journal Rankings Paper

NBER WORKING PAPER SERIES. NEOPHILIA RANKING OF SCIENTIFIC JOURNALS. Mikko Packalen and Jay Bhattacharya. Working Paper 21579, http://www.nber.org/papers/w21579. NATIONAL BUREAU OF ECONOMIC RESEARCH, 1050 Massachusetts Avenue, Cambridge, MA 02138. September 2015. We thank Bruce Weinberg, Vetla Torvik, Neil Smalheiser, Partha Bhattacharyya, Walter Schaeffer, Katy Borner, Robert Kaestner, Donna Ginther, Joel Blit and Joseph De Juan for comments. We also thank seminar participants at the University of Illinois at Chicago Institute of Government and Public Affairs, at the Research in Progress Seminar at Stanford Medical School, and at the National Bureau of Economic Research working group on Invention in an Aging Society for helpful feedback. Finally, we thank the National Institute of Aging for funding for this research through grant P01-AG039347. We are solely responsible for the content and errors in the paper. The views expressed herein are those of the authors and do not necessarily reflect the views of the National Bureau of Economic Research. NBER working papers are circulated for discussion and comment purposes. They have not been peer-reviewed or been subject to the review by the NBER Board of Directors that accompanies official NBER publications. © 2015 by Mikko Packalen and Jay Bhattacharya. All rights reserved. Short sections of text, not to exceed two paragraphs, may be quoted without explicit permission provided that full credit, including © notice, is given to the source. JEL No. I1, O3, O31. ABSTRACT: The ranking of scientific journals is important because of the signal it sends to scientists about what is considered most vital for scientific progress.

A Bibliometric and Visual Analysis of Rural Development Research

sustainability. Review. A Bibliometric and Visual Analysis of Rural Development Research. Ying Lu * and Walter Timo de Vries. Chair of Land Management, Department of Aerospace and Geodesy, Technical University of Munich (TUM), 80333 Munich, Germany; [email protected] * Correspondence: [email protected] Abstract: Rural development research integrates numerous theoretical and empirical studies and has evolved over the past few decades. However, few systematic literature reviews have explored the changing landscape. This study aims to obtain an overview of rural development research by applying a bibliometric and visual analysis. In this paper, we introduce four computer-based software tools, including HistCite™, CiteSpace, VOSviewer, and Map and Alluvial Generator, to help with data collection, data analysis, and visualization. The dataset consists of 6968 articles of rural development research, which were downloaded from the database Web of Science. The period covers 1957 to 2020 and the analysis units include journals, categories, authors, references, and keywords. Co-occurrence and co-citation analysis are conducted, and the results are exported in the format of networks. We analyze the trends of publications and explore the discipline distribution and identify the most influential authors and articles at different times. The results show that this field of study has attracted progressively more scholars from a variety of research fields and has become multidisciplinary and interdisciplinary. The changing knowledge domains of rural development research also reflect the dynamics and complexity of rural contexts. Keywords: rural development research; bibliometric analysis; visualization. Citation: Lu, Y.; de Vries, W.T. A Bibliometric and Visual Analysis of Rural Development Research.

Medicare Claims Processing Manual, Chapter 3, Inpatient Hospital Billing

Medicare Claims Processing Manual, Chapter 3 - Inpatient Hospital Billing. Table of Contents (Rev. 10952, Issued: 09-20-21). Transmittals for Chapter 3
10 - General Inpatient Requirements
10.1 - Claim Formats
10.2 - Focused Medical Review (FMR)
10.3 - Spell of Illness
10.4 - Payment of Nonphysician Services for Inpatients
10.5 - Hospital Inpatient Bundling
20 - Payment Under Prospective Payment System (PPS) Diagnosis Related Groups (DRGs)
20.1 - Hospital Operating Payments Under PPS
20.1.1 - Hospital Wage Index
20.1.2 - Outliers
20.1.2.1 - Cost to Charge Ratios
20.1.2.2 - Statewide Average Cost to Charge Ratios
20.1.2.3 - Threshold and Marginal Cost
20.1.2.4 - Transfers
20.1.2.5 - Reconciliation
20.1.2.6 - Time Value of Money
20.1.2.7 - Procedure for Medicare contractors to Perform and Record Outlier Reconciliation Adjustments
20.1.2.8 - Specific Outlier Payments for Burn Cases
20.1.2.9 - Medical Review and Adjustments
20.1.2.10 - Return Codes for Pricer
20.2 - Computer Programs Used to Support Prospective Payment System
20.2.1 - Medicare Code Editor (MCE)
20.2.1.1 - Paying Claims Outside of the MCE
20.2.1.1.1 - Requesting to Pay Claims Without MCE Approval
20.2.1.1.2 - Procedures for Paying Claims Without Passing through the MCE
20.2.2 - DRG GROUPER Program
20.2.3 - PPS Pricer Program
20.2.3.1 - Provider-Specific File
20.3 - Additional Payment Amounts for Hospitals with Disproportionate Share of Low-Income Patients
20.3.1 - Clarification of Allowable Medicaid Days in the Medicare Disproportionate Share Hospital (DSH) Adjustment Calculation

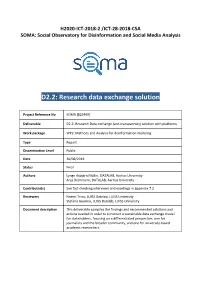

D2.2: Research Data Exchange Solution

H2020-ICT-2018-2 / ICT-28-2018-CSA. SOMA: Social Observatory for Disinformation and Social Media Analysis. D2.2: Research data exchange solution
Project Reference No: SOMA [825469]
Deliverable: D2.2: Research Data exchange (and transparency) solution with platforms
Work package: WP2: Methods and Analysis for disinformation modeling
Type: Report
Dissemination Level: Public
Date: 30/08/2019
Status: Final
Authors: Lynge Asbjørn Møller, DATALAB, Aarhus University; Anja Bechmann, DATALAB, Aarhus University
Contributor(s): See fact-checking interviews and meetings in appendix 7.2
Reviewers: Noemi Trino, LUISS Datalab, LUISS University; Stefano Guarino, LUISS Datalab, LUISS University
Document description: This deliverable compiles the findings and recommended solutions and actions needed in order to construct a sustainable data exchange model for stakeholders, focusing on a differentiated perspective, one for journalists and the broader community, and one for university-based academic researchers.
Document Revision History (version, date: modification reason, modified by)
v0.1, 28/08/2019: Consolidation of first draft, DATALAB, Aarhus University
v0.2, 29/08/2019: Review, LUISS Datalab, LUISS University
v0.3, 30/08/2019: Proofread, DATALAB, Aarhus University
v1.0, 30/08/2019: Final version, DATALAB, Aarhus University
Executive Summary: This report provides an evaluation of current solutions for data transparency and exchange with social media platforms, an account of the historic obstacles and developments within the subject and a prioritized list of future scenarios and solutions for data access with social media platforms. The evaluation of current solutions and the historic accounts are based primarily on a systematic review of academic literature on the subject, expanded by an account on the most recent developments and solutions.

A Comprehensive Analysis of the Journal Evaluation System in China

A comprehensive analysis of the journal evaluation system in China. Ying HUANG (1, 2), Ruinan LI (1), Lin ZHANG (1, 2, *), Gunnar SIVERTSEN (3). (1) School of Information Management, Wuhan University, China; (2) Centre for R&D Monitoring (ECOOM) and Department of MSI, KU Leuven, Belgium; (3) Nordic Institute for Studies in Innovation, Research and Education, Tøyen, Oslo, Norway. Abstract: Journal evaluation systems are important in science because they focus on the quality of how new results are critically reviewed and published. They are also important because the prestige and scientific impact of journals is given attention in research assessment and in career and funding systems. Journal evaluation has become increasingly important in China with the expansion of the country’s research and innovation system and its rise as a major contributor to global science. In this paper, we first describe the history and background for journal evaluation in China. Then, we systematically introduce and compare the most influential journal lists and indexing services in China. These are: the Chinese Science Citation Database (CSCD); the journal partition table (JPT); the AMI Comprehensive Evaluation Report (AMI); the Chinese STM Citation Report (CJCR); “A Guide to the Core Journals of China” (GCJC); the Chinese Social Sciences Citation Index (CSSCI); and the World Academic Journal Clout Index (WAJCI). Some other lists published by government agencies, professional associations, and universities are briefly introduced as well. Thereby, the tradition and landscape of the journal evaluation system in China is comprehensively covered. Methods and practices are closely described and sometimes compared to how other countries assess and rank journals. Keywords: scientific journal; journal evaluation; peer review; bibliometrics

Analyzing Social Disadvantage in Rural Peripheries in Czechia and Eastern Germany: Conceptual Model and Study Design

econstor: Make Your Publications Visible. A Service of ZBW, Leibniz-Informationszentrum Wirtschaft (Leibniz Information Centre for Economics). Keim-Klärner, Sylvia et al. Working Paper. Analyzing social disadvantage in rural peripheries in Czechia and Eastern Germany: Conceptual model and study design. Thünen Working Paper, No. 170. Provided in Cooperation with: Johann Heinrich von Thünen Institute, Federal Research Institute for Rural Areas, Forestry and Fisheries. Suggested Citation: Keim-Klärner, Sylvia et al. (2021): Analyzing social disadvantage in rural peripheries in Czechia and Eastern Germany: Conceptual model and study design, Thünen Working Paper, No. 170, ISBN 978-3-86576-218-4, Johann Heinrich von Thünen-Institut, Braunschweig, http://dx.doi.org/10.3220/WP1614067689000. This Version is available at: http://hdl.handle.net/10419/232538. Terms of use: Documents in EconStor may be saved and copied for your personal and scholarly purposes. You are not to copy documents for public or commercial purposes, to exhibit the documents publicly, to make them publicly available on the internet, or to distribute or otherwise use the documents in public. If the documents have been made available under an Open Content Licence (especially Creative Commons Licences), you may exercise further usage rights as specified in the indicated licence.

Black Lives Matter: (Re)Framing the Next Wave of Black Liberation Amanda D

RESEARCH IN SOCIAL MOVEMENTS, CONFLICTS AND CHANGE. Series Editor: Patrick G. Coy. Recent Volumes:
Volume 27: Research in Social Movements, Conflicts and Change – Edited by Patrick G. Coy
Volume 28: Research in Social Movements, Conflicts and Change – Edited by Patrick G. Coy
Volume 29: Pushing the Boundaries: New Frontiers in Conflict Resolution and Collaboration – Edited by Rachel Fleishman, Catherine Gerard and Rosemary O’Leary
Volume 30: Research in Social Movements, Conflicts and Change – Edited by Patrick G. Coy
Volume 31: Research in Social Movements, Conflicts and Change – Edited by Patrick G. Coy
Volume 32: Critical Aspects of Gender in Conflict Resolution, Peacebuilding, and Social Movements – Edited by Anna Christine Snyder and Stephanie Phetsamay Stobbe
Volume 33: Media, Movements, and Political Change – Edited by Jennifer Earl and Deana A. Rohlinger
Volume 34: Nonviolent Conflict and Civil Resistance – Edited by Sharon Erickson Nepstad and Lester R. Kurtz
Volume 35: Advances in the Visual Analysis of Social Movements – Edited by Nicole Doerr, Alice Mattoni and Simon Teune
Volume 36: Research in Social Movements, Conflicts and Change – Edited by Patrick G. Coy
Volume 37: Intersectionality and Social Change – Edited by Lynne M. Woehrle
Volume 38: Research in Social Movements, Conflicts and Change – Edited by Patrick G. Coy
Volume 39: Protest, Social Movements, and Global Democracy since 2011: New Perspectives – Edited by Thomas Davies, Holly Eva Ryan and Alejandro Peña
Volume 40: Narratives of Identity in Social Movements, Conflicts and Change – Edited by Landon E.

Sociology and Social Anthropology Fall Term 2020 Social Movements

Sociology and Social Anthropology, Fall Term 2020. Social Movements, SOCL 5373 (2 credits). Instructor: Jean-Louis Fabiani, [email protected]. Social movements have been a central object of the social sciences from the start: how do people behave collectively? How do they coordinate? What are the costs of a mobilization? What tools are used (gatherings, voice, violence and so on)? Who gets involved, and who does not? What triggers a movement, and what makes it fail? How are movements remembered and replicated in other settings? The first attempts to understand collective behavior developed in the area of crowd psychology at the beginning of the 20th century: explaining the protesting behavior of the dominated classes was the goal of a rather conservative discourse that aimed to avoid disruptive attitudes. Gustave Lebon's rather pseudo-scientific attempts were a case in point: collective action was identified as a form of panic or of madness. Later on, more sympathetic approaches appeared, since social movements were considered an excellent illustration of a democratic state. Recently, globalization has brought about new objects of contention but also the internationalization of protests. Mobilizations in the post-socialist countries and the "Arab Springs" are characterized by new forms of claims and new forms of action. For many social scientists, their study demands new theoretical and methodological equipment. The field of social movements has been an innovative place for new concepts and new methods: "resource mobilization theory", "repertoire of action" and "eventful sociology" are good examples. Right now, this area of study hosts the most intense, and perhaps the most exciting, debates in the social sciences.