On Imbalanced Classification of Benthic Macroinvertebrates: Metrics and Loss-Functions

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

ARTHROPOD COMMUNITIES and PASSERINE DIET: EFFECTS of SHRUB EXPANSION in WESTERN ALASKA by Molly Tankersley Mcdermott, B.A./B.S

Arthropod communities and passerine diet: effects of shrub expansion in Western Alaska Item Type Thesis Authors McDermott, Molly Tankersley Download date 26/09/2021 06:13:39 Link to Item http://hdl.handle.net/11122/7893 ARTHROPOD COMMUNITIES AND PASSERINE DIET: EFFECTS OF SHRUB EXPANSION IN WESTERN ALASKA By Molly Tankersley McDermott, B.A./B.S. A Thesis Submitted in Partial Fulfillment of the Requirements for the Degree of Master of Science in Biological Sciences University of Alaska Fairbanks August 2017 APPROVED: Pat Doak, Committee Chair Greg Breed, Committee Member Colleen Handel, Committee Member Christa Mulder, Committee Member Kris Hundertmark, Chair Department o f Biology and Wildlife Paul Layer, Dean College o f Natural Science and Mathematics Michael Castellini, Dean of the Graduate School ABSTRACT Across the Arctic, taller woody shrubs, particularly willow (Salix spp.), birch (Betula spp.), and alder (Alnus spp.), have been expanding rapidly onto tundra. Changes in vegetation structure can alter the physical habitat structure, thermal environment, and food available to arthropods, which play an important role in the structure and functioning of Arctic ecosystems. Not only do they provide key ecosystem services such as pollination and nutrient cycling, they are an essential food source for migratory birds. In this study I examined the relationships between the abundance, diversity, and community composition of arthropods and the height and cover of several shrub species across a tundra-shrub gradient in northwestern Alaska. To characterize nestling diet of common passerines that occupy this gradient, I used next-generation sequencing of fecal matter. Willow cover was strongly and consistently associated with abundance and biomass of arthropods and significant shifts in arthropod community composition and diversity. -

Trichoptera) from Finnmark, Northern Norway

© Norwegian Journal of Entomology. 5 December 2012 Caddisflies (Trichoptera) from Finnmark, northern Norway TROND ANDERSEN & LINN KATRINE HAGENLUND Andersen, T. & Hagenlund, L.K. 2012. Caddisflies (Trichoptera) from Finnmark, northern Norway. Norwegian Journal of Entomology 59, 133–154. Records of 108 species of Trichoptera from Finnmark, northern Norway, are presented based partly on material collected in 2010 and partly on older material housed in the entomological collection at the University Museum of Bergen. Rhyacophila obliterata McLachlan, 1863, must be regarded as new to Norway and Rhyacophila fasciata Hagen, 1859; Glossosoma nylanderi McLachlan, 1879; Agapetus ochripes Curtis, 1834; Agraylea cognatella McLachlan, 1880; Ithytrichia lamellaris Eaton, 1873; Oxyethira falcata Morton, 1893; O. sagittifera Ris, 1897; Wormaldia subnigra McLachlan, 1865; Hydropsyche newae Kolenati, 1858; H. saxonica McLachlan, 1884; Brachycentrus subnubilis Curtis, 1834; Apatania auricula (Forsslund, 1930); A. dalecarlica Forsslund, 1934; Annitella obscurata (McLachlan, 1876); Limnephilus decipiens (Kolenati, 1848); L. externus Hagen, 1865; L. femoratus (Zetterstedt, 1840); L. politus McLachlan, 1865; L. sparsus Curtis, 1834; L. stigma Curtis, 1834; L. subnitidus McLachlan, 1875; L. vittatus (Fabricius, 1798); Phacopteryx brevipennis (Curtis, 1834); Halesus tesselatus (Rambur, 1842); Stenophylax sequax (McLachlan, 1875); Beraea pullata (Curtis, 1834); Beraeodes minutus (Linnaeus, 1761); Athripsodes commutatus (Rostock, 1874); Ceraclea fulva (Rambur, -

TB142: Mayflies of Maine: an Annotated Faunal List

The University of Maine DigitalCommons@UMaine Technical Bulletins Maine Agricultural and Forest Experiment Station 4-1-1991 TB142: Mayflies of aine:M An Annotated Faunal List Steven K. Burian K. Elizabeth Gibbs Follow this and additional works at: https://digitalcommons.library.umaine.edu/aes_techbulletin Part of the Entomology Commons Recommended Citation Burian, S.K., and K.E. Gibbs. 1991. Mayflies of Maine: An annotated faunal list. Maine Agricultural Experiment Station Technical Bulletin 142. This Article is brought to you for free and open access by DigitalCommons@UMaine. It has been accepted for inclusion in Technical Bulletins by an authorized administrator of DigitalCommons@UMaine. For more information, please contact [email protected]. ISSN 0734-9556 Mayflies of Maine: An Annotated Faunal List Steven K. Burian and K. Elizabeth Gibbs Technical Bulletin 142 April 1991 MAINE AGRICULTURAL EXPERIMENT STATION Mayflies of Maine: An Annotated Faunal List Steven K. Burian Assistant Professor Department of Biology, Southern Connecticut State University New Haven, CT 06515 and K. Elizabeth Gibbs Associate Professor Department of Entomology University of Maine Orono, Maine 04469 ACKNOWLEDGEMENTS Financial support for this project was provided by the State of Maine Departments of Environmental Protection, and Inland Fisheries and Wildlife; a University of Maine New England, Atlantic Provinces, and Quebec Fellow ship to S. K. Burian; and the Maine Agricultural Experiment Station. Dr. William L. Peters and Jan Peters, Florida A & M University, pro vided support and advice throughout the project and we especially appreci ated the opportunity for S.K. Burian to work in their laboratory and stay in their home in Tallahassee, Florida. -

Diversity and Ecosystem Services of Trichoptera

Review Diversity and Ecosystem Services of Trichoptera John C. Morse 1,*, Paul B. Frandsen 2,3, Wolfram Graf 4 and Jessica A. Thomas 5 1 Department of Plant & Environmental Sciences, Clemson University, E-143 Poole Agricultural Center, Clemson, SC 29634-0310, USA; [email protected] 2 Department of Plant & Wildlife Sciences, Brigham Young University, 701 E University Parkway Drive, Provo, UT 84602, USA; [email protected] 3 Data Science Lab, Smithsonian Institution, 600 Maryland Ave SW, Washington, D.C. 20024, USA 4 BOKU, Institute of Hydrobiology and Aquatic Ecology Management, University of Natural Resources and Life Sciences, Gregor Mendelstr. 33, A-1180 Vienna, Austria; [email protected] 5 Department of Biology, University of York, Wentworth Way, York Y010 5DD, UK; [email protected] * Correspondence: [email protected]; Tel.: +1-864-656-5049 Received: 2 February 2019; Accepted: 12 April 2019; Published: 1 May 2019 Abstract: The holometabolous insect order Trichoptera (caddisflies) includes more known species than all of the other primarily aquatic orders of insects combined. They are distributed unevenly; with the greatest number and density occurring in the Oriental Biogeographic Region and the smallest in the East Palearctic. Ecosystem services provided by Trichoptera are also very diverse and include their essential roles in food webs, in biological monitoring of water quality, as food for fish and other predators (many of which are of human concern), and as engineers that stabilize gravel bed sediment. They are especially important in capturing and using a wide variety of nutrients in many forms, transforming them for use by other organisms in freshwaters and surrounding riparian areas. -

Microsoft Outlook

Joey Steil From: Leslie Jordan <[email protected]> Sent: Tuesday, September 25, 2018 1:13 PM To: Angela Ruberto Subject: Potential Environmental Beneficial Users of Surface Water in Your GSA Attachments: Paso Basin - County of San Luis Obispo Groundwater Sustainabilit_detail.xls; Field_Descriptions.xlsx; Freshwater_Species_Data_Sources.xls; FW_Paper_PLOSONE.pdf; FW_Paper_PLOSONE_S1.pdf; FW_Paper_PLOSONE_S2.pdf; FW_Paper_PLOSONE_S3.pdf; FW_Paper_PLOSONE_S4.pdf CALIFORNIA WATER | GROUNDWATER To: GSAs We write to provide a starting point for addressing environmental beneficial users of surface water, as required under the Sustainable Groundwater Management Act (SGMA). SGMA seeks to achieve sustainability, which is defined as the absence of several undesirable results, including “depletions of interconnected surface water that have significant and unreasonable adverse impacts on beneficial users of surface water” (Water Code §10721). The Nature Conservancy (TNC) is a science-based, nonprofit organization with a mission to conserve the lands and waters on which all life depends. Like humans, plants and animals often rely on groundwater for survival, which is why TNC helped develop, and is now helping to implement, SGMA. Earlier this year, we launched the Groundwater Resource Hub, which is an online resource intended to help make it easier and cheaper to address environmental requirements under SGMA. As a first step in addressing when depletions might have an adverse impact, The Nature Conservancy recommends identifying the beneficial users of surface water, which include environmental users. This is a critical step, as it is impossible to define “significant and unreasonable adverse impacts” without knowing what is being impacted. To make this easy, we are providing this letter and the accompanying documents as the best available science on the freshwater species within the boundary of your groundwater sustainability agency (GSA). -

This Work Is Licensed Under the Creative Commons Attribution-Noncommercial-Share Alike 3.0 United States License

This work is licensed under the Creative Commons Attribution-Noncommercial-Share Alike 3.0 United States License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-sa/3.0/us/ or send a letter to Creative Commons, 171 Second Street, Suite 300, San Francisco, California, 94105, USA. THE ADULT POLYCENTROPODIDAE OF CANADA AND ADJACENT UNITED STATES Andrew P. Nimmo Department of Entomology University of Alberta Edmonton, Alberta, T6G 2E3 Quaestiones Entomologicae CANADA 22:143-252 1986 ABSTRACT Of the 46 species reported herefrom Canada and adjacent States of the United States, four belong to the genus Cernotina Ross, one to Cyrnellus (Banks), three to Neureclipsis McLachlan, five to Nyctiophylax Brauer, and 33 to Polycentropus Curtis. Keys are provided (for males and females, both, where possible) to genera and species. For each species the habitus is given in some detail, with diagnostic statements for the genitalia. Also included are brief statements on biology (if known), and distribution. Distributions are mapped, and genitalia are fully illustrated. RESUME Quarante six especes de Polycentropodidae sont mentionneespour le Canada et les Hats frontaliers des Etats-Vnis, representant les genres suivants: Cernotina Ross (4), Cyrnellus (Banks) (I), Neureclipsis McLachlan (3), Nyctiophylax Brauer (5) et Polycentropus Curtis (33). Des clefs d'identification au genre et a I'espece (pour les males et les feme I les) sont presentees par I'auteur. Un habitus ainsi qui une description diagnostique des pieces genitals sont donnes pour chacune des especes. Une description resume ce qui il y a de connir sur I'histoire naturelle et la repartition geographique de ces especes. -

Trichopterological Literature This List Is Informative Which Means

49 Trichopterological literature Springer, Monika 2006 Clave taxonomica para larvas de las familias del orden Trichoptera This list is informative which means- that it will include any papers (Insecta) de Costa Rica. - Revista de Biologia Tropical 54 from which fellow workers can get information on caddisflies, (Suppl.1):273-286. including dissertations, short notes, newspaper articles ect. It is not limited to formal publications, peer-reviewed papers or publications Szcz§sny, B. 2006 with high impact factor etc. However, a condition is that a minimum The types of caddis fly (Insecta: Trichoptera). - Scientific collections of one specific name of a caddisfly must be given (with the of the State Natural History Museum, Issue 2: R.J.Godunko, exception of fundamental papers e.g. on fossils). The list does not V.K.Voichyshyn, O.S.KIymyshyn (eds.): Name-bearing types and include publications from the internet. - To make the list as complete type series (1). - HaqioanbHa axafleMia Hay« YKpamM. as possible, it is essential that authors send me reprints or ), pp.98-104. xerocopies of their papers, and, if possible, also papers by other authors which they learn of and when I do not know of them. If only Torralba Burrial, Antonio 2006 references of such publications are available, please send these to Contenido estomacal de Lepomis gibbosus (L.1758) (Perciformes: me with the complete citation. - The list is in the interest of the Centrarchidae), incluyendo la primera cita de Ecnomus tenellus caddis workers' community. (Rambur, 1842) (Trichoptera: Ecnomidae) para Aragon (NE Espana). - Boletin de la SEA 39:411-412. 1999 Tsuruishi.Tatsu; Ketavan, Chitapa; Suwan, Kayan; Sirikajornjaru, rionoB.AneKCM 1999 Warunee 2006 Kpacwviup KyiwaHCKM Ha 60 TOAMHU. -

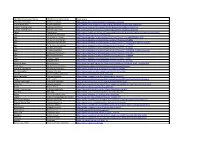

FAUNA Vernacular Name FAUNA Scientific Name Read More

FAUNA Vernacular Name FAUNA Scientific Name Read more a European Hoverfly Pocota personata https://www.naturespot.org.uk/species/pocota-personata a small black wasp Stigmus pendulus https://www.bwars.com/wasp/crabronidae/pemphredoninae/stigmus-pendulus a spider-hunting wasp Anoplius concinnus https://www.bwars.com/wasp/pompilidae/pompilinae/anoplius-concinnus a spider-hunting wasp Anoplius nigerrimus https://www.bwars.com/wasp/pompilidae/pompilinae/anoplius-nigerrimus Adder Vipera berus https://www.woodlandtrust.org.uk/trees-woods-and-wildlife/animals/reptiles-and-amphibians/adder/ Alga Cladophora glomerata https://en.wikipedia.org/wiki/Cladophora Alga Closterium acerosum https://www.algaebase.org/search/species/detail/?species_id=x44d373af81fe4f72 Alga Closterium ehrenbergii https://www.algaebase.org/search/species/detail/?species_id=28183 Alga Closterium moniliferum https://www.algaebase.org/search/species/detail/?species_id=28227&sk=0&from=results Alga Coelastrum microporum https://www.algaebase.org/search/species/detail/?species_id=27402 Alga Cosmarium botrytis https://www.algaebase.org/search/species/detail/?species_id=28326 Alga Lemanea fluviatilis https://www.algaebase.org/search/species/detail/?species_id=32651&sk=0&from=results Alga Pediastrum boryanum https://www.algaebase.org/search/species/detail/?species_id=27507 Alga Stigeoclonium tenue https://www.algaebase.org/search/species/detail/?species_id=60904 Alga Ulothrix zonata https://www.algaebase.org/search/species/detail/?species_id=562 Algae Synedra tenera https://www.algaebase.org/search/species/detail/?species_id=34482 -

Ephemeroptera, Plecoptera, Megaloptera, and Trichoptera of Great Smoky Mountains National Park

The Great Smoky Mountains National Park All Taxa Biodiversity Inventory: A Search for Species in Our Own Backyard 2007 Southeastern Naturalist Special Issue 1:159–174 Ephemeroptera, Plecoptera, Megaloptera, and Trichoptera of Great Smoky Mountains National Park Charles R. Parker1,*, Oliver S. Flint, Jr.2, Luke M. Jacobus3, Boris C. Kondratieff 4, W. Patrick McCafferty3, and John C. Morse5 Abstract - Great Smoky Mountains National Park (GSMNP), situated on the moun- tainous border of North Carolina and Tennessee, is recognized as one of the most highly diverse protected areas in the temperate region. In order to provide baseline data for the scientifi c management of GSMNP, an All Taxa Biodiversity Inventory (ATBI) was initiated in 1998. Among the goals of the ATBI are to discover the identity and distribution of as many as possible of the species of life that occur in GSMNP. The authors have concentrated on the orders of completely aquatic insects other than odonates. We examined or utilized others’ records of more than 53,600 adult and 78,000 immature insects from 545 locations. At present, 469 species are known from GSMNP, including 120 species of Ephemeroptera (mayfl ies), 111 spe- cies of Plecoptera (stonefl ies), 7 species of Megaloptera (dobsonfl ies, fi shfl ies, and alderfl ies), and 231 species of Trichoptera (caddisfl ies). Included in this total are 10 species new to science discovered since the ATBI began. Introduction Great Smoky Mountains National Park (GSMNP) is situated on the border of North Carolina and Tennessee and is comprised of 221,000 ha. GSMNP is recognized as one of the most diverse protected areas in the temperate region (Nichols and Langdon 2007). -

Rachel Voyt 8.15.2013 How Does Algal Biomass and Species

Rachel Voyt 8.15.2013 How does algal biomass and species composition in caddisfly nets differ from adjacent rocky substrate? Abstract This study attempts to characterize differences in algal biomass and species composition in caddisfly nets relative to their adjacent rocky substrate. To test this, we compared algae species present in caddisfly nets versus substrates and characterized differences in biomass by comparing chlorophyll a content in each sample. Results showed a significantly higher biomass in net relative to rock samples and a high degree of overlap between algae species in net and rock samples. This suggests that net-spinning caddisflies act as a non-selective trap for particulate matter in the drift and have little effect on the abiotic variables directly affecting algae composition. Introduction Algal communities are highly variable due to influences by both biotic and abiotic factors. In river systems, substrate type, current velocity, and macroinvertebrates are all key to this variability (Finlay et al. 1999, Stief and Becker 2005). In general, lower velocity currents allow for higher levels of algal biomass to accumulate (Poff et al. 1990), although some studies have shown that biomass accumulation increases with higher currents after initial establishment (DeNicola and McIntire 1990). The effects of current velocities can also change with substrate composition, which influence algal species establishment and thus, species composition (DeNicola and McIntire 1990). These factors also play a role in determining macroinvertebrate establishment, which have their own distinct effects on algal communities (Wallace 1996). Much of the studies on algal composition in river systems have been based on the influence of abiotic factors. -

Departamento De Biodiversidad Y Gestión Ambiental (Zoología) Facultad De Ciencias Biológicas Y Ambientales, Universidad De León 24071 León, SPAIN [email protected]

The Coleopterists Bulletin, 64(3): 201–219. 2010. DIVERSITY OF WATER BEETLES IN PICOS DE EUROPA NATIONAL PARK,SPAIN: INVENTORY COMPLETENESS AND CONSERVATION ASSESSMENT LUIS F. VALLADARES Departamento de Biodiversidad y Gestión Ambiental (Zoología) Facultad de Ciencias Biológicas y Ambientales, Universidad de León 24071 León, SPAIN [email protected] ANDRÉS BASELGA Departamento de Zoología Facultad de Biología, Universidad de Santiago de Compostela 15782 Santiago de Compostela, SPAIN [email protected] AND JOSEFINA GARRIDO Departamento de Ecología y Biología Animal Facultad de Biología, Universidad de Vigo 36310 Vigo, SPAIN [email protected] ABSTRACT The diversity of true water beetles (Coleoptera: Gyrinidae, Haliplidae, Dytiscidae, Helophoridae, Hydrochidae, Hydrophilidae, Hydraenidae, Elmidae, and Dryopidae) in Picos de Europa National Park (Cantabrian Mountains, Spain) was examined. Taking into account historic long-term sampling (all collections from 1882 to the present), a total of 117 species are recorded. Species accumulation models and non-parametric estimators were used to estimate the actual species richness of aquatic Coleoptera occurring in Picos de Europa National Park. Estimates were generated by ana- lyzing both the collector’s curve from the long-term sampling and the historic cumulative curve of species recorded from the park since 1882. Values of species richness estimated by different methods range from 127 to 170 species (mean = 148 ± 15 SD). Therefore, it seems that the current inventory has reached a reasonably good level of completeness as estimates indicate that about 80% of the water beetle fauna has already been recorded. The inventory is used to analyze the biological uniqueness of the park and its outstanding level of species richness and endemism (33 Iberian endemic species). -

Groundwater Ecology: Invertebrate Community Distribution Across the Benthic, Hyporheic and Phreatic Habitats of a Chalk Aquifer in Southeast England

Groundwater Ecology: Invertebrate Community Distribution across the Benthic, Hyporheic and Phreatic Habitats of a Chalk Aquifer in Southeast England Jessica M. Durkota Thesis submitted in partial fulfilment of the requirements for the degree of Doctor of Philosophy University College London Declaration of the Author I, Jessica M. Durkota, confirm that the work presented in this thesis is my own. Where information has been derived from other sources, I confirm that this has been indicated in the thesis. This work was undertaken with the partial support of the Environment Agency. The views expressed in this publication are mine and mine alone and not necessarily those of the Environment Agency or University College London (UCL). 2 Abstract Groundwater is an important resource for drinking water, agriculture, and industry, but it also plays an essential role in supporting the functioning of freshwater ecosystems and providing habitat for a number of rare species. However, despite its importance, groundwater ecology often receives little attention in environmental legislation or research. This study aims to improve our understanding of the organisms living in groundwater-dependent habitats and the influence of environmental conditions on their distribution. Invertebrate communities occurring in the benthic, hyporheic and phreatic habitats were surveyed at twelve sites over four years across the Stour Chalk Block, a lowland catchment in southern England. A diverse range of stygoxenes, stygophiles and stygobionts, including the first record of Gammarus fossarum in the British Isles, were identified using morphological and molecular techniques. The results indicate that under normal conditions, each habitat provided differing environmental conditions which supported a distinctive invertebrate community.