CCD Photometry Using Filters John E. Hoot SSC Observatory IAU #676

Total Pages: 16

File Type:pdf, Size:1020Kb

Recommended publications

BRAS Newsletter August 2013

www.brastro.org August 2013 Next meeting Aug 12th 7:00PM at the HRPO Dark Site Observing Dates: Primary on Aug. 3rd, Secondary on Aug. 10th Photo credit: Saturn taken on 20” OGS + Orion Starshoot - Ben Toman What's in this issue: PRESIDENT'S MESSAGE; NOTES FROM THE VICE PRESIDENT; MESSAGE FROM THE HRPO; MONTHLY OBSERVING NOTES; OUTREACH CHAIRPERSON’S NOTES; MEMBERSHIP APPLICATION PRESIDENT'S MESSAGE Hi Everyone, I hope you’ve been having a great Summer so far and had luck beating the heat as much as possible. The weather sure hasn’t been cooperative for observing, though! First I have a pretty cool announcement. Thanks to the efforts of club member Walt Cooney, there are 5 newly named asteroids in the sky. (53256) Sinitiere - Named for former BRAS Treasurer Bob Sinitiere (74439) Brenden - Named for founding member Craig Brenden (85878) Guzik - Named for LSU professor T. Greg Guzik (101722) Pursell - Named for founding member Wally Pursell

Multiple Star Systems Observed with Corot and Kepler

Multiple star systems observed with CoRoT and Kepler John Southworth Astrophysics Group, Keele University, Staffordshire, ST5 5BG, UK Abstract. The CoRoT and Kepler satellites were the first space platforms designed to perform high-precision photometry for a large number of stars. Multiple systems display a wide variety of photometric variability, making them natural benefactors of these missions. I review the work arising from CoRoT and Kepler observations of multiple systems, with particular emphasis on eclipsing binaries containing giant stars, pulsators, triple eclipses and/or low-mass stars. Many more results remain untapped in the data archives of these missions, and the future holds the promise of K2, TESS and PLATO. 1 Introduction The CoRoT and Kepler satellites represent the first generation of astronomical space missions capable of large-scale photometric surveys. The large quantity – and exquisite quality – of the data they provided is in the process of revolutionising stellar and planetary astrophysics. In this review I highlight the immense variety of the scientific results from these concurrent missions, as well as the context provided by their precursors and implications for their successors. CoRoT was led by CNES and ESA, launched on 2006/12/27, and retired in June 2013 after an irretrievable computer failure in November 2012. It performed 24 observing runs, each lasting between 21 and 152 days, with a field of view of 2 × 1.3° × 1.3°, obtaining light curves of 163000 stars [42]. Kepler was a NASA mission, launched on 2009/03/07 and suffering a critical pointing failure on 2013/05/11. It observed the same 105 deg² sky area for its full mission duration, obtaining high-precision light curves of approximately 191000 stars.

The Minor Planet Bulletin

THE MINOR PLANET BULLETIN: BULLETIN OF THE MINOR PLANETS SECTION OF THE ASSOCIATION OF LUNAR AND PLANETARY OBSERVERS VOLUME 36, NUMBER 3, A.D. 2009 JULY-SEPTEMBER 77. PHOTOMETRIC MEASUREMENTS OF 343 OSTARA AND OTHER ASTEROIDS AT HOBBS OBSERVATORY Lyle Ford, George Stecher, Kayla Lorenzen, and Cole Cook Department of Physics and Astronomy University of Wisconsin-Eau Claire Eau Claire, WI 54702-4004 [email protected] (Received: 2009 Feb 11) We observed 343 Ostara on 2008 October 4 and obtained R and V standard magnitudes. The period was found to be significantly greater than the previously reported value of 6.42 hours. Measurements of 2660 Wasserman and (17010) 1999 CQ72 made on 2008 March 25 are also reported. We made R band and V band photometric measurements of 343 Ostara on 2008 October 4 using the 0.6 m “Air Force” Telescope located at Hobbs Observatory (MPC code 750) near Fall Creek, Wisconsin. Our data can be obtained from http://www.uwec.edu/physics/asteroid/. Acknowledgements We thank the Theodore Dunham Fund for Astrophysics, the National Science Foundation (award number 0519006), the University of Wisconsin-Eau Claire Office of Research and Sponsored Programs, and the University of Wisconsin-Eau Claire Blugold Fellow and McNair programs for financial support. References Binzel, R.P. (1987). “A Photoelectric Survey of 130 Asteroids”, Icarus 72, 135-208. Stecher, G.J., Ford, L.A., and Elbert, J.D. (1999). “Equipping a 0.6 Meter Alt-Azimuth Telescope for Photometry”, IAPPP Comm, 76, 68-74. Warner, B.D. (2006). A Practical Guide to Lightcurve Photometry and Analysis. Springer, New York, NY.

PACS Sky Fields and Double Sources for Photometer Spatial Calibration

Document: PACS-ME-TN-035 PACS Date: 27th July 2009 Herschel Version: 2.7 Fields and Double Sources for Spatial Calibration PACS Sky Fields and Double Sources for Photometer Spatial Calibration M. Nielbock1, D. Lutz2, B. Ali3, T. Müller2, U. Klaas1 1Max-Planck-Institut für Astronomie, Königstuhl 17, D-69117 Heidelberg, Germany 2Max-Planck-Institut für Extraterrestrische Physik, Giessenbachstraße, D-85748 Garching, Germany 3NHSC, IPAC, California Institute of Technology, Pasadena, CA 91125, USA Contents: 1 Scope and Assumptions; 2 Applicable and Reference Documents; 3 Stars; 3.1 Optical Star Clusters; 3.2 Bright Binaries (V-band search); 3.3 Bright Binaries (K-band search); 3.4 Retrieval from PACS Pointing Calibration Target List; 3.5 Other stellar sources; 3.5.1 Herbig Ae/Be stars observed with ISOPHOT; 4 Galactic ISOCAM fields; 5 Galaxies; 5.1 Quasars and AGN from the Veron catalogue; 5.2 Galaxy pairs; 5.2.1 Galaxy pairs from the IRAS Bright Galaxy Sample with VLA radio observations; 6 Solar system objects; 6.1 Asteroid conjunctions; 6.2 Conjunctions of asteroids with pointing stars; 6.3 Planetary satellites; Appendices; A 2MASS images of fields with suitable double stars from the K-band; B HIRES/2MASS overlays for double stars from the K-band search; C FIR/NIR overlays for double galaxies; C.1 HIRES/2MASS overlays for double galaxies

Perturbations in the Motion of the Quasicomplanar Minor Planets For

Publications of the Department of Astronomy - Beograd, No. 10, 1980 UDC 523.24; 521.1/3 osp PERTURBATIONS IN THE MOTION OF THE QUASICOMPLANAR MINOR PLANETS FOR THE CASE PROXIMITIES ARE UNDER 10000 KM J. Lazovic and M. Kuzmanoski Institute of Astronomy, Faculty of Sciences, Beograd Received January 30, 1980 Summary. Mutual gravitational action during proximities of 12 quasicomplanar minor planets pairs have been investigated, whose minimum distances were under 10000 km. In five of the pairs perturbations of several orbital elements have been stated, whose amounts are detectable by observations from the Earth. J. Lazovic, M. Kuzmanoski, PERTURBATIONS OF THE ELEMENTS OF MOTION OF QUASICOMPLANAR MINOR PLANETS AT PROXIMITIES OF THEIR ORBITS WITH DISTANCES UNDER 10000 KM - We examined the mutual gravitational actions at the proximities of 12 pairs of quasicomplanar minor planets with minimum distances below 10000 km. In five pairs, perturbations were found in several orbital elements, whose amounts could be established by observations from the Earth. Earlier we have found out 13 quasicomplanar minor planets pairs (the angle I between their orbital planes less than 0°.500), whose minimum mutual distances (pmin) were less than 10000 km (Lazovic, Kuzmanoski, 1978). The shortest proximity distance of only 600 km among these pairs has been stated with minor planet pair (215) Oenone and 1851 = 1950 VA. The corresponding perturbation effects in this pair have been calculated (Lazovic, Kuzmanoski, 1979a). This motivated us to investigate the mutual perturbing actions in the 12 remaining minor planets pairs for the case they found themselves within the proximities of their corresponding orbits.

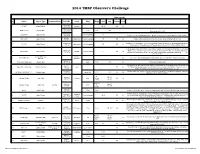

2014 Observers Challenge List

2014 TMSP Observer's Challenge Atlas page #s # Object Object Type Common Name RA, DEC Const Mag Mag.2 Size Sep. U2000 PSA 18h31m25s 1 IC 1287 Bright Nebula Scutum 20'.0 295 67 -10°47'45" 18h31m25s SAO 161569 Double Star 5.77 9.31 12.3” -10°47'45" Near center of IC 1287 18h33m28s NGC 6649 Open Cluster 8.9m Integrated 5' -10°24'10" Can be seen in 3/4d FOV with above. Brightest star is 13.2m. Approx 50 stars visible in Binos 18h28m 2 NGC 6633 Open Cluster Ophiuchus 4.6m integrated 27' 205 65 Visible in Binos and is about the size of a full Moon, brightest star is 7.6m +06°34' 17h46m18s 2x diameter of a full Moon. Try to view this cluster with your naked eye, binos, and a small scope. 3 IC 4665 Open Cluster Ophiuchus 4.2m Integrated 60' 203 65 +05º 43' Also check out “Tweedle-dee and Tweedle-dum to the east (IC 4756 and NGC 6633) A loose open cluster with a faint concentration of stars in a rich field, contains about 15-20 stars. 19h53m27s Brightest star is 9.8m, 5 stars 9-11m, remainder about 12-13m. This is a challenge obJect to 4 Harvard 20 Open Cluster Sagitta 7.7m integrated 6' 162 64 +18°19'12" improve your observation skills. Can you locate the miniature coathanger close by at 19h 37m 27s +19d? Constellation star Corona 5 Corona Borealis 55 Trace the 7 stars making up this constellation, observe and list the colors of each star asterism Borealis 15H 32' 55” Theta Corona Borealis Double Star 4.2m 6.6m .97” 55 Theta requires about 200x +31° 21' 32” The direction our Sun travels in our galaxy. -

Binocular Double Star Logbook

Astronomical League Binocular Double Star Club Logbook 1 Table of Contents Alpha Cassiopeiae 3 14 Canis Minoris Sh 251 (Oph) Psi 1 Piscium* F Hydrae Psi 1 & 2 Draconis* 37 Ceti Iota Cancri* 10 Σ2273 (Dra) Phi Cassiopeiae 27 Hydrae 40 & 41 Draconis* 93 (Rho) & 94 Piscium Tau 1 Hydrae 67 Ophiuchi 17 Chi Ceti 35 & 36 (Zeta) Leonis 39 Draconis 56 Andromedae 4 42 Leonis Minoris Epsilon 1 & 2 Lyrae* (U) 14 Arietis Σ1474 (Hya) Zeta 1 & 2 Lyrae* 59 Andromedae Alpha Ursae Majoris 11 Beta Lyrae* 15 Trianguli Delta Leonis Delta 1 & 2 Lyrae 33 Arietis 83 Leonis Theta Serpentis* 18 19 Tauri Tau Leonis 15 Aquilae 21 & 22 Tauri 5 93 Leonis OΣΣ178 (Aql) Eta Tauri 65 Ursae Majoris 28 Aquilae Phi Tauri 67 Ursae Majoris 12 6 (Alpha) & 8 Vul 62 Tauri 12 Comae Berenices Beta Cygni* Kappa 1 & 2 Tauri 17 Comae Berenices Epsilon Sagittae 19 Theta 1 & 2 Tauri 5 (Kappa) & 6 Draconis 54 Sagittarii 57 Persei 6 32 Camelopardalis* 16 Cygni 88 Tauri Σ1740 (Vir) 57 Aquilae Sigma 1 & 2 Tauri 79 (Zeta) & 80 Ursae Maj* 13 15 Sagittae Tau Tauri 70 Virginis Theta Sagittae 62 Eridani Iota Bootis* O1 (30 & 31) Cyg* 20 Beta Camelopardalis Σ1850 (Boo) 29 Cygni 11 & 12 Camelopardalis 7 Alpha Librae* Alpha 1 & 2 Capricorni* Delta Orionis* Delta Bootis* Beta 1 & 2 Capricorni* 42 & 45 Orionis Mu 1 & 2 Bootis* 14 75 Draconis Theta 2 Orionis* Omega 1 & 2 Scorpii Rho Capricorni Gamma Leporis* Kappa Herculis Omicron Capricorni 21 35 Camelopardalis ?? Nu Scorpii S 752 (Delphinus) 5 Lyncis 8 Nu 1 & 2 Coronae Borealis 48 Cygni Nu Geminorum Rho Ophiuchi 61 Cygni* 20 Geminorum 16 & 17 Draconis* 15 5 (Gamma) & 6 Equulei Zeta Geminorum 36 & 37 Herculis 79 Cygni h 3945 (CMa) Mu 1 & 2 Scorpii Mu Cygni 22 19 Lyncis* Zeta 1 & 2 Scorpii Epsilon Pegasi* Eta Canis Majoris 9 Σ133 (Her) Pi 1 & 2 Pegasi Δ 47 (CMa) 36 Ophiuchi* 33 Pegasi 64 & 65 Geminorum Nu 1 & 2 Draconis* 16 35 Pegasi Knt 4 (Pup) 53 Ophiuchi Delta Cephei* (U) The 28 stars with asterisks are also required for the regular AL Double Star Club. -

Lick Observatory Records: Photographs UA.036.Ser.07

http://oac.cdlib.org/findaid/ark:/13030/c81z4932 Online items available Lick Observatory Records: Photographs UA.036.Ser.07 Kate Dundon, Alix Norton, Maureen Carey, Christine Turk, Alex Moore University of California, Santa Cruz 2016 1156 High Street Santa Cruz 95064 [email protected] URL: http://guides.library.ucsc.edu/speccoll Contributing Institution: University of California, Santa Cruz Title: Lick Observatory Records: Photographs Creator: Lick Observatory Identifier/Call Number: UA.036.Ser.07 Physical Description: 101.62 Linear Feet, 127 boxes Date (inclusive): circa 1870-2002 Language of Material: English. https://n2t.net/ark:/38305/f19c6wg4 Conditions Governing Access Collection is open for research. Conditions Governing Use Property rights for this collection reside with the University of California. Literary rights, including copyright, are retained by the creators and their heirs. The publication or use of any work protected by copyright beyond that allowed by fair use for research or educational purposes requires written permission from the copyright owner. Responsibility for obtaining permissions, and for any use rests exclusively with the user. Preferred Citation Lick Observatory Records: Photographs. UA36 Ser.7. Special Collections and Archives, University Library, University of California, Santa Cruz. Alternative Format Available Images from this collection are available through UCSC Library Digital Collections. Historical note These photographs were produced or collected by Lick Observatory staff and faculty, as well as UCSC Library personnel. Many of the early photographs of the major instruments and Observatory buildings were taken by Henry E. Matthews, who served as secretary to the Lick Trust during the planning and construction of the Observatory.

Symposium on Telescope Science

Proceedings for the 26th Annual Conference of the Society for Astronomical Sciences Symposium on Telescope Science Editors: Brian D. Warner, Jerry Foote, David A. Kenyon, Dale Mais May 22-24, 2007 Northwoods Resort, Big Bear Lake, CA Reprints of Papers Distribution of reprints of papers by any author of a given paper, either before or after the publication of the proceedings is allowed under the following guidelines. 1. The copyright remains with the author(s). 2. Under no circumstances may anyone other than the author(s) of a paper distribute a reprint without the express written permission of all author(s) of the paper. 3. Limited excerpts may be used in a review of the reprint as long as the inclusion of the excerpts is NOT used to make or imply an endorsement by the Society for Astronomical Sciences of any product or service. Notice The preceding “Reprint of Papers” supersedes the one that appeared in the original print version. Disclaimer The acceptance of a paper for the SAS proceedings can not be used to imply or infer an endorsement by the Society for Astronomical Sciences of any product, service, or method mentioned in the paper. Published by the Society for Astronomical Sciences, Inc. First printed: May 2007 ISBN: 0-9714693-6-9 Table of Contents: PREFACE; CONFERENCE SPONSORS; Submitted Papers: THE OLIN EGGEN PROJECT, ARNE HENDEN; AMATEUR AND PROFESSIONAL ASTRONOMER COLLABORATION EXOPLANET RESEARCH PROGRAMS AND TECHNIQUES, RON BISSINGER; EXOPLANET OBSERVING TIPS, BRUCE L. GARY; STUDY OF CEPHEID VARIABLES AS A JOINT SPECTROSCOPY PROJECT, THOMAS C.

Aqueous Alteration on Main Belt Primitive Asteroids: Results from Visible Spectroscopy1

Aqueous alteration on main belt primitive asteroids: results from visible spectroscopy1 S. Fornasier1,2, C. Lantz1,2, M.A. Barucci1, M. Lazzarin3 1 LESIA, Observatoire de Paris, CNRS, UPMC Univ Paris 06, Univ. Paris Diderot, 5 Place J. Janssen, 92195 Meudon Principal Cedex, France 2 Univ. Paris Diderot, Sorbonne Paris Cité, 4 rue Elsa Morante, 75205 Paris Cedex 13 3 Department of Physics and Astronomy of the University of Padova, Via Marzolo 8 35131 Padova, Italy Submitted to Icarus: November 2013, accepted on 28 January 2014 e-mail: [email protected]; fax: +33145077144; phone: +33145077746 Manuscript pages: 38; Figures: 13; Tables: 5 Running head: Aqueous alteration on primitive asteroids Send correspondence to: Sonia Fornasier, LESIA-Observatoire de Paris, Batiment 17, 5 Place Jules Janssen, 92195 Meudon Cedex, France arXiv:1402.0175v1 [astro-ph.EP] 2 Feb 2014 1Based on observations carried out at the European Southern Observatory (ESO), La Silla, Chile, ESO proposals 062.S-0173 and 064.S-0205 (PI M. Lazzarin) Preprint submitted to Elsevier September 27, 2018 Abstract This work focuses on the study of the aqueous alteration process which acted in the main belt and produced hydrated minerals on the altered asteroids. Hydrated minerals have been found mainly on the surface of Mars, on main belt primitive asteroids and possibly also on a few TNOs. These materials have been produced by hydration of pristine anhydrous silicates during the aqueous alteration process, which, to be active, needed the presence of liquid water under low temperature conditions (below 320 K) to chemically alter the minerals.

The Minor Planet Bulletin

THE MINOR PLANET BULLETIN: BULLETIN OF THE MINOR PLANETS SECTION OF THE ASSOCIATION OF LUNAR AND PLANETARY OBSERVERS VOLUME 35, NUMBER 3, A.D. 2008 JULY-SEPTEMBER 95. ASTEROID LIGHTCURVE ANALYSIS AT THE PALMER DIVIDE OBSERVATORY: DECEMBER 2007 – MARCH 2008 Brian D. Warner Palmer Divide Observatory/Space Science Institute 17995 Bakers Farm Rd., Colorado Springs, CO 80908 [email protected] (Received: 6 March) Lightcurves for 17 asteroids were obtained at the Palmer Divide Observatory from December 2007 to early March 2008: 793 Arizona, 1092 Lilium, 2093 Genichesk, 3086 Kalbaugh, 4859 Fraknoi, 5806 Archieroy, 6296 Cleveland, 6310 Jankonke, 6384 Kervin, (7283) 1989 TX15, 7560 Spudis, (7579) 1990 … SCT/ST-9E, or 0.35m SCT/STL-1001E. Depending on the binning used, the scale for the images ranged from 1.2-2.5 arcseconds/pixel. Exposure times were 90–240 s. Most observations were made with no filter. On occasion, e.g., when a nearly full moon was present, an R filter was used to decrease the sky background noise. Guiding was used in almost all cases. All images were measured using MPO Canopus, which employs differential aperture photometry to determine the values used for analysis. Period analysis was also done using MPO Canopus, which incorporates the Fourier analysis algorithm developed by Harris (1989). The results are summarized in the table below, as are individual plots. The data and curves are presented without comment except when warranted. Column 3 gives the full range of dates of observations; column 4 gives the number of data points used in the analysis. Column 5 gives the range of phase angles.

Appendix 1 1311 Discoverers in Alphabetical Order

Appendix 1: 1311 Discoverers in Alphabetical Order
Abe, H. 28 (8) 1993-1999
Abe, M. 1 (1) 1994
Abraham, M. 3 (3) 1999
Aikman, G. C. L. 4 1994-1998
Akiyama, M. 16 (10) 1989-1999
Albitskij, V. A. 10 1923-1925
Aldering, G. 4 1982
Alikoski, H. 13 1938-1953
Allen, E. J. 1 2004
Allen, L. 2 2004
Alu, J. 24 (13) 1987-1993
Amburgey, L. L. 2 1997-2000
Andrews, A. D. 1 1965
Antal, M. 17 1971-1988
Antolini, P. 4 (3) 1994-1996
Antonini, P. 35 1997-1999
Aoki, M. 2 1996-1997
Apitzsch, R. 43 2004-2009
Arai, M. 45 (45) 1988-1991
Araki, H. 2 (2) 1994
Arend, S. 51 1929-1961
Armstrong, C. 1 (1) 1997
Armstrong, M. 2 (1) 1997-1998
Asami, A. 7 1997-1999
Asher, D.
Bernstein, G. 1 1998
Bettelheim, E. 1 (1) 2000
Bickel, W. 443 1995-2010
Biggs, J. 1 2001
Bigourdan, G. 1 1894
Billings, G. W. 6 1999
Binzel, R. P. 3 1987-1990
Birkle, K. 8 (8) 1989-1993
Birtwhistle, P. 56 2003-2009
Blasco, M. 5 (1) 1996-2000
Block, A. 1 2000
Boattini, A. 237 (224) 1977-2006
Boehnhardt, H. 1 (1) 1993
Boeker, A. 1 (1) 2002
Boeuf, M. 12 1998-2000
Boffin, H. M. J. 10 (2) 1999-2001
Bohrmann, A. 9 1936-1938
Boles, T. 1 2002
Bonomi, R. 1 (1) 1995
Borgman, D. 1 (1) 2004
Börngen, F. 535 (231) 1961-1995
Borrelly, A. 19 1866-1894
Bourban, G. 1 (1) 2005
Bourgeois, P. 1 1929