Economics 582 Spring 2013

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Econometrics Oxford University, 2017 1 / 34 Introduction

Do attractive people get paid more? Felix Pretis (Oxford) Econometrics Oxford University, 2017 1 / 34 Introduction Econometrics: Computer Modelling. Felix Pretis, Programme for Economic Modelling, Oxford Martin School, University of Oxford. Lecture 1: Introduction to Econometric Software & Cross-Section Analysis. Aim of this Course: introduce econometric modelling in practice; introduce the OxMetrics/PcGive software. By the end of the course: able to build econometric models; evaluate output and test theories; use OxMetrics/PcGive to load, graph, and model data. Administration: Textbooks: no single textbook. Useful: Doornik, J.A. and Hendry, D.F. (2013). Empirical Econometric Modelling Using PcGive 14: Volume I, London: Timberlake Consultants Press (included in the OxMetrics installation under “Help”); Hendry, D. F. (2015). Introductory Macro-econometrics: A New Approach, freely available online: http://www.timberlake.co.uk/macroeconometrics.html Lecture Notes & Lab Material online: http://www.felixpretis.org Problem Set: to be covered in tutorial. Exam: Questions possible (Q4 and Q8 from past papers 2016 and 2017). Structure: 1: Intro to Econometric Software & Cross-Section Regression; 2: Micro-Econometrics: Limited Indep. Variable; 3: Macro-Econometrics: Time Series. Motivation: Economies are high dimensional, interdependent, heterogeneous, and evolving: comprehensive specification of all events is impossible. Economic theory is likely wrong and incomplete, and meaningless without empirical support. Econometrics can discover new relationships from data and can provide empirical support or refutation. This requires econometric software, unless you really like doing matrix manipulation by hand.

Econometric Theory

Econometric Theory. John Stachurski. January 10, 2014. Contents: Preface. I Background Material. 1 Probability: 1.1 Probability Models; 1.2 Distributions; 1.3 Dependence; 1.4 Asymptotics; 1.5 Exercises. 2 Linear Algebra: 2.1 Vectors and Matrices; 2.2 Span, Dimension and Independence; 2.3 Matrices and Equations; 2.4 Random Vectors and Matrices; 2.5 Convergence of Random Matrices; 2.6 Exercises. 3 Projections: 3.1 Orthogonality and Projection; 3.2 Overdetermined Systems of Equations; 3.3 Conditioning; 3.4 Exercises. II Foundations of Statistics. 4 Statistical Learning: 4.1 Inductive Learning; 4.2 Statistics; 4.3 Maximum Likelihood; 4.4 Parametric vs Nonparametric Estimation; 4.5 Empirical Distributions; 4.6 Empirical Risk Minimization; 4.7 Exercises. 5 Methods of Inference: 5.1 Making Inference about Theory; 5.2 Confidence Sets

International Journal of Forecasting Guidelines for IJF Software Reviewers

International Journal of Forecasting Guidelines for IJF Software Reviewers It is desirable that there be some small degree of uniformity amongst the software reviews in this journal, so that regular readers of the journal can have some idea of what to expect when they read a software review. In particular, I wish to standardize the second section (after the introduction) of the review, and the penultimate section (before the conclusions). As stand-alone sections, they will not materially affect the reviewer's ability to craft the review as he/she sees fit, while still providing consistency between reviews. This applies mostly to single-product reviews, but some of the ideas presented herein can be successfully adapted to a multi-product review. The second section, Overview, is an overview of the package, and should include several things. · Contact information for the developer, including website address. · Platforms on which the package runs, and corresponding prices, if available. · Ancillary programs included with the package, if any. · The final part of this section should address Berk's (1987) list of criteria for evaluating statistical software. Relevant items from this list should be mentioned, as in my review of RATS (McCullough, 1997, pp. 182-183). · My use of Berk was extremely terse, and should be considered a lower bound. Feel free to amplify considerably, if the review warrants it. In fact, Berk's criteria, if considered in sufficient detail, could be the outline for a review itself. The penultimate section, Numerical Details, directly addresses numerical accuracy and reliability, if these topics are not addressed elsewhere in the review.

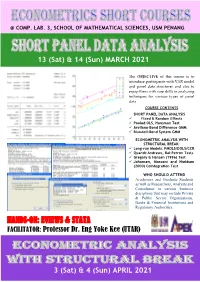

Hands-On & Exercise: Stata Hands-On: Eviews & Stata

@ COMP. LAB. 3, SCHOOL OF MATHEMATICAL SCIENCES, USM PENANG 13 (Sat) & 14 (Sun) MARCH 2021 The OBJECTIVE of this course is to introduce participants to the VAR model and panel data structures and to equip them with core skills in analysis techniques for various types of panel data HANDS-ON & EXERCISE: STATA COURSE CONTENTS SHORT PANEL DATA ANALYSIS FACILITATORS: ✓ Fixed & Random Effects ✓ Pooled OLS, Hausman Test Dr Zainudin Arsad (USM) & ✓ Arellano-Bond Difference GMM ✓ Blundell-Bond System GMM Ass. Prof. Dr LAW S. HOOK (UPM) ECONOMETRIC ANALYSIS WITH STRUCTURAL BREAK: ✓ Long-run Models: FMOLS/DOLS/CCR ✓ Quandt-Andrews, Bai-Perron Tests ✓ Gregory & Hansen (1996) Test ✓ Johansen, Mosconi and Nielsen (2000) Cointegration Test WHO SHOULD ATTEND Academics and Graduate Students as well as Researchers, Analysts and Consultants in various business disciplines that may include Private & Public Sector Organizations, Banks & Financial Institutions and Regulatory Authorities. HANDS-ON: EVIEWS & STATA FACILITATOR: Professor Dr. Eng Yoke Kee (UTAR) 3 (Sat) & 4 (Sun) APRIL 2021 SHORT PANEL DATA ANALYSIS DAY 1 : SATURDAY, 13 MARCH 2021 8:15 AM REGISTRATION 8:45 AM SESSION 1 – THE NATURE OF PANEL DATA ▪ Nature and Benefits of Panel Data; Examining & Arranging Your Dataset 10:30 AM TEA BREAK 10:45 AM SESSION 2 – STATIC LINEAR PANEL MODELS ▪ Panel Data Basics: Pooled OLS, Fixed and Random Effects 12:45 PM LUNCH 2:00 PM SESSION 3 – SELECTING PANEL DATA MODELS ▪ Poolability F-test, Breusch-Pagan LM Test, Hausman Test 3:45 PM TEA BREAK 4:00 PM SESSION 4 – ISSUES ON PANEL DATA MODELS: ROBUST ESTIMATES ▪ Diagnostic Tests and Robust Standard Errors 5:45 PM Q & A (SESSIONS 1 - 4) DAY 2 : SUNDAY, 14 MARCH 2021 8:30 AM SESSION 5 – DYNAMIC PANEL DATA APPROACH ▪ Why Dynamic Panel? 10:15 AM TEA BREAK 10:30 AM SESSION 6 – MULTIVARIATE DYNAMIC MODELS WITH PERSISTENT SERIES ▪ Arellano-Bond Difference Estimator, 1-step vs.

Rtadf: Testing for Bubbles with Eviews

JSS Journal of Statistical Software November 2017, Volume 81, Code Snippet 1. doi: 10.18637/jss.v081.c01 Rtadf: Testing for Bubbles with EViews Itamar Caspi Bank of Israel Abstract This paper presents Rtadf (right-tail augmented Dickey-Fuller), an EViews add-in that facilitates the performance of time series based tests that help detect and date-stamp asset price bubbles. The detection strategy is based on a right-tail variation of the standard augmented Dickey-Fuller (ADF) test where the alternative hypothesis is of a mildly explosive process. Rejection of the null in each of these tests may serve as empirical evidence for an asset price bubble. The add-in implements four types of tests: standard ADF, rolling window ADF, supremum ADF (SADF; Phillips, Wu, and Yu 2011) and generalized SADF (GSADF; Phillips, Shi, and Yu 2015). It calculates the test statistics for each of the above four tests, simulates the corresponding exact finite sample critical values and p values via Monte Carlo methods, under the assumption of Gaussian innovations, and produces a graphical display of the date stamping procedure. Keywords: rational bubble, ADF test, sup ADF test, generalized sup ADF test, mildly explosive process, EViews. 1. Introduction Empirical identification of asset price bubbles in real time, and even in retrospect, is surely not an easy task, and it has been the source of academic and professional debate for several decades. One strand of the empirical literature suggests using time series estimation techniques while exploiting predictions made by finance theory in order to test for the existence of bubbles in the data.

Introduction to Eviews 2.0

Introduction to EViews 2.0 Overview of product EViews provides regression and forecasting tools on Windows computers. With EViews you can develop a statistical relation from your data and then use the relation to forecast future values of the data. Areas where EViews can be useful include: · Sales forecasting · Cost analysis and forecasting · Financial analysis · Macroeconomic forecasting · Simulation · Scientific data analysis and evaluation EViews is a new version of a set of tools for manipulating time series data originally developed in the Time Series Processor software for large computers. The immediate predecessor of EViews was MicroTSP, first released in 1981. Though EViews was developed by economists and most of its uses are in economics, there is nothing in its design that limits its usefulness to economic time series. Even quite large cross-section projects can be handled in EViews. The basic data object within EViews is the time series. Each series has a name, and you can request operations on all the observations just by mentioning the name of the series. EViews provides convenient visual ways to enter time series from the keyboard or from disk files, to create new series from existing ones, to display and print series, and to carry out statistical analysis of the relations among series. EViews uses the visual features of modern Windows software. You can use your mouse to guide the operation with standard Windows menus and dialogs. Results appear in windows and can be manipulated with standard Windows techniques. Alternatively, you may use EViews' powerful command language. You can enter and edit commands in the command window. -

Gretl User's Guide

Gretl User’s Guide. Gnu Regression, Econometrics and Time-series. Allin Cottrell, Department of Economics, Wake Forest University. Riccardo “Jack” Lucchetti, Dipartimento di Economia, Università Politecnica delle Marche. December, 2008. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation (see http://www.gnu.org/licenses/fdl.html). Contents: 1 Introduction: 1.1 Features at a glance; 1.2 Acknowledgements; 1.3 Installing the programs. I Running the program. 2 Getting started: 2.1 Let’s run a regression; 2.2 Estimation output; 2.3 The main window menus; 2.4 Keyboard shortcuts; 2.5 The gretl toolbar. 3 Modes of working: 3.1 Command scripts; 3.2 Saving script objects; 3.3 The gretl console; 3.4 The Session concept. 4 Data files: 4.1 Native format; 4.2 Other data file formats; 4.3 Binary databases

Eviews 10 Getting Started Eviews 10 Getting Started Copyright © 1994–2017 IHS Global Inc

EViews 10 Getting Started Copyright © 1994–2017 IHS Global Inc. All Rights Reserved ISBN: 978-1-880411-36-0 This software product, including program code and manual, is copyrighted, and all rights are reserved by IHS Global Inc. The distribution and sale of this product are intended for the use of the original purchaser only. Except as permitted under the United States Copyright Act of 1976, no part of this product may be reproduced or distributed in any form or by any means, or stored in a database or retrieval system, without the prior written permission of IHS Global Inc. Disclaimer The authors and IHS Global Inc. assume no responsibility for any errors that may appear in this manual or the EViews program. The user assumes all responsibility for the selection of the program to achieve intended results, and for the installation, use, and results obtained from the program. Trademarks EViews® is a registered trademark of IHS Global Inc. Windows, Excel, PowerPoint, and Access are registered trademarks of Microsoft Corporation. PostScript is a trademark of Adobe Corporation. X11.2 and X12-ARIMA Version 0.2.7, and X-13ARIMA-SEATS are seasonal adjustment programs developed by the U. S. Census Bureau. Tramo/Seats is copyright by Agustin Maravall and Victor Gomez. Info-ZIP is provided by the persons listed in the infozip_license.txt file. Please refer to this file in the EViews directory for more information on Info-ZIP. Zlib was written by Jean-loup Gailly and Mark Adler. More information on zlib can be found in the zlib_license.txt file in the EViews directory.

Houtan BASTANI Philadelphia, PA 19143 Nationalities: USA, France, Iran [email protected]

Houtan BASTANI Philadelphia, PA 19143 Nationalities: USA, France, Iran [email protected] EDUCATION MSc Economics, London School of Economics 2008-09 Graduated with Merit B.S. Economics and Computer Science, College of William & Mary 2000-03 Graduated Summa Cum Laude, Phi Beta Kappa College education financed through scholarships, grants, loans and work Candidate for B.S.E. Computer Science Engineering, University of Pennsylvania 1998-00 Transferred FELLOWSHIPS, HONORS AND AWARDS US Fulbright Research Fellow, Italy 2003-04 Elected member of Phi Beta Kappa honor society 2002 Frances L. and Edwin K. Cummings Scholar 2002 NSF REU Program 2001, 2002 Dean’s List 1999-03 WORK EXPERIENCE Research Software Engineer, Dynare, CEPREMAP, École Normale Supérieure since 2009 Focused on development of Dynare, primarily in C++ and MATLAB Programmed o OLS, FGLS, Gibbs Sampling for SUR models, VAR forecasts, … o Interpreter for Dynare’s macro processing language o Dynare's Reporting functionality o Dynare's test suite, run nightly upon commit o Integrated Sims, Waggoner and Zha MS-SBVAR code o JSON and Julia output of preprocessor o among others... Responsible for distribution on macOS: create installation packages and snapshots; created and maintain installation scripts on Homebrew Webmaster http://www.dynare.org Consultant, Organisation for Economic Co-operation and Development (OECD) 2018 Added Gandhi, Navarro and Rivers (2017) module to MultiProd codebase Fixed bug in Stata Gtools Gave class on Git Consultant, Copywrighte Design 2018 Created interactive in-store display using Python and Raspberry Pi Consultant, CQER, Federal Reserve Bank of Atlanta 2011 Worked for Dan Waggoner and Tao Zha, creating an interface for use with their Regime-Switching DSGE models. -

Introduction to Scientific Computing

Introduction to Scientific Computing Vahe Krrikyan/Johannes Pfeifer University of Mannheim [email protected] May 20, 2016 Programming Introduction Motivation Best Practices Conclusion References 1/25cba Figure: https://xkcd.com/1513/ Outline: 1 Introduction 2 Motivation 3 Best Practices 4 Conclusion. Introduction: This presentation is based on Greg Wilson et al. (2014). “Best Practices for Scientific Computing”. In: PLoS Biology 12.1, e1001745. doi: 10.1371/journal.pbio.1001745 Nowadays scientific work is almost impossible without scientific computing software. Software is as important as hardware in modern science, and in economics in particular. Coding is an integral part of economic research, unless it is purely theoretical (even then software helps with checking algebra). The development of information technologies is a driver for more sophisticated research techniques. Scientists spend 30% or more of their time on developing their own software (Hannay et al., 2009; Prabhu et al., 2011). Thus research quality and results are highly dependent on the developed software, e.g. for managing and analyzing large amounts of data, or running sophisticated regressions or quantitative experiments. Even running simple OLS regressions requires coding.

Alvaro Silva

Alvaro Silva Department of Economics University of Maryland College Park [email protected] https://asilvub.github.io/ Current Position Aide to the Minister of Finance, Chile 2021 – Education Ph.D. in Economics, University of Maryland College Park 2018 – present M.A. in Economics, University of Maryland College Park 2020 M.Sc. in Applied Economics (with honors), Universidad de Concepción 2014 – 2016 B.Sc. in Economics (with honors), Universidad de Concepción 2009 – 2013 Fields Macroeconomics, International Finance, Trade Past Experience Macro Modeling Consultant, Interamerican Development Bank (IDB) 2020 – 2020 Young Researcher, CLAPES UC, PUC Chile 2016 – 2018 Research WORKING PAPERS “Earnings Effect of Startup Employment" with Gonzalo García-Trujillo and Nathalie González WORK IN PROGRESS “Commodity Shocks and Production Networks in Small Open Economies" with Petre Caraini, Jorge Miranda-Pinto and Juan Olaya PRE-PH.D.PUBLICATIONS “Price Controls, Hyperinflation, and the Inflation-Relative Price Variability Relationship" with Rodrigo Cerda and Rolf Lüders Forthcoming at Empirical Economics (2021). “Impact of Economic Uncertainty in a Small Open Economy: The Case of Chile" with Rodrigo Cerda and José Tomás Valente Applied Economics (2018), 50(26), pp. 2894 - 2908. BOOK CHAPTERS AND OTHER PUBLICATIONS “Towards Measuring Economic Policy Uncertainty in Latin America: A First Little Step" Latin American Policy Journal (2018), Volume 7, Spring: pp. 68-73. “El Financiamiento Escolar en Chile (School Funding in Chile)" with Sergio Urzúa In Ideas -

Econometrics with Octave

Econometrics with Octave Dirk Eddelbüttel Bank of Montreal, Toronto, Canada. [email protected] November 1999 Summary GNU Octave is an open-source implementation of a (mostly Matlab compatible) high-level language for numerical computations. This review briefly introduces Octave, discusses applications of Octave in an econometric context, and illustrates how to extend Octave with user-supplied C++ code. Several examples are provided. 1 Introduction Econometricians sweat linear algebra. Be it for linear or non-linear problems of estimation or inference, matrix algebra is a natural way of expressing these problems on paper. However, when it comes to writing computer programs to either implement tried and tested econometric procedures, or to research and prototype new routines, programming languages such as C or Fortran are more of a burden than an aid. Having to enhance the language by supplying functions for even the most primitive operations adds extra programming effort, introduces new points of failure, and moves the level of abstraction further away from the elegant mathematical expressions. As Eddelbüttel (1996) argues, object-oriented programming provides 'a step up' from Fortran or C by enabling the programmer to seamlessly add new data types such as matrices, along with operations on these new data types, to the language. But with Moore's Law still being validated by ever faster processors, and, hence, ever increasing computational power, the prime reason for using compiled code, i.e. speed, becomes less relevant.
Hence the growing popularity of interpreted programming languages, both in general, as witnessed by the surge in popularity of the general-purpose programming languages Perl and Python, and in particular for numerical applications with strong emphasis on matrix calculus, where languages such as Gauss, Matlab, Ox, R and Splus, which were reviewed by Cribari-Neto and Jensen (1997), Cribari-Neto (1997) and Cribari-Neto and Zarkos (1999), have become popular.