Python for Unified Research in Econometrics and Statistics

Roseline Bilina, Steve Lawford

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Econometrics, Oxford University, 2017: Introduction

Do attractive people get paid more? Felix Pretis (Oxford), Econometrics, Oxford University, 2017. Econometrics: Computer Modelling. Felix Pretis, Programme for Economic Modelling, Oxford Martin School, University of Oxford. Lecture 1: Introduction to Econometric Software & Cross-Section Analysis. Aim of this Course. Aim: introduce econometric modelling in practice; introduce the OxMetrics/PcGive software. By the end of the course: able to build econometric models; evaluate output and test theories; use OxMetrics/PcGive to load, graph, and model data. Administration. Textbooks: no single textbook. Useful: Doornik, J.A. and Hendry, D.F. (2013). Empirical Econometric Modelling Using PcGive 14: Volume I, London: Timberlake Consultants Press (included in the OxMetrics installation under "Help"). Hendry, D. F. (2015) Introductory Macro-econometrics: A New Approach. Freely available online: http://www.timberlake.co.uk/macroeconometrics.html. Lecture notes & lab material online: http://www.felixpretis.org. Problem set: to be covered in tutorial. Exam: questions possible (Q4 and Q8 from past papers 2016 and 2017). Structure. 1: Intro to Econometric Software & Cross-Section Regression. 2: Micro-Econometrics: Limited Indep. Variable. 3: Macro-Econometrics: Time Series. Motivation. Economies are high dimensional, interdependent, heterogeneous, and evolving: comprehensive specification of all events is impossible. Economic theory is likely wrong and incomplete, and is meaningless without empirical support. Econometrics can discover new relationships from data and can provide empirical support or refutation. This requires econometric software, unless you really like doing matrix manipulation by hand.

Econometric Theory

Econometric Theory. John Stachurski. January 10, 2014. Contents: Preface. Part I, Background Material. 1 Probability: 1.1 Probability Models; 1.2 Distributions; 1.3 Dependence; 1.4 Asymptotics; 1.5 Exercises. 2 Linear Algebra: 2.1 Vectors and Matrices; 2.2 Span, Dimension and Independence; 2.3 Matrices and Equations; 2.4 Random Vectors and Matrices; 2.5 Convergence of Random Matrices; 2.6 Exercises. 3 Projections: 3.1 Orthogonality and Projection; 3.2 Overdetermined Systems of Equations; 3.3 Conditioning; 3.4 Exercises. Part II, Foundations of Statistics. 4 Statistical Learning: 4.1 Inductive Learning; 4.2 Statistics; 4.3 Maximum Likelihood; 4.4 Parametric vs Nonparametric Estimation; 4.5 Empirical Distributions; 4.6 Empirical Risk Minimization; 4.7 Exercises. 5 Methods of Inference: 5.1 Making Inference about Theory; 5.2 Confidence Sets

Overview-Of-Statistical-Analytics-And

Brief Overview of Statistical Analytics and Machine Learning tools for Data Scientists. Tom Breur, 17 January 2017. It is often said that Excel is the most commonly used analytics tool, and that is hard to argue with: it has a billion users worldwide. Although not everybody thinks of Excel as a Data Science tool, it certainly is often used for "data discovery", and can be used for many other tasks, too. There are two "old school" tools, SPSS and SAS, that were founded in 1968 and 1976 respectively. These products have been the hallmark of statistics. Both had early offerings of data mining suites (Clementine, now called IBM SPSS Modeler, and SAS Enterprise Miner) and both are still used widely in Data Science today. They have evolved from a command-line-only interface to more user-friendly graphical user interfaces. What they also share is that at their core SPSS and SAS are really COBOL dialects and are therefore characterized by row-based processing. That doesn't make them inherently good or bad, but it is fundamentally different from the set-based operations that many other tools offer nowadays. Similar in functionality to the traditional leaders SAS and SPSS have been JMP and Statistica, both remarkably user-friendly tools with broad data mining and machine learning capabilities. JMP is, and always has been, a fully owned daughter company of SAS, and only came to the fore when hardware became more powerful. Its initial "handicap" of storing all data in RAM was once a severe limitation, but since computers now have large enough internal memory for most data sets, its computational power and intuitive GUI hold their own.

Annual Report of the Center for Statistical Research and Methodology Research and Methodology Directorate Fiscal Year 2017

Annual Report of the Center for Statistical Research and Methodology, Research and Methodology Directorate, Fiscal Year 2017. [Cover diagram: CSRM Expertise for Collaboration and Research (Missing Data, Edit, and Imputation; Survey Sampling: Estimation and Modeling; Record Linkage; Small Area Estimation; Time Series and Seasonal Adjustment; Experimentation and Modeling; Simulation, Data Visualization, and Modeling), serving Decennial, Demographic, Economic, and Field Directorate customers and other internal and external customers.] Since August 1, 1933: "… As the major figures from the American Statistical Association (ASA), Social Science Research Council, and new Roosevelt academic advisors discussed the statistical needs of the nation in the spring of 1933, it became clear that the new programs, in particular the National Recovery Administration, would require substantial amounts of data and coordination among statistical programs. Thus in June of 1933, the ASA and the Social Science Research Council officially created the Committee on Government Statistics and Information Services (COGSIS) to serve the statistical needs of the Agriculture, Commerce, Labor, and Interior departments … COGSIS set … goals in the field of federal statistics … (It) wanted new statistical programs, for example, to measure unemployment and address the needs of the unemployed … (It) wanted a coordinating agency to oversee all statistical programs, and (it) wanted to see statistical research and experimentation organized within the federal government … In August 1933 Stuart A. Rice, President of the ASA and acting chair of COGSIS, … (became) assistant director of the (Census) Bureau. Joseph Hill (who had been at the Census Bureau since 1900 and who provided the concepts and early theory for what is now the methodology for apportioning the seats in the U.S.

International Journal of Forecasting Guidelines for IJF Software Reviewers

International Journal of Forecasting: Guidelines for IJF Software Reviewers. It is desirable that there be some small degree of uniformity amongst the software reviews in this journal, so that regular readers of the journal can have some idea of what to expect when they read a software review. In particular, I wish to standardize the second section (after the introduction) of the review, and the penultimate section (before the conclusions). As stand-alone sections, they will not materially affect the reviewer's ability to craft the review as he/she sees fit, while still providing consistency between reviews. This applies mostly to single-product reviews, but some of the ideas presented herein can be successfully adapted to a multi-product review. The second section, Overview, is an overview of the package, and should include several things. · Contact information for the developer, including website address. · Platforms on which the package runs, and corresponding prices, if available. · Ancillary programs included with the package, if any. · The final part of this section should address Berk's (1987) list of criteria for evaluating statistical software. Relevant items from this list should be mentioned, as in my review of RATS (McCullough, 1997, pp. 182-183). · My use of Berk was extremely terse, and should be considered a lower bound. Feel free to amplify considerably, if the review warrants it. In fact, Berk's criteria, if considered in sufficient detail, could be the outline for a review itself. The penultimate section, Numerical Details, directly addresses numerical accuracy and reliability, if these topics are not addressed elsewhere in the review.

Fibrinogen Levels Are Associated with Lymph Node Involvement And

ANTICANCER RESEARCH 38: 1097-1104 (2018) doi:10.21873/anticanres.12328. Fibrinogen Levels Are Associated with Lymph Node Involvement and Overall Survival in Gastric Cancer Patients. JÚLIUS PALAJ 1, ŠTEFAN KEČKÉŠ 2, VÍTĚZSLAV MAREK 1, DANIEL DYTTERT 1, IVETA WACZULÍKOVÁ 3 and ŠTEFAN DURDÍK 1. 1 Department of Oncological Surgery, St. Elizabeth Cancer Institute, Slovak Republic and Faculty of Medicine in Bratislava of the Comenius University, Bratislava, Slovak Republic; 2 Department of Immunodiagnostics, St. Elizabeth Cancer Institute, Bratislava, Slovak Republic; 3 Department of Nuclear Physics and Biophysics, Comenius University, Faculty of Mathematics, Physics and Informatics, Bratislava, Slovak Republic. Abstract. Background/Aim: Combination of perioperative chemotherapy with gastrectomy with D2 lymphadenectomy improves long-term survival in patients with gastric cancer. The aim of this study was to investigate the predictive value of preoperative levels of CRP, albumin, fibrinogen, neutrophil-to-lymphocyte ratio and routinely used tumor markers (CEA, CA 19-9, CA 72-4) for lymph node involvement. Materials and Methods: This retrospective study was conducted in 136 patients who underwent surgery between 2007 and 2015. Bivariable and multivariable analyses were performed in order to identify important … [Introduction, second column:] … accounting for 6.8% of all diagnosed cancers and making this cancer the 5th most common malignancy globally (2). Moreover, it is the third leading cause of death in both sexes, accounting for 8.8% of the total deaths from cancer (3). In spite of advancements in chemotherapy and local control of GC, prognosis remains poor, mainly because of the advancement of the disease at the time of diagnosis. Approximately 50% of patients in western countries have metastases at the time of diagnosis, and from those without metastatic disease only 50% are eligible for gastric resection (4).

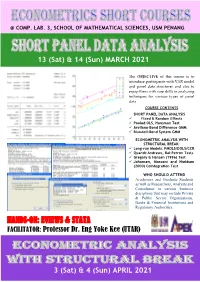

Hands-On & Exercise: Stata Hands-On: Eviews & Stata

@ COMP. LAB. 3, SCHOOL OF MATHEMATICAL SCIENCES, USM PENANG. 13 (Sat) & 14 (Sun) MARCH 2021. The OBJECTIVE of this course is to introduce participants to the VAR model and panel data structures, and to equip them with core skills in techniques for analyzing various types of panel data. HANDS-ON & EXERCISE: STATA. COURSE CONTENTS. SHORT PANEL DATA ANALYSIS: ✓ Fixed & Random Effects ✓ Pooled OLS, Hausman Test ✓ Arellano-Bond Difference GMM ✓ Blundell-Bond System GMM. FACILITATORS: Dr Zainudin Arsad (USM) & Ass. Prof. Dr LAW S. HOOK (UPM). ECONOMETRIC ANALYSIS WITH STRUCTURAL BREAK: ✓ Long-run Models: FMOLS/DOLS/CCR ✓ Quandt-Andrews, Bai-Perron Tests ✓ Gregory & Hansen (1996) Test ✓ Johansen, Mosconi and Nielsen (2000) Cointegration Test. WHO SHOULD ATTEND: Academics and Graduate Students as well as Researchers, Analysts and Consultants in various business disciplines that may include Private & Public Sector Organizations, Banks & Financial Institutions and Regulatory Authorities. HANDS-ON: EVIEWS & STATA. FACILITATOR: Professor Dr. Eng Yoke Kee (UTAR). 3 (Sat) & 4 (Sun) APRIL 2021. SHORT PANEL DATA ANALYSIS. DAY 1: SATURDAY, 13 MARCH 2021. 8:15 AM REGISTRATION. 8:45 AM SESSION 1 – THE NATURE OF PANEL DATA ▪ Nature and Benefits of Panel Data; Examining & Arranging Your Dataset. 10:30 AM TEA BREAK. 10:45 AM SESSION 2 – STATIC LINEAR PANEL MODELS ▪ Panel Data Basics: Pooled OLS, Fixed and Random Effects. 12:45 PM LUNCH. 2:00 PM SESSION 3 – SELECTING PANEL DATA MODELS ▪ Poolability F-test, Breusch-Pagan LM Test, Hausman Test. 3:45 PM TEA BREAK. 4:00 PM SESSION 4 – ISSUES ON PANEL DATA MODELS: ROBUST ESTIMATES ▪ Diagnostic Tests and Robust Standard Errors. 5:45 PM Q & A (SESSIONS 1 - 4). DAY 2: SUNDAY, 14 MARCH 2021. 8:30 AM SESSION 5 – DYNAMIC PANEL DATA APPROACH ▪ Why Dynamic Panel? 10:15 AM TEA BREAK. 10:30 AM SESSION 6 – MULTIVARIATE DYNAMIC MODELS WITH PERSISTENT SERIES ▪ Arellano-Bond Difference Estimator, 1-step vs.

Towards a Fully Automated Extraction and Interpretation of Tabular Data Using Machine Learning

UPTEC F 19050. Degree project (Examensarbete), 30 credits, August 2019. Towards a fully automated extraction and interpretation of tabular data using machine learning. Per Hedbrant. Master Thesis in Engineering Physics, Department of Engineering Sciences, Uppsala University, Sweden. [Title-page sidebar: Faculty of Science and Technology (Teknisk-naturvetenskaplig fakultet), UTH-enheten. Visiting address: Ångströmlaboratoriet, Lägerhyddsvägen 1, Hus 4, Plan 0. Postal address: Box 536, 751 21 Uppsala. Telephone: 018 – 471 30 03. Fax: 018 – 471 30 00. Website: http://www.teknat.uu.se/student] Abstract. Motivation: A challenge for researchers at CBCS is the ability to efficiently manage the different data formats, which change frequently. A significant amount of time is spent on manual pre-processing, converting from one format to another. There are currently no solutions that use pattern recognition to locate and automatically recognise data structures in a spreadsheet. Problem Definition: The desired solution is to build a self-learning Software as-a-Service (SaaS) for automated recognition and loading of data stored in arbitrary formats. The aim of this study is threefold: A) Investigate if unsupervised machine learning methods can be used to label different types of cells in spreadsheets. B) Investigate if a hypothesis-generating algorithm can be used to label different types of cells in spreadsheets. C) Advise on choices of architecture and technologies for the SaaS solution. Method: A pre-processing framework is built that can read and pre-process any type of spreadsheet into a feature matrix. Different datasets are read and clustered. An investigation into the usefulness of reducing the dimensionality is also done.

Rtadf: Testing for Bubbles with Eviews

JSS, Journal of Statistical Software, November 2017, Volume 81, Code Snippet 1. doi: 10.18637/jss.v081.c01. Rtadf: Testing for Bubbles with EViews. Itamar Caspi, Bank of Israel. Abstract: This paper presents Rtadf (right-tail augmented Dickey-Fuller), an EViews add-in that facilitates the performance of time series based tests that help detect and date-stamp asset price bubbles. The detection strategy is based on a right-tail variation of the standard augmented Dickey-Fuller (ADF) test where the alternative hypothesis is of a mildly explosive process. Rejection of the null in each of these tests may serve as empirical evidence for an asset price bubble. The add-in implements four types of tests: standard ADF, rolling window ADF, supremum ADF (SADF; Phillips, Wu, and Yu 2011) and generalized SADF (GSADF; Phillips, Shi, and Yu 2015). It calculates the test statistics for each of the above four tests, simulates the corresponding exact finite sample critical values and p values via Monte Carlo methods, under the assumption of Gaussian innovations, and produces a graphical display of the date stamping procedure. Keywords: rational bubble, ADF test, sup ADF test, generalized sup ADF test, mildly explosive process, EViews. 1. Introduction. Empirical identification of asset price bubbles in real time, and even in retrospect, is surely not an easy task, and it has been the source of academic and professional debate for several decades. One strand of the empirical literature suggests using time series estimation techniques while exploiting predictions made by finance theory in order to test for the existence of bubbles in the data.
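
The supremum ADF idea in the abstract above can be sketched in Python. This is a minimal illustration under stated assumptions, not the Rtadf add-in's implementation: it uses a Dickey-Fuller regression with a constant and no lagged differences, the function names `df_tstat` and `sadf` and the minimum window of 40 observations are hypothetical choices, and it does not simulate the Monte Carlo critical values that the paper describes.

```python
import numpy as np


def df_tstat(y):
    """t-statistic on rho in the regression dy_t = a + rho * y_{t-1} + e_t.

    Assumption: no lagged differences are included (plain Dickey-Fuller).
    """
    y = np.asarray(y, dtype=float)
    dy = np.diff(y)
    # Regressors: a constant and the lagged level of the series.
    X = np.column_stack([np.ones(len(dy)), y[:-1]])
    beta, *_ = np.linalg.lstsq(X, dy, rcond=None)
    resid = dy - X @ beta
    dof = len(dy) - X.shape[1]
    sigma2 = resid @ resid / dof
    cov = sigma2 * np.linalg.inv(X.T @ X)
    return beta[1] / np.sqrt(cov[1, 1])


def sadf(y, min_window):
    """Supremum of the DF t-statistics over forward-expanding windows.

    Each window starts at the first observation and ends at observation k,
    for k = min_window, ..., len(y).
    """
    return max(df_tstat(y[:k]) for k in range(min_window, len(y) + 1))


rng = np.random.default_rng(0)

# A pure random walk (the unit-root null hypothesis).
walk = np.cumsum(rng.standard_normal(200))

# A mildly explosive AR(1) process (the right-tail alternative).
explosive = np.empty(200)
explosive[0] = 1.0
for t in range(1, 200):
    explosive[t] = 1.03 * explosive[t - 1] + rng.standard_normal()

print("random walk: DF =", df_tstat(walk), " SADF =", sadf(walk, 40))
print("explosive:   DF =", df_tstat(explosive), " SADF =", sadf(explosive, 40))
```

Because the full sample is one of the expanding windows, the SADF statistic is never smaller than the full-sample statistic. In a right-tailed test, large positive values count as evidence against the unit-root null, so the statistic would still need to be compared with simulated critical values before drawing any conclusion.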

An Example of Statistical Data Analysis Using the R Environment for Statistical Computing

Tutorial: An example of statistical data analysis using the R environment for statistical computing. D G Rossiter. Version 1.4; May 6, 2017. [Cover figures: "Subsoil vs. topsoil clay, by zone" (topsoil clay % against subsoil clay %, with per-zone slopes); "Regression Residuals vs. Fitted Values, subsoil clay %" (fitted against residual); "GLS 2nd-order trend surface, subsoil clay %" (E against N).] Copyright © D G Rossiter 2008-2010, 2014, 2017. All rights reserved. Reproduction and dissemination of the work as a whole (not parts) freely permitted if this original copyright notice is included. Sale or placement on a web site where payment must be made to access this document is strictly prohibited. To adapt or translate please contact the author ([email protected]). Contents: 1 Introduction. 2 Example Data Set: 2.1 Loading the dataset; 2.2 A normalized database structure*. 3 Research questions. 4 Univariate Analysis: 4.1 Univariate Exploratory Data Analysis; 4.2 Point estimation; inference of the mean; 4.3 Answers. 5 Bivariate correlation and regression: 5.1 Conceptual issues in correlation and regression; 5.2 Bivariate Exploratory Data Analysis; 5.3 Bivariate Correlation Analysis; 5.4 Fitting a regression line

Introduction to Eviews 2.0

Introduction to EViews 2.0. Overview of product. EViews provides regression and forecasting tools on Windows computers. With EViews you can develop a statistical relation from your data and then use the relation to forecast future values of the data. Areas where EViews can be useful include: sales forecasting; cost analysis and forecasting; financial analysis; macroeconomic forecasting; simulation; scientific data analysis and evaluation. EViews is a new version of a set of tools for manipulating time series data originally developed in the Time Series Processor software for large computers. The immediate predecessor of EViews was MicroTSP, first released in 1981. Though EViews was developed by economists and most of its uses are in economics, there is nothing in its design that limits its usefulness to economic time series. Even quite large cross-section projects can be handled in EViews. The basic data object within EViews is the time series. Each series has a name, and you can request operations on all the observations just by mentioning the name of the series. EViews provides convenient visual ways to enter time series from the keyboard or from disk files, to create new series from existing ones, to display and print series, and to carry out statistical analysis of the relations among series. EViews uses the visual features of modern Windows software. You can use your mouse to guide the operation with standard Windows menus and dialogs. Results appear in windows and can be manipulated with standard Windows techniques. Alternatively, you may use EViews' powerful command language. You can enter and edit commands in the command window.

Gretl User's Guide

Gretl User's Guide. Gnu Regression, Econometrics and Time-series. Allin Cottrell, Department of Economics, Wake Forest University. Riccardo "Jack" Lucchetti, Dipartimento di Economia, Università Politecnica delle Marche. December, 2008. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation (see http://www.gnu.org/licenses/fdl.html). Contents: 1 Introduction: 1.1 Features at a glance; 1.2 Acknowledgements; 1.3 Installing the programs. Part I, Running the program. 2 Getting started: 2.1 Let's run a regression; 2.2 Estimation output; 2.3 The main window menus; 2.4 Keyboard shortcuts; 2.5 The gretl toolbar. 3 Modes of working: 3.1 Command scripts; 3.2 Saving script objects; 3.3 The gretl console; 3.4 The Session concept. 4 Data files: 4.1 Native format; 4.2 Other data file formats; 4.3 Binary databases