Political-Economic Trends Around the World

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Shanghai, China's Capital of Modernity

SHANGHAI, CHINA’S CAPITAL OF MODERNITY: THE PRODUCTION OF SPACE AND URBAN EXPERIENCE OF WORLD EXPO 2010 by GARY PUI FUNG WONG A thesis submitted to The University of Birmingham for the degree of DOCTOR OF PHILOSOPHY School of Government and Society Department of Political Science and International Studies The University of Birmingham February 2014 University of Birmingham Research Archive e-theses repository This unpublished thesis/dissertation is copyright of the author and/or third parties. The intellectual property rights of the author or third parties in respect of this work are as defined by The Copyright Designs and Patents Act 1988 or as modified by any successor legislation. Any use made of information contained in this thesis/dissertation must be in accordance with that legislation and must be properly acknowledged. Further distribution or reproduction in any format is prohibited without the permission of the copyright holder. ABSTRACT This thesis examines Shanghai’s urbanisation by applying Henri Lefebvre’s theories of the production of space and everyday life. A review of Lefebvre’s theories indicates that each mode of production produces its own space. Capitalism is perpetuated by producing new space and commodifying everyday life. Applying Lefebvre’s regressive-progressive method as a methodological framework, this thesis periodises Shanghai’s history into the ‘semi-feudal, semi-colonial era’, ‘socialist reform era’ and ‘post-socialist reform era’. The Shanghai World Exposition 2010 was chosen as a case study to exemplify how urbanisation shaped urban experience. Empirical data was collected through semi-structured interviews. This thesis argues that Shanghai developed a ‘state-led/-participation mode of production’.

English Diplomat, Commissioner, Chinese Maritime Customs. Biography: 1901, James Acheson Is Consul at the English Consulate in Qiongzhou

Report Title

Acheson, James (ca. 1901) : English diplomat, Commissioner, Chinese Maritime Customs. Biography: 1901 James Acheson is consul at the English consulate in Qiongzhou. [Qing1]

Aglen, Francis Arthur = Aglen, Francis Arthur Sir (Scarborough, Yorkshire 1869-1932 Spital Perthshire) : Official. Biography: 1888 Francis Arthur Aglen arrives in Beijing. [ODNB] 1888-1894 Francis Arthur Aglen works as an assistant for the Chinese Maritime Customs Service in Beijing, Xiamen (Fujian), Guangzhou (Guangdong) and Tianjin. [CMC1,ODNB] 1894-1896 Francis Arthur Aglen is Deputy Commissioner of the Inspectorate of the Chinese Maritime Customs Service in Beijing. [CMC1] 1899-1903 Francis Arthur Aglen is Commissioner of the Chinese Maritime Customs Service in Nanjing. [ODNB,CMC1] 1900 Francis Arthur Aglen is Inspector General of the Chinese Maritime Customs Service in Shanghai. [ODNB] 1904-1906 Francis Arthur Aglen is Chief Secretary of the Chinese Maritime Customs Service in Beijing. [CMC1] 1907-1910 Francis Arthur Aglen is Commissioner of the Chinese Maritime Customs Service in Hankou (Hubei). [CMC1] 1910-1927 Francis Arthur Aglen is first Deputy Inspector General, then Inspector General of the Chinese Maritime Customs Service in Beijing. [ODNB,CMC1]

Almack, William (1811-1843) : English tea merchant. Bibliography: Author. 1837 Almack, William. A journey to China from London in a sailing vessel in 1837. [Voyage on the Anna Robinson, Opium War, Shanghai, Hong Kong]. [Manuscript, Cambridge University Library].

Alton, John Maurice d' (Liverpool, before 1883) : Inspector, Chinese Maritime Customs. Biography: 1883 John Maurice d'Alton arrives in China and serves in the Chinese navy during the Sino-French War. [Who2] 1885-1921 John Maurice d'Alton is Chief Inspector of the Chinese Maritime Customs Service in Nanjing.

Joseph Stalin Revolutionary, Politician, Generalissimus and Dictator

Military Despatches Vol 34 April 2020. For the military enthusiast.

Flip-flop Generals that switch sides. Surviving the Arctic convoys: 93 year WWII veteran tells his story. Joseph Stalin: Revolutionary, politician, Generalissimus and dictator. Aarhus Air Raid: RAF Mosquitos destroy Gestapo headquarters.

CONTENTS April 2020

Click on any video below to view.

How much do you know about movie theme songs? Take our quiz and find out.

Hipe’s Wouter de Goede interviews former 28’s gang boss David Williams.

The old South African Defence Force used a mixture of English, Afrikaans, slang and techno-speak that few outside the military could hope to understand. Some of the terms were humorous, some were clever, while others were downright crude.

Russian Special Forces

Part of Hipe’s “On the couch” series, this is an interview with one of author Herman Charles Bosman’s most famous characters, Oom Schalk Lourens.

A taxi driver was shot dead in an ongoing war between rival taxi

Hipe spent time in Hanover Park, an area plagued with gang

Features

A matter of survival: This month we continue with our look at fish and fishing for survival.

Ten generals that switched sides: Imagine you’re a soldier heading into battle under the leadership of a general who, until very recently, had been trying very hard to kill you. How much faith and trust would you have in a leader like that?

Ranks: This month we look at the Army of the Republic of Vietnam (ARVN), the South Vietnamese army.

Social media - Soldier’s menace: These days nearly everyone has a smart phone, laptop or PC with access to the Internet and to social media.

Quiz

Projecting Bolshevik Unity, Ritualizing Party Debate: the Thirteenth Party Congress, 1924

Acta Slavica Iaponica, Tomus 31, pp. 55‒76 Projecting Bolshevik Unity, Ritualizing Party Debate: The Thirteenth Party Congress, 1924 TAKIGUCHI Junya The Thirteenth Congress of the Bolshevik Party – the first Party congress since V. I. Lenin’s death – was convened in Moscow in May 1924, thirteen months after the Twelfth Congress.1 The Congress promoted an atmosphere of mourning by adorning the auditorium of the Bol’shoi Palace in the Kremlin (the venue of the plenary session) with portraits of Lenin. The accompanying publicity emphasized how the great Party leader had uncompromisingly worked for the Soviet state throughout his life.2 In preparing the Congress reports, party officials scrutinized Lenin’s writings and speeches, and the Central Committee reports constantly referred to “what Lenin said” in order to represent itself as the legitimate heir of Leninism.3 Nearly all speakers representing the central Party institutions mentioned Lenin’s name in their reports. Grigorii Zinoviev said in his opening speech that the Party ought to be united, and should be “based on Leninism.”4 However, the Thirteenth Congress was not merely one of grief and condolence. The Bolshevik leadership orchestrated the Congress to project Party unity, to propagate the achievements and the glorious future of the Soviet government, and to mobilize Soviet citizens into Bolshevik state-building. There were few attempts to inject this kind of drama before 1924 when the Party congress instead acted as a genuine debating forum with little propaganda.5 The Thirteenth Congress hence represented a new departure in terms of the structure, function and significance in the history of the congress during the early Soviet era.

The Historical Legacy for Contemporary Russian Foreign Policy

CHAPTER 1 The Historical Legacy for Contemporary Russian Foreign Policy No other country in the world is a global power simply by virtue of geography.1 The growth of Russia from an isolated, backward East Slavic principality into a continental Eurasian empire meant that Russian foreign policy had to engage with many of the world’s principal centers of power. A Russian official trying to chart the country’s foreign policy in the 18th century, for instance, would have to be concerned simultaneously about the position and actions of the Manchu Empire in China, the Persian and Ottoman Empires (and their respective vassals and subordinate allies), as well as all of the Great Powers in Europe, including Austria, Prussia, France, Britain, Holland, and Sweden. This geographic reality laid the basis for a Russian tradition of a “multivector” foreign policy, with leaders, at different points, emphasizing the importance of relations with different parts of the world. For instance, during the 17th century, fully half of the departments of the Posolskii Prikaz (the Ambassadors’ Office) of the Muscovite state dealt with Russia’s neighbors to the south and east; in the next century, three out of the four departments of the College of International Affairs (the successor agency in the imperial government) covered different regions of Europe.2 Russian history thus bequeaths to the current government a variety of options in terms of how to frame the country’s international orientation. To some extent, the choices open to Russia today are rooted in the legacies of past decisions.

DSA's Options and the Socialist International DSA Internationalism

DSA’s Options and the Socialist International DSA Internationalism Committee April 2017 At the last national convention DSA committed itself to holding an organizational discussion on its relationship to the Socialist International leading up to the 2017 convention. The structure of this mandatory discussion was left to DSA’s internationalism committee. The following sheet contains information on the Socialist International, DSA’s involvement with it, the options facing DSA, and arguments in favor of downgrading to observer status and withdrawing completely. A. History of the Socialist International and DSA The Socialist International (SI) has its political and intellectual origins in the nineteenth-century socialist movement. Its predecessors were the First International (1864-1876), of which Karl Marx was a leader, and the Second International (1889-1916). In the period of the Second International, the great socialist parties of Europe (particularly the British Labour Party, German Social Democratic Party, and the French Section of the Workers International) formed and became major electoral forces in their countries, advancing ideologies heavily influenced by Marx and political programs calling for the abolition of capitalism and the creation of new systems of worker democracy. The Second International collapsed when nearly all of its member parties, breaking their promise not to go to war against other working people, rallied to their respective governments in the First World War. The Socialist Party of America (SPA), DSA’s predecessor, was one of the very few member parties to oppose the war. Many of the factions that opposed the war and supported the Bolshevik Revolution came together to form the Communist International in 1919, which over the course of the 1920s became dominated by Moscow and by the 1930s had become a tool of Soviet foreign policy and a purveyor of Stalinist orthodoxy.

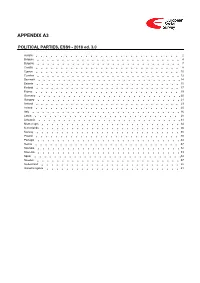

ESS9 Appendix A3 Political Parties Ed

APPENDIX A3 POLITICAL PARTIES, ESS9 - 2018 ed. 3.0

Contents: Austria, Belgium, Bulgaria, Croatia, Cyprus, Czechia, Denmark, Estonia, Finland, France, Germany, Hungary, Iceland, Ireland, Italy, Latvia, Lithuania, Montenegro, Netherlands, Norway, Poland, Portugal, Serbia, Slovakia, Slovenia, Spain, Sweden, Switzerland, United Kingdom

Version Notes, ESS9 Appendix A3 POLITICAL PARTIES

ESS9 edition 3.0 (published 10.12.20): Changes from previous edition: Additional countries: Denmark, Iceland.

ESS9 edition 2.0 (published 15.06.20): Changes from previous edition: Additional countries: Croatia, Latvia, Lithuania, Montenegro, Portugal, Slovakia, Spain, Sweden.

Austria

1. Political parties

Language used in data file: German

Year of last election: 2017

Official party names, English names/translation, and size in last election:

1. Sozialdemokratische Partei Österreichs (SPÖ) - Social Democratic Party of Austria - 26.9 %
2. Österreichische Volkspartei (ÖVP) - Austrian People's Party - 31.5 %
3. Freiheitliche Partei Österreichs (FPÖ) - Freedom Party of Austria - 26.0 %
4. Liste Peter Pilz (PILZ) - PILZ - 4.4 %
5. Die Grünen – Die Grüne Alternative (Grüne) - The Greens – The Green Alternative - 3.8 %
6. Kommunistische Partei Österreichs (KPÖ) - Communist Party of Austria - 0.8 %
7. NEOS – Das Neue Österreich und Liberales Forum (NEOS) - NEOS – The New Austria and Liberal Forum - 5.3 %
8. G!LT - Verein zur Förderung der Offenen Demokratie (GILT) - My Vote Counts! - 1.0 %

Description of political parties listed above:

1. The Social Democratic Party (Sozialdemokratische Partei Österreichs, or SPÖ) is a social democratic/center-left political party that was founded in 1888 as the Social Democratic Workers' Party (Sozialdemokratische Arbeiterpartei, or SDAP), when Victor Adler managed to unite the various opposing factions.

China's Economic Growth: Implications to the ASEAN (An Integrative Report)

Philippine APEC Study Center Network (PASCN) PASCN Discussion Paper No. 2001-01 China’s Economic Growth: Implications to the ASEAN (An Integrative Report) Ellen H. Palanca Ateneo de Manila University September 2001 The PASCN Discussion Paper Series constitutes studies that are preliminary and subject to further revisions and review. They are being circulated in a limited number of copies only for purposes of soliciting comments and suggestions for further refinements. The views and opinions expressed are those of the author(s) and do not necessarily reflect those of the Network. Not for quotation without permission from the author(s). For comments, suggestions or further inquiries, please contact: The PASCN Secretariat, Philippine Institute for Development Studies, NEDA sa Makati Building, 106 Amorsolo Street, Legaspi Village, Makati City, Philippines. Tel. Nos. 893-9588 and 892-5817 ABSTRACT The rise of China’s economy in the last couple of decades can be attributed to the favorable initial conditions, the market-oriented economic reforms, and good macroeconomic management in the nineties.

How Effective Is the Renminbi Devaluation on China's Trade

How Effective is the Renminbi Devaluation on China’s Trade Balance Zhaoyong Zhang, Edith Cowan University and Kiyotaka Sato, Yokohama National University Working Paper Series Vol. 2008-16 June 2008 The views expressed in this publication are those of the author(s) and do not necessarily reflect those of the Institute. No part of this article may be used or reproduced in any manner whatsoever without written permission except in the case of brief quotations embodied in articles and reviews. For information, please write to the Centre. The International Centre for the Study of East Asian Development, Kitakyushu Abstract The objective of this study is to contribute to the current discussion on the Renminbi (RMB) exchange rate by providing new evidence on China’s exchange rate policy and the impacts of RMB devaluation/revaluation on China’s output and trade balance. For a rigorous empirical examination, this research constructs a vector autoregression (VAR) model and employs the most recent econometric techniques to identify whether the Chinese economic system has become responsive to changes in the exchange rate after about three decades of reform. More specifically, we use a structural VAR technique to estimate impulse response functions and variance decompositions for China’s output and trade balance, and to determine how the fundamental macroeconomic shocks contribute to the fluctuations in the real exchange rate, and how output and trade account respond to the various identified shocks. This study will contribute to a better understanding of how far and how fast China’s reforms have transformed the economy into a market-oriented economy, and also to the recent discussion on China’s exchange rate policy.

An Analysis of the Appreciation of the Chinese Currency and Influences on China's Economy

University of Denver Digital Commons @ DU Electronic Theses and Dissertations Graduate Studies 1-1-2014 An Analysis of the Appreciation of the Chinese Currency and Influences on China’s Economy Lina Ma University of Denver Follow this and additional works at: https://digitalcommons.du.edu/etd Part of the Asian Studies Commons, Economic Theory Commons, and the International Economics Commons Recommended Citation Ma, Lina, "An Analysis of the Appreciation of the Chinese Currency and Influences on China's Economy" (2014). Electronic Theses and Dissertations. 408. https://digitalcommons.du.edu/etd/408 This Thesis is brought to you for free and open access by the Graduate Studies at Digital Commons @ DU. It has been accepted for inclusion in Electronic Theses and Dissertations by an authorized administrator of Digital Commons @ DU. For more information, please contact [email protected],[email protected]. A Thesis Presented to the Faculty of Social Sciences University of Denver In Partial Fulfillment of the Requirements for the Degree Master of Arts By Lina Ma November 2014 Advisor: Tracy Mott ABSTRACT In recent years, China’s economic development has had a growing impact on the global economy. The Chinese currency has continued to appreciate since 2005, which has had both positive and negative effects on China’s economy. The Chinese government uses monetary policy to control inflationary pressure, which could work counter to China’s exchange rate policy.

List of Members

Delegation to the Parliamentary Assembly of the Union for the Mediterranean

Members (list dated 24/09/2021)

- David Maria SASSOLI; Chair; Group of the Progressive Alliance of Socialists and Democrats in the European Parliament; Italy; Partito Democratico
- Asim ADEMOV; Member; Group of the European People's Party (Christian Democrats); Bulgaria; Citizens for European Development of Bulgaria
- Alex AGIUS SALIBA; Member; Group of the Progressive Alliance of Socialists and Democrats in the European Parliament; Malta; Partit Laburista
- François ALFONSI; Member; Group of the Greens/European Free Alliance; France; Régions et Peuples Solidaires
- Malik AZMANI; Member; Renew Europe Group; Netherlands; Volkspartij voor Vrijheid en Democratie
- Nicolas BAY; Member; Identity and Democracy Group; France; Rassemblement national
- Tiziana BEGHIN; Member; Non-attached Members; Italy; Movimento 5 Stelle
- François-Xavier BELLAMY; Member; Group of the European People's Party (Christian Democrats); France; Les Républicains
- Sergio BERLATO; Member; European Conservatives and Reformists Group; Italy; Fratelli d'Italia
- Manuel BOMPARD; Member; The Left group in the European Parliament - GUE/NGL; France; La France Insoumise
- Sylvie BRUNET; Member; Renew Europe Group; France; Mouvement Démocrate
- Jorge BUXADÉ VILLALBA; Member; European Conservatives and Reformists Group; Spain; VOX
- Catherine CHABAUD; Member; Renew Europe Group; France; Mouvement Démocrate
- Nathalie COLIN-OESTERLÉ; Member; Group of the European People's Party (Christian Democrats); France; Les centristes
- Gilbert COLLARD; Member; Identity and Democracy Group; France; Rassemblement national

Bajo El Signo Del Escorpión

Por Juri Lina Bajo el Signo del Escorpión pg. 1 de 360 - 27 de septiembre de 2008 Por Juri Lina pg. 2 de 360 - 27 de septiembre de 2008 Por Juri Lina PRESENTACION Juri Lina's Book "Under the Sign of the Scorpion" is a tremendously important book, self-published in the English language in Sweden by the courageous author. Jüri Lina, has been banned through out the U.S.A. and Canada. Publishers and bookstores alike are so frightened by the subject matter they shrink away and hide. But now, braving government disapproval and persecution by groups that do not want the documented information in Under the Sign of the Scorpion to see the light of day, Texe Marrs and Power of Prophecy are pleased to offer Mr. Lina's outstanding book. Lina's book reveals what the secret societies and the authorities are desperate to keep hidden—how Jewish Illuminati revolutionaries in the United States, Britain, and Germany—including Marx, Lenin, Trotsky, and Stalin—conspired to overthrow the Czar of Russia. It details also how these monsters succeeded in bringing the bloody reign of Illuministic Communism to the Soviet Empire and to half the world's population. Under the Sign of the Scorpion reveals the whole, sinister, previously untold story of how a tiny band of Masonic Jewish thugs inspired by Satan, funded by Illuminati bigwigs, and emboldened by their Talmudic hatred were able to starve, bludgeon, imprison and massacre over 30 million human victims with millions more suffering in Soviet Gulag concentration camps. Fuhrer Adolf Hitler got his idea for Nazi concentration camps from these same Bolshevik Communist butchers.