HPE ProLiant DL380 Gen10 Server User Guide

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

New CSC Computing Resources

New CSC computing resources Atte Sillanpää, Nino Runeberg CSC – IT Center for Science Ltd. Outline CSC at a glance New Kajaani Data Centre Finland’s new supercomputers – Sisu (Cray XC30) – Taito (HP cluster) CSC resources available for researchers CSC presentation 2 CSC’s Services Funet Services Computing Services Universities Application Services Polytechnics Ministries Data Services for Science and Culture Public sector Information Research centers Management Services Companies FUNET FUNET and Data services – Connections to all higher education institutions in Finland and for 37 state research institutes and other organizations – Network Services and Light paths – Network Security – Funet CERT – eduroam – wireless network roaming – Haka-identity Management – Campus Support – The NORDUnet network Data services – Digital Preservation and Data for Research Data for Research (TTA), National Digital Library (KDK) International collaboration via EU projects (EUDAT, APARSEN, ODE, SIM4RDM) – Database and information services Paituli: GIS service Nic.funet.fi – freely distributable files with FTP since 1990 CSC Stream Database administration services – Memory organizations (Finnish university and polytechnics libraries, Finnish National Audiovisual Archive, Finnish National Archives, Finnish National Gallery) 4 Current HPC System Environment

| Name | Louhi | Vuori |
|---|---|---|
| Type | Cray XT4/5 | HP Cluster |
| DOB | 2007 | 2010 |
| Nodes | 1864 | 304 |
| CPU Cores | 10864 | 3648 |
| Performance | ~110 TFlop/s | 34 TF |
| Total memory | ~11 TB | 5 TB |
| Interconnect | Cray SeaStar, 3D Torus | QDR IB, Fat tree |

CSC -

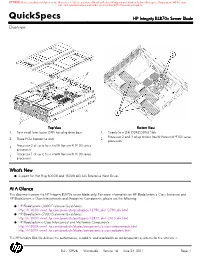

QuickSpecs HP Integrity BL870c Server Blade Overview

RETIRED: Retired products sold prior to the November 1, 2015 separation of Hewlett-Packard Company into Hewlett Packard Enterprise Company and HP Inc. may have older product names and model numbers that differ from current models. QuickSpecs HP Integrity BL870c Server Blade Overview Top View Bottom View 1. Four small form factor (SFF) hot-plug drive bays 1. Twenty-four (24) DDR2 DIMM Slots Processor 2 and 3 of up to four Intel® Itanium® 9100 series 2. Three PCIe Mezzanine slots 2. processors Processor 0 of up to four Intel® Itanium® 9100 series 3. processors Processor 1 of up to four Intel® Itanium® 9100 series 4. processors What's New Support for Hot Plug 600GB and 450GB 6G SAS Enterprise Hard Drives At A Glance This document covers the HP Integrity BL870c server blade only. For more information on HP BladeSystem c-Class Enclosure and HP BladeSystem c-Class Interconnects and Mezzanine Components, please see the following: HP BladeSystem c3000 Enclosure QuickSpecs: http://h18000.www1.hp.com/products/quickspecs/12790_div/12790_div.html HP BladeSystem c7000 Enclosure QuickSpecs: http://h18000.www1.hp.com/products/quickspecs/12810_div/12810_div.html HP BladeSystem c-Class Interconnect and Mezzanine Components: http://h18004.www1.hp.com/products/blades/components/c-class-interconnects.html http://h18004.www1.hp.com/products/blades/components/c-class-adapters.html The HP Integrity BL870c delivers the performance, reliability, and availability on 64-bit operating systems for the ultimate in DA - 12926 Worldwide — Version 16 — June 24, 2011 Page 1 RETIRED: Retired products sold prior to the November 1, 2015 separation of Hewlett-Packard Company into Hewlett Packard Enterprise Company and HP Inc. -

HP/NVIDIA Solutions for HPC Compute and Visualization

HP Update Bill Mannel VP/GM HPC & Big Data Business Unit Apollo Servers © Copyright 2015 Hewlett-Packard Development Company, L.P. The information contained herein is subject to change without notice. The most exciting shifts of our time are underway Security Mobility Cloud Big Data Time to revenue is critical Decisions Making IT critical Business needs must be rapid to business success happen anywhere Change is constant …for billion By 2020 billion 30 devices 8 people trillion GB million 40 data 10 mobile apps 2 © Copyright 2015 Hewlett-Packard Development Company, L.P. The information contained herein is subject to change without notice. HP’s compute portfolio for better IT service delivery Software-defined and cloud-ready API HP OneView HP Helion OpenStack RESTful APIs WorkloadWorkload-optimized Optimized Mission-critical Virtualized &cloud Core business Big Data, HPC, SP environments workloads applications & web scalability Workloads HP HP HP Apollo HP HP ProLiant HP Integrity HP Integrity HP BladeSystem HP HP HP ProLiant SL Moonshot Family Cloudline scale-up blades & Superdome NonStop MicroServer ProLiant ML ProLiant DL Density and efficiency Lowest Cost Convergence Intelligence Availability to scale rapidly built to Scale for continuous business to accelerate IT service delivery to increase productivity Converged Converged network Converged management Converged storage Common modular HP Networking HP OneView HP StoreVirtual VSA architecture Global support and services | Best-in-class partnerships | ConvergedSystem 3 © Copyright 2015 Hewlett-Packard Development Company, L.P. The information contained herein is subject to change without notice. Hyperscale compute grows 10X faster than the total market HPC is a strategic growth market for HP Traditional Private Cloud HPC Service Provider $50 $40 36% $30 50+% $B $20 $10 $0 2013 2014 2015 2016 2017 4 © Copyright 2015 Hewlett-Packard Development Company, L.P. -

HP Integrity NonStop Operations Guide for H-Series and J-Series RVUs

HP Integrity NonStop Operations Guide for H-Series and J-Series RVUs HP Part Number: 529869-023 Published: February 2014 Edition: J06.03 and subsequent J-series RVUs and H06.13 and subsequent H-series RVUs © Copyright 2014 Hewlett-Packard Development Company, L.P. Legal Notice Confidential computer software. Valid license from HP required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor’s standard commercial license. The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein. Export of the information contained in this publication may require authorization from the U.S. Department of Commerce. Microsoft, Windows, and Windows NT are U.S. registered trademarks of Microsoft Corporation. Intel, Pentium, and Celeron are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries. Java® is a registered trademark of Oracle and/or its affiliates. Motif, OSF/1, Motif, OSF/1, UNIX, X/Open, and the "X" device are registered trademarks, and IT DialTone and The Open Group are trademarks of The Open Group in the U.S. and other countries. Open Software Foundation, OSF, the OSF logo, OSF/1, OSF/Motif, and Motif are trademarks of the Open Software Foundation, Inc. -

Microsoft® Dynamics CRM: HP Solution Brief for Midsize

Microsoft® Dynamics CRM HP Solution brief for midsize businesses To compete effectively and drive a profitable business, midsize companies such as yours require a tangible return on their technology investments. With limited IT resources, you must take a pragmatic, project-oriented approach. In short, you need a fast payback, solutions that are easy to deploy and manage, and ones that are both affordable and reliable. As you invest in customer relationship management (CRM) solutions, HP makes sure that you get the most out of them with an application infrastructure you can count on. With our approach, you know what to expect: configurations on HP servers, storage, and software designed for midsize companies, optimized to meet your need for higher levels of business continuity, security, and operational efficiency. Microsoft Dynamics CRM delivers a fast, flexible, and affordable solution that helps you create consistent and measurable improvements in your customer relationships. By helping midsize businesses reduce risk, cut costs, and generate growth, HP and Microsoft, together with our partners, provide you with outstanding technology for better business outcomes. You can count on HP, Microsoft, and local technology partners, together on the frontline, to deliver optimized solutions that are reliable and ready to meet your unique business needs. You can have the confidence that HP, Microsoft, and our partners will continue to support your business each and every step of the way. We deliver uniquely valuable technology experiences for your business through impressive technologies, a complete portfolio of total care services, and award-winning support. Microsoft Dynamics CRM application architecture Microsoft Dynamics CRM has a multi-tier architecture consisting of a Web-based client, a highly configurable business logic layer, and a back-end database. Solution description Microsoft Dynamics CRM provides all the tools and -

NonStop Product Offerings and Roadmap

NonStop Product Offerings and Roadmap Iain Liston-Brown (EMEA NED Presales Consulting) 8th May, 2013 © Copyright 2013 Hewlett-Packard Development Company, L.P. The information contained herein is subject to change without notice. Forward-looking statements This is a rolling (up to three year) Roadmap and is subject to change without notice. This document contains forward looking statements regarding future operations, product development, product capabilities and availability dates. This information is subject to substantial uncertainties and is subject to change at any time without prior notification. Statements contained in this document concerning these matters only reflect Hewlett Packard's predictions and / or expectations as of the date of this document and actual results and future plans of Hewlett-Packard may differ significantly as a result of, among other things, changes in product strategy resulting from technological, internal corporate, market and other changes. This is not a commitment to deliver any material, code or functionality and should not be relied upon in making purchasing decisions. 2 © Copyright 2013 Hewlett-Packard Development Company, L.P. The information contained herein is subject to change without notice. HP confidential information This is a rolling (up to three year) Roadmap and is subject to change without notice. This Roadmap contains HP Confidential Information. If you have a valid Confidential Disclosure Agreement with HP, disclosure of the Roadmap is subject to that CDA. If not, it is subject to the following terms: for a period of 3 years after the date of disclosure, you may use the Roadmap solely for the purpose of evaluating purchase decisions from HP and use a reasonable standard of care to prevent disclosures. -

More Robust So That They Can Be Used Alongside Or Instead of HP-UX

the Availability Digest www.availabilitydigest.com HP’s Project Odyssey – Migrating Mission Critical to x86 March 2012 HP’s Enterprise Servers, Storage and Networking (ESSN) Business Unit markets two lines of servers – ProLiant servers (acquired from Compaq) and Integrity servers. ProLiant servers are based on the Intel x86 Xeon processor and support Windows and Linux operating systems. Integrity servers are Itanium-based and support HP mission-critical operating systems – HP-UX, NonStop, and OpenVMS. Both ProLiant and Integrity servers are available either as blades for HP’s BladeSystem or as rack-mounted servers. Integrity servers are also available as Superdome 2 blades. Within ESSN, the Business Critical Systems Division (BCS) focuses on delivering mission critical solutions to the market including Integrity, NonStop and scale-up x86 solutions (ProLiant DL980). On November 22, 2011, HP announced1 a major new initiative dubbed “Project Odyssey.” It is intended to extend the mission-critical features of HP-UX from Itanium blades to Windows and Linux x86 blades. Project Odyssey raises many questions for those involved with HP’s current highly available operating systems – HP-UX, NonStop, and OpenVMS. In this article, these concerns are explored. Martin Fink, senior vice president and general manager of Business Critical Systems at HP, gladly offered his insights in a one-on-one interview. His comments, interspersed throughout the article, are significantly abridged but hopefully capture his intent.2 Project Odyssey The motivation for Project Odyssey was expressed by Martin in HP’s press release: “Clients have been asking us to expand the mission-critical experience that is delivered today with HP-UX on Integrity to an x86-based infrastructure. -

HP NC360m Dual Port 1GbE BL-c Adapter

QuickSpecs HP NC360m Dual Port 1GbE BL-c Adapter Overview The HP NC360m is a ProLiant c-Class BladeSystem network adapter providing two Gigabit Ethernet ports on a single mezzanine card that draws a maximum of 1.6 watts. With the addition of the NC360m, ProLiant c-Class customers now have the choice of an HP dual port network adapter that uses either an Intel or Broadcom controller. The NC360m is designed for applications requiring more Gigabit Ethernet ports than those provided on the server. It is ideal for virtualization, security, server consolidation, network segmentation, and other BladeSystem applications requiring additional network port density. Tested and proven to meet demanding BladeSystem standards, the NC360m ships with the advanced server features that ProLiant customers have come to expect, such as support for failover and load balancing, TCP/IP checksum offloading, large send offloading, Wake-on-LAN, jumbo frames, VLAN tagging, and much more. HP NC360m Dual Port 1GbE BL-c Adapter What's New Support for HP ProLiant Generation 7 blade servers Models HP NC360m Dual Port 1GbE BL-c Adapter 445978-B21 DA - 12797 North America, Version 18, April 12, 2013 Kit Contents 1. HP NC360m Dual Port 1GbE BL-c Adapter 2. Quick install card 3. Product warranty statement 4. Drivers, user guide, and utilities via http://www.hp.com Compatibility HP ProLiant -

HPE Integrity BL860c i6 Server Blade Digital Data Sheet

Digital data sheet HPE Integrity BL860c i6 Server Blade Integrity Server Blades Overview Do you need a high-performance enterprise blade server that is designed for database, Java, and technical computing applications? The HPE Integrity BL860c i6 Server Blade is a two-socket full-height blade featuring the Intel® Itanium® processor 9700 series supported by up to 384 GB memory (24 DIMM slots), with four HPE FlexFabric Converged Network Adapter (CNA) ports standard. The HPE BL860c i6 Server Blade also features the unique HPE Blade Link technology which combines multiple blades to create two, four and eight socket systems providing increased scalability. The HPE Integrity BL860c i6 Server Blade can coexist with HPE ProLiant Server Blades within the HPE BladeSystem c-Class Enclosure. Ideal replacement for legacy Integrity products like HPE BL860c and HPE BL860c i2 servers. What's new · Supports the latest Intel Itanium 9700 series processor for higher performance. · Supports the latest HP-UX 2017 Operating Environment Update Release which has enhancements in data management, virtualization, high availability, security and private cloud management. · Supports the latest HPE Storage XP7 and HPE 3PAR StoreServ All Flash arrays for higher performance and lower TCO. Features Improved Platform Value with New Innovations in HP-UX and HPE Storage Extend mission-critical computing of the HPE Integrity and HP-UX platform with support for the new i6 servers through at least 2025. Take advantage of recent HP-UX innovations that include - Veritas 6.1 File system support, Online HP-UX vPAR migration, Smart Quorum with Serviceguard and OpenStack® support for efficient data management and high availability. -

HP Integrity Systems Family Guide HP Integrity Systems Family

HARNESS IT with a mission-critical converged infrastructure. HP Integrity systems Family guide HP Integrity systems family Table of contents: Build an IT environment that delivers better business results; Mission-critical converged infrastructure: the foundation of the next decade of mission-critical computing; HP Integrity systems deliver modern mission-critical results; Rackmount Integrity servers and Integrity NonStop servers complete the line; HP-UX 11i v3: the operating system of the HP mission-critical Converged Infrastructure; HP CloudSystem Matrix: the private cloud platform for HP mission-critical Converged Infrastructure; Complement your Integrity systems with additional software, storage, services, and system options; HP Integrity server blades; HP Integrity rackmount servers: entry class; HP Integrity rackmount servers: midrange; HP Integrity Superdome: high end -

HP Converged Infrastructure Unleash Your Potential

HP CONVERGED INFRASTRUCTURE UNLEASH YOUR POTENTIAL Leslie Ong General Manager Enterprise Servers, Storage and Networks HP Enterprise Business ©2010 Hewlett-Packard Development Company, L.P. The information contained herein is subject to change without notice. Captive in Business operations and innovation throttled 70% maintenance to 30% Netra SUN IBM SPARC BladeCenter Solaris System x vSphere JAVA AIX SAP ERP VirtualBox Director EXCHANGE SERVER ORACLE DATABASE XenServer Mainframe JBOSS COMVERSE DB2 LOTUS SONAS VirusScan NETAPP RHEL FAS SQL SERVER Veritas SnapManager SAS JD EDWARDS SIEBEL SANscreen WEBSPHERE NetWare Windows Server SnapVault HP ProLiant SnapMirror CISCO DELL Integrity MYSQL Catalyst BladeSystem Matrix VMware ESX IOS EMC PowerEdge Nexus Celerra PowerVault StorageWorks PEOPLESOFT UCS Connectrix SLES TIVOLI VFrame Documentum EqualLogic SYBASE Ionix FUJITSU IronPort HITACHI PowerConnect ScanSafe Symmetrix USP-V PRIMERGY ETERNUS CLARiiON AMS SMS PRIMEQUEST BladeSymphony UDS SHAREPOINT THE DATA CENTER OF THE FUTURE will be built on a Converged Infrastructure HP CONVERGED INFRASTRUCTURE Storage Servers Power & cooling Network Management software REQUIREMENTS to BUILD a CONVERGED INFRASTRUCTURE HP CONVERGED INFRASTRUCTURE VIRTUALIZED RESILIENT OPEN ORCHESTRATED MODULAR THE ARCHITECTURE OF the CONVERGED INFRASTRUCTURE Virtual Data Center Flex Matrix Resource Smart Grid Fabric Operating Pool Environment Virtualized Intelligent Wire-once, Enables compute, energy dynamic shared-service memory, management assembly, management storage -

HPE Integrity BL860c i6 Server Blade Overview

QuickSpecs HPE Integrity BL860c i6 Server Blade Overview HPE Integrity BL860c i6 Server Blade 1. 3 x PCIe Mezzanine Slots 2. 24 x DDR3 DIMM Slots 3. 2 x SFF SAS HDDs, hot-swap bays 4. Two Intel® Itanium® processor 9700 series sockets What's New • Intel® Itanium® processor 9700 series 8-core and 4-core processors • Higher Frequency and Low Voltage PC3L-12800 Registered CAS 11 Memory DIMMs • Supports the HP-UX 11iv3 2018 OEUR which has significant enhancements in data management (Veritas 6.1 default installed), Virtualization (un-capped vPars warning), high availability (Serviceguard SMS certified with Oracle RAC 12cR2), Java and Open Source (CIFS, BIND, Apache, PHP updates), Security (deactivate online CPU migration in SRP containers) and manageability (HP-UX in private cloud environments through HPE Helion OpenStack) • Support for newer networking and storage solutions, such as the HPE 10GbE Pass-Thru Module II, 3PAR 9450, MSA 2050 and MSA 2052, 12Gb/s SAS adapter and Pure Storage FlashArray//M™ • Supports the latest HPE Storage arrays – XP7 and 3PAR 8000/2000 All Flash Storage to significantly boost performance and lower TCO • VSI OpenVMS V8.4-2L1 supports BL860c i6 servers. It includes a new release of OpenSSL and Secure Web Server (SWS) for OpenVMS based on Apache HTTP Server Version 2.4-12 At A Glance This document covers the HPE Integrity BL860c i6 server blade only. For more information on HPE BladeSystem c-Class Enclosures and HPE BladeSystem c-Class Interconnect and Mezzanine Components, please see the following: • HPE BladeSystem