Semi-Supervised Pattern Recognition and Machine Learning for Eye-Tracking

Total pages: 16

File type: PDF, Size: 1020 Kb

Recommended publications

-

Quarterly News·Letter

the Quarterly news·letter volume lxxix · number 2 · spring 2014 A Brief Editorial Manifesto by Peter Rutledge Koch What is Fine Printing Anyway? by Peter Rutledge Koch Forthcoming from the Publications Committee by Jennifer Sime Report from the Toronto Book Fair by Bruce Whiteman Review by Crispin Elsted Like a Moth to a Flame by Bo Wreden Southern California Sightings by Carolee Campbell News from the Library by Henry Snyder News & Notes Letter to the Editor New Members published for its members by the book club of california the book club of california is a non-profit membership corporation founded in 1912. A Brief Editorial Manifesto Based on One It supports the art of fine printing related to the history and literature of California and the Hundred Years of Tradition With a Few Minor western states of America through research, publishing, public programs, and exhibitions. Suggestions To Account for Changes in Our The Club is limited to 1,250 members, and membership in the Club is open to all. Annual renewals are due by January 1 of every year. Memberships are: Regular, $95; Sustaining, $150; Patron, Perception of Fine Printing in the Real West $250; Sponsor, $500; Benefactor, $1,000; Apprentice, $35; and Student, $25. All members by Peter Rutledge Koch receive the Quarterly News-Letter and, except for Apprentice and Student members, the current keepsake. All members have the privilege—but not the obligation—of buying Club publications, which are limited, as a rule, to one copy per member. All members may purchase extra copies of keepsakes or QN-Ls, when available. -

Race Nears Finish Line

City d ing too much for A-B? It's true, some say 3 ~ Community Newspaper Company www.allstcmbrightontab.com FRIDAY, NOVEMBER Vol. 10, No. 13 46 Pages 3 Sections 75¢ ELECTIO --.....-.......L ME 1cH OD· Race nears finish line By Audltl Guha STAFF WRITER Tuesday is around the comer, wh~n voters will decide who gets to wear the District City Councilor hat inAllston-Brighton. Both canrudates hope the . weilther will cooperate and people wi 11 come out and vote on Election Day. Local polls will be open from ' 8 a,m. to 8 p.m. Incumbent Jerry McDermott of Brighton is busy with his cam paign and said he is "cautiously optimistic." "I've been out knocking on d<>ors, and my volunteers are on the phone and sending out mail e~i" he said. '1t's been a good run, I've lost 10 pounds." His challenger, Area Planning Action Council Director Paul Creighton of Allston, who has been clamoring for more debates, said he believes he ran a strong campaign and is hoping for the lx>:st. "I'm pumped; we are coming down tbestretch and come on it, as tht•y say at the racetrack," he said. "I feel we've been very well re ceived. There are a lot of problems in the community that aren't being rAJ 'TO 8'1 DAVID GORDON A headless motorcyclist, who would not give hi name, made an appearance for the fifth straight Halloween, pfovldlng the children of North Allston with a spooky reminder addressed, and we really do need a of the need to always wear a helmet. -

Awards 10X Diamond Album March // 3/1/17 - 3/31/17

RIAA GOLD & PLATINUM NICKELBACK//ALL THE RIGHT REASONS AWARDS 10X DIAMOND ALBUM MARCH // 3/1/17 - 3/31/17 KANYE WEST//THE LIFE OF PABLO PLATINUM ALBUM In March 2017, RIAA certified 119 Digital Single Awards and THE CHAINSMOKERS//COLLAGE EP 21 Album Awards. All RIAA Awards PLATINUM ALBUM dating back to 1958, plus top tallies for your favorite artists, are available ED SHEERAN//÷ at riaa.com/gold-platinum! GOLD ALBUM JOSH TURNER//HAYWIRE SONGS GOLD ALBUM www.riaa.com //// //// GOLD & PLATINUM AWARDS MARCH // 3/1/17 - 3/31/17 MULTI PLATINUM SINGLE // 12 Cert Date// Title// Artist// Genre// Label// Plat Level// Rel. Date// R&B/ 3/30/2017 Caroline Amine Republic Records 8/26/2016 Hip Hop Ariana Grande 3/9/2017 Side To Side Pop Republic Records 5/20/2016 Feat. Nicki Minaj 3/3/2017 24K Magic Bruno Mars Pop Atlantic Records 10/7/2016 3/2/2017 Shape Of You Ed Sheeran Pop Atlantic Records 1/6/2017 R&B/ 3/30/2017 All The Way Up Fat Joe & Remy Ma Rng/Empire 3/2/2016 Hip Hop I Hate U, I Love U 3/1/2017 Gnash Pop :): 8/7/2015 (Feat. Olivia O’brien) R&B/ 3/22/2017 Ride SoMo Republic Records 11/19/2013 Hip Hop 3/27/2017 Closer The Chainsmokers Pop Columbia 7/29/2016 3/27/2017 Closer The Chainsmokers Pop Columbia 7/29/2016 3/27/2017 Closer The Chainsmokers Pop Columbia 7/29/2016 R&B/ 3/9/2017 Starboy The Weeknd Republic Records/Xo Records 7/29/2016 Hip Hop R&B/ 3/9/2017 Starboy The Weeknd Republic Records/Xo Records 7/29/2016 Hip Hop www.riaa.com // // GOLD & PLATINUM AWARDS MARCH // 3/1/17 - 3/31/17 PLATINUM SINGLE // 16 Cert Date// Title// Artist// Genre// Label// Platinum// Rel. -

Galatea's Daughters: Dolls, Female Identity and the Material Imagination

Galatea‘s Daughters: Dolls, Female Identity and the Material Imagination in Victorian Literature and Culture Dissertation Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in the Graduate School of The Ohio State University By Maria Eugenia Gonzalez-Posse, M.A. Graduate Program in English The Ohio State University 2012 Dissertation Committee: David G. Riede, Advisor Jill Galvan Clare A. Simmons Copyright by Maria Eugenia Gonzalez-Posse 2012 Abstract The doll, as we conceive of it today, is the product of a Victorian cultural phenomenon. It was not until the middle of the nineteenth century that a dedicated doll industry was developed and that dolls began to find their way into children‘s literature, the rhetoric of femininity, periodical publications and canonical texts. Surprisingly, the Victorian fascination with the doll has largely gone unexamined and critics and readers have tended to dismiss dolls as mere agents of female acculturation. Guided by the recent material turn in Victorian studies and drawing extensively from texts only recently made available through digitization projects and periodical databases, my dissertation seeks to provide a richer account of the way this most fraught and symbolic of objects figured in the lives and imaginations of the Victorians. By studying the treatment of dolls in canonical literature alongside hitherto neglected texts and genres and framing these readings in their larger cultural contexts, the doll emerges not as a symbol of female passivity but as an object celebrated for its remarkable imaginative potential. The doll, I argue, is therefore best understood as a descendant of Galatea – as a woman turned object, but also as an object that Victorians constantly and variously brought to life through the imagination. -

Volume VI, Spring/Summer 2012, No.1&2

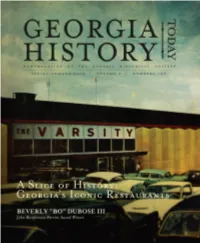

PERSPECTIVES Editor Brian Williams ON THE COVER Design and Layout The Varsity in the 1960s Home Improvements Modish by W. Todd Groce, Ph.D. Contributors Jim Burran, Ph.D. Most folks are aware that the Georgia Historical Society is home to the first and oldest William Vollono collection of Georgia history in the nation. But few realize how far we’ve had to come in a Photography Spring/ Summer 2012 | Volume 6, Numbers 1 & 2 short time to open that collection up to the world. Gordon Jones, Brandy Mai, Charles Snyder, Brian Williams The Society’s 203-year-old collection traces its roots to 1809, when the Savannah Library Board of Curators Society began assembling an archive of manuscripts, books, and portraits. Forty years Chairman later, the SLS merged with GHS and its collection was added to ours. Bill Jones III, Sea Island Vice Chairman Since then, the GHS collection has grown into the largest dedicated exclusively to Georgia Robert L. Brown, Jr., Atlanta history—over 4 million documents, books, maps and artifacts, enough to create a museum Treasurer of Georgia history. It represents every part of the state and covers every period of time, John C. Helmken II, Savannah from James Oglethorpe and Helen Dortch Longstreet to Leah Ward Sears and Vince Secretary Dooley. An original draft of the United States Constitution, the only collection of Robert Shell H. Knox, Augusta E. Lee correspondence in the state, and the papers of a U.S. Supreme Court Justice, 18 President and Chief Executive Officer Georgia governors, and the only two Georgians to serve as U.S. -

Hang Out! 5 Workbook Transcripts

Hang Out! 5 Workbook Transcripts Unit 1 Mexico has corn tortilla pizza. To make it, first, <Track 002> spread beans on the pizza. Next, add cheese and C. Listen and check. vegetables. Then bake it in the oven. After that, you 1.The boy is whisking. can add tomatoes and olives. You can also put sauce 2.The girl is heating the oven. on it. 3.The boy is stirring. 4.The girl is mixing. Turkey has a pizza called lahmacun [la-mah-jun]. It has beef but not cheese. To make it, first, chop some <Track 003> parsley and other vegetables. Next, fry the beef. A. Listen and write. Then put them on the pizza and bake it in the oven. Girl: Andrew, let’s make a cake! In New York City, there are dessert pizzas. You make BOY: OK, Iris. I don’t know what to do, though. them with chocolate and sugar. You can add sweet Girl: I’ll show you. First, heat the oven. It needs to sauce and fruit to them. These pizzas aren’t very get really hot. healthy, but they taste good. BOY: What’s the next step? Girl: Next, whisk the eggs in a bowl. Unit 2 BOY: It looks good so far. Then what do we do? <Track 007> Girl: Then, add milk and sugar. After that, mix A. Listen and write. everything together with the flour. BOY: Grace, check out my new computer. It is made BOY: Finally, stir the batter with a spoon, right? in China. It is extremely useful. -

Quote of the Nine Weeks

Le Philosophe pearlriverhigh.stpsb.org Pearl River High School October 20, 2016 Volume 2 Issue 1 Whittie Wins Mr. Rebel PR Sophomore Jeremy Whittie receives multiple honors for Images courtesy of Google.com his stellar performance across all events at Pearl River High School’s second annual Mr. Rebel Pageant. Photo By Alan Jones The Pearl River High (PRHS) Mr. To participate in the pageant all The first part of the pageant was why their particular costume Rebel pageant did not originate in applicants must have an unweighted the introduction of each contestant in represented their club. The most Pearl River. Mrs. Laurie Jo Koster, the GPA of 2.0 and are active members in his original costume. They were asked memorable costumes were senior PRHS talented theatre teacher, came their sponsoring organization. exactly what they were dressed as, and Hunter Young’s toga, representing the up with the idea for this pageant in her democracy of Student Council, sorority days. As a freshman member sophomore Luke Rullman’s mime of Alpha Gamma Delta at Pittsburg costume, literally representing the University, she needed to come up translation of “Facta non with an idea to raise money for Verba” (deeds, not words), and juvenile diabetes. Her idea was a hit: sophomore Zachary Mayfield’s farmer forty-two organizations on campus costume, representing Future Farmers took part in the pageant, and they of America (FFA). raised $9,000 in all. For the talent category, junior Koster decided to bring this Gabe Danton’s pantomime “tape face” competition back to the stage at PRHS was certainly unforgettable; his act had just last year to promote the boys and the entire audience laughing. -

GENTLE GIANTS Hercules & Love Affair Expand Their Sound on Thoughtful New Album, ‘Omnion’

MUSIC RATE THAT CHOON Reviewing the month’s biggest tracks p.110 LENGTHY BUSINESS August’s albums analysed p.144 COMPILATION COMPETITION Mixes and collections broken down p.148 GENTLE GIANTS Hercules & Love Affair expand their sound on thoughtful new album, ‘Omnion’... p.144 djmag.com 117 SOONEY HOT CREATIONS DJ DEEP DEEPLY ROOTED HOUSE HOUSE BEN ARNOLD as the title might suggest, has echoes of raves gone by, dropping QUICKIES into a clattering breakbeat in the mid-section. Meanwhile, 'Drug Will Saul & Tee Mango present Dilling' finds label-chum DJ Tennis Primitive Trust [email protected] on the vocals, tripping out over Power On EP deep, throbbing tech, while the Aus Music legendary DJ Bone takes things 7. 5 on a friskier, more angular tip with Highly recommended, this new collab between his remix. Millionhands boss Tee Mango and Aus main man Will Saul. 'Power On' is all lovely pads and bleeps. Sebastopol Nothing not to like there. Gahalowood Kompakt Matuss 8.0 Absence Seizure 008 Frenchman and Hypercolour Absence Seizure alumnus Sebastien Bouchet 7. 5 returns to Köln's most stately Beautifully crafted, cerebral house music from New Kompakt under his new alias, York via Ukraine's Julia Matuss. With its warbling Sebastopol, with three tracks Rhodes, 'Fairy Dust' is a delight, while 'Faramant' of largesse. ‘Gahalowood’ is delves deep. something of a monstrous proposition, with shoe-gaze Inland Knights vocals coupled with epic, big-room Subway reverberations. On the flip, there's Drop Music the wonderfully tripped-out ‘Flash 8.0 MONEY Pool’, a wonky, off-kilter mash-up Two tracks of irresistible funk from the legendary Letherette SHOT! of pulsing synths and cowbells. -

07-08 Issue 2 FINAL 2.Indd

“A beacon of truth.” Issue 2, Volume 35 HuntingtonDispatch High School Oakwood and McKay Roads Huntington, NY 11743 Internet 08 CLOSED CAMPUS FOR 2010 Restrictions by JENN SZILAGY APRIL Recent discussion of Hinder Research PHOTO: MIKE DIVUOLO whether or not senior privi- by HENRY BAUGHMAN news in short leges will be taken away at National History Day Huntington High School has Providing America’s children with a In March, several HHS caused strong controversy safe place to learn is the number one duty students distinguished them- amongst its students and staff, of all schools. Computers have opened up selves in the National History particularly with the Class of an entirely new pathway for danger. In the Day competition. Five students 2010. 21st Century, a time when upwards of 40 who went to the Long Island Because of fatal accidents percent of Internet traffi c appeals to the Regional History Day contest that continue to occur on Long prurient interest and a great deal more is of will be moving on to the state Island by teen drivers (the questionable content, censoring the Inter- competition. most recent of which killed net takes a Herculean effort. GRAPHIC: MIKE MCCOURT Caitlin Etri, Kirsten Frei- one and injured two students Huntington High School uses an au- man, Rebecca Silverman, Col- on April 10th in West Hemp- tomated system to ensure that any Internet leen Teubner, Jeffrey Bishop, stead), administrators feel it browsing bears on education. Unfortu- Aliyah Cohen and Mia Rienzo may be necessary to close the Doyle’s state- nately for the administration, the Internet will take their projects the the campus completely. -

At Home in the -.N O R T H W E S T » M O N T a N A

m m H UNI at Home in THE -.N o r t h w e s t » M o n t a n a Extraordinary Recreation, Conservation and Developm ent Pr o per ties Available I La r g e p a r c e l s a n d a c r e a g % La k e f r o n t , r iv e r f r o n t /^n d v m w p r o p e r t y . p s ,1 P l u m C r e e k . ExctoilV % , PEACEFUL, NATURAL, INSPIRING SETTINGS. $240*000 TO $0,500,000. Lenders In Environments} Forestry. jgp$ more information' contact: Bill DeReu, Plum Creek at (406) 892-6264. Visit pur Web Site at http://land.plumcreek.com/ "The Foundation of Every State is its Education of its Youth"- Diogenes FOR OVER 100 YEARS the Maureen and Mike Mansfield Library at The University of Montana has been a foundation of information for Montana's youth. That foundation now includes print, CD-Rom, video and electronic formats. Expanding traditional sources to keep pace with educational and research needs is our challenge. You can help build a foundation of quality information with a gift to the Mansfield Library. A library endowment extends your gift's benefits in perpetuity. A gift of $10,000 or more endows a fund in your name or the name of someone you wish to honor. Your generosity is recognized with gift plates and in the GrizNet electronic catalog. Take this opportunity to endow knowledge for the future. -

2017 First Time Gold & Platinum Recipients

2017 FIRST TIME Gold & Platinum Recipients Breaking artists is what music labels, big and small, do every day. Tastemakers. Innovators. Partners. Investors. Fans. That’s who labels are. Each story behind these 2017 certified Gold, Platinum or multi-Platinum artists is different, but one consistent thread is that a record label helped achieve that level of prestigious success. We are #labelsatwork. 2017 FIRST TIME GOLD & PLATINUM RECIPIENTS 64 ARTISTS EARNED FIRST TIME GOLD OR PLATINUM AWARDS IN 2017 ALBUM AWARDS MULTI-PLATINUM SONG AWARDS Aminé Young M.A Khalid Caroline OOOUUU 21 Savage Cardi B 21 Savage Brett Young Bodak Yellow Bank Account James Arthur A Boogie Wit Da Hoodie Harry Styles Say You Won’t Let Go Drowning (Feat. Kodak Black) Luke Combs Julia Michaels Brett Young Russ Issues In Case You Didn’t Know Khalid Kyle Camila Cabello SZA Location iSpy (Feat. Lil Yachty) Havana (Feat. Young Thug) ARTIST GENRE RECORD LABEL CERTIFIED SONGS CERTIFIED ALBUMS R&B/ Slaughter Gang, LLC/ Bank Account 2X Multi-Platinum 21 Savage Issa Album Gold Hip Hop Epic Records Red Opps Platinum R&B/ 6lack LVRN/Interscope Prblms Platinum Hip Hop Drowning (Feat. Kodak Black) 2X Platinum A Boogie Wit R&B/ Highbridge/ My Shit Platinum Da Hoodie Hip Hop Atlantic Records Jungle Gold Timeless Gold R&B/ Aminé Republic Records Caroline 3X Multi-Platinum Hip Hop Anne-Marie Pop Atlantic Records Alarm Gold Ayo & Teo Pop Columbia Records Rolex Platinum Bebe Rexha Pop Warner Bros Records I Got You Gold Bishop Briggs Alternative Island Records River Gold In Case You Didn’t Know 2X Multi-Platinum Brett Young Country Big Machine Records Sleep Without You Platinum Brett Young Gold Like I Loved You Gold Calum Scott Pop Capitol Records Dancing On My Own Gold Havana (Feat. -

Vegetables, Soups, Sauces, Gravies and Beverages. INSTITUTION Marine Corps Inst., Washington, DC

DOCUMENT RESUME ED 258 042 CE 041 781 TITLE Vegetables, Soups, Sauces, Gravies and Beverages. INSTITUTION Marine Corps Inst., Washington, DC. REPORT NO MCI-33.19 PUB DATE (84] NOTE 56p. PUB TYPE Guides - Classroom Use - Materia33 (for Learner) (051) EDRS PRICE MP01/PC03 Plus Postage. DESCRIPTORS Adult Education; Behavioral Objectives; Continuing Education; *Cooking Instruction; *Cooks; *Correspondence Study; Course Descriptions; *Food; *Foods Instruction; Individualized Instruction; Learning Activities; Military Training; *Occupational Nome Economics; Study Guides ABSTRACT Developed as part of the Marine Corps Institute (MCI) correspondence training program, this course on vegetables, soups, sauces, gravies, and beverages is designed to increase Marine Corps cooks' effectiveness as food handlers, using the proper techniques in the preparation of these items. Introductory materials include specific information for MCI students and a study guide (guidelines to complete the course). The nine-hour course consists of three chapters or lessons. Each unit contains a text and a lesson sheet that details the study assignment and sets forth the lesson objective. A written assignment is also provided. (YLB) *********************************************************************** * Reproductions supplied by EDRS are the best that cau be made * * from the original document. * *********************************************************************** UN:TED STATES MARINECORPS MARINE CORPS INSTITUTE.MARINE SARRACKS BOX17195 ARLINGTON. VA. 22222 33.19 1. ORIGIN MCI course 33.19, Vegetables, Soups, Sauces, Gravies, and Beverages, has been prepared by the Marine Corps Institute. 2. APPLICABILITY This course is for instructional purposes only. M. D. HOLLADAY Lieutenant Colonel, U. S. Marine Corps Deputy Director 3 MCI-R241 INFORMATION FOR MCI STUDENTS Welcome to the Marine Corps Institute training program. 
Your interest in self-improvement and increased professional competence is noteworthy.